Steps to Install Hadoop + HBase + Hive on CentOS 7


I. IP, DNS, and Hostnames

II. Hadoop

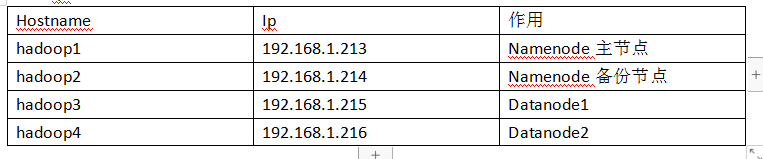

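#### 1. IP Allocation

#### 2. Install JDK 8 (all four nodes)

List the available Java packages, then install the OpenJDK 8 development package:

```shell
yum list "java*"
yum install -y java-1.8.0-openjdk-devel.x86_64
```

By default, the JRE and JDK are installed under /usr/lib/jvm.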

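#### 3. Configure the JDK environment variables (all four nodes)

Edit /etc/profile:

```shell
vim /etc/profile
```

and append:

```shell
export JAVA_HOME=/usr/lib/jvm/java
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/jre/lib/rt.jar
export PATH=$PATH:$JAVA_HOME/bin
```

Apply the configuration:

```shell
. /etc/profile
```

Check the variable:

```shell
echo $JAVA_HOME
```

Output:

```
/usr/lib/jvm/jre-1.8.0-openjdk-1.8.0.161-0.b14.el7_4.x86_64
```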
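Since /usr/lib/jvm/java is normally a symlink managed by the alternatives system, it can help to confirm which JDK it actually resolves to. A minimal sketch, assuming GNU `readlink -f` (as shipped with CentOS 7); the function name `java_home_from_javac` is hypothetical:

```shell
# Hypothetical helper: print the JDK home directory that a javac binary
# (or any symlink to it) belongs to. Requires GNU readlink (CentOS 7 has it).
java_home_from_javac() {
    local javac_path real
    javac_path=${1:-$(command -v javac)}
    if [ -z "$javac_path" ]; then
        echo "javac not found on PATH" >&2
        return 1
    fi
    # Follow the whole symlink chain, e.g. /usr/bin/javac -> /etc/alternatives/javac -> ...
    real=$(readlink -f "$javac_path") || return 1
    # Strip the trailing /bin/javac to get the JDK directory
    dirname "$(dirname "$real")"
}
```

Called with no argument, it resolves the `javac` on PATH; `readlink -f /usr/lib/jvm/java` performs the same resolution on the JAVA_HOME link directly.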

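#### 4. Install Hadoop

Create the directory (all four nodes):

```shell
mkdir -p /lp/hadoop
```

Copy the Hadoop tarball to /tmp. From this point on, run the steps on the master node only.

Extract it and move it into place:

```shell
tar -xzvf /tmp/hadoop-3.1.2.tar.gz
mv hadoop-3.1.2/ /lp/hadoop/
```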

Add the following to etc/hadoop/hadoop-env.sh (paths in this section are relative to /lp/hadoop/hadoop-3.1.2):

```shell
export JAVA_HOME=/usr/lib/jvm/java/
export HDFS_NAMENODE_USER="root"
export HDFS_DATANODE_USER="root"
export HDFS_SECONDARYNAMENODE_USER="root"
export YARN_RESOURCEMANAGER_USER="root"
export YARN_NODEMANAGER_USER="root"
```
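If this step is scripted or re-run, appending blindly duplicates the lines. A minimal sketch that appends each setting only when an identical line is not already present; the helper names are hypothetical, and the default path assumes the /lp/hadoop/hadoop-3.1.2 layout used here:

```shell
# Hypothetical helper: append LINE to FILE unless an identical line already exists.
append_if_missing() {
    local file=$1 line=$2
    # -x: match the whole line, -F: treat the text literally (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Append the hadoop-env.sh settings from this step (safe to re-run).
configure_hadoop_env() {
    local env_file=${1:-/lp/hadoop/hadoop-3.1.2/etc/hadoop/hadoop-env.sh} role
    append_if_missing "$env_file" 'export JAVA_HOME=/usr/lib/jvm/java/'
    for role in HDFS_NAMENODE HDFS_DATANODE HDFS_SECONDARYNAMENODE \
                YARN_RESOURCEMANAGER YARN_NODEMANAGER; do
        append_if_missing "$env_file" "export ${role}_USER=\"root\""
    done
}
```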

Edit etc/hadoop/core-site.xml so that it reads:

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://192.168.1.213:9001</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
```
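Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` prints the value Hadoop actually resolved. Before that point, a quick text-level check of a *-site.xml file can be sketched with awk; `get_conf_value` is a hypothetical helper that assumes each `<name>` and `<value>` sits on its own line (or both on one line), as in the files here:

```shell
# Hypothetical helper: print the <value> of property KEY from a Hadoop-style
# *-site.xml FILE. Assumes <name> and <value> are on their own lines or on the
# same line; it does not skip XML comments or handle multi-line values.
get_conf_value() {
    awk -v key="$2" '
        /<name>/ {
            name = $0
            sub(/.*<name>[ \t]*/, "", name)      # drop everything up to <name>
            sub(/[ \t]*<\/name>.*/, "", name)    # drop </name> and the rest
        }
        /<value>/ && name == key {
            value = $0
            sub(/.*<value>/, "", value)
            sub(/<\/value>.*/, "", value)
            print value
            exit
        }
    ' "$1"
}
```

For example, `get_conf_value /lp/hadoop/hadoop-3.1.2/etc/hadoop/core-site.xml fs.defaultFS` should print the NameNode URI configured above.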

Edit etc/hadoop/hdfs-site.xml so that it reads:

```xml
<configuration>
  <!-- Configurations for NameNode: -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/lp/hadoop/hdfs/name/</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>268435456</value>
  </property>
  <property>
    <name>dfs.namenode.handler.count</name>
    <value>100</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>192.168.1.213:8305</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>192.168.1.214:8310</value>
  </property>
  <!-- Configurations for DataNode: -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/lp/hadoop/hdfs/data/</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
```
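dfs.blocksize is given in bytes: 268435456 = 256 × 1024 × 1024, i.e. 256 MiB. A file occupies ceil(size / blocksize) blocks, and with dfs.replication set to 1 each block is stored on only one DataNode. A small sketch of that arithmetic (the function name is hypothetical):

```shell
# Hypothetical helper: number of HDFS blocks a file of SIZE bytes occupies,
# i.e. ceil(SIZE / BLOCKSIZE). BLOCKSIZE defaults to dfs.blocksize above.
hdfs_block_count() {
    local size=$1 blocksize=${2:-268435456}
    echo $(( (size + blocksize - 1) / blocksize ))
}

# 256 MiB expressed in bytes, matching the dfs.blocksize value
echo $(( 256 * 1024 * 1024 ))   # prints 268435456
```

For instance, a 1 GiB file (1073741824 bytes) gives `hdfs_block_count 1073741824` = 4 blocks.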

Edit etc/hadoop/yarn-site.xml so that it reads:

```xml
<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>192.168.1.213</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>192.168.1.213:8320</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>864000</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-check-interval-seconds</name>
    <value>86400</value>
  </property>
  <property>
    <name>yarn.nodemanager.remote-app-log-dir</name>
    <value>/lp/hadoop/YarnApp/Logs</value>
  </property>
  <property>
    <!-- Must point at the JobHistory Server web UI (mapreduce.jobhistory.webapp.address) -->
    <name>yarn.log.server.url</name>
    <value>http://192.168.1.213:8331/jobhistory/logs/</value>
  </property>
  <property>
    <name>yarn.nodemanager.local-dirs</name>
    <value>/lp/hadoop/YarnApp/nodemanager</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>5000</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>1024</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>4.1</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
</configuration>
```
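The two scheduler limits interact. Assuming the default CapacityScheduler, a container memory request is rounded up to a multiple of yarn.scheduler.minimum-allocation-mb and capped at yarn.scheduler.maximum-allocation-mb, while a request larger than the maximum is rejected. A sketch of that arithmetic with this file's values (1024 and 5000 MB); it is a model for reasoning about container sizes, not YARN's own code, and the function name is hypothetical:

```shell
# Model of how a memory request (MB) is normalized by the scheduler,
# assuming round-up-to-minimum-multiple and cap-at-maximum behavior.
normalize_container_mb() {
    local req=$1 min=${2:-1024} max=${3:-5000} rounded
    if [ "$req" -gt "$max" ]; then
        echo "request ${req} MB exceeds maximum-allocation-mb ${max}" >&2
        return 1
    fi
    # Requests below the minimum are raised to the minimum
    if [ "$req" -lt "$min" ]; then
        req=$min
    fi
    # Round up to the next multiple of the minimum allocation
    rounded=$(( (req + min - 1) / min * min ))
    # Never hand out more than the configured maximum
    if [ "$rounded" -gt "$max" ]; then
        rounded=$max
    fi
    echo "$rounded"
}
```

With these settings a 1500 MB request becomes 2048 MB, and a 4500 MB request rounds to 5120 MB before being capped at 5000 MB.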

Edit etc/hadoop/mapred-site.xml so that it reads:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.staging-dir</name>
    <value>/lp/hadoop/YarnApp/tmp/hadoop-yarn/staging</value>
  </property>
  <!-- MapReduce JobHistory Server address -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>192.168.1.213:8330</value>
  </property>
  <!-- MapReduce JobHistory Server web UI address -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>192.168.1.213:8331</value>
  </property>
  <!-- Where the MR JobHistory Server keeps the logs it manages -->
  <property>
    <name>mapreduce.jobhistory.done-dir</name>
    <value>${yarn.app.mapreduce.am.staging-dir}/history/done</value>
  </property>
  <!-- Where logs produced by MapReduce jobs are stored -->
  <property>
    <name>mapreduce.jobhistory.intermediate-done-dir</name>
    <value>${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.joblist.cache.size</name>
    <value>1000</value>
  </property>
  <property>
    <name>mapreduce.tasktracker.map.tasks.maximum</name>
    <value>8</value>
  </property>
  <property>
    <name>mapreduce.tasktracker.reduce.tasks.maximum</name>
    <value>8</value>
  </property>
  <property>
    <name>mapreduce.jobtracker.maxtasks.perjob</name>
    <value>5</value>
  </property>
</configuration>
```
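The JobHistory addresses configured above (ports 8330 and 8331) only respond once the JobHistory Server is running, and start-dfs.sh and start-yarn.sh do not start it. In Hadoop 3 it is started with `mapred --daemon start historyserver`. A small wrapper, assuming the /lp/hadoop/hadoop-3.1.2 install path (the function name is hypothetical):

```shell
# Hypothetical wrapper: start the MapReduce JobHistory Server on this node.
# Uses the first argument, else $HADOOP_HOME, else the install path from this guide.
start_history_server() {
    local hadoop_home=${1:-${HADOOP_HOME:-/lp/hadoop/hadoop-3.1.2}}
    "$hadoop_home/bin/mapred" --daemon start historyserver
}
```

Run it on hadoop1 (192.168.1.213) after the Hadoop services are up; `jps` there should then also list JobHistoryServer.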

Edit etc/hadoop/workers:

```shell
vim etc/hadoop/workers
```

so that it lists the two worker nodes:

```
hadoop3
hadoop4
```
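The same file can be written non-interactively, which is convenient when scripting the setup. A minimal sketch; `write_host_list` is a hypothetical helper:

```shell
# Hypothetical helper: overwrite FILE with one host name per line,
# so re-running the step never duplicates entries.
write_host_list() {
    local file=$1
    shift
    printf '%s\n' "$@" > "$file"
}
```

For example, `write_host_list /lp/hadoop/hadoop-3.1.2/etc/hadoop/workers hadoop3 hadoop4`. The same call shape also fits HBase's conf/regionservers file.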

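Compress the configured Hadoop folder:

```shell
tar -czvf hadoop.tar.gz /lp/hadoop/hadoop-3.1.2/
```

Copy it to the root directory of each of the other nodes, replacing `<node>` with 192.168.1.214, 192.168.1.215 and 192.168.1.216 in turn:

```shell
scp hadoop.tar.gz root@<node>:/
```

On each of those nodes, extract it from / and delete the archive. tar stores the path without its leading /, so extracting from / restores /lp/hadoop/hadoop-3.1.2:

```shell
cd /
tar -xzvf hadoop.tar.gz
rm -rf hadoop.tar.gz
```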
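Copying and unpacking on several nodes can be looped. A sketch, assuming root SSH access to each node (host names or IPs); without passwordless SSH, scp and ssh will prompt for passwords. The helper names are hypothetical, and `DRY_RUN=1` prints the commands instead of running them:

```shell
# Print the command instead of running it when DRY_RUN=1.
run_or_echo() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: copy TARBALL to DEST on each HOST as root, unpack it
# there and delete the archive. Assumes the archive paths are relative to DEST.
distribute_tarball() {
    local tarball=$1 dest=$2 name host
    shift 2
    name=$(basename "$tarball")
    for host in "$@"; do
        run_or_echo scp "$tarball" "root@$host:$dest"
        run_or_echo ssh "root@$host" "cd '$dest' && tar -xzf '$name' && rm -f '$name'"
    done
}
```

For this step: `distribute_tarball hadoop.tar.gz / 192.168.1.214 192.168.1.215 192.168.1.216`. An archive created from inside /lp/hadoop would use `/lp/hadoop` as DEST instead.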

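#### 5. Configure the Hadoop environment variables (all four nodes)

Create /etc/profile.d/hadoop-3.1.2.sh:

```shell
vim /etc/profile.d/hadoop-3.1.2.sh
```

with the following content:

```shell
export HADOOP_HOME="/lp/hadoop/hadoop-3.1.2"
export PATH="$HADOOP_HOME/bin:$PATH"
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
```

Then reload the profile:

```shell
source /etc/profile
```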
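Writing the profile script with a quoted heredoc keeps `$HADOOP_HOME` and `$PATH` unexpanded until login time. A sketch with the target path as a parameter, so it can be tried on a scratch file first (the function name is hypothetical):

```shell
# Hypothetical helper: write the Hadoop profile script. The quoted 'EOF'
# keeps $HADOOP_HOME and $PATH literal so they expand at login time.
write_hadoop_profile() {
    local target=${1:-/etc/profile.d/hadoop-3.1.2.sh}
    cat > "$target" <<'EOF'
export HADOOP_HOME="/lp/hadoop/hadoop-3.1.2"
export PATH="$HADOOP_HOME/bin:$PATH"
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
EOF
}
```

Afterwards, `hadoop version` in a new login shell should report Hadoop 3.1.2.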

Configure /etc/hosts (all four nodes):

```shell
vim /etc/hosts
```

Add:

```
192.168.1.213 hadoop1
192.168.1.214 hadoop2
192.168.1.215 hadoop3
192.168.1.216 hadoop4
```
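On four nodes it is easy to add the entries twice. A minimal sketch that appends each entry only if its host name is not already listed; `add_cluster_hosts` is a hypothetical helper and takes the hosts file as an optional argument:

```shell
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any host name that is already listed (safe to re-run).
add_cluster_hosts() {
    local hosts_file=${1:-/etc/hosts} ip name
    while read -r ip name; do
        # -w: whole-word match, so an existing hadoop10 entry is not mistaken for hadoop1
        if ! grep -qw -- "$name" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<'EOF'
192.168.1.213 hadoop1
192.168.1.214 hadoop2
192.168.1.215 hadoop3
192.168.1.216 hadoop4
EOF
}
```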

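Enable passwordless SSH login to the node itself (all four nodes):

```shell
ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 0600 ~/.ssh/authorized_keys
```

Enable passwordless SSH from the master to the other nodes (run once, on the NameNode host hadoop1 only):

```shell
ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop2
ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop3
ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop4
```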
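A quick way to confirm the self-login step on a node is to check that the public key is in authorized_keys and that the file has the 600 permissions set above (sshd typically ignores a keys file that others can write to). A sketch; `check_self_ssh_setup` is a hypothetical helper:

```shell
# Hypothetical check: is this node's public key authorized for itself,
# and does authorized_keys have the 600 permissions set above?
check_self_ssh_setup() {
    local ssh_dir=${1:-$HOME/.ssh} perms
    if [ ! -f "$ssh_dir/id_rsa.pub" ] || [ ! -f "$ssh_dir/authorized_keys" ]; then
        echo "missing id_rsa.pub or authorized_keys in $ssh_dir"
        return 1
    fi
    # The public key must appear as a complete line in authorized_keys
    if ! grep -qxF -- "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
        echo "public key not found in authorized_keys"
        return 1
    fi
    # First 10 characters of ls -l are the permission string, e.g. -rw-------
    perms=$(ls -l "$ssh_dir/authorized_keys" | cut -c1-10)
    if [ "$perms" != "-rw-------" ]; then
        echo "authorized_keys has permissions $perms, expected -rw-------"
        return 1
    fi
    echo ok
}
```

For the master-to-worker step, `ssh -o BatchMode=yes hadoop2 hostname` fails instead of prompting for a password when key-based login to hadoop2 is not working.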

Format HDFS (first deployment only; proceed with caution and run it on the master only):

```shell
/lp/hadoop/hadoop-3.1.2/bin/hdfs namenode -format myClusterName
```
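Formatting creates a fresh namespace, so running it again on a live cluster discards the existing HDFS metadata. `hdfs` normally asks for confirmation before re-formatting, but a script can avoid reaching that prompt at all. A defensive sketch that refuses to format when the dfs.namenode.name.dir configured above already contains current/VERSION (present in a formatted NameNode directory); the helper names are hypothetical:

```shell
# Hypothetical guard: succeed only if DIR already holds NameNode metadata
# (a formatted dfs.namenode.name.dir contains current/VERSION).
namenode_already_formatted() {
    [ -f "${1:-/lp/hadoop/hdfs/name}/current/VERSION" ]
}

# Format HDFS only when the name directory holds no metadata yet.
safe_format_namenode() {
    local name_dir=${1:-/lp/hadoop/hdfs/name} cluster=${2:-myClusterName}
    if namenode_already_formatted "$name_dir"; then
        echo "refusing to format: $name_dir already contains NameNode metadata" >&2
        return 1
    fi
    "${HADOOP_HOME:-/lp/hadoop/hadoop-3.1.2}/bin/hdfs" namenode -format "$cluster"
}
```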

Start the Hadoop services (on the master only):

```shell
/lp/hadoop/hadoop-3.1.2/sbin/start-dfs.sh
/lp/hadoop/hadoop-3.1.2/sbin/start-yarn.sh
```
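With this configuration the daemons should end up as follows: NameNode and ResourceManager on hadoop1, SecondaryNameNode on hadoop2, and DataNode plus NodeManager on hadoop3 and hadoop4. `jps` (part of the JDK installed earlier) lists the running Java processes; a sketch that reports which expected daemons are missing from its output (`missing_daemons` is a hypothetical helper):

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
    local running d
    # jps prints "<pid> <MainClassName>"; keep only the class names
    running=$(awk '{print $2}')
    for d in "$@"; do
        printf '%s\n' "$running" | grep -qxF -- "$d" || echo "$d"
    done
}
```

For example, `jps | missing_daemons NameNode ResourceManager` on hadoop1, or `ssh hadoop3 jps | missing_daemons DataNode NodeManager` from the master if jps is on the remote PATH; no output means every expected daemon is running.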

Web UIs:

HDFS NameNode: http://192.168.1.213:8305

HDFS SecondaryNameNode: http://192.168.1.214:8310

YARN ResourceManager: http://192.168.1.213:8320

III. HBase

Configure each node on top of the Hadoop setup above, using the ZooKeeper bundled with HBase.

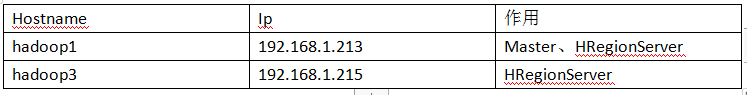

Node allocation (see the figure above).

Extract the HBase tarball and move it into place:

```shell
tar -xzvf /tmp/hbase-2.1.2-bin.tar.gz
mv hbase-2.1.2/ /lp/hadoop/
```

Edit /lp/hadoop/hbase-2.1.2/conf/hbase-site.xml and add the following properties inside its `<configuration>` element:

```xml
<property>
  <name>hbase.cluster.distributed</name>
  <value>true</value>
</property>
<property>
  <name>hbase.rootdir</name>
  <value>hdfs://192.168.1.213:9001/hbase</value>
</property>
<property>
  <name>hbase.zookeeper.quorum</name>
  <value>hadoop1,hadoop3</value>
</property>
<property>
  <name>hbase.master</name>
  <value>psyDebian:60000</value>
</property>
<property>
  <name>hbase.master.maxclockskew</name>
  <value>180000</value>
</property>
<property>
  <name>hbase.wal.provider</name>
  <value>filesystem</value>
</property>
<property>
  <name>hbase.unsafe.stream.capability.enforce</name>
  <value>false</value>
</property>
```
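ZooKeeper only serves requests while a strict majority of its ensemble, floor(n/2) + 1 servers, is up. With the two-server quorum above (hadoop1, hadoop3), that majority is both servers, so losing either one makes ZooKeeper, which HBase depends on, unavailable; a three-server ensemble tolerates one failure. A small sketch of the arithmetic (the function name is hypothetical):

```shell
# Majority needed by a ZooKeeper ensemble of N servers, and how many
# servers can fail while that majority is still reachable.
zk_quorum_info() {
    local n=$1 majority
    majority=$(( n / 2 + 1 ))
    echo "majority=$majority tolerated_failures=$(( n - majority ))"
}

zk_quorum_info 2   # prints majority=2 tolerated_failures=0
zk_quorum_info 3   # prints majority=2 tolerated_failures=1
```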

Edit /lp/hadoop/hbase-2.1.2/conf/hbase-env.sh:

```shell
export JAVA_HOME=/usr/lib/jvm/java/
export HBASE_CLASSPATH=/lp/hadoop/hbase-2.1.2/conf
export HBASE_MANAGES_ZK=true
```

Edit /lp/hadoop/hbase-2.1.2/conf/regionservers:

```
hadoop1
hadoop3
```

Copy htrace-core-3.1.0-incubating.jar from /lp/hadoop/hbase-2.1.2/lib/client-facing-thirdparty to /lp/hadoop/hbase-2.1.2/lib:

```shell
cp /lp/hadoop/hbase-2.1.2/lib/client-facing-thirdparty/htrace-core-3.1.0-incubating.jar /lp/hadoop/hbase-2.1.2/lib/
```

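Compress the configured hbase-2.1.2 folder (from /lp/hadoop):

```shell
cd /lp/hadoop
tar -czvf hbase-2.1.2.tar.gz hbase-2.1.2/
```

Copy it to the hadoop3 node:

```shell
scp hbase-2.1.2.tar.gz root@hadoop3:/lp/hadoop
```

On hadoop3, extract it in /lp/hadoop and delete the archive:

```shell
cd /lp/hadoop
tar -xzvf hbase-2.1.2.tar.gz
rm -rf hbase-2.1.2.tar.gz
```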

Start HBase (on hadoop1, from /lp/hadoop/hbase-2.1.2):

```shell
./bin/start-hbase.sh
```

Open the HBase shell:

```shell
./bin/hbase shell
```
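The shell also reads commands from standard input, which makes a quick end-to-end check scriptable. A sketch that creates, writes to, scans and then drops a throwaway table; the table name `smoke_test` and column family `cf` are hypothetical, so pick a name that does not already exist:

```shell
# Hypothetical smoke test: pipe a few HBase shell commands through stdin.
hbase_smoke_test() {
    local hbase_home=${1:-/lp/hadoop/hbase-2.1.2}
    "$hbase_home/bin/hbase" shell <<'EOF'
status
create 'smoke_test', 'cf'
put 'smoke_test', 'row1', 'cf:greeting', 'hello'
scan 'smoke_test'
disable 'smoke_test'
drop 'smoke_test'
EOF
}
```

Run it on hadoop1 after start-hbase.sh; the scan output should include row1 with the value hello.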

Web UI:

HBase Master: http://192.168.1.213:16010
