A Strange Spark Cluster Problem: Only One Worker Shows Up

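Let me start with a question that leads straight to the root cause:

Is the hostname controlled solely by the /etc/hosts file?

Answer: no.

With that, here are my notes on today's problem...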

Environment:

- Ubuntu 16.04 LTS, three machines with hostnames hadoop-master, hadoop-s1 and hadoop-s2 (the master machine also runs as a slave)
- JDK 1.8
- Hadoop 2.7.3
- spark-2.1.1-bin-hadoop2.7, standalone cluster
- Scala 2.12.x

I ran `jps` on each of the three VMs, and every one of them showed a Worker process:

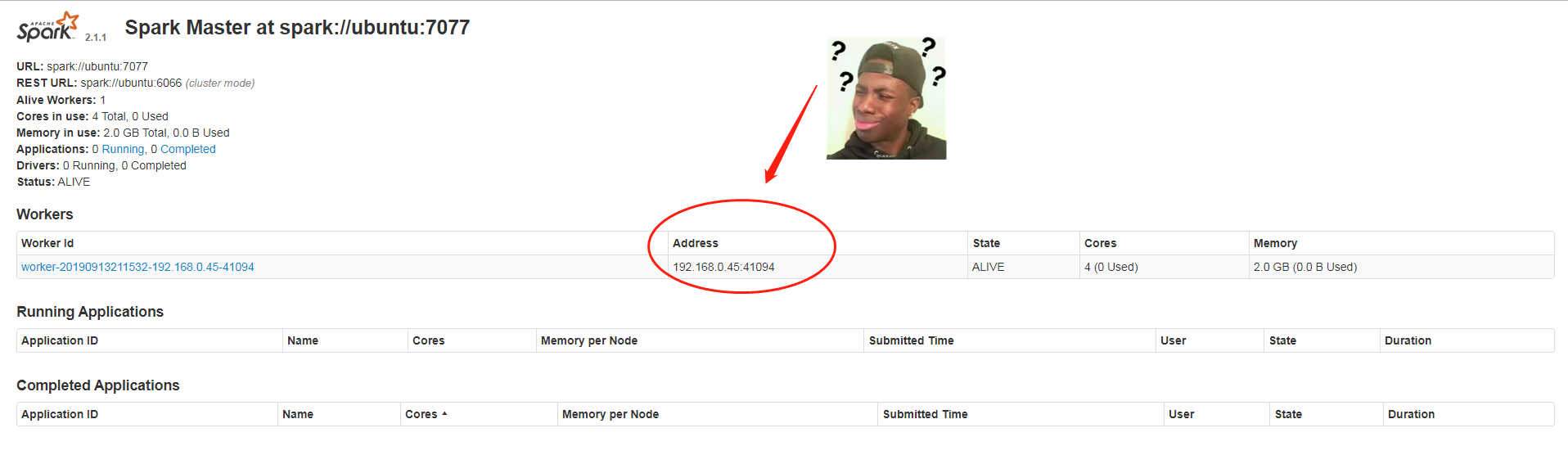

But when I opened the web UI at hadoop-master:8080, only one worker was listed (see figure).

Just one. What is going on?

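To find out, run `$SPARK_HOME/sbin/start-all.sh` on the master and then look at the startup logs. They are written to `$SPARK_HOME/logs` on each node, so the ones to check are the worker logs on slave1 and slave2 (hadoop-s1 and hadoop-s2).

The exception is long, but the first line tells you almost everything (the root cause at the bottom, `java.nio.channels.UnresolvedAddressException`, confirms that a host name could not be resolved):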

```
19/09/13 21:49:35 WARN worker.Worker: Failed to connect to master ubuntu:7077
org.apache.spark.SparkException: Exception thrown in awaitResult
    at org.apache.spark.rpc.RpcTimeout$$anonfun$1.applyOrElse(RpcTimeout.scala:77)
    at org.apache.spark.rpc.RpcTimeout$$anonfun$1.applyOrElse(RpcTimeout.scala:75)
    at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:36)
    at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:59)
    at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:59)
    at scala.PartialFunction$OrElse.apply(PartialFunction.scala:167)
    at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:83)
    at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:100)
    at org.apache.spark.rpc.RpcEnv.setupEndpointRef(RpcEnv.scala:108)
    at org.apache.spark.deploy.worker.Worker$$anonfun$org$apache$spark$deploy$worker$Worker$$tryRegisterAllMasters$1$$anon$1.run(Worker.scala:218)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.io.IOException: Failed to connect to ubuntu:7077
    at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:232)
    at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:182)
    at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:197)
    at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:194)
    at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:190)
    ... 4 more
Caused by: java.nio.channels.UnresolvedAddressException
    at sun.nio.ch.Net.checkAddress(Net.java:101)
    at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:622)
    at io.netty.channel.socket.nio.NioSocketChannel.doConnect(NioSocketChannel.java:242)
    at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.connect(AbstractNioChannel.java:205)
    at io.netty.channel.DefaultChannelPipeline$HeadContext.connect(DefaultChannelPipeline.java:1226)
    at io.netty.channel.AbstractChannelHandlerContext.invokeConnect(AbstractChannelHandlerContext.java:550)
    at io.netty.channel.AbstractChannelHandlerContext.connect(AbstractChannelHandlerContext.java:535)
    at io.netty.channel.ChannelOutboundHandlerAdapter.connect(ChannelOutboundHandlerAdapter.java:47)
    at io.netty.channel.AbstractChannelHandlerContext.invokeConnect(AbstractChannelHandlerContext.java:550)
    at io.netty.channel.AbstractChannelHandlerContext.connect(AbstractChannelHandlerContext.java:535)
    at io.netty.channel.ChannelDuplexHandler.connect(ChannelDuplexHandler.java:50)
    at io.netty.channel.AbstractChannelHandlerContext.invokeConnect(AbstractChannelHandlerContext.java:550)
    at io.netty.channel.AbstractChannelHandlerContext.connect(AbstractChannelHandlerContext.java:535)
    at io.netty.channel.AbstractChannelHandlerContext.connect(AbstractChannelHandlerContext.java:517)
    at io.netty.channel.DefaultChannelPipeline.connect(DefaultChannelPipeline.java:970)
    at io.netty.channel.AbstractChannel.connect(AbstractChannel.java:215)
    at io.netty.bootstrap.Bootstrap$2.run(Bootstrap.java:166)
    at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:408)
    at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:455)
    at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:140)
    at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:144)
    ... 1 more
```

The worker then keeps retrying. Attempts #1 to #3 fail with exactly the same stack trace, so it is omitted here:

```
19/09/13 21:49:43 INFO worker.Worker: Retrying connection to master (attempt # 1)
19/09/13 21:49:43 INFO worker.Worker: Connecting to master ubuntu:7077...
19/09/13 21:49:43 WARN worker.Worker: Failed to connect to master ubuntu:7077
19/09/13 21:49:52 INFO worker.Worker: Retrying connection to master (attempt # 2)
19/09/13 21:49:52 INFO worker.Worker: Connecting to master ubuntu:7077...
19/09/13 21:49:52 WARN worker.Worker: Failed to connect to master ubuntu:7077
19/09/13 21:50:01 INFO worker.Worker: Retrying connection to master (attempt # 3)
19/09/13 21:50:01 INFO worker.Worker: Connecting to master ubuntu:7077...
19/09/13 21:50:01 WARN worker.Worker: Failed to connect to master ubuntu:7077
```

Here is the line that matters:

`19/09/13 21:49:35 WARN worker.Worker: Failed to connect to master ubuntu:7077`
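Because the log repeats the same trace over and over, it can help to pull out only the address the worker was trying to reach. Below is a minimal sketch, not part of Spark. The function name `failed_master_targets` is hypothetical, and it assumes log lines shaped like the WARN line above. Worker logs usually sit under `$SPARK_HOME/logs` with `Worker` in the file name, but that naming is an assumption, so adjust the glob to your installation.

```bash
#!/usr/bin/env bash
# Sketch: print the distinct master addresses a Spark worker failed to reach.
# Reads the given log files, or stdin when no files are given.
# Assumes lines like: "... WARN worker.Worker: Failed to connect to master ubuntu:7077"
failed_master_targets() {
  # -o: print only the matching text; -h: omit file names when several logs are given.
  # The "Caused by: ... Failed to connect to ubuntu:7077" lines lack "master" and are skipped.
  grep -ohE 'Failed to connect to master [^ ]+' "$@" \
    | awk '{ print $NF }' \
    | sort -u
}
```

On hadoop-s1, running `failed_master_targets "$SPARK_HOME"/logs/*Worker*.out` against the log above would print a single line, `ubuntu:7077`, no matter how many retries were logged.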

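But wait!

I had already changed the master machine's hostname to hadoop-master. So why does the log still show the host name `ubuntu`, and why can't it be resolved?

I checked /etc/hosts again, and there was nothing wrong with it at all.

My hosts file looks like this: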

```
127.0.0.1 localhost
192.168.0.45 hadoop-master
192.168.0.46 hadoop-s1
192.168.0.42 hadoop-s2
```

I decided to read through the startup scripts under `$SPARK_HOME/sbin` to see what was going on behind the scenes.

First, I opened start-all.sh. Its code is as follows:

```bash
#!/usr/bin/env bash

# (A long comment block here has been removed.)

if [ -z "${SPARK_HOME}" ]; then
  export SPARK_HOME="$(cd "`dirname "$0"`"/..; pwd)"
fi

# Load the Spark configuration
. "${SPARK_HOME}/sbin/spark-config.sh"

# Start Master
"${SPARK_HOME}/sbin"/start-master.sh

# Start Workers
"${SPARK_HOME}/sbin"/start-slaves.sh
```

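As the code shows, start-all.sh simply runs start-master.sh and then start-slaves.sh (note: slaves, not slave).

As its name suggests, start-master.sh only starts the master node and has nothing whatsoever to do with the slaves.

So next, let's look at start-slaves.sh. Its code is as follows: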

```bash
#!/usr/bin/env bash

# Starts a slave instance on each machine specified in the conf/slaves file.

if [ -z "${SPARK_HOME}" ]; then
  export SPARK_HOME="$(cd "`dirname "$0"`"/..; pwd)"
fi

. "${SPARK_HOME}/sbin/spark-config.sh"
. "${SPARK_HOME}/bin/load-spark-env.sh"

# Find the port number for the master
if [ "$SPARK_MASTER_PORT" = "" ]; then
  SPARK_MASTER_PORT=7077
fi

if [ "$SPARK_MASTER_HOST" = "" ]; then
  case `uname` in
      (SunOS)
          SPARK_MASTER_HOST="`/usr/sbin/check-hostname | awk '{print $NF}'`"
          ;;
      (*)
          SPARK_MASTER_HOST="`hostname -f`"
          ;;
  esac
fi

# Launch the slaves
"${SPARK_HOME}/sbin/slaves.sh" cd "${SPARK_HOME}" \; "${SPARK_HOME}/sbin/start-slave.sh" "spark://$SPARK_MASTER_HOST:$SPARK_MASTER_PORT"
```

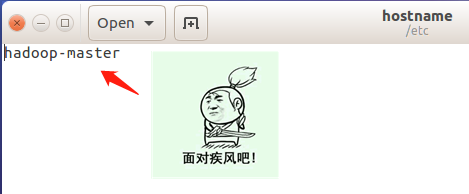
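The part of start-slaves.sh that decides where the workers connect is the `SPARK_MASTER_HOST` fallback. The sketch below re-implements only the non-SunOS branch as a standalone function so it can be tried in isolation. `master_url` is a hypothetical name, not a Spark script.

```bash
#!/usr/bin/env bash
# Sketch of the master-URL logic in start-slaves.sh (non-SunOS branch only):
# an explicit SPARK_MASTER_HOST wins; otherwise `hostname -f` decides the
# address that every worker is told to connect to.
master_url() {
  local host="${SPARK_MASTER_HOST:-}"
  local port="${SPARK_MASTER_PORT:-7077}"   # same default port as the script
  if [ -z "$host" ]; then
    host="$(hostname -f)"                   # on the master here, this yielded "ubuntu"
  fi
  printf 'spark://%s:%s\n' "$host" "$port"
}
```

start-slaves.sh sources `bin/load-spark-env.sh`, which reads `conf/spark-env.sh`, so setting `SPARK_MASTER_HOST=hadoop-master` there should also keep `hostname -f` out of the picture. That is an alternative suggested by the script, not the fix used in this post.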

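Unless `SPARK_MASTER_HOST` is set, the script falls back to `hostname -f`, and whatever that returns becomes the master URL handed to every worker. I ran `hostname` in a terminal, and it actually printed "ubuntu"!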

Aha, there's the problem!
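The workers are told to connect to `ubuntu:7077`. Assuming they use the same hosts file shown earlier, nothing maps `ubuntu` to an address, which is exactly what `UnresolvedAddressException` reports. A quick way to check whether a name is listed in a hosts file could look like the sketch below. `in_hosts_file` is a hypothetical helper that only reads the file. For a full lookup through the system resolver (hosts file plus DNS), `getent hosts <name>` is the more complete test.

```bash
#!/usr/bin/env bash
# Sketch: return 0 if NAME appears as a host name or alias in a hosts-format
# FILE (default /etc/hosts), 1 otherwise. Exact, case-sensitive match only.
in_hosts_file() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v n="$name" '
    { sub(/#.*/, "") }                                    # drop comments
    { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }  # field 1 is the IP
    END { exit !found }
  ' "$file"
}
```

For example, `in_hosts_file "$(hostname -f)" || echo "$(hostname -f) is not in /etc/hosts"` run on the master before the fix would have flagged `ubuntu`.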

So how do you change this hostname? I looked it up and found that the method differs between Ubuntu and the CentOS family.

On Ubuntu, you need to edit the /etc/hostname file.
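Editing /etc/hostname only changes the name stored on disk. The running system typically keeps the old name until a reboot (or until it is set explicitly), which is why a restart was needed here. The sketch below, built around a hypothetical helper `hostname_in_sync`, compares the two. The commented commands at the end show the edit itself, with `hostnamectl` as an alternative on systemd-based releases such as Ubuntu 16.04.

```bash
#!/usr/bin/env bash
# Sketch: compare the hostname persisted in FILE (default /etc/hostname)
# with the name the system is currently running with.
# RUNNING defaults to the output of `hostname`; pass it explicitly to test.
hostname_in_sync() {
  local file="${1:-/etc/hostname}"
  local running="${2:-$(hostname)}"
  local persisted
  persisted="$(tr -d '[:space:]' < "$file")"   # strip the trailing newline
  if [ "$persisted" = "$running" ]; then
    echo "ok: hostname is $running"
  else
    echo "mismatch: $file says '$persisted' but the running hostname is '$running'"
    return 1
  fi
}

# Making the change on the master (requires root):
#   echo hadoop-master | sudo tee /etc/hostname   # persist the new name, then reboot
#   sudo hostnamectl set-hostname hadoop-master   # systemd alternative: updates the file and the running name
```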

After changing it, I rebooted the machine, and the problem was solved.
