Solving the Proxy Problem When Migrating Projects to a Kubernetes Cluster

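As Kubernetes matures, more and more companies choose Kubernetes clusters to manage their projects. New projects are the easy case: you pick a suitable cluster size and build from scratch. Migrating a legacy project into a Kubernetes cluster requires weighing many more factors, because the project cannot be down for long.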

Origin of the Problem

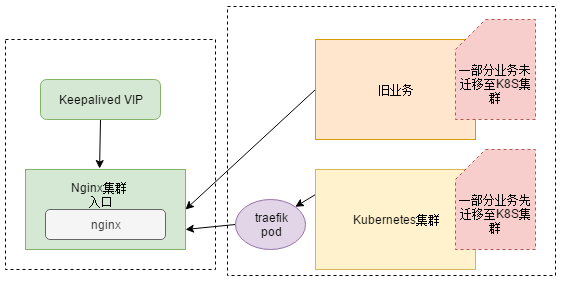

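While migrating a project to a Kubernetes cluster recently, I ran into an interesting problem. The Dubbo version the developers use is too old to register with ZooKeeper, so they have to upgrade Dubbo and then rebuild the images; in the meantime, the Node.js services are migrated into the Kubernetes cluster first. Because only part of the business is migrated, an Nginx proxy layer has to sit in front of Traefik (Nginx is the entry point for the legacy services and reverse-proxies the microservices behind it; the Alibaba Cloud SLB points at Nginx, and once everything has been migrated the SLB will point at Traefik). This architecture is a double-layer proxy: SLB --> Nginx --> Traefik --> Service.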

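Diagram

Possible solutions:

- Route the migrated services through NodePort: Nginx --> NodePort. Exposing every application directly via NodePort is hard to manage: you have to plan the port allocation yourself, and every node exposes every port. With 10,000 machines this is simply not realistic.

- Route the migrated services through ClusterIP: Nginx --> Traefik --> Service. This approach is reasonable.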

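Solving the Problem

I can't very well use the production environment for a blog post, so I'll demonstrate on virtual machines. The only real difference between the VMs and the production machines is the network environment.

Approach

- Deploy the k8s cluster

- Deploy nginx

- Deploy traefik

- Deploy the application

- Integration testing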

Deploying the k8s cluster

Use the deployment method from my earlier post: https://www.cnblogs.com/zisefeizhu/p/12505117.html

Deploying nginx

Download the required components

# hostname -I

20.0.0.101

# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

# uname -a

Linux fuxi-node02-101 4.4.186-1.el7.elrepo.x86_64 #1 SMP Sun Jul 21 04:06:52 EDT 2019 x86_64 x86_64 x86_64 GNU/Linux

# wget http://nginx.org/download/nginx-1.10.2.tar.gz

# wget http://www.openssl.org/source/openssl-fips-2.0.10.tar.gz

# wget http://zlib.net/zlib-1.2.11.tar.gz

# wget https://ftp.pcre.org/pub/pcre/pcre-8.40.tar.gz

# yum install gcc-c++

Configure, compile, and install the software

# tar zxvf openssl-fips-2.0.10.tar.gz

# cd openssl-fips-2.0.10/

# ./config && make && make install

# cd ..

# ll

# tar zxvf pcre-8.40.tar.gz

# cd pcre-8.40/

# ./configure && make && make install

# cd ..

# tar zxvf zlib-1.2.11.tar.gz

# cd zlib-1.2.11/

# ./configure && make && make install

# cd ..

# tar zxvf nginx-1.10.2.tar.gz

# cd nginx-1.10.2/

# ./configure --with-http_stub_status_module --prefix=/opt/nginx

# make && make install

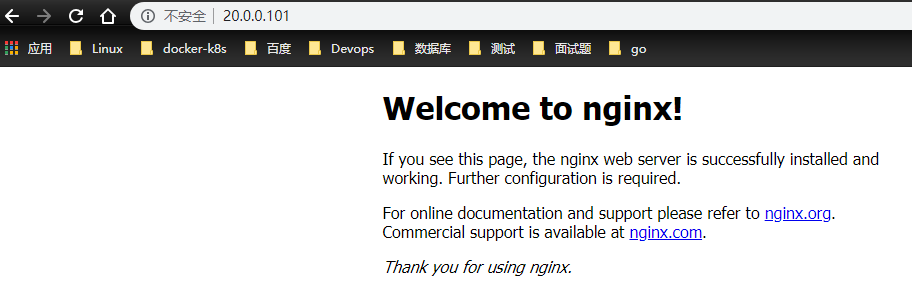

Start Nginx

# pwd

/opt/nginx

# ll

total 4

drwx------ 2 nobody root 6 Apr 22 11:30 client_body_temp

drwxr-xr-x 2 root root 4096 Apr 22 12:53 conf

drwx------ 2 nobody root 6 Apr 22 11:30 fastcgi_temp

drwxr-xr-x 2 root root 40 Apr 22 11:29 html

drwxr-xr-x 2 root root 41 Apr 22 14:24 logs

drwx------ 2 nobody root 6 Apr 22 11:30 proxy_temp

drwxr-xr-x 2 root root 19 Apr 22 11:29 sbin

drwx------ 2 nobody root 6 Apr 22 11:30 scgi_temp

drwx------ 2 nobody root 6 Apr 22 11:30 uwsgi_temp

# sbin/nginx

Deploying traefik

https://www.cnblogs.com/zisefeizhu/p/12692979.html

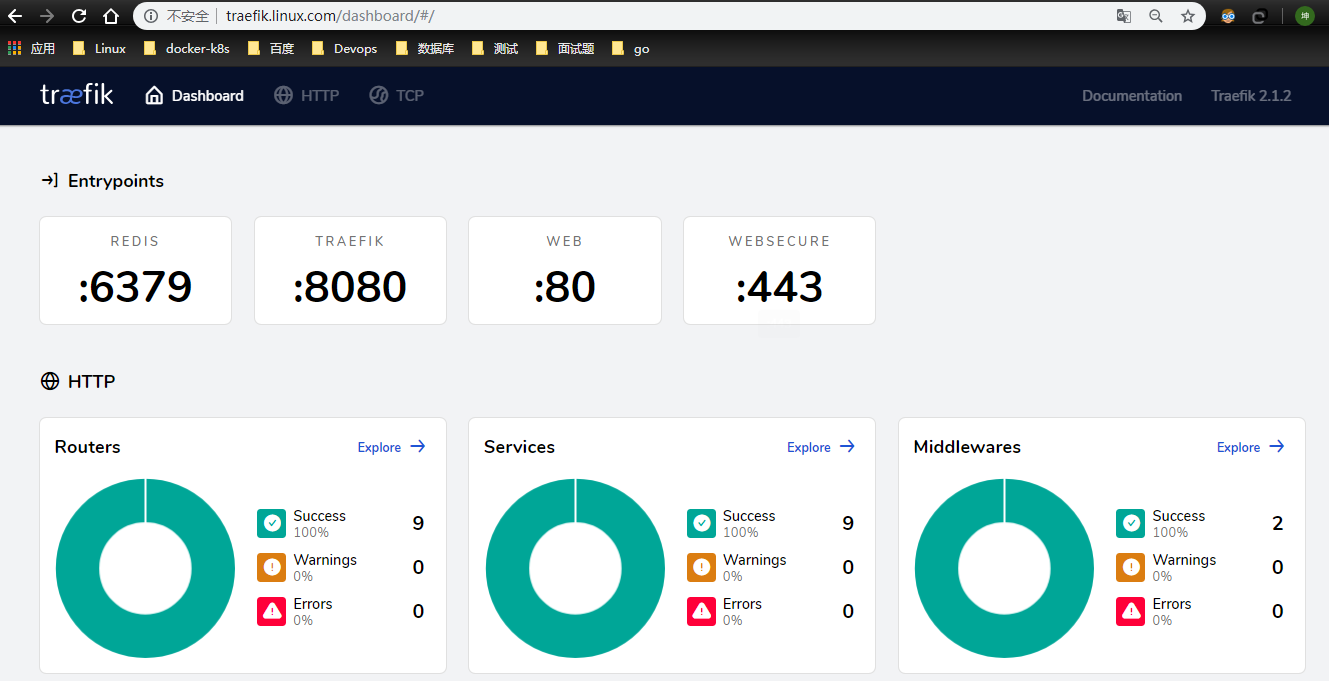

Environment check

# kubectl get pods,svc -A | grep traefik

kube-system pod/traefik-ingress-controller-z5qd7 1/1 Running 0 136m

kube-system service/traefik ClusterIP 10.68.251.132 <none> 80/TCP,443/TCP,8080/TCP 4h14m
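The same check can be scripted instead of read by eye. The following is only a sketch: `traefik_pod_status` is a hypothetical helper name, and it assumes the column layout shown above (namespace, name, READY, STATUS) and that the Traefik pod names start with `traefik`.

```shell
# Hypothetical helper: reads `kubectl get pods -A` (or `pods,svc -A`) lines on
# stdin and prints "ready" only if at least one Traefik pod is listed and every
# listed Traefik pod is Running with all of its containers ready.
traefik_pod_status() {
  awk '
    {
      name = $2
      if (name ~ /^service\//) next      # ignore Service rows
      sub(/^pod\//, "", name)            # "pod/" prefix appears with pods,svc
      if (name !~ /^traefik/) next       # assumption: pod names start with traefik
      seen = 1
      split($3, ready, "/")              # READY column, e.g. 1/1
      if ($4 != "Running" || ready[1] != ready[2]) bad = 1
    }
    END { if (seen && !bad) print "ready"; else print "not-ready" }
  '
}
```

A possible invocation is `kubectl get pods,svc -A | traefik_pod_status`.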

Browser access

Deploying the application

The test application here uses the containous/whoami image.

Test application deployment

# cat whoami.yaml

##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-04-22
#FileName: whoami.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: v1
kind: Service
metadata:
  name: whoami
spec:
  ports:
    - protocol: TCP
      name: web
      port: 80
  selector:
    app: whoami
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: whoami
  labels:
    app: whoami
spec:
  replicas: 2
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
        - name: whoami
          image: containous/whoami
          ports:
            - name: web
              containerPort: 80

# kubectl get svc,pod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/whoami ClusterIP 10.68.109.151 <none> 80/TCP 3h30m

NAME READY STATUS RESTARTS AGE

pod/whoami-bd6b677dc-jvqc2 1/1 Running 0 3h30m

pod/whoami-bd6b677dc-lvcxp 1/1 Running 0 3h30m

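Integration testing

The chosen solution is nginx --> traefik --> service, so the test proceeds in stages:

- traefik --> service

- nginx --> traefik

- nginx --> service

traefik --> service

The resource manifest that proxies the test application through traefik: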

# cat traefik-whoami.yaml

##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-04-22
#FileName: traefik-whoami.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: simpleingressroute
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`who.linux.com`) && PathPrefix(`/notls`)
      kind: Rule
      services:
        - name: whoami
          port: 80

本地hosts解析

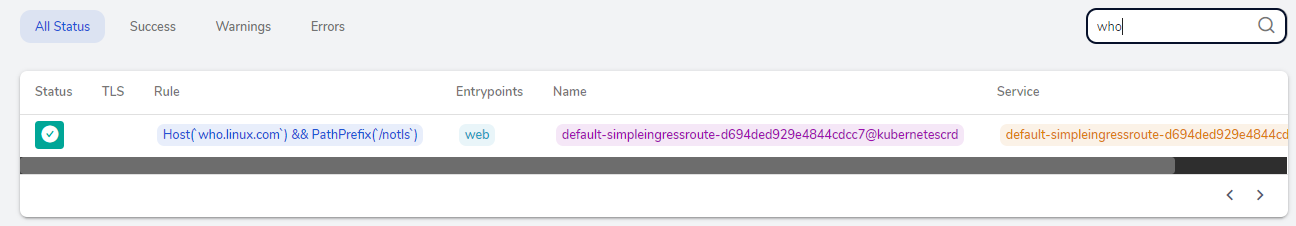

traefik介面觀察是代理成功:

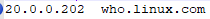

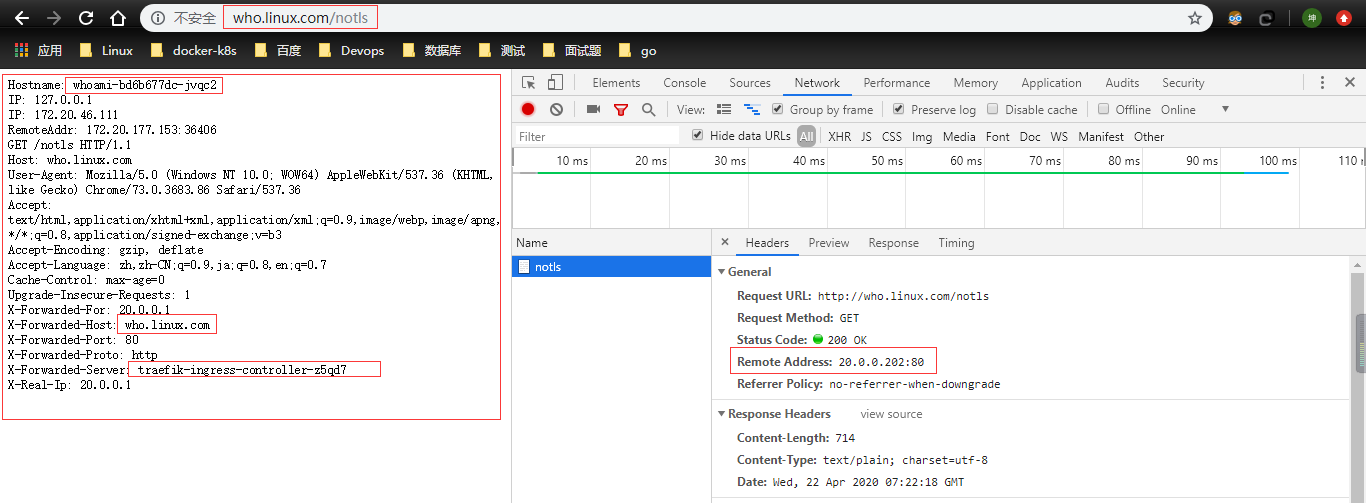

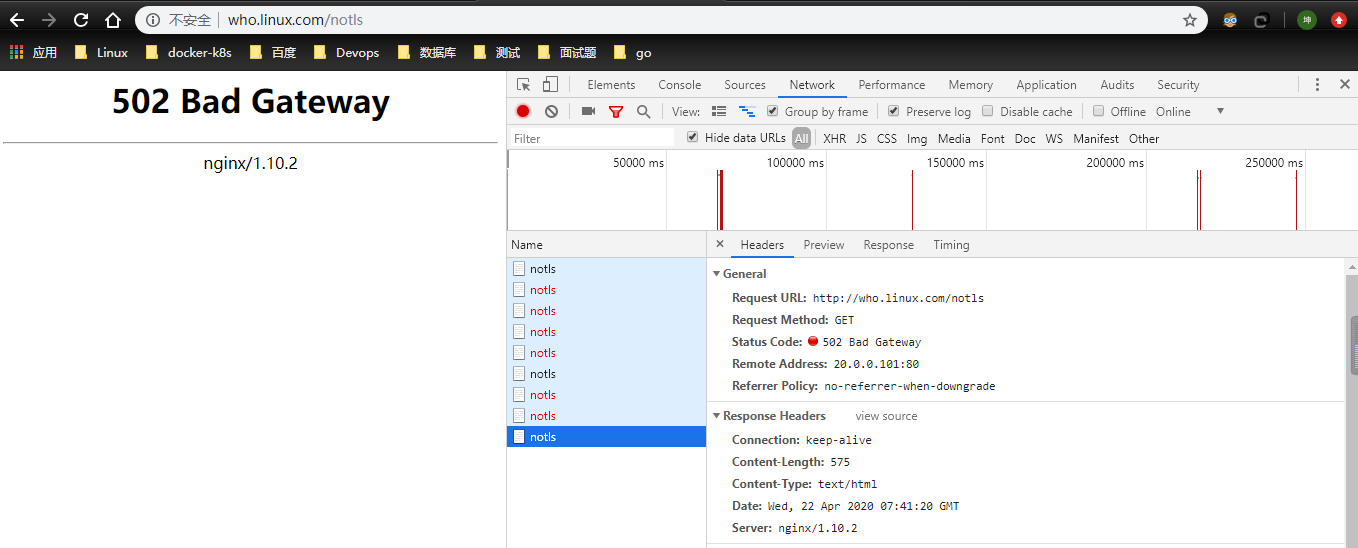

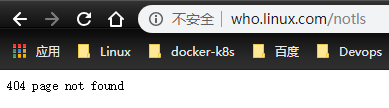

Access who.linux.com/notls
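The route can also be probed from a shell without editing the hosts file, since curl's `--resolve` option pins a host name to an address for a single request. This is a sketch under assumptions: `probe_route` and the `CURL` override variable are hypothetical names introduced here, and the addresses are the ones used in this post.

```shell
# Hypothetical helper: request http://<host><path> while forcing <host> to
# resolve to <addr>, and print only the HTTP status code.
# Usage: probe_route <host> <addr> [path]
probe_route() {
  host="$1"
  addr="$2"
  path="${3:-/}"
  # CURL can be overridden (for example with a stub) when testing the helper.
  "${CURL:-curl}" -sS -o /dev/null -w '%{http_code}' \
    --resolve "${host}:80:${addr}" "http://${host}${path}"
}
```

For example, `probe_route who.linux.com 20.0.0.202 /notls` targets the traefik node directly, while `probe_route who.linux.com 20.0.0.101 /notls` goes through the nginx host.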

nginx --> traefik

# cat conf/nginx.conf

user nobody;
worker_processes 4;
events {
    use epoll;
    worker_connections 2048;
}
http {
    # traefik node inside the k8s cluster
    upstream app {
        server 20.0.0.202;
    }
    server {
        listen 80;
        # server_name who2.linux.com;
        access_log logs/access.log;
        error_log logs/error.log;
        location / {
            proxy_set_header X-Real-IP $remote_addr;
            # append the client address to any existing X-Forwarded-For chain
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            # pass the original Host header so traefik can match Host(`who.linux.com`)
            proxy_set_header Host $host;
            proxy_headers_hash_max_size 51200;
            proxy_headers_hash_bucket_size 6400;
            proxy_redirect off;
            proxy_read_timeout 600;
            proxy_connect_timeout 600;
            proxy_pass http://app;
        }
    }
}
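After editing conf/nginx.conf it is usually worth validating the file before applying it. A minimal sketch, assuming the /opt/nginx prefix used in this post; `nginx_check_and_reload` and the `NGINX` override variable are hypothetical names, while `-t` (test configuration) and `-s reload` are standard nginx command-line options.

```shell
# Hypothetical helper: reload nginx only if the configuration test passes.
nginx_check_and_reload() {
  # Assumption: nginx was installed with --prefix=/opt/nginx as above.
  nginx_bin="${NGINX:-/opt/nginx/sbin/nginx}"
  # -t parses the configuration and reports syntax errors without applying it;
  # -s reload asks the running master process to re-read the configuration.
  "$nginx_bin" -t && "$nginx_bin" -s reload
}
```

If the syntax check fails, the running nginx keeps serving with its previous configuration.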

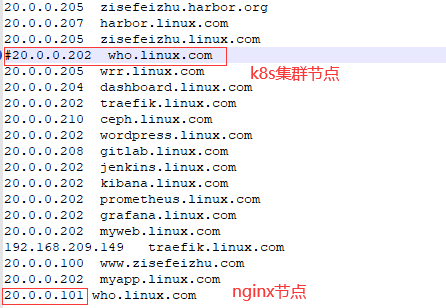

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

20.0.0.202 who.linux.com # the k8s node where traefik runs; in fact any k8s node will do

# curl -iL who.linux.com/notls

HTTP/1.1 200 OK

Content-Length: 388

Content-Type: text/plain; charset=utf-8

Date: Wed, 22 Apr 2020 07:33:52 GMT

Hostname: whoami-bd6b677dc-lvcxp

IP: 127.0.0.1

IP: 172.20.46.67

RemoteAddr: 172.20.177.153:58168

GET /notls HTTP/1.1

Host: who.linux.com

User-Agent: curl/7.29.0

Accept: */*

Accept-Encoding: gzip

X-Forwarded-For: 20.0.0.101

X-Forwarded-Host: who.linux.com

X-Forwarded-Port: 80

X-Forwarded-Proto: http

X-Forwarded-Server: traefik-ingress-controller-z5qd7

X-Real-Ip: 20.0.0.101

If you are not familiar with nginx, see this expert's post: https://www.cnblogs.com/kevingrace/p/6095027.html

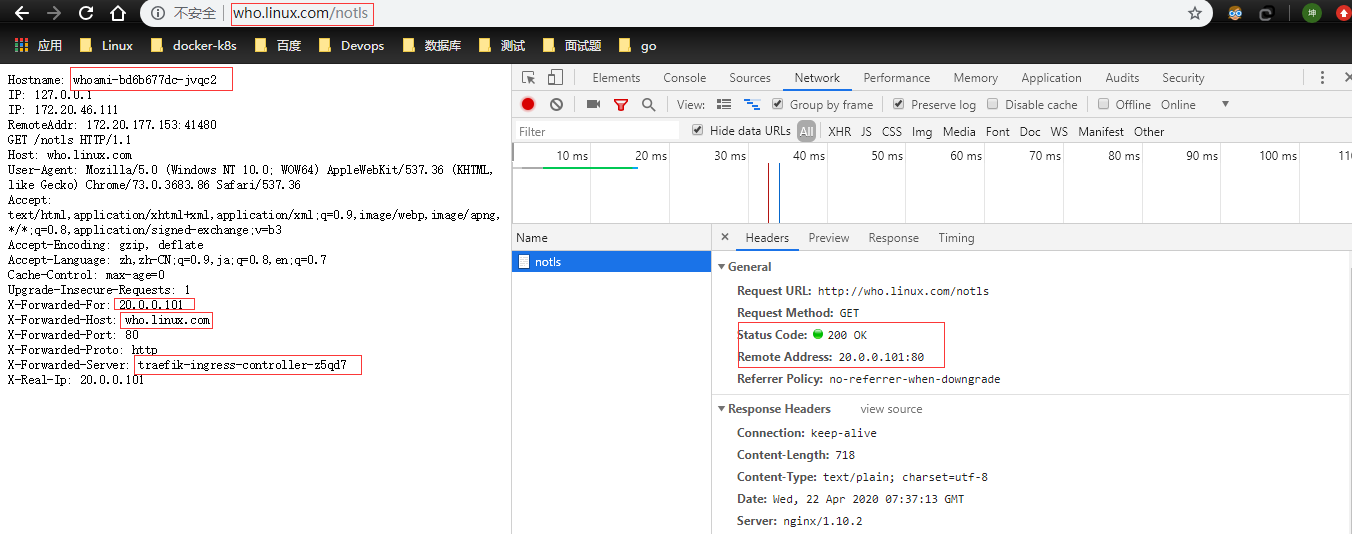

nginx --> service

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

20.0.0.101 who.linux.com

# curl -iL who.linux.com/notls

HTTP/1.1 200 OK // response status

Server: nginx/1.10.2 // the server that answered

Date: Wed, 22 Apr 2020 07:27:46 GMT

Content-Type: text/plain; charset=utf-8

Content-Length: 389

Connection: keep-alive

Hostname: whoami-bd6b677dc-jvqc2

IP: 127.0.0.1

IP: 172.20.46.111

RemoteAddr: 172.20.177.153:38298

GET /notls HTTP/1.1

Host: who.linux.com

User-Agent: curl/7.29.0

Accept: */*

Accept-Encoding: gzip

X-Forwarded-For: 20.0.0.101

X-Forwarded-Host: who.linux.com

X-Forwarded-Port: 80

X-Forwarded-Proto: http

X-Forwarded-Server: traefik-ingress-controller-z5qd7

X-Real-Ip: 20.0.0.101
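The two curl outputs differ in a way that can be checked mechanically: the response that came through nginx carries a `Server: nginx/...` header, and in both cases whoami echoes back the `X-Forwarded-Server` header that traefik added to the request. The sketch below classifies a saved `curl -i` response on that basis; `classify_path` is a hypothetical helper, and it assumes the traefik pod name starts with `traefik`.

```shell
# Hypothetical helper: reads `curl -i` output on stdin and prints which proxy
# layers the request appears to have passed through.
classify_path() {
  awk '
    { sub(/\r$/, "") }                                   # drop CR from header lines
    tolower($1) == "server:" && tolower($2) ~ /^nginx/ { via_nginx = 1 }
    tolower($1) == "x-forwarded-server:" && $2 ~ /^traefik/ { via_traefik = 1 }
    END {
      if (via_nginx && via_traefik) print "nginx->traefik->service"
      else if (via_traefik)         print "traefik->service"
      else if (via_nginx)           print "nginx only"
      else                          print "unknown"
    }
  '
}
```

One way to use it is `curl -si who.linux.com/notls | classify_path`.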

nginx log

# tail -f access.log

20.0.0.101 - - [22/Apr/2020:15:28:28 +0800] "GET /notls HTTP/1.1" 200 389 "-" "curl/7.29.0"

Browser test

Further testing

Shut down the traefik application, then test again

# kubectl delete -f .

configmap "traefik-config" deleted

customresourcedefinition.apiextensions.k8s.io "ingressroutes.traefik.containo.us" deleted

customresourcedefinition.apiextensions.k8s.io "ingressroutetcps.traefik.containo.us" deleted

customresourcedefinition.apiextensions.k8s.io "middlewares.traefik.containo.us" deleted

customresourcedefinition.apiextensions.k8s.io "tlsoptions.traefik.containo.us" deleted

customresourcedefinition.apiextensions.k8s.io "traefikservices.traefik.containo.us" deleted

ingressroute.traefik.containo.us "traefik-dashboard-route" deleted

service "traefik" deleted

daemonset.apps "traefik-ingress-controller" deleted

serviceaccount "traefik-ingress-controller" deleted

clusterrole.rbac.authorization.k8s.io "traefik-ingress-controller" deleted

clusterrolebinding.rbac.authorization.k8s.io "traefik-ingress-controller" deleted

# kubectl delete -f traefik-whoami.yaml // remove the traefik route for whoami

ingressroute.traefik.containo.us "simpleingressroute" deleted

Nothing more to add; the test results are clear. When accessing who.linux.com, the traffic flows nginx --> traefik --> service.