Python大戰機器學習 (Python vs. Machine Learning)

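1 Matrix Derivatives

To differentiate a complicated matrix expression, build up from small to large: from scalar to vector, and then to matrix.

Here x is a column vector and A is a matrix.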

$$\frac{d(Ax)}{dx} = A$$

$$\frac{d(x^{T}A)}{dx^{T}} = A$$

$$\frac{d(x^{T}A)}{dx} = A^{T}$$

$$\frac{d(x^{T}Ax)}{dx} = x^{T}(A^{T} + A)$$
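The identity $d(x^{T}Ax)/dx = x^{T}(A^{T}+A)$ is easy to sanity-check numerically. The sketch below compares it with a central finite-difference approximation; the helper name `numerical_gradient`, the random test data, and the step size are illustrative assumptions, not part of the original notes.

```python
import numpy as np

def numerical_gradient(f, x, h=1e-6):
    """Central finite-difference gradient of a scalar function f at x."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        grad[i] = (f(x + step) - f(x - step)) / (2 * h)
    return grad

rng = np.random.default_rng(0)
A = rng.normal(size=(4, 4))   # arbitrary matrix, not symmetric in general
x = rng.normal(size=4)        # NumPy 1-D array, so row/column is not distinguished

def quadratic_form(v):
    return v @ A @ v          # x^T A x

analytic = x @ (A.T + A)      # x^T (A^T + A)
numeric = numerical_gradient(quadratic_form, x)

max_abs_error = np.max(np.abs(analytic - numeric))
print(max_abs_error)          # should be tiny
```

When A is symmetric the result reduces to $2x^{T}A$, which is the form most often seen in least-squares derivations.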

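Practice:

The commonly used matrix derivative formulas are listed below. In these rules the derivative of a scalar with respect to an N×1 vector is again an N×1 vector, which differs from the row-vector form $x^{T}(A^{T}+A)$ used above.

$$Y = AX \;\Rightarrow\; \frac{dY}{dX} = A^{T}$$

$$Y = XA \;\Rightarrow\; \frac{dY}{dX} = A$$

$$Y = A^{T}XB \;\Rightarrow\; \frac{dY}{dX} = AB^{T}$$

$$Y = A^{T}X^{T}B \;\Rightarrow\; \frac{dY}{dX} = BA^{T}$$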

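1. Derivative of a matrix $Y$ with respect to a scalar $x$:

In this convention, differentiate every element and then transpose the result. Note that an M×N matrix becomes N×M after differentiation.

$$Y = [y_{ij}] \;\Rightarrow\; \frac{dY}{dx} = \left[\frac{dy_{ji}}{dx}\right]$$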

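2. Derivative of a scalar $y$ with respect to a column vector $X$:

Unlike the previous case, the entries are partial derivatives and there is no transpose: differentiating with respect to an N×1 vector gives an N×1 vector.

$$y = f(x_1, x_2, \ldots, x_n) \;\Rightarrow\; \frac{dy}{dX} = \left(\frac{\partial y}{\partial x_1}, \frac{\partial y}{\partial x_2}, \ldots, \frac{\partial y}{\partial x_n}\right)^{T}$$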

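3. Derivative of a row vector $Y^{T}$ with respect to a column vector $X$:

Differentiating a 1×M vector with respect to an N×1 vector gives an N×M matrix.

Take the partial derivatives of each column (component) of $Y^{T}$ with respect to $X$, and use the results as the columns of the matrix.

Key results:

$$\frac{dX^{T}}{dX} = I$$

$$\frac{d(AX)^{T}}{dX} = A^{T}$$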
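The N×M layout of rule 3 can be made concrete with a small numerical sketch. Entry (i, j) of the result holds the partial derivative of the j-th component of the row vector with respect to the i-th component of X. The function name `row_by_column_derivative` and the test matrices are hypothetical, chosen only for illustration.

```python
import numpy as np

def row_by_column_derivative(f, x, h=1e-6):
    """Finite-difference derivative of the row vector f(x)' w.r.t. column x.

    Entry (i, j) holds d f_j / d x_i, so an M-vector differentiated by an
    N-vector gives an N x M matrix, as stated in rule 3.
    """
    n = x.size
    m = f(x).size
    D = np.zeros((n, m))
    for i in range(n):
        step = np.zeros(n)
        step[i] = h
        D[i, :] = (f(x + step) - f(x - step)) / (2 * h)
    return D

rng = np.random.default_rng(1)
A = rng.normal(size=(3, 5))   # M x N with M = 3, N = 5
X = rng.normal(size=5)

D_identity = row_by_column_derivative(lambda v: v, X)     # dX'/dX
D_linear = row_by_column_derivative(lambda v: A @ v, X)   # d(AX)'/dX
print(D_linear.shape)  # (5, 3)
```

Up to finite-difference error, `D_identity` matches the identity matrix and `D_linear` matches `A.T`, in agreement with the two key results.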

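4. Derivative of a column vector $Y$ with respect to a row vector $X^{T}$:

Convert it to the derivative of the row vector $Y^{T}$ with respect to the column vector $X$, then transpose.

Differentiating an M×1 vector with respect to a 1×N vector gives an M×N matrix.

$$\frac{dY}{dX^{T}} = \left(\frac{dY^{T}}{dX}\right)^{T}$$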

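5. Product rules for vector products differentiated with respect to a column vector $X$:

These differ slightly from the scalar product rule.

$$\frac{d(UV^{T})}{dX} = \frac{dU}{dX}V^{T} + U\frac{dV^{T}}{dX}$$

$$\frac{d(U^{T}V)}{dX} = \frac{dU^{T}}{dX}V + \frac{dV^{T}}{dX}U$$

Key results (with $A$ constant):

$$\frac{d(X^{T}A)}{dX} = \frac{dX^{T}}{dX}A + \frac{dA^{T}}{dX}X = IA + 0X = A$$

$$\frac{d(AX)}{dX^{T}} = \left(\frac{d(X^{T}A^{T})}{dX}\right)^{T} = (A^{T})^{T} = A$$

$$\frac{d(X^{T}AX)}{dX} = \frac{dX^{T}}{dX}AX + \frac{d(AX)^{T}}{dX}X = AX + A^{T}X$$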

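6. Derivative of a matrix $Y$ with respect to a column vector $X$:

Take the partial derivative of $Y$ with respect to each component of $X$ and stack the results into a "super-vector".

Note that every element of this vector is itself a matrix.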

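7. Product rules for matrix products differentiated with respect to a column vector:

$$\frac{d(uV)}{dX} = \frac{du}{dX}V + u\frac{dV}{dX}$$

$$\frac{d(UV)}{dX} = \frac{dU}{dX}V + U\frac{dV}{dX}$$

Key result:

$$\frac{d(X^{T}A)}{dX} = \frac{dX^{T}}{dX}A + X^{T}\frac{dA}{dX} = IA + X^{T}0 = A$$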

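8. Derivative of a scalar $y$ with respect to a matrix $X$:

This is similar to the derivative of a scalar $y$ with respect to a column vector $X$: take the partial derivative of $y$ with respect to every element of $X$, with no transpose.

$$\frac{dy}{dX} = \left[\frac{\partial y}{\partial x_{ij}}\right]$$

Key results:

$$y = U^{T}XV = \sum_{i}\sum_{j} u_{i}x_{ij}v_{j} \;\Rightarrow\; \frac{dy}{dX} = [u_{i}v_{j}] = UV^{T}$$

$$y = U^{T}X^{T}XU \;\Rightarrow\; \frac{dy}{dX} = 2XUU^{T}$$

$$y = (XU - V)^{T}(XU - V) \;\Rightarrow\; \frac{dy}{dX} = \frac{d(U^{T}X^{T}XU - 2V^{T}XU + V^{T}V)}{dX} = 2XUU^{T} - 2VU^{T} + 0 = 2(XU - V)U^{T}$$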
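The last result, $dy/dX = 2(XU-V)U^{T}$ for $y = (XU-V)^{T}(XU-V)$, can be checked element by element with finite differences. The shapes and random values below are arbitrary assumptions made for the check.

```python
import numpy as np

def loss(X, U, V):
    """y = (XU - V)'(XU - V), a scalar."""
    r = X @ U - V
    return float(r @ r)

rng = np.random.default_rng(2)
X = rng.normal(size=(3, 4))
U = rng.normal(size=4)
V = rng.normal(size=3)

# Closed form from rule 8: 2 (XU - V) U'
analytic = 2 * np.outer(X @ U - V, U)

# Perturb one element of X at a time, with no transpose in the result
numeric = np.zeros_like(X)
h = 1e-6
for i in range(X.shape[0]):
    for j in range(X.shape[1]):
        E = np.zeros_like(X)
        E[i, j] = h
        numeric[i, j] = (loss(X + E, U, V) - loss(X - E, U, V)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))
```

The result has the same 3×4 shape as X, which is what "no transpose" means in rule 8.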

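9. Derivative of a matrix $Y$ with respect to a matrix $X$:

Differentiate every element of $Y$ with respect to $X$, then arrange the results together into a "super-matrix".

10. Derivative of a product:

$$\frac{d(f \cdot g)}{dx} = \frac{df^{T}}{dx}g + \frac{dg}{dx}f^{T}$$

Result, taking $f = x^{T}$ and $g = Ax$:

$$\frac{d(x^{T}Ax)}{dx} = \frac{d\,(x^{T})^{T}}{dx}Ax + \frac{d(Ax)}{dx}(x^{T})^{T} = Ax + A^{T}x$$

(Note: $(x^{T})^{T}$ means transposing twice, which gives back $x$.)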

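2 Linear Models

2.1 Ordinary Least Squares

Ordinary least squares is implemented by the LinearRegression class. Its weakness is that it relies on the independence of the predictors. When multicollinearity is present, the design matrix is close to singular, so the least-squares estimate becomes very sensitive: a small fluctuation in the random error can change the estimate considerably. Multicollinearity is especially likely when the data are collected without a designed experiment.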

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn import datasets, linear_model

    diabetes = datasets.load_diabetes()  # load the data

    # Use only the third feature (column index 2), kept as a 2-D column
    diabetes_x = diabetes.data[:, np.newaxis, 2]

    diabetes_x_train = diabetes_x[:-20]  # training samples
    diabetes_x_test = diabetes_x[-20:]   # test samples
    diabetes_y_train = diabetes.target[:-20]
    diabetes_y_test = diabetes.target[-20:]

    regr = linear_model.LinearRegression()
    regr.fit(diabetes_x_train, diabetes_y_train)

    print("Coefficients:\n", regr.coef_)

    # Mean of the squared residuals on the test set
    print("Mean squared error: %.2f"
          % np.mean((regr.predict(diabetes_x_test) - diabetes_y_test) ** 2))

    # score() returns R^2; 1.0 is a perfect fit
    print("Variance score: %.2f" % regr.score(diabetes_x_test, diabetes_y_test))

    plt.scatter(diabetes_x_test, diabetes_y_test, color="black")
    plt.plot(diabetes_x_test, regr.predict(diabetes_x_test), color="blue", linewidth=3)
    plt.xticks(())
    plt.yticks(())
    plt.show()
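The sensitivity to multicollinearity described at the start of this section can be seen on synthetic data. The sketch below builds one design matrix with independent columns and one whose second column is almost a copy of the first, then measures how far the least-squares coefficients move when the target is perturbed slightly. The data, noise levels, and the helper name `ols_shift` are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50
x1 = rng.normal(size=n)
x2_independent = rng.normal(size=n)
x2_collinear = x1 + 1e-4 * rng.normal(size=n)   # almost a copy of x1

X_good = np.column_stack([x1, x2_independent])
X_bad = np.column_stack([x1, x2_collinear])

# A large condition number means the design matrix is close to singular
cond_good = np.linalg.cond(X_good)
cond_bad = np.linalg.cond(X_bad)

def ols_shift(X, noise_scale=1e-2):
    """Distance the OLS coefficients move when y is perturbed slightly."""
    y = X @ np.array([1.0, 1.0])
    y_noisy = y + noise_scale * rng.normal(size=y.shape)
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    w_noisy, *_ = np.linalg.lstsq(X, y_noisy, rcond=None)
    return np.linalg.norm(w_noisy - w)

shift_good = ols_shift(X_good)
shift_bad = ols_shift(X_bad)

print("condition numbers:", cond_good, cond_bad)
print("coefficient shifts:", shift_good, shift_bad)
```

The same small noise in the target produces a much larger change in the coefficients for the nearly collinear design, which is the instability that ridge regression in the next section is meant to reduce.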

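2.2 Ridge Regression

Ridge regression is a regularization method: it adds an L2-norm penalty to the loss function to limit the complexity of the linear model, which makes the model more robust.

    from sklearn import linear_model

    clf = linear_model.Ridge(alpha=0.5)
    clf.fit([[0, 0], [0, 0], [1, 1]], [0, 0.1, 1])
    print(clf.coef_)  # fitted coefficients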
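Ridge regression also connects back to the matrix derivatives in section 1. Setting the derivative of $(Xw-y)^{T}(Xw-y) + \alpha w^{T}w$ with respect to $w$ to zero gives $(X^{T}X + \alpha I)w = X^{T}y$. The sketch below solves that system directly with NumPy. It is a simplified illustration with no intercept, not a description of how the Ridge class is implemented, and the function name `ridge_closed_form` and the synthetic data are assumptions.

```python
import numpy as np

def ridge_closed_form(X, y, alpha):
    """Solve (X'X + alpha I) w = X'y; no intercept term is fitted."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + 0.1 * rng.normal(size=30)

w_ols = ridge_closed_form(X, y, alpha=0.0)    # alpha = 0 is plain least squares
w_small = ridge_closed_form(X, y, alpha=0.5)
w_large = ridge_closed_form(X, y, alpha=50.0)

# The coefficient norm shrinks as the penalty grows
norms = [np.linalg.norm(w) for w in (w_ols, w_small, w_large)]
print(norms)
```

A larger alpha pulls the coefficients toward zero, which is the complexity control mentioned above.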

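2.3 Lasso

Lasso differs from ridge regression in that its penalty is based on the L1 norm. It can therefore shrink coefficients all the way to 0, which performs variable selection, and it is a very popular variable selection method. There are two main algorithms for computing the Lasso estimate: coordinate descent, which is used by the Lasso class shown here, and least angle regression, which is introduced later.

    from sklearn import linear_model

    clf = linear_model.Lasso(alpha=0.1)
    clf.fit([[0, 0], [1, 1]], [0, 1])
    print(clf.predict([[1, 1]]))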
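To show why the L1 penalty produces exact zeros, here is a minimal coordinate descent sketch for the objective $\frac{1}{2n}\lVert y - Xw\rVert^{2} + \alpha\lVert w\rVert_{1}$. Each coordinate update is a soft-thresholding step, which sets a coefficient to exactly 0 whenever its correlation with the residual is below $\alpha$. This is a teaching sketch with a fixed number of sweeps and no intercept, not scikit-learn's implementation; the function names and synthetic data are assumptions.

```python
import numpy as np

def soft_threshold(value, threshold):
    """Shrink value toward 0 by threshold; return 0 if it is within threshold."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)

def lasso_coordinate_descent(X, y, alpha, n_sweeps=200):
    """Minimize (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1 one coordinate at a time."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    col_sq = (X ** 2).sum(axis=0) / n_samples
    for _ in range(n_sweeps):
        for j in range(n_features):
            # Residual with feature j's current contribution removed
            residual_without_j = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ residual_without_j / n_samples
            w[j] = soft_threshold(rho, alpha) / col_sq[j]
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
true_w = np.array([3.0, 0.0, 0.0, -2.0, 0.0])   # only features 0 and 3 matter
y = X @ true_w + 0.1 * rng.normal(size=100)

w_lasso = lasso_coordinate_descent(X, y, alpha=0.5)
print(w_lasso)
```

The coefficients of the three irrelevant features come out as exact zeros, while the two relevant ones are kept but shrunk toward zero. That shrinkage is the price paid for the variable selection.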

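2.4 Elastic Net

ElasticNet blends Lasso and ridge regression: its penalty is a trade-off between the L1 norm and the L2 norm. The script below compares the regularization paths of Lasso and Elastic Net and plots them.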

    # Author: Alexandre Gramfort
    # License: BSD Style.

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn import datasets
    from sklearn.linear_model import enet_path, lasso_path

    diabetes = datasets.load_diabetes()
    X = diabetes.data
    y = diabetes.target

    X /= X.std(axis=0)  # Standardize data (easier to set the l1_ratio parameter)

    # Compute paths
    eps = 5e-3  # the smaller it is, the longer the path

    # Recent scikit-learn versions return (alphas, coefs, dual_gaps) from the
    # path functions; coefs has shape (n_features, n_alphas).
    print("Computing regularization path using the lasso...")
    alphas_lasso, coefs_lasso, _ = lasso_path(X, y, eps=eps)

    print("Computing regularization path using the positive lasso...")
    alphas_positive_lasso, coefs_positive_lasso, _ = lasso_path(
        X, y, eps=eps, positive=True)

    print("Computing regularization path using the elastic net...")
    alphas_enet, coefs_enet, _ = enet_path(X, y, eps=eps, l1_ratio=0.8)

    print("Computing regularization path using the positive elastic net...")
    alphas_positive_enet, coefs_positive_enet, _ = enet_path(
        X, y, eps=eps, l1_ratio=0.8, positive=True)

    # Display results
    def plot_paths(fig_num, solid, dashed, labels, title):
        """Plot two (alphas, coefs) paths against -log10(alpha)."""
        plt.figure(fig_num)
        ax = plt.gca()
        # set_prop_cycle replaces set_color_cycle, which newer Matplotlib removed
        ax.set_prop_cycle(color=2 * ["b", "r", "g", "c", "k"])
        l1 = ax.plot(-np.log10(solid[0]), solid[1].T)
        l2 = ax.plot(-np.log10(dashed[0]), dashed[1].T, linestyle="--")
        ax.set_xlabel("-Log(lambda)")
        ax.set_ylabel("weights")
        ax.set_title(title)
        ax.legend((l1[-1], l2[-1]), labels, loc="lower left")
        ax.axis("tight")

    plot_paths(1, (alphas_lasso, coefs_lasso), (alphas_enet, coefs_enet),
               ("Lasso", "Elastic-Net"), "Lasso and Elastic-Net Paths")
    plot_paths(2, (alphas_lasso, coefs_lasso),
               (alphas_positive_lasso, coefs_positive_lasso),
               ("Lasso", "positive Lasso"), "Lasso and positive Lasso")
    plot_paths(3, (alphas_enet, coefs_enet),
               (alphas_positive_enet, coefs_positive_enet),
               ("Elastic-Net", "positive Elastic-Net"),
               "Elastic-Net and positive Elastic-Net")
    plt.show()

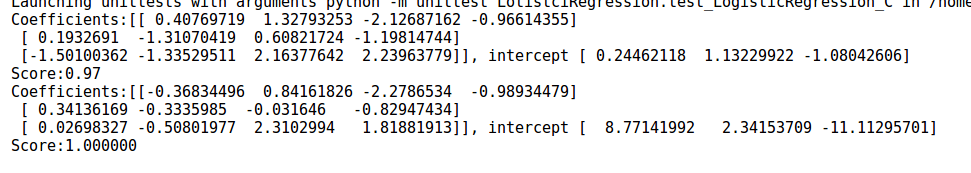
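The trade-off between the L1 and L2 norms mentioned at the start of this section can be written out as a small function. The sketch below uses the parametrization documented for scikit-learn's ElasticNet, in which `alpha` sets the overall strength and `l1_ratio` sets the mix. The function name `elastic_net_penalty` and the example vector are assumptions for illustration.

```python
import numpy as np

def elastic_net_penalty(w, alpha, l1_ratio):
    """alpha * (l1_ratio * ||w||_1 + 0.5 * (1 - l1_ratio) * ||w||_2^2)."""
    l1 = np.sum(np.abs(w))
    l2_squared = np.sum(w ** 2)
    return alpha * (l1_ratio * l1 + 0.5 * (1.0 - l1_ratio) * l2_squared)

w = np.array([1.0, -2.0, 0.0])   # ||w||_1 = 3, ||w||_2^2 = 5

pure_l1 = elastic_net_penalty(w, alpha=1.0, l1_ratio=1.0)   # Lasso penalty: 3.0
pure_l2 = elastic_net_penalty(w, alpha=1.0, l1_ratio=0.0)   # ridge-style penalty: 2.5
mixed = elastic_net_penalty(w, alpha=1.0, l1_ratio=0.8)     # about 2.9

print(pure_l1, pure_l2, mixed)
```

With `l1_ratio=0.8`, as in the script above, the penalty is mostly L1, so the Elastic Net paths stay close to the Lasso paths while the small L2 part makes them a little smoother.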

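2.5 Logistic Regression

Logistic regression is a linear classifier. The LogisticRegression class implements it, including versions with L1-norm and L2-norm penalties. To try the model, I classify the iris data set. It contains 150 samples in 3 classes (setosa, versicolor, virginica), with 50 samples per class. Each sample has 4 attributes: sepal length, sepal width, petal length, and petal width. The code is as follows: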

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn import datasets, linear_model, model_selection, multiclass

    def load_data():
        iris = datasets.load_iris()
        X_train = iris.data
        Y_train = iris.target
        # Stratified 75/25 split (cross_validation is now model_selection)
        return model_selection.train_test_split(
            X_train, Y_train, test_size=0.25, random_state=0, stratify=Y_train)

    def test_LogisticRegression(*data):  # one-vs-rest
        X_train, X_test, Y_train, Y_test = data
        # Recent scikit-learn versions fit a multinomial model by default,
        # so one-vs-rest is requested explicitly with a wrapper.
        regr = multiclass.OneVsRestClassifier(
            linear_model.LogisticRegression(max_iter=1000))
        regr.fit(X_train, Y_train)
        coefs = np.vstack([est.coef_ for est in regr.estimators_])
        intercepts = np.hstack([est.intercept_ for est in regr.estimators_])
        print("Coefficients:%s, intercept %s" % (coefs, intercepts))
        print("Score:%.2f" % regr.score(X_test, Y_test))

    def test_LogisticRegression_multinomial(*data):  # multinomial (softmax)
        X_train, X_test, Y_train, Y_test = data
        # With lbfgs, recent versions use the multinomial loss for multiclass data
        regr = linear_model.LogisticRegression(solver="lbfgs", max_iter=1000)
        regr.fit(X_train, Y_train)
        print("Coefficients:%s, intercept %s" % (regr.coef_, regr.intercept_))
        print("Score:%.2f" % regr.score(X_test, Y_test))

    def test_LogisticRegression_C(*data):  # C is the inverse of the regularization strength
        X_train, X_test, Y_train, Y_test = data
        Cs = np.logspace(-2, 4, num=100)  # 100 values evenly spaced on a log scale
        scores = []
        for C in Cs:
            regr = linear_model.LogisticRegression(C=C, max_iter=1000)
            regr.fit(X_train, Y_train)
            scores.append(regr.score(X_test, Y_test))
        fig = plt.figure()
        ax = fig.add_subplot(1, 1, 1)
        ax.plot(Cs, scores)
        ax.set_xlabel(r"C")
        ax.set_ylabel(r"score")
        ax.set_xscale("log")
        ax.set_title("LogisticRegression")
        plt.show()

    X_train, X_test, Y_train, Y_test = load_data()
    test_LogisticRegression(X_train, X_test, Y_train, Y_test)
    test_LogisticRegression_multinomial(X_train, X_test, Y_train, Y_test)
    test_LogisticRegression_C(X_train, X_test, Y_train, Y_test)

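The results show that the multinomial strategy can improve accuracy.

They also show that prediction accuracy increases as C increases. Once C is large enough, the accuracy levels off at a high value.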

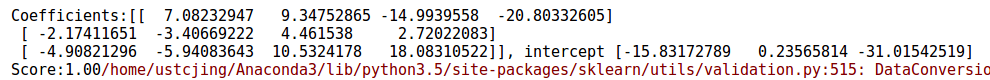
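The role of C can be illustrated without scikit-learn. One common way to write the L2-penalized logistic objective is $\frac{1}{2}w^{T}w + C\sum_i \log(1 + e^{-y_i x_i^{T}w})$ with labels $y_i \in \{-1, +1\}$, so a larger C puts more weight on fitting the data and less on keeping $w$ small. The sketch below minimizes this objective with plain gradient descent on synthetic two-class data. The function name `fit_logistic_l2`, the data, the step-size rule, and the iteration count are assumptions, and the code is far simpler than the solvers LogisticRegression actually uses.

```python
import numpy as np

def fit_logistic_l2(X, y, C, n_iter=5000):
    """Gradient descent on 0.5 * ||w||^2 + C * sum(log(1 + exp(-y * Xw))).

    Labels y are in {-1, +1}; no intercept is fitted.
    """
    # Step size from a Lipschitz bound on the gradient: 1 + C * ||X||_2^2 / 4
    lipschitz = 1.0 + C * np.linalg.norm(X, 2) ** 2 / 4.0
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        margins = y * (X @ w)
        # The gradient of log(1 + exp(-m)) with respect to w is -y * x / (1 + exp(m))
        grad = w - C * X.T @ (y / (1.0 + np.exp(margins)))
        w -= grad / lipschitz
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(60, 2))
# Noisy labels that depend on the first feature, so the classes overlap
y = np.where(X[:, 0] + 0.5 * rng.normal(size=60) > 0, 1.0, -1.0)

w_strong = fit_logistic_l2(X, y, C=0.01)   # small C: strong regularization
w_weak = fit_logistic_l2(X, y, C=10.0)     # large C: weak regularization

print(np.linalg.norm(w_strong), np.linalg.norm(w_weak))
```

With a small C the weights stay close to zero and the model underfits. As C grows the weights are free to fit the data, which is consistent with the accuracy curve rising and then flattening.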

2.6 Linear Discriminant Analysis

The iris data set is used here as well. The code is as follows:

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn import datasets, discriminant_analysis, model_selection

    def load_data():
        iris = datasets.load_iris()
        X_train = iris.data
        Y_train = iris.target
        return model_selection.train_test_split(
            X_train, Y_train, test_size=0.25, random_state=0, stratify=Y_train)

    def test_LinearDiscriminantAnalysis(*data):
        X_train, X_test, Y_train, Y_test = data
        lda = discriminant_analysis.LinearDiscriminantAnalysis()
        lda.fit(X_train, Y_train)
        print("Coefficients:%s, intercept %s" % (lda.coef_, lda.intercept_))
        print("Score:%.2f" % lda.score(X_test, Y_test))

    def plot_LDA(converted_X, Y):
        fig = plt.figure()
        ax = fig.add_subplot(projection="3d")  # 3-D axes attached to the figure
        colors = "rgb"
        markers = "o*s"
        for target, color, marker in zip([0, 1, 2], colors, markers):
            pos = (Y == target).ravel()
            X = converted_X[pos, :]
            ax.scatter(X[:, 0], X[:, 1], X[:, 2], color=color, marker=marker,
                       label="Label %d" % target)
        ax.legend(loc="best")
        fig.suptitle("Iris After LDA")
        plt.show()

    X_train, X_test, Y_train, Y_test = load_data()
    test_LinearDiscriminantAnalysis(X_train, X_test, Y_train, Y_test)
    X = np.vstack((X_train, X_test))
    Y = np.concatenate((Y_train, Y_test))  # 1-D labels, as fit() expects
    lda = discriminant_analysis.LinearDiscriminantAnalysis()
    lda.fit(X, Y)
    # One linear score per class, giving three coordinates per sample
    converted_X = np.dot(X, np.transpose(lda.coef_)) + lda.intercept_
    plot_LDA(converted_X, Y)

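The result of running the code is as follows:

After linear discriminant analysis, samples of different iris species lie far apart, while samples of the same species cluster together.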
