A Toutiao (今日頭條) crawler

阿新 • Published: 2017-09-18


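Toutiao (今日頭條) loads its content dynamically with JavaScript. I tried two ways of crawling it: extracting directly from the page, and extracting through the API: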

version1: extracting directly from the page

    # coding=utf-8
    # Toutiao: extract headlines straight from the page HTML (Python 3)
    from lxml import etree
    import requests


    def get_url():
        url = 'https://www.toutiao.com/ch/news_hot/'
        global count
        try:
            headers = {
                'Host': 'www.toutiao.com',
                'User-Agent': 'Mozilla/4.0 (compatible; MSIE 9.0; Windows NT 6.1; 125LA; .NET CLR 2.0.50727; .NET CLR 3.0.04506.648; .NET CLR 3.5.21022)',
                'Connection': 'Keep-Alive',
                'Content-Type': 'text/plain; Charset=UTF-8',
                'Accept': '*/*',
                'Accept-Language': 'zh-cn',
                'cookie': '__tasessionId=u690hhtp21501983729114;cp=59861769FA4FFE1'}
            response = requests.get(url, headers=headers)
            print(response.status_code)
            html = response.content
            # print(html)
            tree = etree.HTML(html)
            title = tree.xpath('//a[@class="link title"]/text()')
            source = tree.xpath('//a[@class="lbtn source"]/text()')
            comment = tree.xpath('//a[@class="lbtn comment"]/text()')
            stime = tree.xpath('//span[@class="lbtn"]/text()')
            print(len(title))   # 0
            print(type(title))  # <class 'list'>
            # text() already yields strings, so the items have no .text attribute
            for x, y, z, q in zip(title, source, comment, stime):
                count += 1
                data = {'title': x, 'source': y, 'comment': z, 'stime': q}
                print(count, '|', data)
        except requests.RequestException as e:
            # requests raises its own exception types, not urllib2.URLError
            print(e)


    if __name__ == '__main__':
        count = 0
        get_url()

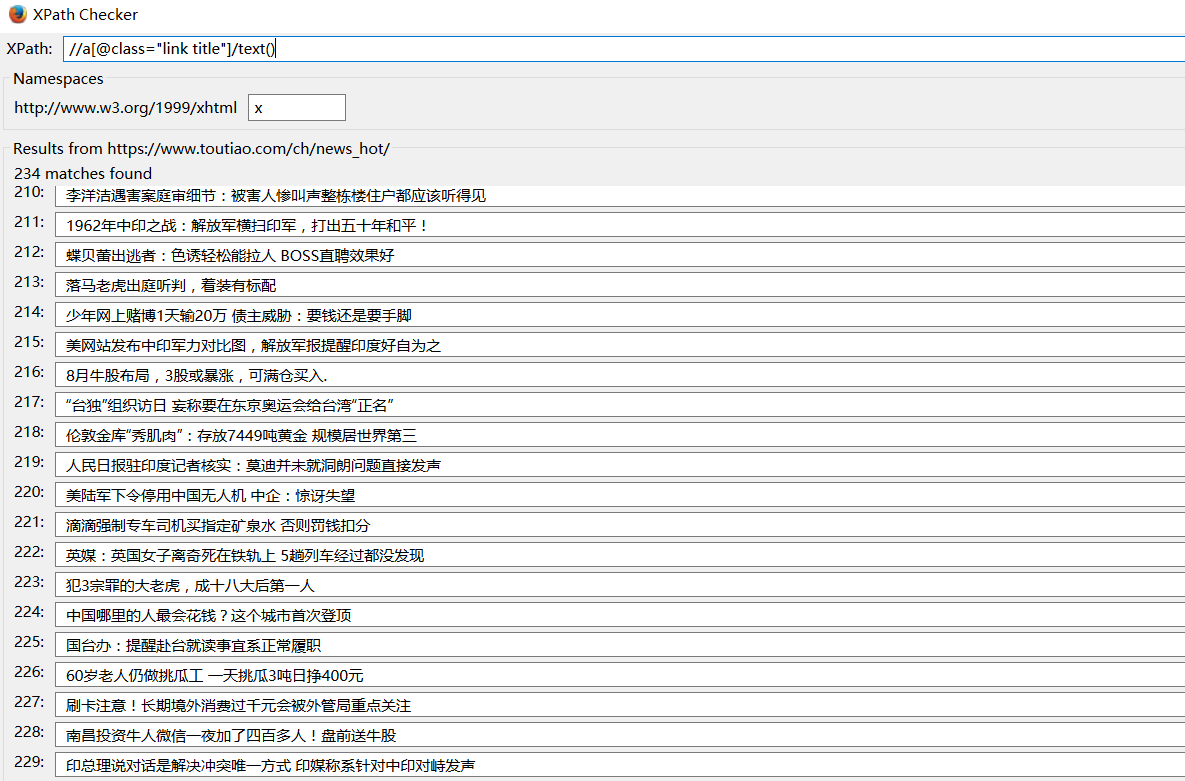

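Problem: title = tree.xpath('//a[@class="link title"]/text()') returns an empty list, although the same XPath matches in the XPath Checker browser plugin. The likely cause is that the plugin queries the DOM after the page's JavaScript has run, whereas requests only receives the initial HTML.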
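One way to check this is to count the matching elements in the raw HTML, without a browser. The sketch below uses only the standard library. The two HTML strings are hypothetical samples rather than real Toutiao responses, and `ClassCounter` and `count_in_raw_html` are names made up for illustration.

```python
from html.parser import HTMLParser


class ClassCounter(HTMLParser):
    """Count start tags of one kind whose class attribute matches exactly."""

    def __init__(self, tag, class_value):
        super().__init__()
        self.tag = tag
        self.class_value = class_value
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for this start tag
        if tag == self.tag and dict(attrs).get("class") == self.class_value:
            self.count += 1


def count_in_raw_html(html, tag, class_value):
    parser = ClassCounter(tag, class_value)
    parser.feed(html)
    return parser.count


# Hypothetical samples: a server-rendered page and an empty JavaScript shell.
rendered = '<div><a class="link title" href="/a1">Headline</a></div>'
shell = '<div id="app"></div><script src="/bundle.js"></script>'

print(count_in_raw_html(rendered, "a", "link title"))  # 1
print(count_in_raw_html(shell, "a", "link title"))     # 0
```

If the count is 0 for the text returned by requests while the browser shows the links, the elements are being created client-side and the XPath itself is not at fault.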

version2: extracting through the API

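1. Open F12 > Network and inspect the response of the request ?category=news_society&utm_source=toutiao&widen=1&max_behot_time=0&max_behot_time_tmp=0&tadrequire=true&as=A1B5093B4F12B65&cp=59BF52DB0655DE1

2. Relevant parameters:

&category=news_society: the feed category; required

&max_behot_time=0 &max_behot_time_tmp=0: the time the page was opened (in seconds, GMT) and a timestamp

&as=A1B5093B4F12B65&cp=59BF52DB0655DE1: as and cp are used to extract and verify how long the visitor has stayed on the page; cp obfuscates the browser's time, and as validates that time with an MD5 digest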
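The query string can be assembled from these parameters instead of being pasted as one literal. The following is a minimal sketch using the standard library; `build_feed_url` and `FEED_ENDPOINT` are illustrative names, and the defaults simply mirror the values seen in the captured request.

```python
from urllib.parse import urlencode

# Endpoint as it appears in the captured request.
FEED_ENDPOINT = "http://www.toutiao.com/api/pc/feed/"


def build_feed_url(category, as_value, cp_value, max_behot_time=0):
    """Assemble the feed URL in the same parameter order as the capture."""
    params = [
        ("category", category),
        ("utm_source", "toutiao"),
        ("widen", 1),
        ("max_behot_time", max_behot_time),
        ("max_behot_time_tmp", max_behot_time),
        ("tadrequire", "true"),
        ("as", as_value),
        ("cp", cp_value),
    ]
    return FEED_ENDPOINT + "?" + urlencode(params)


url = build_feed_url("news_society", "A1B5093B4F12B65", "59BF52DB0655DE1")
print(url)
```

A list of pairs is used rather than a dict so that the parameter order is explicit and matches the captured request.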

    import requests
    import json

    url = 'http://www.toutiao.com/api/pc/feed/?category=news_society&utm_source=toutiao&widen=1&max_behot_time=0&max_behot_time_tmp=0&tadrequire=true&as=A1B5093B4F12B65&cp=59BF52DB0655DE1'
    resp = requests.get(url)
    print(resp.status_code)
    Jdata = json.loads(resp.text)
    # print(Jdata)
    news = Jdata['data']
    for n in news:
        title = n['title']
        source = n['source']
        groupID = n['group_id']
        print(title, '|', source, '|', groupID)

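Note: this only fetched 7 items.

Someone more expert has worked out the as and cp parameters as follows:

1. Find the JS code by searching (Ctrl+F) for the keywords as and cp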

    function(t) {
        var e = {};
        e.getHoney = function() {
            var t = Math.floor((new Date).getTime() / 1e3),
                e = t.toString(16).toUpperCase(),
                i = md5(t).toString().toUpperCase();
            if (8 != e.length) return {
                as: "479BB4B7254C150",
                cp: "7E0AC8874BB0985"
            };
            for (var n = i.slice(0, 5), a = i.slice(-5), s = "", o = 0; 5 > o; o++) s += n[o] + e[o];
            for (var r = "", c = 0; 5 > c; c++) r += e[c + 3] + a[c];
            return {
                as: "A1" + s + e.slice(-3),
                cp: e.slice(0, 3) + r + "E1"
            }
        },

2. Reproduce the as and cp parameters:

    import time
    import hashlib


    def get_as_cp():
        now = int(time.time())  # current Unix time in whole seconds, like Math.floor in the JS
        print(now)
        e = hex(now).upper()[2:]  # hex() returns the hexadecimal string form of an integer
        print(e)
        # hashlib.md5(...).hexdigest() builds the hash and returns it as a hex string
        i = hashlib.md5(str(now).encode('ascii')).hexdigest().upper()
        if len(e) != 8:
            return {'as': "479BB4B7254C150", 'cp': "7E0AC8874BB0985"}
        n = i[:5]
        a = i[-5:]
        r = ""
        s = ""
        for k in range(5):
            s = s + n[k] + e[k]
        for j in range(5):
            r = r + e[j + 3] + a[j]
        zz = {'as': "A1" + s + e[-3:], 'cp': e[0:3] + r + "E1"}
        print(zz)
        return zz


    if __name__ == "__main__":
        get_as_cp()
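Because the hex timestamp is interleaved with the MD5 characters rather than hashed away, it can be read back out of either parameter. The sketch below takes the timestamp as an argument so the result is reproducible. `make_as_cp`, `timestamp_from_as` and `timestamp_from_cp` are names chosen here for illustration, and the code assumes the JS `md5(t)` hashes the decimal string of the timestamp.

```python
import hashlib


def make_as_cp(timestamp):
    """Port of getHoney for a given Unix timestamp in seconds."""
    t = int(timestamp)
    e = format(t, "X")  # uppercase hex, no "0x" prefix
    if len(e) != 8:
        return {"as": "479BB4B7254C150", "cp": "7E0AC8874BB0985"}
    digest = hashlib.md5(str(t).encode("ascii")).hexdigest().upper()
    head, tail = digest[:5], digest[-5:]
    s = "".join(head[k] + e[k] for k in range(5))      # md5 char, then hex char
    r = "".join(e[k + 3] + tail[k] for k in range(5))  # hex char, then md5 char
    return {"as": "A1" + s + e[-3:], "cp": e[:3] + r + "E1"}


def timestamp_from_as(as_value):
    """Read the hex timestamp back out of an `as` value."""
    interleaved = as_value[2:12]
    return int(interleaved[1::2] + as_value[12:], 16)


def timestamp_from_cp(cp_value):
    """Read the hex timestamp back out of a `cp` value."""
    interleaved = cp_value[3:13]
    return int(cp_value[:3] + interleaved[0::2], 16)


# The pair captured from the browser in this post decodes to the same second.
print(timestamp_from_as("A1B5093B4F12B65"))  # 1505700709
print(timestamp_from_cp("59BF52DB0655DE1"))  # 1505700709

pair = make_as_cp(1505700709)
print(timestamp_from_as(pair["as"]) == timestamp_from_cp(pair["cp"]))  # True
```

The decoded value 1505700709 falls on 2017-09-18 (UTC), the date this post was published, which is consistent with as and cp both carrying the time at which the request was generated.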
