TensorFlow: how tf.nn.l2_normalize and tf.nn.l2_loss are computed

1. tf.nn.l2_normalize

tf.nn.l2_normalize(x, dim, epsilon=1e-12, name=None)

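In the signature above:

x is the input tensor;

dim is the dimension along which the L2 normalization is applied; for the 2-D examples here it is 0 or 1 (later TensorFlow releases call this argument axis);

epsilon is a lower bound on the norm: if the norm is smaller than sqrt(epsilon), sqrt(epsilon) is used as the divisor instead.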
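To make the role of epsilon concrete, here is a minimal pure-Python sketch of the computation for a single vector. It assumes the formula x / sqrt(max(sum(x**2), epsilon)); the helper name l2_normalize_vector is hypothetical and is not part of TensorFlow.

```python
import math

def l2_normalize_vector(x, epsilon=1e-12):
    """Hypothetical helper: L2-normalize a 1-D list of floats.

    Assumes the formula x / sqrt(max(sum(x**2), epsilon)).
    """
    squared_sum = sum(v * v for v in x)
    # epsilon keeps the divisor away from zero for all-zero (or tiny) inputs.
    divisor = math.sqrt(max(squared_sum, epsilon))
    return [v / divisor for v in x]

unit = l2_normalize_vector([3.0, 4.0])   # the norm of [3, 4] is 5
zeros = l2_normalize_vector([0.0, 0.0])  # divisor falls back to sqrt(epsilon)
print(unit)
print(zeros)
```

For an all-zero input the divisor is sqrt(epsilon) rather than 0, so the result is a vector of zeros instead of a division-by-zero error.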

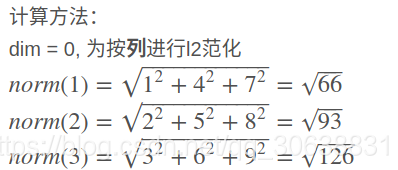

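Normalizing by column (dim = 0):

    import tensorflow as tf

    # TensorFlow 1.x graph-mode example (uses tf.Session).
    input_data = tf.constant([[1.0, 2, 3], [4.0, 5, 6], [7.0, 8, 9]])
    # dim=0: every column is scaled to unit L2 norm.
    output = tf.nn.l2_normalize(input_data, dim=0)

    with tf.Session() as sess:
        print(sess.run(input_data))
        print(sess.run(output))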

    [[1. 2. 3.]
     [4. 5. 6.]
     [7. 8. 9.]]
    [[0.12309149 0.20739034 0.26726127]
     [0.49236596 0.51847583 0.53452253]
     [0.86164045 0.82956135 0.80178374]]

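Here norm(j) denotes the L2 norm of column j, for example norm(1) = sqrt(1^2 + 4^2 + 7^2) = sqrt(66) ≈ 8.124. Each element is divided by the norm of its own column:

    [[1./norm(1), 2./norm(2), 3./norm(3)]
     [4./norm(1), 5./norm(2), 6./norm(3)]
     [7./norm(1), 8./norm(2), 9./norm(3)]]
    =
    [[0.12309149 0.20739034 0.26726127]
     [0.49236596 0.51847583 0.53452253]
     [0.86164045 0.82956135 0.80178374]]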

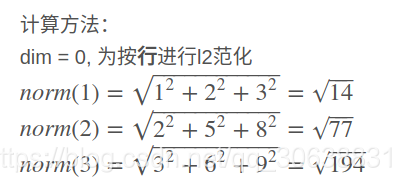

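Normalizing by row (dim = 1):

    import tensorflow as tf

    # TensorFlow 1.x graph-mode example (uses tf.Session).
    input_data = tf.constant([[1.0, 2, 3], [4.0, 5, 6], [7.0, 8, 9]])
    # dim=1: every row is scaled to unit L2 norm.
    output = tf.nn.l2_normalize(input_data, dim=1)

    with tf.Session() as sess:
        print(sess.run(input_data))
        print(sess.run(output))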

    [[1. 2. 3.]
     [4. 5. 6.]
     [7. 8. 9.]]
    [[0.26726124 0.5345225  0.8017837 ]
     [0.45584232 0.5698029  0.6837635 ]
     [0.5025707  0.5743665  0.64616233]]

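Here norm(i) denotes the L2 norm of row i, for example norm(1) = sqrt(1^2 + 2^2 + 3^2) = sqrt(14) ≈ 3.742. Each element is divided by the norm of its own row:

    [[1./norm(1), 2./norm(1), 3./norm(1)]
     [4./norm(2), 5./norm(2), 6./norm(2)]
     [7./norm(3), 8./norm(3), 9./norm(3)]]
    =
    [[0.26726124 0.5345225  0.8017837 ]
     [0.45584232 0.5698029  0.6837635 ]
     [0.5025707  0.5743665  0.64616233]]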
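Both worked examples can be cross-checked without TensorFlow. The following is a minimal pure-Python sketch, assuming the formula x / sqrt(max(sum(x**2), epsilon)) applied along the chosen dimension; the helper l2_normalize_2d is a hypothetical name introduced only for illustration.

```python
import math

def l2_normalize_2d(matrix, dim, epsilon=1e-12):
    """Hypothetical helper: L2-normalize a list-of-rows matrix along dim (0 or 1)."""
    rows, cols = len(matrix), len(matrix[0])
    if dim == 0:
        # One norm per column, shared by every row.
        norms = [math.sqrt(max(sum(matrix[i][j] ** 2 for i in range(rows)), epsilon))
                 for j in range(cols)]
        return [[matrix[i][j] / norms[j] for j in range(cols)] for i in range(rows)]
    if dim == 1:
        # One norm per row, shared by every element of that row.
        norms = [math.sqrt(max(sum(v ** 2 for v in row), epsilon)) for row in matrix]
        return [[v / norms[i] for v in row] for i, row in enumerate(matrix)]
    raise ValueError("dim must be 0 or 1 for a 2-D input")

data = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
by_column = l2_normalize_2d(data, dim=0)
by_row = l2_normalize_2d(data, dim=1)

for row in by_column:
    print([round(v, 6) for v in row])
for row in by_row:
    print([round(v, 6) for v in row])
```

Up to float32 rounding, by_column should agree with the dim = 0 output and by_row with the dim = 1 output printed by TensorFlow.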

2. tf.nn.l2_loss

tf.nn.l2_loss(t, name=None)

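Explanation: this function uses the L2 norm to measure the magnitude of a tensor, but without taking the square root and keeping only half of the value, i.e. half the sum of the squared elements: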

output = sum(t ** 2) / 2

    import tensorflow as tf

    a = tf.constant([1, 2, 3], dtype=tf.float32)
    b = tf.constant([[1, 1], [2, 2], [3, 3]], dtype=tf.float32)

    with tf.Session() as sess:
        print('a:')
        print(sess.run(tf.nn.l2_loss(a)))
        print('b:')
        print(sess.run(tf.nn.l2_loss(b)))
    # No explicit sess.close() is needed: the with block closes the session.

Output:

    a:
    7.0
    b:
    14.0
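The two results can be reproduced by hand from output = sum(t ** 2) / 2. Below is a small pure-Python sketch of that arithmetic for nested lists; the function l2_loss defined here is a hypothetical stand-in written for illustration, not the TensorFlow op itself.

```python
def l2_loss(t):
    """Hypothetical stand-in: sum(t ** 2) / 2 over an arbitrarily nested list."""
    if isinstance(t, (list, tuple)):
        # Recurse into each sub-list; the halving distributes over the sum.
        return sum(l2_loss(item) for item in t)
    return t * t / 2.0

a = [1.0, 2.0, 3.0]
b = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
loss_a = l2_loss(a)  # (1 + 4 + 9) / 2
loss_b = l2_loss(b)  # (1 + 1 + 4 + 4 + 9 + 9) / 2
print("a:", loss_a)
print("b:", loss_b)
```

The shape of the input does not matter: every element is squared and summed, so the result is always a single scalar.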

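Input arguments:

- t: A Tensor. Its data type must be one of the following: float32, float64, int64, int32, uint8, int16, int8, complex64, qint8, quint8, qint32. The data is typically two-dimensional, but any number of dimensions is allowed.

- name: A name for the operation.

Output:

A scalar Tensor with the same data type as t.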