Mask R-CNN Keras source code walkthrough (1): model construction

Prerequisites: familiarity with Faster R-CNN and Mask R-CNN.

Source code (git): https://github.com/matterport/Mask_RCNN/tree/v2.1

1. Model construction

Most of the model is assembled in the build method of the MaskRCNN class (model.py, line 1822).

1.1 Backbone: ResNet-101

# Build the shared convolutional layers.

# Bottom-up Layers

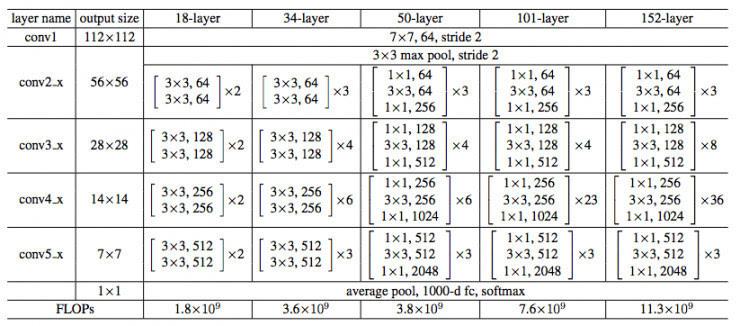

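# Returns a list of the last layers of each stage, 5 in total.

resnet_graph is a standard ResNet. As Figure 1 shows, the outputs C1, C2, and so on correspond to the layer names of each stage. C1 is not used here (the network is built through stage 5); C2, C3, C4, and C5 feed the FPN.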

Figure 1: ResNet

Background on ResNet: https://blog.csdn.net/jiangpeng59/article/details/79609392

1.2 Feature extraction: FPN

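FPN in brief: it fuses low-level (high-resolution) features with high-level (semantically strong) features, which helps detect fine detail. Roughly speaking, P5 is derived from C5, then P4 = upsampled P5 + C4, and so on down to P2 (each C map passes through a 1x1 convolution first), which finally yields rpn_feature_maps. Note the extra level P6, which is obtained purely by downsampling P5.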

More on FPN: https://blog.csdn.net/u014380165/article/details/72890275
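The top-down merge described above can be sketched in plain Python. This is only a toy illustration, not the repository's code: feature maps are single-channel 2-D lists, the 1x1 and 3x3 convolutions of a real FPN are treated as identity, and the helper names (upsample2x, add_maps, subsample2x, top_down) are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2-D list of numbers."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def add_maps(a, b):
    """Element-wise sum of two equally sized 2-D lists."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def subsample2x(fmap):
    """Keep every second row and column (stride-2 subsampling)."""
    return [row[::2] for row in fmap[::2]]


def top_down(c_maps):
    """Merge [C2, C3, C4, C5] (fine to coarse) into [P2, P3, P4, P5, P6].

    Assumption: the convolutions of a real FPN are omitted (identity).
    """
    p = [c_maps[-1]]                        # P5 starts from C5
    for c in reversed(c_maps[:-1]):         # C4, then C3, then C2
        p.insert(0, add_maps(upsample2x(p[0]), c))
    p.append(subsample2x(p[-1]))            # P6 comes from P5 only
    return p


# Toy maps: each level has twice the resolution of the next coarser one.
c2 = [[1000] * 16 for _ in range(16)]
c3 = [[100] * 8 for _ in range(8)]
c4 = [[10] * 4 for _ in range(4)]
c5 = [[1] * 2 for _ in range(2)]
p2, p3, p4, p5, p6 = top_down([c2, c3, c4, c5])
print([len(m) for m in (p2, p3, p4, p5, p6)])
```

Each finer level accumulates everything above it, while P6 carries no information beyond P5.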

# Top-down Layers

# TODO: add assert to

1.3 RPN network

The RPN does two things:

1> classifies each anchor box as foreground or background

2> regresses corrections to the box coordinates

# RPN Model

rpn = build_rpn_model(config.RPN_ANCHOR_STRIDE,

len(config.RPN_ANCHOR_RATIOS), 256)

Next, step into build_rpn_model, which wraps the RPN as a Keras model so that it can be reused with shared weights.

def build_rpn_model(anchor_stride, anchors_per_location, depth):

"""Builds a Keras model of the Region Proposal Network.

It wraps the RPN graph so it can be used multiple times with shared

weights.

anchors_per_location: number of anchors per pixel in the feature map

anchor_stride: Controls the density of anchors. Typically 1 (anchors for

every pixel in the feature map), or 2 (every other pixel).

depth: Depth of the backbone feature map.

Returns a Keras Model object. The model outputs, when called, are:

rpn_logits: [batch, H, W, 2] Anchor classifier logits (before softmax)

rpn_probs: [batch, H, W, 2] Anchor classifier probabilities.

rpn_bbox: [batch, H, W, (dy, dx, log(dh), log(dw))] Deltas to be

applied to anchors.

"""

input_feature_map = KL.Input(shape=[None, None, depth],

name="input_rpn_feature_map")

outputs = rpn_graph(input_feature_map, anchors_per_location, anchor_stride)

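return KM.Model([input_feature_map], outputs, name="rpn_model")

The core is rpn_graph. It first applies a 3*3 convolution to the feature map coming from the FPN (perhaps to improve robustness?), and the resulting shared feature map then feeds two branches: (1) foreground/background classification and (2) box coordinate regression.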

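Take the background/foreground classifier as an example. Every location of the shared feature map has anchors_per_location anchors, and each anchor has two values (BG/FG). After the reshape to [batch, anchors, 2], each row holds the BG/FG values of exactly one anchor.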
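To make the bookkeeping concrete, here is a small sketch of how many anchors this produces and how the reshape lines them up. The image size of 1024, the backbone strides (4, 8, 16, 32, 64) and the function names are assumptions chosen for illustration; they are not taken from the code shown here.

```python
import math


def anchors_per_level(image_size, backbone_strides, anchors_per_location,
                      anchor_stride=1):
    """Number of anchors produced on each pyramid level (square image)."""
    counts = []
    for stride in backbone_strides:
        side = image_size // stride                 # feature-map height == width
        steps = math.ceil(side / anchor_stride)     # 'same' conv with this stride
        counts.append(steps * steps * anchors_per_location)
    return counts


def reshape_to_anchor_rows(grid, values_per_anchor=2):
    """Flatten an [H][W][A * values] grid into [H * W * A] rows, row-major."""
    rows = []
    for row in grid:
        for cell in row:
            for k in range(0, len(cell), values_per_anchor):
                rows.append(cell[k:k + values_per_anchor])
    return rows


counts = anchors_per_level(1024, [4, 8, 16, 32, 64], anchors_per_location=3)
print(counts, sum(counts))

# One row, two locations, two anchors per location, (BG, FG) per anchor.
print(reshape_to_anchor_rows([[[1, 2, 3, 4], [5, 6, 7, 8]]]))
```

The reshape walks the grid location by location, so the anchors at one location stay adjacent in the flattened list.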

def rpn_graph(feature_map, anchors_per_location, anchor_stride):

"""Builds the computation graph of Region Proposal Network.

feature_map: backbone features [batch, height, width, depth]

anchors_per_location: number of anchors per pixel in the feature map

anchor_stride: Controls the density of anchors. Typically 1 (anchors for

every pixel in the feature map), or 2 (every other pixel).

Returns:

rpn_logits: [batch, H, W, 2] Anchor classifier logits (before softmax)

rpn_probs: [batch, H, W, 2] Anchor classifier probabilities.

rpn_bbox: [batch, H, W, (dy, dx, log(dh), log(dw))] Deltas to be

applied to anchors.

"""

# TODO: check if stride of 2 causes alignment issues if the featuremap

# is not even.

# Shared convolutional base of the RPN

shared = KL.Conv2D(512, (3, 3), padding='same', activation='relu',

strides=anchor_stride,

name='rpn_conv_shared')(feature_map)

# Anchor Score. [batch, height, width, anchors per location * 2].

x = KL.Conv2D(2 * anchors_per_location, (1, 1), padding='valid',

activation='linear', name='rpn_class_raw')(shared)

# Reshape to [batch, anchors, 2]

rpn_class_logits = KL.Lambda(

lambda t: tf.reshape(t, [tf.shape(t)[0], -1, 2]))(x)

# Softmax on last dimension of BG/FG.

rpn_probs = KL.Activation(

"softmax", name="rpn_class_xxx")(rpn_class_logits)

# Bounding box refinement. [batch, H, W, anchors per location, depth]

# where depth is [x, y, log(w), log(h)]

x = KL.Conv2D(anchors_per_location * 4, (1, 1), padding="valid",

activation='linear', name='rpn_bbox_pred')(shared)

# Reshape to [batch, anchors, 4]

rpn_bbox = KL.Lambda(lambda t: tf.reshape(t, [tf.shape(t)[0], -1, 4]))(x)

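return [rpn_class_logits, rpn_probs, rpn_bbox]

Next, each of the feature maps produced by the FPN, rpn_feature_maps = [P2, P3, P4, P5, P6], is passed through the RPN in turn, giving the outputs ["rpn_class_logits", "rpn_class", "rpn_bbox"] per level, and the per-level results are then concatenated in order.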
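The regrouping relies on the zip(*layer_outputs) idiom. A minimal stand-alone sketch, with strings standing in for tensors and hypothetical helper names, looks like this:

```python
def regroup(layer_outputs):
    """[[a1, b1, c1], [a2, b2, c2]] -> [[a1, a2], [b1, b2], [c1, c2]]"""
    return [list(group) for group in zip(*layer_outputs)]


def concat_along_anchors(per_level):
    """Join the per-level anchor lists (axis=1 in the batched tensors)."""
    merged = []
    for level in per_level:
        merged.extend(level)
    return merged


# Two pyramid levels; each returns [logits, probs, bbox] for its own anchors.
layer_outputs = [
    [["logit_p2_a", "logit_p2_b"], ["prob_p2_a", "prob_p2_b"], ["bbox_p2_a", "bbox_p2_b"]],
    [["logit_p3_a"], ["prob_p3_a"], ["bbox_p3_a"]],
]
logits, probs, bbox = [concat_along_anchors(g) for g in regroup(layer_outputs)]
print(logits)
```

Because the levels are concatenated in the same order for all three outputs, index i refers to the same anchor in logits, probs, and bbox.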

# Loop through pyramid layers

layer_outputs = [] # list of lists

for p in rpn_feature_maps:

layer_outputs.append(rpn([p]))

# Concatenate layer outputs

# Convert from list of lists of level outputs to list of lists

# of outputs across levels.

# e.g. [[a1, b1, c1], [a2, b2, c2]] => [[a1, a2], [b1, b2], [c1, c2]]

output_names = ["rpn_class_logits", "rpn_class", "rpn_bbox"]

outputs = list(zip(*layer_outputs))

outputs = [KL.Concatenate(axis=1, name=n)(list(o))

for o, n in zip(outputs, output_names)]

rpn_class_logits, rpn_class, rpn_bbox = outputs

1.4 ProposalLayer

Proposals in brief: the anchors that survive filtering (they have higher foreground scores and, after refinement, lie closer to the target boxes).

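More proposals are not necessarily better, so their number is capped at proposal_count. For background on non-maximum suppression (NMS), see the article "Several implementations of non-maximum suppression".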
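For readers who have not met NMS before, the following is a minimal greedy sketch in plain Python, including the zero padding up to proposal_count. It is an illustration under simple assumptions (boxes as (y1, x1, y2, x2) tuples, a box is dropped when its IoU with an already kept box exceeds the threshold); the function names are hypothetical and it is not the TensorFlow implementation the layer calls.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (y1, x1, y2, x2)."""
    ih = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iw = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ih * iw
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms_and_pad(boxes, scores, proposal_count, nms_threshold):
    """Greedy NMS, then zero-pad the result to exactly proposal_count boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if len(keep) == proposal_count:
            break
        # Keep the box only if it does not overlap a kept box too much.
        if all(iou(boxes[i], boxes[j]) <= nms_threshold for j in keep):
            keep.append(i)
    proposals = [tuple(boxes[i]) for i in keep]
    proposals += [(0.0, 0.0, 0.0, 0.0)] * (proposal_count - len(proposals))
    return proposals


boxes = [(0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 0.9, 1.0), (0.5, 0.5, 1.0, 1.0)]
scores = [0.9, 0.8, 0.7]
print(nms_and_pad(boxes, scores, proposal_count=3, nms_threshold=0.7))
```

The second box overlaps the first almost completely and is suppressed; the missing slot is filled with a zero box so the output always has a fixed length.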

# Generate proposals

# Proposals are [batch, N, (y1, x1, y2, x2)] in normalized coordinates

# and zero padded.

proposal_count = config.POST_NMS_ROIS_TRAINING if mode == "training"\

else config.POST_NMS_ROIS_INFERENCE

rpn_rois = ProposalLayer(

proposal_count=proposal_count,

nms_threshold=config.RPN_NMS_THRESHOLD,

name="ROI",

config=config)([rpn_class, rpn_bbox, anchors])

Next, step into the custom layer ProposalLayer. It takes three inputs:

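rpn_class: the BG/FG probabilities of every anchor.

rpn_bbox: the four offsets [dy, dx, log(dh), log(dw)] of every anchor.

anchors: the pre-generated, ordered list of anchors. "Ordered" here means that the anchors generated for a feature-map location line up, index by index, with the rpn_class and rpn_bbox values produced for that same location (if I understand this correctly).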

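ProposalLayer finally returns the set of anchors after box refinement and NMS filtering (called ROIs or proposals). At this point a first, class-agnostic round of object localization is complete.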
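The three steps before NMS (keep the top-scoring anchors, apply the deltas, clip to the image) can be sketched as follows. This assumes the usual Faster R-CNN parameterization in which (dy, dx) shift the box centre in units of the box size and (log(dh), log(dw)) scale the height and width; the helper names are hypothetical and the code works on single boxes rather than batched tensors.

```python
import math


def top_k_indices(scores, k):
    """Indices of the k highest scores, best first."""
    k = min(k, len(scores))
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def apply_box_deltas(box, delta):
    """Refine one (y1, x1, y2, x2) box with (dy, dx, log(dh), log(dw))."""
    y1, x1, y2, x2 = box
    dy, dx, dh, dw = delta
    h, w = y2 - y1, x2 - x1
    cy = y1 + 0.5 * h + dy * h          # shift centre by a fraction of the size
    cx = x1 + 0.5 * w + dx * w
    h, w = h * math.exp(dh), w * math.exp(dw)
    return (cy - 0.5 * h, cx - 0.5 * w, cy + 0.5 * h, cx + 0.5 * w)


def clip_box(box, window=(0.0, 0.0, 1.0, 1.0)):
    """Clip a box to the window; (0, 0, 1, 1) is the whole normalized image."""
    wy1, wx1, wy2, wx2 = window
    y1, x1, y2, x2 = box
    return (min(max(y1, wy1), wy2), min(max(x1, wx1), wx2),
            min(max(y2, wy1), wy2), min(max(x2, wx1), wx2))


anchor = (0.25, 0.25, 0.75, 0.75)
shifted = apply_box_deltas(anchor, (1.0, 0.0, 0.0, 0.0))   # move down by one height
print(shifted, clip_box(shifted))
```

With all-zero deltas the anchor is returned unchanged, which is a quick sanity check for any implementation of this step.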

class ProposalLayer(KE.Layer):

"""Receives anchor scores and selects a subset to pass as proposals

to the second stage. Filtering is done based on anchor scores and

non-max suppression to remove overlaps. It also applies bounding

box refinement deltas to anchors.

Inputs:

rpn_probs: [batch, anchors, (bg prob, fg prob)]

rpn_bbox: [batch, anchors, (dy, dx, log(dh), log(dw))]

anchors: [batch, (y1, x1, y2, x2)] anchors in normalized coordinates

Returns:

Proposals in normalized coordinates [batch, rois, (y1, x1, y2, x2)]

"""

def __init__(self, proposal_count, nms_threshold, config=None, **kwargs):

super(ProposalLayer, self).__init__(**kwargs)

self.config = config

self.proposal_count = proposal_count

self.nms_threshold = nms_threshold

def call(self, inputs):

# Box Scores. Use the foreground class confidence. [Batch, num_rois, 1]

scores = inputs[0][:, :, 1]

# Box deltas [batch, num_rois, 4]

deltas = inputs[1]

deltas = deltas * np.reshape(self.config.RPN_BBOX_STD_DEV, [1, 1, 4])

# Anchors

anchors = inputs[2]

# Improve performance by trimming to top anchors by score

# and doing the rest on the smaller subset.

pre_nms_limit = tf.minimum(6000, tf.shape(anchors)[1])

ix = tf.nn.top_k(scores, pre_nms_limit, sorted=True,

name="top_anchors").indices

scores = utils.batch_slice([scores, ix], lambda x, y: tf.gather(x, y),

self.config.IMAGES_PER_GPU)

deltas = utils.batch_slice([deltas, ix], lambda x, y: tf.gather(x, y),

self.config.IMAGES_PER_GPU)

pre_nms_anchors = utils.batch_slice([anchors, ix], lambda a, x: tf.gather(a, x),

self.config.IMAGES_PER_GPU,

names=["pre_nms_anchors"])

# Apply deltas to anchors to get refined anchors.

# [batch, N, (y1, x1, y2, x2)]

boxes = utils.batch_slice([pre_nms_anchors, deltas],

lambda x, y: apply_box_deltas_graph(x, y),

self.config.IMAGES_PER_GPU,

names=["refined_anchors"])

# Normalized coordinates are fractions of the original image size (0..1).

# The clipping below keeps the refined anchor coordinates inside the image, i.e. 0 <= x, y <= 1.

# Clip to image boundaries. Since we're in normalized coordinates,

# clip to 0..1 range. [batch, N, (y1, x1, y2, x2)]

window = np.array([0, 0, 1, 1], dtype=np.float32)

boxes = utils.batch_slice(boxes,

lambda x: clip_boxes_graph(x, window),

self.config.IMAGES_PER_GPU,

names=["refined_anchors_clipped"])

# Filter out small boxes

# According to Xinlei Chen's paper, this reduces detection accuracy

# for small objects, so we're skipping it.

# Non-max suppression

def nms(boxes, scores):

indices = tf.image.non_max_suppression(

boxes, scores, self.proposal_count,

self.nms_threshold, name="rpn_non_max_suppression")

proposals = tf.gather(boxes, indices)

# Pad if needed

padding = tf.maximum(self.proposal_count - tf.shape(proposals)[0], 0)

# If fewer than proposal_count proposals remain, pad the bottom with zeros.

proposals = tf.pad(proposals, [(0, padding), (0, 0)])

return proposals

proposals = utils.batch_slice([boxes, scores], nms,

self.config.IMAGES_PER_GPU)

return proposals

def compute_output_shape(self, input_shape):

return (None, self.proposal_count, 4)

1.5 DetectionTargetLayer

# Generate detection targets

# Subsamples proposals and generates target outputs for training

# Note that proposal class IDs, gt_boxes, and gt_masks are zero

# padded. Equally, returned rois and targets are zero padded.

# target_rois is the rpn_rois output of the ProposalLayer above (the ROIs from the RPN are normally used).

rois, target_class_ids, target_bbox, target_mask =\

DetectionTargetLayer(config, name="proposal_targets")([

target_rois, input_gt_class_ids, gt_boxes, input_gt_masks])

Within this class, the call method is the part to focus on.

class DetectionTargetLayer(KE.Layer):

"""Subsamples proposals and generates target box refinement, class_ids,

and masks for each.

Inputs:

proposals: [batch, N, (y1, x1, y2, x2)] in normalized coordinates. Might

be zero padded if there are not enough proposals.

gt_class_ids: [batch, MAX_GT_INSTANCES] Integer class IDs.

gt_boxes: [batch, MAX_GT_INSTANCES, (y1, x1, y2, x2)] in normalized

coordinates.

gt_masks: [batch, height, width, MAX_GT_INSTANCES] of boolean type

Returns: Target ROIs and corresponding class IDs, bounding box shifts,

and masks.

rois: [batch, TRAIN_ROIS_PER_IMAGE, (y1, x1, y2, x2)] in normalized

coordinates

target_class_ids: [batch, TRAIN_ROIS_PER_IMAGE]. Integer class IDs.

target_deltas: [batch, TRAIN_ROIS_PER_IMAGE, NUM_CLASSES,

(dy, dx, log(dh), log(dw), class_id)]

Class-specific bbox refinements.

target_mask: [batch, TRAIN_ROIS_PER_IMAGE, height, width]

Masks cropped to bbox boundaries and resized to neural

network output size.

Note: Returned arrays might be zero padded if not enough target ROIs."""

def call(self, inputs):

proposals = inputs[0]

gt_class_ids = inputs[1]

gt_boxes = inputs[2]

gt_masks = inputs[3]

# Slice the batch and run a graph for each slice

# TODO: Rename target_bbox to target_deltas for clarity

names = ["rois", "target_class_ids", "target_bbox", "target_mask"]

outputs = utils.batch_slice(

[proposals, gt_class_ids, gt_boxes, gt_masks],

lambda w, x, y, z: detection_targets_graph(

w, x, y, z, self.config),

self.config.IMAGES_PER_GPU, names=names)

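return outputs

detection_targets_graph processes the inputs [proposals, gt_class_ids, gt_boxes, gt_masks].

The code is fairly long. It first computes the overlap (IoU) matrix between proposals and gt_boxes, of shape [proposals, gt_boxes], and then takes each proposal's maximum IoU over all GT boxes, roi_iou_max. A proposal with roi_iou_max >= 0.5 is treated as a positive ROI. Each positive ROI is then assigned its best-matching GT box, and the box refinement deltas toward that box are computed. The rois that are finally returned contain both positive and negative ROIs.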
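The matching and subsampling logic can be sketched in plain Python as below. It is a simplified illustration: crowd boxes and the random shuffle are left out, at least one GT box is assumed, and the names split_rois and sample_counts are hypothetical. The count formula mirrors the one in the code that follows (negatives are added to keep the configured positive ratio).

```python
def iou(a, b):
    """Intersection over union of two boxes given as (y1, x1, y2, x2)."""
    ih = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iw = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ih * iw
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def split_rois(proposals, gt_boxes, threshold=0.5):
    """Return (positive indices, negative indices, {roi index: gt index})."""
    overlaps = [[iou(p, g) for g in gt_boxes] for p in proposals]
    positives, negatives, assignment = [], [], {}
    for i, row in enumerate(overlaps):
        best = max(range(len(row)), key=lambda j: row[j])   # argmax over GT boxes
        if row[best] >= threshold:
            positives.append(i)
            assignment[i] = best
        else:
            negatives.append(i)
    return positives, negatives, assignment


def sample_counts(n_pos, n_neg, rois_per_image, positive_ratio):
    """How many positives and negatives are kept after subsampling."""
    pos = min(n_pos, int(rois_per_image * positive_ratio))
    neg = min(n_neg, int((1.0 / positive_ratio) * pos) - pos)
    return pos, neg


proposals = [(0.0, 0.0, 0.5, 0.5), (0.0, 0.0, 0.4, 0.5), (0.8, 0.8, 1.0, 1.0)]
gt_boxes = [(0.0, 0.0, 0.5, 0.5), (0.5, 0.5, 1.0, 1.0)]
print(split_rois(proposals, gt_boxes))
print(sample_counts(n_pos=10, n_neg=1000, rois_per_image=200, positive_ratio=0.25))
```

Note that when few positives are available, the number of negatives shrinks with them, so the batch is padded with zeros rather than filled with extra negatives.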

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

"""Generates detection targets for one image. Subsamples proposals and

generates target class IDs, bounding box deltas, and masks for each.

Inputs:

proposals: [N, (y1, x1, y2, x2)] in normalized coordinates. Might

be zero padded if there are not enough proposals.

gt_class_ids: [MAX_GT_INSTANCES] int class IDs

gt_boxes: [MAX_GT_INSTANCES, (y1, x1, y2, x2)] in normalized coordinates.

gt_masks: [height, width, MAX_GT_INSTANCES] of boolean type.

Returns: Target ROIs and corresponding class IDs, bounding box shifts,

and masks.

rois: [TRAIN_ROIS_PER_IMAGE, (y1, x1, y2, x2)] in normalized coordinates

class_ids: [TRAIN_ROIS_PER_IMAGE]. Integer class IDs. Zero padded.

deltas: [TRAIN_ROIS_PER_IMAGE, NUM_CLASSES, (dy, dx, log(dh), log(dw))]

Class-specific bbox refinements.

masks: [TRAIN_ROIS_PER_IMAGE, height, width]. Masks cropped to bbox

boundaries and resized to neural network output size.

Note: Returned arrays might be zero padded if not enough target ROIs.

"""

# Assertions

asserts = [

tf.Assert(tf.greater(tf.shape(proposals)[0], 0), [proposals],

name="roi_assertion"),

]

with tf.control_dependencies(asserts):

proposals = tf.identity(proposals)

# Remove zero padding

proposals, _ = trim_zeros_graph(proposals, name="trim_proposals")

gt_boxes, non_zeros = trim_zeros_graph(gt_boxes, name="trim_gt_boxes")

gt_class_ids = tf.boolean_mask(gt_class_ids, non_zeros,

name="trim_gt_class_ids")

gt_masks = tf.gather(gt_masks, tf.where(non_zeros)[:, 0], axis=2,

name="trim_gt_masks")

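# I did not fully understand the crowd handling here (gt_class_ids < 0?); per the comment below, crowd boxes carry a negative class ID.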

# Handle COCO crowds

# A crowd box in COCO is a bounding box around several instances. Exclude

# them from training. A crowd box is given a negative class ID.

crowd_ix = tf.where(gt_class_ids < 0)[:, 0]

non_crowd_ix = tf.where(gt_class_ids > 0)[:, 0]

crowd_boxes = tf.gather(gt_boxes, crowd_ix)

crowd_masks = tf.gather(gt_masks, crowd_ix, axis=2)

gt_class_ids = tf.gather(gt_class_ids, non_crowd_ix)

gt_boxes = tf.gather(gt_boxes, non_crowd_ix)

gt_masks = tf.gather(gt_masks, non_crowd_ix, axis=2)

# Compute the IoU matrix between proposals and gt_boxes, shape [proposals, gt_boxes].

# Compute overlaps matrix [proposals, gt_boxes]

overlaps = overlaps_graph(proposals, gt_boxes)

# Compute overlaps with crowd boxes [anchors, crowds]

crowd_overlaps = overlaps_graph(proposals, crowd_boxes)

crowd_iou_max = tf.reduce_max(crowd_overlaps, axis=1)

no_crowd_bool = (crowd_iou_max < 0.001)

# Determine positive and negative ROIs

# Maximum IoU of each proposal over all GT boxes, shape [n_proposals]

roi_iou_max = tf.reduce_max(overlaps, axis=1)

# 1. Positive ROIs are those with >= 0.5 IoU with a GT box

# An ROI with IoU >= 0.5 is a positive ROI; boolean mask of shape [n_proposals]

positive_roi_bool = (roi_iou_max >= 0.5)

# Indices of the positive ROIs, shape [n_positive]

positive_indices = tf.where(positive_roi_bool)[:, 0]

# 2. Negative ROIs are those with < 0.5 with every GT box. Skip crowds.

negative_indices = tf.where(tf.logical_and(roi_iou_max < 0.5, no_crowd_bool))[:, 0]

# Subsample the positive and negative ROIs.

# Subsample ROIs. Aim for 33% positive

# Positive ROIs

positive_count = int(config.TRAIN_ROIS_PER_IMAGE *

config.ROI_POSITIVE_RATIO)

positive_indices = tf.random_shuffle(positive_indices)[:positive_count]

positive_count = tf.shape(positive_indices)[0]

# Negative ROIs. Add enough to maintain positive:negative ratio.

r = 1.0 / config.ROI_POSITIVE_RATIO

negative_count = tf.cast(r * tf.cast(positive_count, tf.float32), tf.int32) - positive_count

negative_indices = tf.random_shuffle(negative_indices)[:negative_count]

# Gather selected ROIs

positive_rois = tf.gather(proposals, positive_indices)

negative_rois = tf.gather(proposals, negative_indices)

# Assign each sampled positive ROI to a GT box.

# Assign positive ROIs to GT boxes.

positive_overlaps = tf.gather(overlaps, positive_indices)

# For each positive ROI, the index (column index) of the GT box with the largest IoU, shape [n_positive]

roi_gt_box_assignment = tf.argmax(positive_overlaps, axis=1)

roi_gt_boxes = tf.gather(gt_boxes, roi_gt_box_assignment)

roi_gt_class_ids = tf.gather(gt_class_ids, roi_gt_box_assignment)

# Compute bbox refinement for positive ROIs

deltas = utils.box_refinement_graph(positive_rois, roi_gt_boxes)

deltas /= config.BBOX_STD_DEV

# Assign positive ROIs to GT masks

# Permute masks to [N, height, width, 1]

transposed_masks = tf.expand_dims(tf.transpose(gt_masks, [2, 0, 1]), -1)

# Pick the right mask for each ROI

roi_masks = tf.gather(transposed_masks, roi_gt_box_assignment)

# Compute mask targets....

# Append negative ROIs and pad bbox deltas and masks that

# are not used for negative ROIs with zeros.

rois = tf.concat([positive_rois, negative_rois], axis=0)

N = tf.shape(negative_rois)[0]

P = tf.maximum(config.TRAIN_ROIS_PER_IMAGE - tf.shape(rois)[0], 0)

rois = tf.pad(rois, [(0, P), (0, 0)])

roi_gt_boxes = tf.pad(roi_gt_boxes, [(0, N + P), (0, 0)])

roi_gt_class_ids = tf.pad(roi_gt_class_ids, [(0, N + P)])

deltas = tf.pad(deltas, [(0, N + P), (0, 0)])

masks = tf.pad(masks, [[0, N + P], (0, 0), (0, 0)])

#....

return rois, roi_gt_class_ids, deltas, masks

1.6 Network Heads

DetectionTargetLayer produces many ROIs, but they differ in size. They have to be pooled to a common fixed size and tied back to the feature maps produced earlier by the FPN.

# Network Heads

# TODO: verify that this handles zero padded ROIs

mrcnn_class_logits, mrcnn_class, mrcnn_bbox =\

fpn_classifier_graph(rois, mrcnn_feature_maps, input_image_meta,

config.POOL_SIZE, config.NUM_CLASSES,

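train_bn=config.TRAIN_BN)

Next, step into fpn_classifier_graph. It first uses the PyramidROIAlign layer to pool every ROI to a fixed size. Two fully connected layers (implemented as convolutions) then turn the pooled feature map into a feature vector and, much like the RPN above, the head classifies each ROI and regresses its four box coordinates.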
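Why a convolution can stand in for a fully connected layer: a "valid" convolution whose kernel is as large as its input produces a single output value, which is the same dot product a dense layer computes on the flattened input. The single-channel sketch below, with hypothetical helper names, checks this for a 7*7 input.

```python
def conv2d_valid(fmap, kernel):
    """Single-channel 'valid' cross-correlation of two 2-D lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(fmap) - kh + 1
    out_w = len(fmap[0]) - kw + 1
    return [[sum(fmap[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def dense(flat_input, flat_weights):
    """One output unit of a fully connected layer (no bias)."""
    return sum(x * w for x, w in zip(flat_input, flat_weights))


pool_size = 7
fmap = [[i * pool_size + j for j in range(pool_size)] for i in range(pool_size)]
kernel = [[1 if i == j else 0 for j in range(pool_size)] for i in range(pool_size)]

conv_out = conv2d_valid(fmap, kernel)                 # shape 1 x 1
flat_x = [v for row in fmap for v in row]
flat_w = [v for row in kernel for v in row]
print(conv_out, dense(flat_x, flat_w))
```

With 1024 such kernels per ROI, the first Conv2D in the head behaves like a 1024-unit dense layer applied to each pooled ROI.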

def fpn_classifier_graph(rois, feature_maps, image_meta,

pool_size, num_classes, train_bn=True):

"""Builds the computation graph of the feature pyramid network classifier

and regressor heads.

rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized

coordinates.

feature_maps: List of feature maps from different layers of the pyramid,

[P2, P3, P4, P5]. Each has a different resolution.

- image_meta: [batch, (meta data)] Image details. See compose_image_meta()

pool_size: The width of the square feature map generated from ROI Pooling.

num_classes: number of classes, which determines the depth of the results

train_bn: Boolean. Train or freeze Batch Norm layers

Returns:

logits: [N, NUM_CLASSES] classifier logits (before softmax)

probs: [N, NUM_CLASSES] classifier probabilities

bbox_deltas: [N, (dy, dx, log(dh), log(dw))] Deltas to apply to

proposal boxes

"""

# ROI Pooling

# Shape: [batch, num_boxes, pool_height, pool_width, channels]

x = PyramidROIAlign([pool_size, pool_size],

name="roi_align_classifier")([rois, image_meta] + feature_maps)

# Two 1024 FC layers (implemented with Conv2D for consistency)

x = KL.TimeDistributed(KL.Conv2D(1024, (pool_size, pool_size), padding="valid"),

name="mrcnn_class_conv1")(x)

x = KL.TimeDistributed(BatchNorm(), name='mrcnn_class_bn1')(x, training=train_bn)

x = KL.Activation('relu')(x)

x = KL.TimeDistributed(KL.Conv2D(1024, (1, 1)),

name="mrcnn_class_conv2")(x)

x = KL.TimeDistributed(BatchNorm(), name='mrcnn_class_bn2')(x, training=train_bn)

x = KL.Activation('relu')(x)

shared = KL.Lambda(lambda x: K.squeeze(K.squeeze(x, 3), 2),

name="pool_squeeze")(x)

# Classifier head

mrcnn_class_logits = KL.TimeDistributed(KL.Dense(num_classes),

name='mrcnn_class_logits')(shared)

mrcnn_probs = KL.TimeDistributed(KL.Activation("softmax"),

name="mrcnn_class")(mrcnn_class_logits)

# BBox head

# [batch, boxes, num_classes * (dy, dx, log(dh), log(dw))]

x = KL.TimeDistributed(KL.Dense(num_classes * 4, activation='linear'),

name='mrcnn_bbox_fc')(shared)

# Reshape to [batch, boxes, num_classes, (dy, dx, log(dh), log(dw))]

s = K.int_shape(x)

mrcnn_bbox = KL.Reshape((s[1], num_classes, 4), name="mrcnn_bbox")(x)

return mrcnn_class_logits, mrcnn_probs, mrcnn_bbox

Now a closer look at PyramidROIAlign.

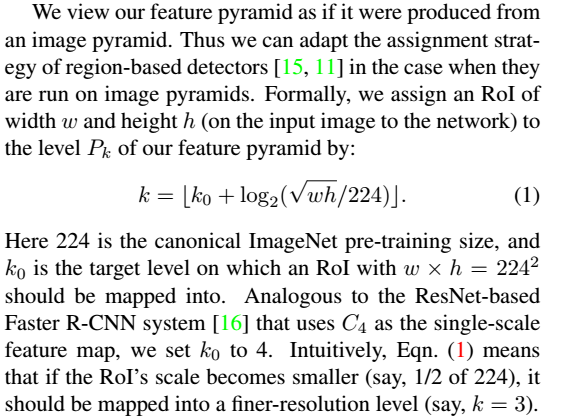

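First, a question. The ROIs we now have (whose widths and heights mostly differ after passing through the RPN) were generated from the FPN levels, and each level uses a different default anchor size, for example (16, 32, 64, 128, 256). So which feature map did a given ROI come from? In other words, for a given ROI, on which feature map (P2 to P5) should ROIAlign be performed? Because so many anchors were generated, the level each one came from was not recorded, so the paper proposes an approximate formula (the screenshot is from the original FPN paper):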

Figure 2

With that in mind, the code is much easier to follow. As for ROIAlign itself, the code simply calls tf.image.crop_and_resize. A good blog post on ROI pooling: https://blog.deepsense.ai/region-of-interest-pooling-explained/
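The level assignment follows Equation 1 of the FPN paper, k = floor(k0 + log2(sqrt(w*h) / 224)) with k0 = 4, adapted to normalized coordinates. The sketch below mirrors the arithmetic of the layer's call method for a single box (rounding instead of flooring, clamped to levels 2 to 5). The function name roi_level and the guard for zero-area boxes are assumptions added for illustration.

```python
import math


def roi_level(box, image_height, image_width):
    """Pyramid level (2..5) for one ROI given in normalized (y1, x1, y2, x2)."""
    y1, x1, y2, x2 = box
    h, w = y2 - y1, x2 - x1
    if h * w <= 0:
        return 2  # assumption: degenerate (zero-padded) boxes go to the lowest level
    image_area = float(image_height * image_width)
    # 224 px is expressed in normalized units by dividing by sqrt(image_area).
    level = math.log2(math.sqrt(h * w) / (224.0 / math.sqrt(image_area)))
    return min(5, max(2, 4 + int(round(level))))


size = 1024
for pixels in (32, 112, 224, 448, 1024):
    side = pixels / size
    print(pixels, roi_level((0.0, 0.0, side, side), size, size))
```

A 224x224 pixel ROI lands on P4, each halving of the side length moves one level down, and very small or very large ROIs are clamped to P2 and P5.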

class PyramidROIAlign(KE.Layer):

"""Implements ROI Pooling on multiple levels of the feature pyramid.

Params:

- pool_shape: [height, width] of the output pooled regions. Usually [7, 7]

Inputs:

- boxes: [batch, num_boxes, (y1, x1, y2, x2)] in normalized

coordinates. Possibly padded with zeros if not enough

boxes to fill the array.

- image_meta: [batch, (meta data)] Image details. See compose_image_meta()

- Feature maps: List of feature maps from different levels of the pyramid.

Each is [batch, height, width, channels]

Output:

Pooled regions in the shape: [batch, num_boxes, height, width, channels].

The width and height are those specific in the pool_shape in the layer

constructor.

"""

def __init__(self, pool_shape, **kwargs):

super(PyramidROIAlign, self).__init__(**kwargs)

self.pool_shape = tuple(pool_shape)

def call(self, inputs):

# Crop boxes [batch, num_boxes, (y1, x1, y2, x2)] in normalized coords

boxes = inputs[0]

# Image meta

# Holds details about the image. See compose_image_meta()

image_meta = inputs[1]

# Feature Maps. List of feature maps from different level of the

# feature pyramid. Each is [batch, height, width, channels]

feature_maps = inputs[2:]

# Assign each ROI to a level in the pyramid based on the ROI area.

y1, x1, y2, x2 = tf.split(boxes, 4, axis=2)

h = y2 - y1

w = x2 - x1

# Use shape of first image. Images in a batch must have the same size.

image_shape = parse_image_meta_graph(image_meta)['image_shape'][0]

# Equation 1 in the Feature Pyramid Networks paper. Account for

# the fact that our coordinates are normalized here.

# e.g. a 224x224 ROI (in pixels) maps to P4

image_area = tf.cast(image_shape[0] * image_shape[1], tf.float32)

roi_level = log2_graph(tf.sqrt(h * w) / (224.0 / tf.sqrt(image_area)))

roi_level = tf.minimum(5, tf.maximum(

2, 4 + tf.cast(tf.round(roi_level), tf.int32)))

roi_level = tf.squeeze(roi_level, 2)

# Loop through levels and apply ROI pooling to each. P2 to P5.

pooled = []

box_to_level = []

for i, level in enumerate(range(2, 6)):

ix = tf.where(tf.equal(roi_level, level))

level_boxes = tf.gather_nd(boxes, ix)

# Box indices for crop_and_resize.

box_indices = tf.cast(ix[:, 0], tf.int32)

# Keep track of which box is mapped to which level

box_to_level.append(ix)

# Stop gradient propagation to ROI proposals

level_boxes = tf.stop_gradient(level_boxes)

box_indices = tf.stop_gradient(box_indices)

# Crop and Resize

# From Mask R-CNN paper: "We sample four regular locations, so

# that we can evaluate either max or average pooling. In fact,

# interpolating only a single value at each bin center (without

# pooling) is nearly as effective."

#

# Here we use the simplified approach of a single value per bin,

# which is how it's done in tf.crop_and_resize()

# Result: [batch * num_boxes, pool_height, pool_width, channels]

pooled.append(tf.image.crop_and_resize(

feature_maps[i], level_boxes, box_indices, self.pool_shape,

method="bilinear"))

# Pack pooled features into one tensor

pooled = tf.concat(pooled, axis=0)

#.....

1.7 build_fpn_mask_graph

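On top of the ROIs, a simplified FCN predicts the masks. (Why simplified? Perhaps because the FPN already provides features at several levels of abstraction.)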
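As a rough check of the spatial sizes in this mask branch (four 3x3 "same" convolutions, one 2x2 stride-2 transposed convolution, then a 1x1 convolution), the sketch below tracks only the height/width of the feature map. The helper names are hypothetical, and the size rules are the standard ones for "same" and "valid" padding rather than anything read from the repository.

```python
import math


def conv_out(size, kernel, stride=1, padding="valid"):
    """Output height/width of a convolution on a square input."""
    if padding == "same":
        return math.ceil(size / stride)
    return (size - kernel) // stride + 1


def conv_transpose_out(size, kernel, stride):
    """Output height/width of a 'valid' transposed convolution."""
    return size * stride + max(kernel - stride, 0)


def mask_head_output_size(pool_size):
    size = pool_size
    for _ in range(4):                                  # four 3x3 'same' convolutions
        size = conv_out(size, kernel=3, padding="same")
    size = conv_transpose_out(size, kernel=2, stride=2) # 2x upsampling
    size = conv_out(size, kernel=1)                     # final 1x1 convolution
    return size


print(mask_head_output_size(14))
```

Starting from a 14x14 pooled ROI, the only layer that changes the spatial size is the transposed convolution, which doubles it.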

mrcnn_mask = build_fpn_mask_graph(rois, mrcnn_feature_maps,

input_image_meta,

config.MASK_POOL_SIZE,

config.NUM_CLASSES,

train_bn=config.TRAIN_BN)

def build_fpn_mask_graph(rois, feature_maps, image_meta,

pool_size, num_classes, train_bn=True):

"""Builds the computation graph of the mask head of Feature Pyramid Network.

rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized

coordinates.

feature_maps: List of feature maps from different layers of the pyramid,

[P2, P3, P4, P5]. Each has a different resolution.

image_meta: [batch, (meta data)] Image details. See compose_image_meta()

pool_size: The width of the square feature map generated from ROI Pooling.

num_classes: number of classes, which determines the depth of the results

train_bn: Boolean. Train or freeze Batch Norm layers

Returns: Masks [batch, roi_count, height, width, num_classes]

"""

# ROI Pooling

# Shape: [batch, boxes, pool_height, pool_width, channels]

x = PyramidROIAlign([pool_size, pool_size],

name="roi_align_mask")([rois, image_meta] + feature_maps)

# Conv layers

x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),

name="mrcnn_mask_conv1")(x)

x = KL.TimeDistributed(BatchNorm(),

name='mrcnn_mask_bn1')(x, training=train_bn)

x = KL.Activation('relu')(x)

x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),

name="mrcnn_mask_conv2")(x)

x = KL.TimeDistributed(BatchNorm(),

name='mrcnn_mask_bn2')(x, training=train_bn)

x = KL.Activation('relu')(x)

x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),

name="mrcnn_mask_conv3")(x)

x = KL.TimeDistributed(BatchNorm(),

name='mrcnn_mask_bn3')(x, training=train_bn)

x = KL.Activation('relu')(x)

x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),

name="mrcnn_mask_conv4")(x)

x = KL.TimeDistributed(BatchNorm(),

name='mrcnn_mask_bn4')(x, training=train_bn)

x = KL.Activation('relu')(x)

x = KL.TimeDistributed(KL.Conv2DTranspose(256, (2, 2), strides=2, activation="relu"),

name="mrcnn_mask_deconv")(x)

x = KL.TimeDistributed(KL.Conv2D(num_classes, (1, 1), strides=1, activation="sigmoid"),

name="mrcnn_mask")(x)

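return x

The first four convolutions keep the feature-map size unchanged. The transposed convolution (Conv2DTranspose) that follows doubles it (similar to upsampling), which matches the parameters in the config file: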

MASK_POOL_SIZE = 14

# Shape of output mask

# To change this you also need to change the neural network mask branch

MASK_SHAPE = [28, 28]

1.8 Losses and model

Below is the part that builds the losses and the Keras model.

# Losses

rpn_class_loss = KL.Lambda(lambda x: rpn_class_loss_graph(*x), name="rpn_class_loss")(

[input_rpn_match, rpn_class_logits])

rpn_bbox_loss = KL.Lambda(lambda x: rpn_bbox_loss_graph(config, *x), name="rpn_bbox_loss")(

[input_rpn_bbox, input_rpn_match, rpn_bbox])

class_loss = KL.Lambda(lambda x: mrcnn_class_loss_graph(*x), name="mrcnn_class_loss")(

[target_class_ids, mrcnn_class_logits, active_class_ids])

bbox_loss = KL.Lambda(lambda x: mrcnn_bbox_loss_graph(*x), name="mrcnn_bbox_loss")(

[target_bbox, target_class_ids, mrcnn_bbox])

mask_loss = KL.Lambda(lambda x: mrcnn_mask_loss_graph(*x), name="mrcnn_mask_loss")(

[target_mask, target_class_ids, mrcnn_mask])

# Model

inputs = [input_image, input_image_meta,

input_rpn_match, input_rpn_bbox, input_gt_class_ids, input_gt_boxes, input_gt_masks]

if not config.USE_RPN_ROIS:

inputs.append(input_rois)

outputs = [rpn_class_logits, rpn_class, rpn_bbox,

mrcnn_class_logits, mrcnn_class, mrcnn_bbox, mrcnn_mask,

rpn_rois, output_rois,

rpn_class_loss, rpn_bbox_loss, class_loss, bbox_loss, mask_loss]

model = KM.Model(inputs, outputs, name='mask_rcnn')

Summary:

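1. The FPN produces the feature maps [P2, P3, P4, P5, P6], and the corresponding set of anchors is generated for them.

2. The feature maps P2 to P6 pass through the RPN in turn, yielding ["rpn_class_logits", "rpn_class", "rpn_bbox"]. This is the first regression of the box coordinates.

3. ProposalLayer lines up the RPN outputs ["rpn_class", "rpn_bbox"] with the anchors, sorts the foreground (FG) probabilities in descending order, and keeps the indices of the top N (at most 6000). These indices select the matching rpn_bbox (deltas) and anchors (pre_nms_anchors), and the deltas are applied to pre_nms_anchors to refine their coordinates. Finally, non-maximum suppression filters the refined anchors to give the ROIs.

4. DetectionTargetLayer splits the ROIs into positive and negative ones according to their IoU (intersection over union) with the GT boxes, subsamples them aiming for about 33% positives (roughly 1:2 positive to negative), and returns the sampled ROIs together with their target class IDs, box deltas, and masks.

5. fpn_classifier_graph ties the ROIs to the feature maps P2 to P5. Each ROI is first pooled on its assigned feature map with ROIAlign to a fixed size (7*7), and classification and the second regression of the box coordinates are then performed on that 7*7 feature map.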