TensorFlow: MNIST Classification and Using Dropout

阿新 • Published: 2018-11-10

I. A Simple Single-Layer Neural Network

    # Note: this code targets the TensorFlow 1.x API (placeholders and sessions)
    import tensorflow as tf

    # Download the MNIST dataset (28*28 images, so the input dimension is 784)
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

    # Build a simple one-layer network with the usual parameters:
    # weights, biases and an activation function.
    # add_layer() takes four arguments: the input tensor, the input size,
    # the output size and the activation function.
    def add_layer(inputs, in_size, out_size, activation_function=None):
        # Weights are a variable drawn from a normal distribution,
        # with shape in_size * out_size
        Weights = tf.Variable(tf.random_normal([in_size, out_size]))
        # Biases start at a small non-zero value (0.1) rather than 0
        biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
        # Wx_plus_b is the value of the layer before activation
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        return outputs

    # Compute the accuracy on a given set of images and labels
    # (this builds new ops on every call, which is acceptable for a short demo)
    def compute_accuracy(v_xs, v_ys):
        global prediction
        y_pre = sess.run(prediction, feed_dict={xs: v_xs})
        correct_prediction = tf.equal(tf.argmax(y_pre, 1), tf.argmax(v_ys, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        result = sess.run(accuracy, feed_dict={xs: v_xs, ys: v_ys})
        return result

    # Placeholders that hold the input data
    xs = tf.placeholder(tf.float32, [None, 784])
    ys = tf.placeholder(tf.float32, [None, 10])

    # Use add_layer to build the simplest possible network:
    # only an input layer and an output layer
    prediction = add_layer(xs, 784, 10, activation_function=tf.nn.softmax)

    # Loss function (cross_entropy) and optimizer
    cross_entropy = tf.reduce_mean(-tf.reduce_sum(ys * tf.log(prediction),
                                                  reduction_indices=[1]))
    train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

    # Session control
    sess = tf.Session()
    sess.run(tf.global_variables_initializer())

    # Train for 1000 steps; every 50 steps print the accuracy on the test set
    for i in range(1000):
        # Each step takes a batch of 100 examples (batch_xs, batch_ys)
        # from the training set
        batch_xs, batch_ys = mnist.train.next_batch(100)
        # Placeholders and feed_dict always appear together
        sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys})
        if i % 50 == 0:
            print(compute_accuracy(mnist.test.images, mnist.test.labels))

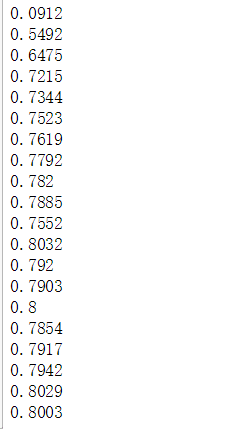

結果為:
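To make the loss and the accuracy check in the listing above more concrete, here is a minimal plain-Python sketch of the same arithmetic: softmax, the cross-entropy -sum(ys * log(prediction)), and the argmax comparison. It is not the TensorFlow implementation. The helper names, the 3-class toy batch and the `eps` guard against log(0) are assumptions made for illustration only.

```python
import math

def softmax(logits):
    """Softmax over one row of logits, shifted by the max for stability."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(labels, probs, eps=1e-10):
    """-sum(y * log(p)) for one example; eps (an assumption) avoids log(0)."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(labels, probs))

def accuracy(pred_rows, label_rows):
    """Fraction of rows whose argmax matches the argmax of the one-hot label."""
    def argmax(row):
        return max(range(len(row)), key=row.__getitem__)
    hits = sum(argmax(p) == argmax(y) for p, y in zip(pred_rows, label_rows))
    return hits / len(pred_rows)

# Toy batch with 3 classes instead of MNIST's 10
logits = [[2.0, 1.0, 0.1], [0.5, 0.2, 3.0]]
labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

probs = [softmax(row) for row in logits]
loss = sum(cross_entropy(y, p) for y, p in zip(labels, probs)) / len(labels)
acc = accuracy(probs, labels)
print("mean cross-entropy:", loss)
print("accuracy:", acc)
```

The `eps` guard matters because a predicted probability of exactly 0 makes log return negative infinity, which can turn the loss into NaN. The TensorFlow listing takes tf.log(prediction) without such a guard.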

II. Using Dropout

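1. With dropout, the activations of some neurons are switched off with a certain probability p during a training step. The weights of those neurons are not updated in that step, but the weights themselves are retained.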

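2. tf.nn.dropout

    tf.nn.dropout(x, keep_prob, noise_shape=None, seed=None, name=None)

keep_prob is the keep probability, that is, the fraction of values we want to retain (1 - p in terms of the drop probability p above).

Here it is defined as a placeholder and passed in at run time. Dropout is generally used on fully connected layers.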
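The effect of keep_prob can be sketched in a few lines of plain Python. In TensorFlow 1.x, tf.nn.dropout keeps each element with probability keep_prob, scales the kept elements by 1 / keep_prob, and sets the rest to 0. The `dropout` helper below is a hypothetical stand-in that mimics this behaviour on a list; it is not the library code, and the seed and sample values are arbitrary.

```python
import random

def dropout(values, keep_prob, rng):
    """Keep each value with probability keep_prob, scaled by 1 / keep_prob."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    # rng.random() is in [0, 1), so keep_prob = 1.0 keeps every value
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0, 2.0, 3.0, 4.0]

dropped = dropout(activations, 0.5, rng)    # each entry is 0.0 or doubled
unchanged = dropout(activations, 1.0, rng)  # identical to the input

print(dropped)
print(unchanged)
```

With keep_prob set to 1 the function returns its input unchanged, which is the setting used for evaluation.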

Next, we add dropout to the code above. The places that need to change are listed below.

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

In the definition of add_layer, add one line: Wx_plus_b = tf.nn.dropout(Wx_plus_b, keep_prob)

The rest of the function is unchanged.

    def add_layer(inputs, in_size, out_size, activation_function=None):
        Weights = tf.Variable(tf.random_normal([in_size, out_size]))
        biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
        # Added line: apply dropout
        Wx_plus_b = tf.nn.dropout(Wx_plus_b, keep_prob)
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        return outputs

Note: because keep_prob is a placeholder, its value must be passed in through feed_dict.

keep_prob: 1 keeps all neurons; keep_prob: 0.5 keeps 50% of the neurons.

Dropout is used only during training. For validation and testing, keep_prob is 1.
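One way to see why keep_prob can simply be set to 1 at test time is that scaling the surviving values by 1 / keep_prob keeps the expected value of each activation unchanged. The simulation below is a rough plain-Python sketch of that idea. The `dropout` helper is hypothetical and only mimics the keep-and-rescale rule, and the seed, trial count and sample values are arbitrary assumptions.

```python
import random

def dropout(values, keep_prob, rng):
    """Keep each value with probability keep_prob, scaled by 1 / keep_prob."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(42)
activations = [0.5, -1.0, 2.0]
trials = 20000

# Average many independent "training-time" passes with keep_prob = 0.5
sums = [0.0] * len(activations)
for _ in range(trials):
    for i, v in enumerate(dropout(activations, 0.5, rng)):
        sums[i] += v
means = [s / trials for s in sums]

print("keep_prob = 1 output:", activations)
print("mean over keep_prob = 0.5 passes:", means)
```

The averaged values come out close to the original activations, so the layer sees inputs on roughly the same scale in training and in evaluation.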

    # Compute the accuracy on a given set of images and labels
    def compute_accuracy(v_xs, v_ys):
        global prediction
        # keep_prob is 1 when evaluating
        y_pre = sess.run(prediction, feed_dict={xs: v_xs, keep_prob: 1})
        correct_prediction = tf.equal(tf.argmax(y_pre, 1), tf.argmax(v_ys, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        result = sess.run(accuracy, feed_dict={xs: v_xs, ys: v_ys, keep_prob: 1})
        return result

keep_prob is a placeholder whose value is passed in at run time.

Add one line: keep_prob = tf.placeholder(tf.float32)

    # keep_prob is a placeholder; its value is passed in at run time
    keep_prob = tf.placeholder(tf.float32)

    # Placeholders that hold the input data
    xs = tf.placeholder(tf.float32, [None, 784])
    ys = tf.placeholder(tf.float32, [None, 10])

    # Use add_layer to build a simple network with one hidden layer
    layer1 = add_layer(xs, 784, 50, activation_function=tf.nn.tanh)
    prediction = add_layer(layer1, 50, 10, activation_function=tf.nn.softmax)

    # Loss function (cross_entropy) and optimizer
    cross_entropy = tf.reduce_mean(-tf.reduce_sum(ys * tf.log(prediction),
                                                  reduction_indices=[1]))
    train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

Pass keep_prob in when calling run:

#session會話控制

sess = tf.Session()

sess.run(tf.global_variables_initializer())

#訓練1000次,每訓練50次輸出測試資料的訓練精度

for i in range(1000):

#開始訓練,訓練集中每次取100個數據(batch_xs, batch_ys)

batch_xs, batch_ys = mnist.train.next_batch(100)

sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys, keep_prob: 0.5})#placeholder和feed_dict同時出現

if i%50 == 0:

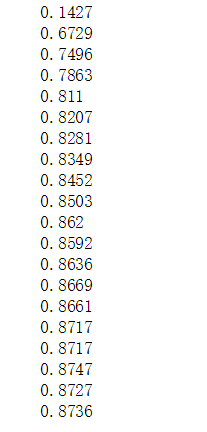

print(compute_accuracy( mnist.test.images, mnist.test.labels))結果:(變差了,我只是隨便試一試)
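In the listing above, add_layer applies dropout in every layer, so the pre-softmax values of the output layer are also dropped during training. That may be one factor behind the lower accuracy, although this is a guess and has not been checked against the run shown here. A common alternative is to drop values only in hidden layers. The plain-Python sketch below illustrates that variant on a single input row. The per-layer `keep_prob` argument, the `rng` argument, the helper names and the tiny weights are all hypothetical, and this is not TensorFlow code.

```python
import math
import random

def tanh_row(row):
    return [math.tanh(v) for v in row]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def add_layer(inputs, weights, biases, activation=None, keep_prob=1.0, rng=None):
    """Dense layer on one input row; dropout runs only when keep_prob < 1."""
    # weights has shape in_size x out_size, so iterate over its columns
    out = [
        sum(x * w for x, w in zip(inputs, column)) + b
        for column, b in zip(zip(*weights), biases)
    ]
    if keep_prob < 1.0:
        rng = rng or random.Random()
        # Same order as the listing: drop the pre-activation values
        out = [v / keep_prob if rng.random() < keep_prob else 0.0 for v in out]
    return activation(out) if activation is not None else out

rng = random.Random(0)
x = [1.0, 0.5]
w1 = [[0.1, -0.2, 0.3], [0.4, 0.5, -0.6]]    # 2 inputs -> 3 hidden units
b1 = [0.1, 0.1, 0.1]
w2 = [[0.2, -0.1], [0.0, 0.3], [-0.4, 0.1]]  # 3 hidden units -> 2 outputs
b2 = [0.1, 0.1]

hidden = add_layer(x, w1, b1, tanh_row, keep_prob=0.5, rng=rng)  # dropout here
probs = add_layer(hidden, w2, b2, softmax)                        # no dropout
print(hidden, probs)
```

Because keep_prob defaults to 1.0, the output layer is left untouched unless a caller asks for dropout explicitly.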