Python Learning (23): Scraping Maoyan Movies with the requests Library

阿新 • Published: 2018-11-11

This article combines the basics covered in earlier posts (requests, regular expressions, and cookies) in a hands-on exercise: scraping the Maoyan movie ranking.

Writing a basic scraper with requests

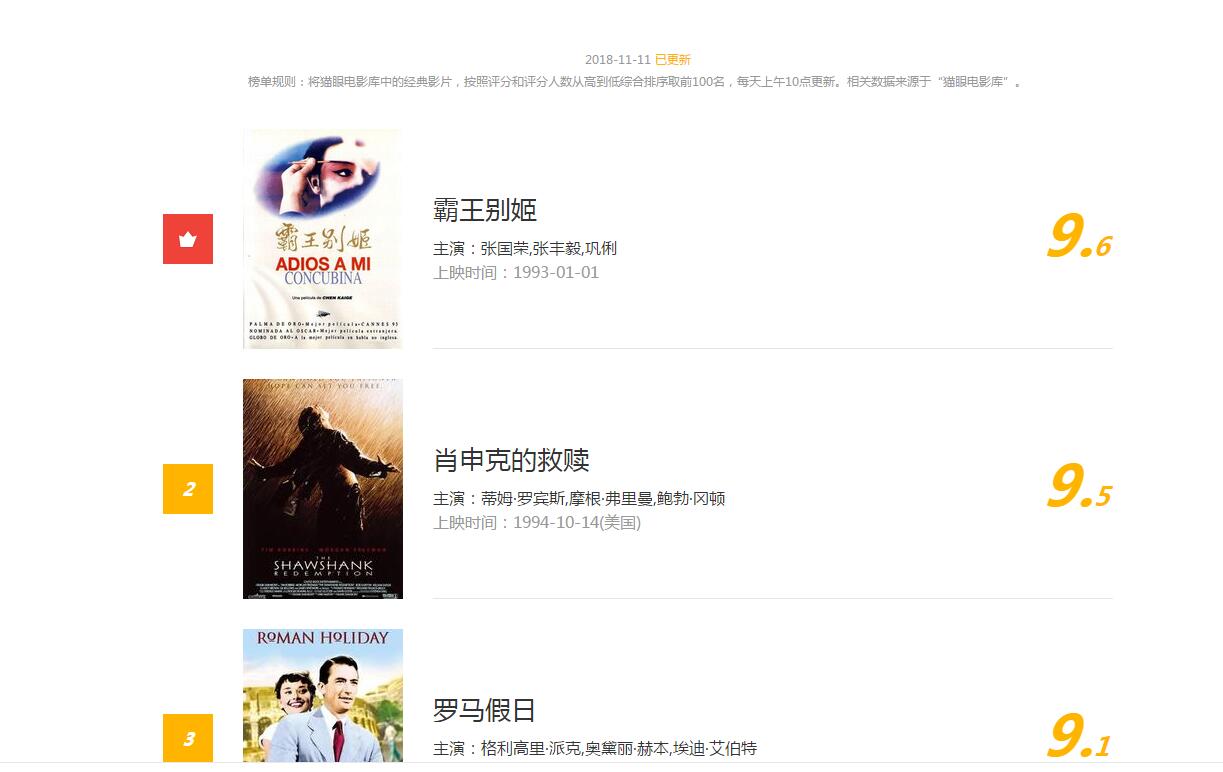

The ranking page looks roughly like this.

The URL is http://maoyan.com/board/4?offset=0

Choosing "View page source" in the browser shows the page's HTML.

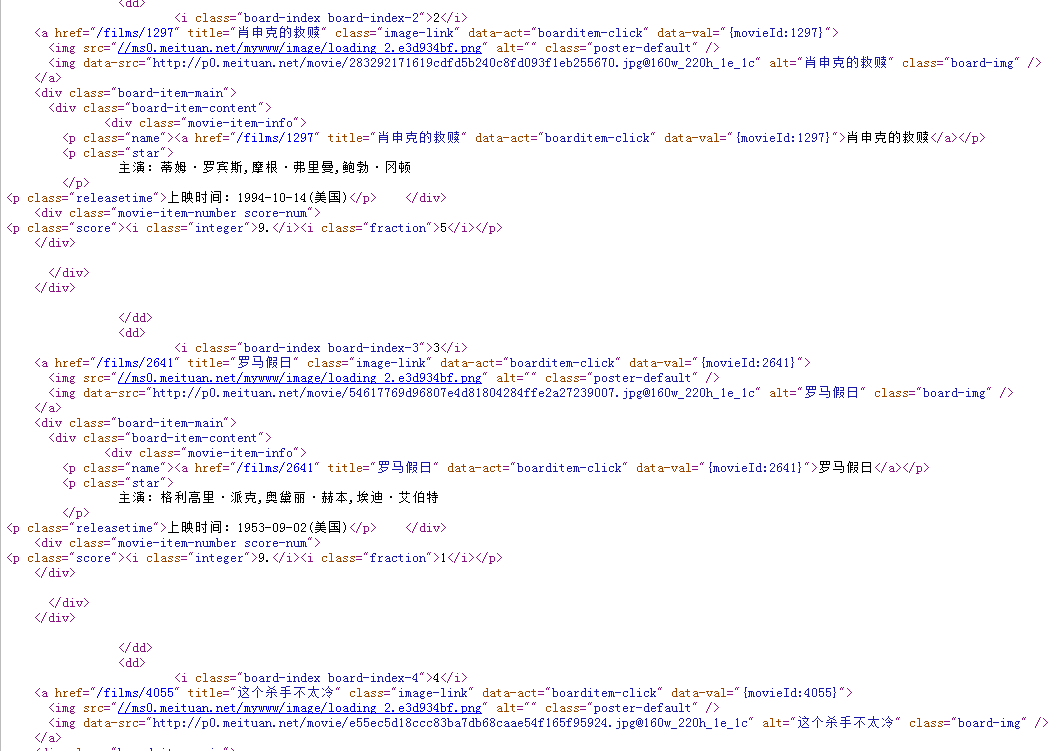

The HTML for each movie has the following structure:

    <i class="board-index board-index-3">3</i>
    <a href="/films/2641" title="羅馬假日" class="image-link" data-act="boarditem-click" data-val="{movieId:2641}">
      <img src="//ms0.meituan.net/mywww/image/loading_2.e3d934bf.png" alt="" class="poster-default" />
      <img data-src="http://p0.meituan.net/movie/[email protected]_220h_1e_1c" alt="羅馬假日" class="board-img" />
    </a>
    <div class="board-item-main">
      <div class="board-item-content">
        <div class="movie-item-info">
          <p class="name"><a href="/films/2641" title="羅馬假日" data-act="boarditem-click" data-val="{movieId:2641}">羅馬假日</a></p>
          <p class="star"> 主演:格利高裡·派克,奧黛麗·赫本,埃迪·艾伯特</p>

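The parts that matter to us are the image URL (the data-src attribute of the img tag), the movie name (title), and the lead actors (star). The regular expression below also captures the release time (releasetime).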

Using the regular-expression syntax introduced earlier, we can derive this pattern:

    compilestr = r'''<dd>.*?<i class="board-index.*?<img data-src="(.*?)@.*?title="(.*?)".*?<p class="star"> (.*?)</p>.*?<p class="releasetime">.*?(.*?)</p'''
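The pattern relies on two things that are easy to try in isolation: the lazy quantifier `.*?` and the `re.S` flag. The snippet below is a minimal sketch on made-up strings (the `<b>` tags and variable names are illustrative, not taken from the Maoyan page).

```python
import re

# Two tags on one line: greedy vs. lazy matching
sample = '<b>one</b><b>two</b>'
greedy = re.findall(r'<b>(.*)</b>', sample)   # '.*' runs to the last '</b>'
lazy = re.findall(r'<b>(.*?)</b>', sample)    # '.*?' stops at the first '</b>'

# Content that spans a line break: '.' needs re.S to cross the newline
multiline = '<b>line1\nline2</b>'
without_flag = re.findall(r'<b>(.*?)</b>', multiline)
with_flag = re.findall(r'<b>(.*?)</b>', multiline, re.S)

print(greedy)        # ['one</b><b>two']
print(lazy)          # ['one', 'two']
print(without_flag)  # []
print(with_flag)     # ['line1\nline2']
```

Because the page source spreads each movie over many lines, the scraper needs `re.S`; without it the pattern would find nothing.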

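'.' matches any character; when the pattern is compiled with re.S, '.' also matches newlines. '*' repeats the preceding character zero or more times, and a '?' placed after it makes the match non-greedy.

So '.*?' can be read as "match any run of characters, as short as possible". Next, let's write code to print the entries we match.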

    import re
    import requests

    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0'

    if __name__ == "__main__":
        # Send a browser-like User-Agent header with the request
        headers = {'User-Agent': USER_AGENT}
        session = requests.Session()
        req = session.get('http://maoyan.com/board/4?offset=0', headers=headers, timeout=5)
        compilestr = r'<dd>.*?<i class="board-index.*?<img data-src="(.*?)@.*?title="(.*?)".*?<p class="star">(.*?)</p>.*?<p class="releasetime">.*?(.*?)</p'
        # re.S lets '.' match newlines, since each movie spans several lines
        pattern = re.compile(compilestr, re.S)
        lists = re.findall(pattern, req.content.decode('utf-8'))
        # Each item is a tuple: (image URL, title, actors, release time)
        for item in lists:
            print(item[0].strip())
            print(item[1].strip())
            print(item[2].strip())
            print(item[3].strip())
            print('\n')

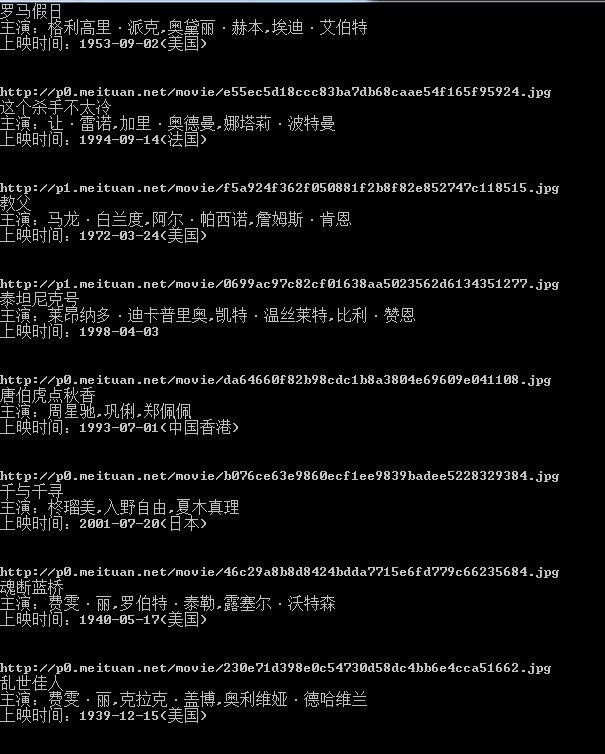

Run it, and the result looks like this.
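The pattern can also be checked without touching the network. The sketch below runs the same regular expression on a hand-written fragment; the fragment, the example.com URL, and the field values are invented for illustration and only mimic the structure shown earlier.

```python
import re

# Hypothetical fragment shaped like one <dd> entry of the ranking page
SAMPLE = '''<dd>
<i class="board-index board-index-1">1</i>
<a href="/films/1" title="Demo Movie" class="image-link">
<img data-src="http://example.com/demo.jpg@160w_220h_1e_1c" alt="Demo Movie" class="board-img" />
</a>
<p class="name"><a href="/films/1" title="Demo Movie">Demo Movie</a></p>
<p class="star"> Starring: A, B </p>
<p class="releasetime">Release: 1953-09-02</p>
</dd>'''

compilestr = r'<dd>.*?<i class="board-index.*?<img data-src="(.*?)@.*?title="(.*?)".*?<p class="star">(.*?)</p>.*?<p class="releasetime">.*?(.*?)</p'
pattern = re.compile(compilestr, re.S)

# findall returns one tuple per movie; strip whitespace from every field
rows = [tuple(field.strip() for field in item) for item in pattern.findall(SAMPLE)]
print(rows)
```

Note that the first group stops at the `@`, so the size suffix is dropped from the image URL, and the title is taken from the link inside `<p class="name">`, which is the first `title=` attribute after the `data-src` image.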

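It looks like we captured the data. So far we have only scraped one page, so next let's look at the pattern for pages two and three. Clicking page 2 changes the URL to 'http://maoyan.com/board/4?offset=10', and clicking page 3 changes it to 'http://maoyan.com/board/4?offset=20', so the offset grows by 10 per page. By computing the offset we can fetch any page. With a small change, the previous program becomes one that scrapes n pages.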
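The offset arithmetic can be sketched as a small helper. `BASE_URL`, `PAGE_SIZE`, and `page_urls` are names chosen here for illustration; the step of 10 is inferred from the two URLs above.

```python
BASE_URL = 'http://maoyan.com/board/4'
PAGE_SIZE = 10  # assumption: offset advances by 10 per page, as the URLs above suggest


def page_urls(pages):
    """Return the ranking URLs for the first `pages` pages."""
    return ['{}?offset={}'.format(BASE_URL, i * PAGE_SIZE) for i in range(pages)]


for url in page_urls(3):
    print(url)
```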

    import re
    import time
    import requests

    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0'

    class MaoYanScrapy(object):
        def __init__(self, pages=1):
            self.m_session = requests.Session()
            self.m_headers = {'User-Agent': USER_AGENT}
            self.m_compilestr = r'<dd>.*?<i class="board-index.*?<img data-src="(.*?)@.*?title="(.*?)".*?<p class="star">(.*?)</p>.*?<p class="releasetime">.*?(.*?)</p'
            self.m_pattern = re.compile(self.m_compilestr, re.S)
            self.m_pages = pages  # number of pages to scrape

        def getPageData(self):
            try:
                for i in range(self.m_pages):
                    # Each page lists 10 movies, so page i starts at offset i * 10
                    httpstr = 'http://maoyan.com/board/4?offset=' + str(i * 10)
                    req = self.m_session.get(httpstr, headers=self.m_headers, timeout=5)
                    lists = re.findall(self.m_pattern, req.content.decode('utf-8'))
                    time.sleep(1)  # pause between requests
                    for item in lists:
                        img = item[0]
                        print(img.strip() + '\n')
                        name = item[1]
                        print(name.strip() + '\n')
                        actor = item[2]
                        print(actor.strip() + '\n')
                        filmtime = item[3]
                        print(filmtime.strip() + '\n')
            except Exception as e:
                # Report the cause instead of silently swallowing it
                print('get error:', e)

    if __name__ == "__main__":
        maoyanscrapy = MaoYanScrapy()
        maoyanscrapy.getPageData()

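Run it and the output is the same as before; the only difference is that the number of pages can now be passed as a parameter.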

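Next, let's extend the program to download and save each movie's poster. This draws on the basics of creating directories, joining paths, and writing files, combined as follows: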
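The three file operations can be tried on their own before wiring them into the scraper. This is a minimal sketch: `save_movie_info` is a hypothetical helper, and it writes into a temporary directory rather than next to the script.

```python
import os
import tempfile


def save_movie_info(base_dir, name, lines):
    """Create base_dir/name/ and write the lines to name.txt inside it."""
    movie_dir = os.path.join(base_dir, name)      # path joining
    os.makedirs(movie_dir, exist_ok=True)         # create the folder if missing
    txt_path = os.path.join(movie_dir, name + '.txt')
    # Mode 'w' overwrites an existing file; utf-8 keeps Chinese text intact
    with open(txt_path, 'w', encoding='utf-8') as f:
        for line in lines:
            f.write(line.strip() + '\n')
    return txt_path


demo_dir = tempfile.mkdtemp()
demo_path = save_movie_info(demo_dir, 'demo_movie', ['line one ', ' line two'])
print(demo_path)
```

Saving an image works the same way, except the file is opened in 'wb' mode and the response's raw bytes are written.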

    import os
    import re
    import time
    import requests

    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0'

    class MaoYanScrapy(object):
        def __init__(self, pages=1):
            self.m_session = requests.Session()
            self.m_headers = {'User-Agent': USER_AGENT}
            self.m_compilestr = r'<dd>.*?<i class="board-index.*?<img data-src="(.*?)@.*?title="(.*?)".*?<p class="star">(.*?)</p>.*?<p class="releasetime">.*?(.*?)</p'
            self.m_pattern = re.compile(self.m_compilestr, re.S)
            self.m_pages = pages
            # Directory containing this script; movie folders are created here
            self.dirpath = os.path.split(os.path.abspath(__file__))[0]

        def getPageData(self):
            try:
                for i in range(self.m_pages):
                    # Each page lists 10 movies, so page i starts at offset i * 10
                    httpstr = 'http://maoyan.com/board/4?offset=' + str(i * 10)
                    req = self.m_session.get(httpstr, headers=self.m_headers, timeout=5)
                    lists = re.findall(self.m_pattern, req.content.decode('utf-8'))
                    time.sleep(1)
                    for item in lists:
                        img = item[0].strip()
                        name = item[1].strip()
                        actor = item[2].strip()
                        filmtime = item[3].strip()
                        print(img + '\n')
                        print(name + '\n')
                        print(actor + '\n')
                        print(filmtime + '\n')
                        # One folder per movie, named after the movie
                        dirpath = os.path.join(self.dirpath, name)
                        if not os.path.exists(dirpath):
                            os.makedirs(dirpath)
                        # Save the text info; mode 'w' overwrites any existing file
                        txtname = os.path.join(dirpath, name + '.txt')
                        with open(txtname, 'w', encoding='utf-8') as f:
                            f.write(img + '\n')
                            f.write(name + '\n')
                            f.write(actor + '\n')
                            f.write(filmtime + '\n')
                        # Download the poster, reusing the extension from its URL
                        picname = os.path.join(dirpath, name + '.' + img.split('.')[-1])
                        req = self.m_session.get(img, headers=self.m_headers, timeout=5)
                        time.sleep(1)
                        with open(picname, 'wb') as f:
                            f.write(req.content)
            except Exception as e:
                # Report the cause instead of silently swallowing it
                print('get error:', e)

    if __name__ == "__main__":
        maoyanscrapy = MaoYanScrapy()
        maoyanscrapy.getPageData()

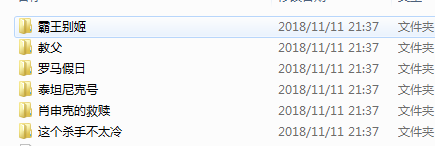

Run it, and several new folders appear in the script's directory.

Open one of the folders and you will find the image and the info file we saved.

That wraps up this hands-on scraper combining regular expressions with requests.
