3.1 TensorFlow Hyperparameter Tuning: Learning Rate

1. What is the learning rate

The first step in tuning a hyperparameter is to understand what it is and how changing it affects the model.

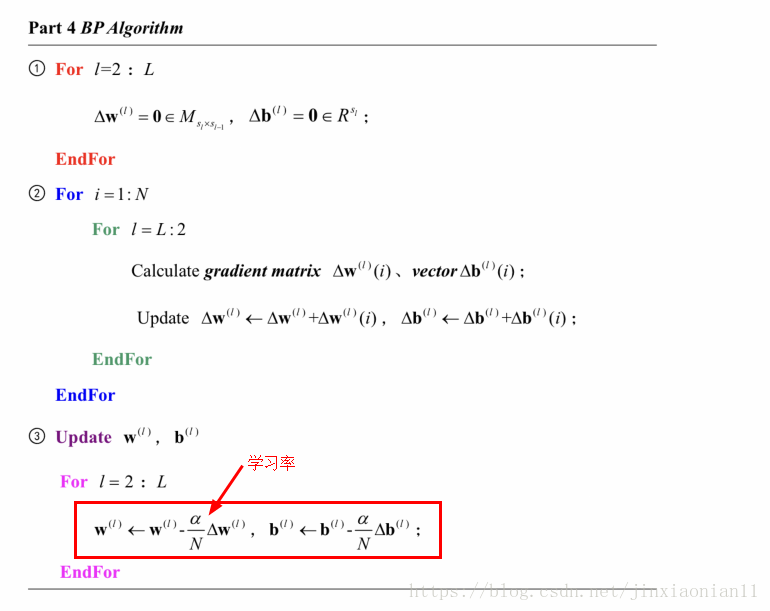

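(1) To understand the learning rate, you first need to understand how a neural network updates its parameters: gradient descent plus backpropagation. Reference: https://www.cnblogs.com/softzrp/p/6718909.html.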

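In one sentence: the output error is propagated back to the network parameters so that the network fits the sample outputs. This is essentially an optimization process that moves step by step toward an optimum. How much of the error each parameter update uses is controlled by a hyperparameter, the learning rate, also called the step size. The backpropagation (BP) update formula makes this clearer: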

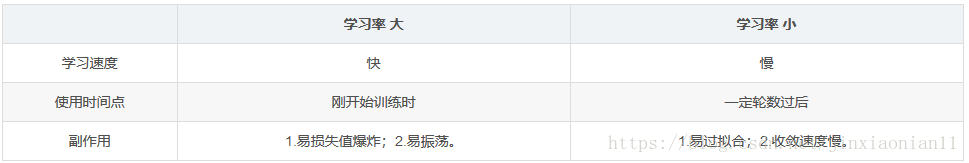
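For reference, in the standard gradient-descent form (the notation here is chosen for illustration and may differ from the figure), each weight $w$ moves against the gradient of the error $E$, scaled by the learning rate $\eta$:

$$w \leftarrow w - \eta \, \frac{\partial E}{\partial w}$$

For the same gradient, a larger $\eta$ produces a proportionally larger step.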

(2) How the learning rate affects the model

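The formula shows that the larger the learning rate, the more the output error influences each update and the faster the parameters change. At the same time, the updates are more strongly affected by anomalous samples, and training can easily diverge. A very small learning rate, on the other hand, is stable but converges slowly.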
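To make the divergence claim concrete, here is a minimal sketch that runs plain gradient descent on the toy function f(w) = w² (chosen only for illustration; its gradient is 2w) with several fixed learning rates. The function name `gradient_descent` is hypothetical.

```python
def gradient_descent(lr, w0=1.0, steps=20):
    """Minimize the toy function f(w) = w**2 with a fixed learning rate lr."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w      # df/dw for f(w) = w**2
        w = w - lr * grad   # the learning rate scales every update
    return w


for lr in (0.01, 0.1, 0.5, 1.1):
    print(f"lr={lr}: w after 20 steps = {gradient_descent(lr):.6g}")
```

Each step multiplies w by (1 - 2·lr): with lr=0.01 the iterate shrinks slowly, with lr=0.5 it lands on the minimum w=0 in a single step, and with lr=1.1 the factor is -1.2, so |w| grows every step and the iteration diverges.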

2. Exponential learning-rate decay

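Section 1 showed how the learning rate affects the model. From this we can see that the ideal learning rate is not a fixed value but one that decays as training proceeds: relatively large early in training, so the model makes fast progress, then steadily smaller so that updates stop overshooting near the optimum, until the model converges. A commonly used decay schedule is: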

$$\text{decayed\_lr} = \text{lr0} \times \text{decay\_rate}^{\,\text{global\_steps}/\text{decay\_steps}}$$

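Parameters: decayed_lr is the learning rate after decay, i.e. the actual learning rate used at the current training step; lr0 is the initial learning rate; decay_rate is the factor the learning rate is multiplied by at each decay; global_steps is the current training step; decay_steps is the decay interval, i.e. how many steps pass between decays.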
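A minimal pure-Python sketch of the formula above, evaluated with the hyperparameter values that section 3 uses. The function name `decayed_lr` is hypothetical, not a TensorFlow API.

```python
def decayed_lr(lr0, decay_rate, global_steps, decay_steps):
    """Continuous exponential decay: lr0 * decay_rate ** (global_steps / decay_steps)."""
    return lr0 * decay_rate ** (global_steps / decay_steps)


# Same values as the example in section 3
lr0, decay_rate, decay_steps = 0.0001, 0.99, 500
for step in (0, 250, 500, 1000):
    print(step, decayed_lr(lr0, decay_rate, step, decay_steps))
```

At step 0 the rate equals lr0, and every decay_steps steps it has been multiplied by one more factor of decay_rate.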

The corresponding TensorFlow API:

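```python
import tensorflow as tf  # TensorFlow 1.x API

# lr0, lr_step and lr_decay are the hyperparameters set in section 3
global_step = tf.Variable(0)
lr = tf.train.exponential_decay(
    lr0,
    global_step,
    decay_steps=lr_step,
    decay_rate=lr_decay,
    staircase=True)
```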

staircase=True means global_steps/decay_steps is truncated to an integer, so the learning rate changes only once every decay_steps steps instead of a little at every step.
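To see what staircase changes, here is a pure-Python reimplementation of the same formula with and without flooring the exponent. It is an illustrative sketch (the name `exponential_decay_sketch` is hypothetical), not TensorFlow's source; in TensorFlow the default is staircase=False, which decays the rate slightly at every step.

```python
import math


def exponential_decay_sketch(lr0, global_step, decay_steps, decay_rate, staircase=False):
    """lr0 * decay_rate ** (global_step / decay_steps), optionally with a floored exponent."""
    p = global_step / decay_steps
    if staircase:
        p = math.floor(p)  # integer exponent: lr only changes every decay_steps steps
    return lr0 * decay_rate ** p


for step in (0, 250, 499, 500, 750):
    smooth = exponential_decay_sketch(0.0001, step, 500, 0.99)
    stair = exponential_decay_sketch(0.0001, step, 500, 0.99, staircase=True)
    print(f"step {step:4d}: staircase=False {smooth:.8f}  staircase=True {stair:.8f}")
```

With staircase=True the rate stays at lr0 for steps 0 to 499 and drops to lr0 × 0.99 at step 500; without it, the rate is already slightly below lr0 at step 250.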

3. Worked example

```python
# -*- coding: utf-8 -*-
# @Time    : 18-10-5 3:38 PM
# @Author  : gxrao
# @Site    :
# @File    : cnn_mnist_2.py
# @Software: PyCharm
# TensorFlow 1.x code (tf.contrib, tf.train.*, tutorials.mnist.input_data)
import os
# os.environ["CUDA_VISIBLE_DEVICES"] = "-1"
import time
from functools import reduce

import matplotlib.pyplot as plt
import tensorflow as tf

# prepare the data
import tensorflow.examples.tutorials.mnist.input_data as input_data

mnist = input_data.read_data_sets('./data/MNIST/', one_hot=True)

# create an InteractiveSession and install it as the default session
sess = tf.InteractiveSession()

# parameters setting
batch_size = 50
max_steps = 16000
lr0 = 0.0001
regularizer_rate = 0.0001
lr_decay = 0.99
lr_step = 500
sample_size = 40000


# init weights and bias
def init_variable(w_shape, b_shape, regularizer=None):
    weights = tf.get_variable('weights', w_shape,
                              initializer=tf.truncated_normal_initializer(stddev=0.1))
    if regularizer is not None:
        # collection name corrected from the misspelled 'lossess'
        tf.add_to_collection('losses', regularizer(weights))
    biases = tf.get_variable('biases', b_shape,
                             initializer=tf.constant_initializer(0.0))
    return weights, biases


# conv -> ReLU -> 2x2 max pooling -> dropout
def conv2d(x, w, b, keep_prob):
    conv_res = tf.nn.conv2d(x, w, strides=[1, 1, 1, 1], padding='SAME') + b
    activation_res = tf.nn.relu(conv_res)
    pool_res = tf.nn.max_pool(activation_res, ksize=[1, 2, 2, 1],
                              strides=[1, 2, 2, 1], padding='SAME')
    drop_res = tf.nn.dropout(pool_res, keep_prob)
    return drop_res


def inference(x, reuse=False, regularizer=None, dropout=1.0):
    # layer_1
    x_img = tf.reshape(x, shape=[-1, 28, 28, 1])
    with tf.variable_scope('cnn_layer1', reuse=reuse):
        weights, biases = init_variable([5, 5, 1, 32], [32])
        cnn1_res = conv2d(x_img, weights, biases, 1.0)
    # layer_2
    with tf.variable_scope('cnn_layer2', reuse=reuse):
        weights, biases = init_variable([3, 3, 32, 64], [64])
        cnn2_res = conv2d(cnn1_res, weights, biases, 1.0)
    # layer_3
    with tf.variable_scope('cnn_layer3', reuse=reuse):
        weights, biases = init_variable([3, 3, 64, 128], [128])
        cnn3_res = conv2d(cnn2_res, weights, biases, 1.0)
    # flatten the feature maps
    cnn3_shape = cnn3_res.shape.as_list()[1:]
    h3_s = reduce(lambda a, b: a * b, cnn3_shape)
    cnn3_reshape = tf.reshape(cnn3_res, [-1, h3_s])
    # layer_4
    with tf.variable_scope('fcn1', reuse=reuse):
        weights, biases = init_variable([h3_s, 5000], [5000], regularizer)
        fcn1_res = tf.nn.relu(tf.matmul(cnn3_reshape, weights) + biases)
        fcn1_dropout = tf.nn.dropout(fcn1_res, dropout)
    # layer_5
    with tf.variable_scope('fcn2', reuse=reuse):
        weights, biases = init_variable([5000, 500], [500], regularizer)
        fcn2_res = tf.nn.relu(tf.matmul(fcn1_dropout, weights) + biases)
        fcn2_dropout = tf.nn.dropout(fcn2_res, 1.0)
    # output layer
    with tf.variable_scope('out_put_layer', reuse=reuse):
        weights, biases = init_variable([500, 10], [10])
        y = tf.nn.softmax(tf.matmul(fcn2_dropout, weights) + biases)
    return y


train_acc = []
validation_acc = []
train_loss = []


# create train model
def train_model():
    start = time.time()
    # add the input placeholders
    x = tf.placeholder(tf.float32, shape=[None, 784], name='x')
    y_ = tf.placeholder(tf.float32, shape=[None, 10])
    keep_prob = tf.placeholder(tf.float32)
    # step counter read by exponential_decay; minimize() increments it
    global_step = tf.Variable(0)
    lr = tf.train.exponential_decay(
        lr0,
        global_step,
        decay_steps=lr_step,
        decay_rate=lr_decay,
        staircase=True)
    # select regularizer (note: inference() below is called with regularizer=None)
    regularizer = tf.contrib.layers.l2_regularizer(regularizer_rate)
    # define loss and select optimizer
    y = inference(x, reuse=False, regularizer=None, dropout=keep_prob)
    # clip y so that log(0) cannot turn the loss into NaN
    cross_entropy = -tf.reduce_sum(y_ * tf.log(tf.clip_by_value(y, 1e-10, 1.0)))
    loss = cross_entropy
    train_step = tf.train.AdamOptimizer(learning_rate=lr).minimize(
        loss, global_step=global_step)
    predict = tf.equal(tf.argmax(y_, 1), tf.argmax(y, 1))
    acc = tf.reduce_mean(tf.cast(predict, 'float'))
    # train the model: init all variables first
    init = tf.global_variables_initializer()
    init.run()
    for i in range(max_steps):
        x_batch, y_batch = mnist.train.next_batch(batch_size)
        _, _loss = sess.run(
            [train_step, loss],
            feed_dict={x: x_batch, y_: y_batch, keep_prob: 0.8})
        train_loss.append(_loss)
        if (i + 1) % 200 == 0:
            print('training steps: %d' % (i + 1))
            validation_x, validation_y = mnist.validation.next_batch(500)
            _validation_acc = acc.eval(feed_dict={
                x: validation_x, y_: validation_y, keep_prob: 1.0})
            validation_acc.append(_validation_acc)
            print('validation accuracy is %f' % _validation_acc)
            x_batch, y_batch = mnist.train.next_batch(500)
            _train_acc = acc.eval(feed_dict={x: x_batch, y_: y_batch, keep_prob: 1.0})
            train_acc.append(_train_acc)
            print('train accuracy is %f' % _train_acc)
    stop = time.time()
    total = stop - start
    print('train time: %d' % int(total))


def test_model():
    x = tf.placeholder(tf.float32, shape=[None, 784], name='x')
    y_ = tf.placeholder(tf.float32, shape=[None, 10])
    keep_prob = tf.placeholder(tf.float32)
    # reuse the trained variables
    y = inference(x, reuse=tf.AUTO_REUSE, dropout=keep_prob)
    predict = tf.equal(tf.argmax(y_, 1), tf.argmax(y, 1))
    acc = tf.reduce_mean(tf.cast(predict, 'float'))
    # evaluating all test images at once raised an error, so 2000 images are used
    acc_test = acc.eval(feed_dict={
        x: mnist.test.images[:2000, :],
        y_: mnist.test.labels[:2000, :],
        keep_prob: 1.0})
    print('test accuracy is: %f' % acc_test)


def plot_acc():
    # plot the train acc and validation acc (one point per 200 training steps)
    plt.figure()
    x_axis = list(range(len(train_acc)))
    plt.plot(x_axis, train_acc, 'r-', label='train accuracy')
    plt.plot(x_axis, validation_acc, 'b:', label='validation accuracy')
    plt.legend()
    plt.xlabel('train steps')
    plt.ylabel('accuracy')
    plt.savefig('accuracy.jpg')


def plot_loss():
    # new figure so the accuracy curves are not drawn into this plot
    plt.figure()
    x_axis = list(range(len(train_loss)))
    plt.plot(x_axis, train_loss, 'r-', label='train loss')
    plt.legend()
    plt.xlabel('train steps')
    plt.ylabel('train loss')
    plt.savefig('train_loss.jpg')


def save_model():
    # save the model
    module_save_dir = './model/cnn_mnist_2/'
    if not os.path.exists(module_save_dir):
        os.makedirs(module_save_dir)
    saver = tf.train.Saver()
    saver.save(sess, module_save_dir + 'model.ckpt')
    sess.close()


if __name__ == "__main__":
    train_model()
    test_model()
    plot_acc()
    plot_loss()
    save_model()
```

This is a simple CNN classification program for MNIST.

```python
# parameters setting
batch_size = 50
max_steps = 16000
lr0 = 0.0001
regularizer_rate = 0.0001
lr_decay = 0.99
lr_step = 500
```

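These lines define the hyperparameters: batch size, number of training steps, initial learning rate lr0, L2 regularization rate, decay rate lr_decay, and decay interval lr_step (in training steps).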
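Because staircase=True is used, these settings determine exactly how far the learning rate can fall during training. A quick check in plain Python (no TensorFlow needed; the variable names `num_decays`, `final_lr` and `overall_factor` are introduced here for illustration):

```python
# Hyperparameters copied from the block above
max_steps = 16000
lr0 = 0.0001
lr_decay = 0.99
lr_step = 500

# With staircase=True the rate is multiplied by lr_decay once every lr_step steps.
num_decays = max_steps // lr_step
final_lr = lr0 * lr_decay ** num_decays  # rate once global_step reaches max_steps
overall_factor = lr0 / final_lr          # how many times smaller the rate has become

print(f"{num_decays} decays, final lr = {final_lr:.3e}, overall decay = {overall_factor:.2f}x")
```

Over the full 16000 steps this schedule lowers the learning rate by well under 1.5×.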

```python
global_step = tf.Variable(0)
lr = tf.train.exponential_decay(
    lr0,
    global_step,
    decay_steps=lr_step,
    decay_rate=lr_decay,
    staircase=True)
```

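When using an exponentially decaying learning rate, remember to define global_step = tf.Variable(0). It is the step counter that tf.train.exponential_decay reads; if it never changes, the learning rate is never updated and stays at lr0.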

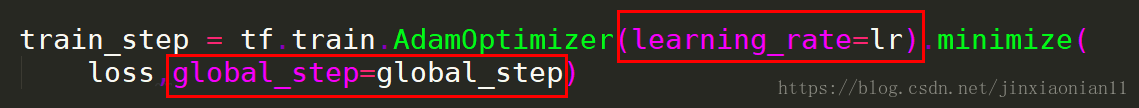

```python
train_step = tf.train.AdamOptimizer(learning_rate=lr).minimize(
    loss, global_step=global_step)
```

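The training op must be given both the learning rate (learning_rate=lr) and global_step; do not forget either one. Passing global_step to minimize() makes the optimizer increment it by one after each update, which is what drives the decay.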
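Why the counter must be incremented can be shown with a toy loop in plain Python. This is an analogy, not TensorFlow code: the function `train` and its values lr0=0.1, decay_rate=0.5, decay_steps=10 are hypothetical. The decayed rate is computed from the step counter, so if nothing increments the counter, the rate never decays.

```python
def train(num_steps, increment_global_step, lr0=0.1, decay_rate=0.5, decay_steps=10):
    """Toy loop returning the staircase-decayed learning rate used at each step."""
    global_step = 0
    used_lrs = []
    for _ in range(num_steps):
        lr = lr0 * decay_rate ** (global_step // decay_steps)
        used_lrs.append(lr)
        # (a real optimizer would update the weights with `lr` here)
        if increment_global_step:
            global_step += 1  # the role played by minimize(loss, global_step=global_step)
    return used_lrs


print(train(30, increment_global_step=True)[::10])
print(train(30, increment_global_step=False)[::10])
```

With the counter incremented the rate halves every 10 steps; without it, every step uses lr0.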

4. Summary

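Exponential learning-rate decay is a commonly used technique when tuning deep learning models. As a rule of thumb, a learning rate of 0.01 to 0.001 works well at the start of training, and by the end of training the learning rate should have decayed by a factor of 100 or more. Setting the hyperparameters according to this guideline tends to help model accuracy considerably.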
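To apply the 100× guideline to a staircase schedule, one can solve lr0 · decay_rate^(max_steps // decay_steps) = lr0 / 100 for decay_rate. A minimal sketch, assuming the max_steps=16000 and lr_step=500 values from section 3; the helper `decay_rate_for` is hypothetical.

```python
def decay_rate_for(total_factor, max_steps, decay_steps):
    """Hypothetical helper: staircase decay_rate that shrinks lr by total_factor over max_steps."""
    num_decays = max_steps // decay_steps
    # solve decay_rate ** num_decays == 1 / total_factor
    return (1.0 / total_factor) ** (1.0 / num_decays)


rate = decay_rate_for(100, max_steps=16000, decay_steps=500)
print(f"decay_rate = {rate:.4f}")
```

Under these assumptions, a decay_rate of roughly 0.866 applied every 500 steps reaches the 100× target by the end of training.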