Some Thoughts on Regularization and Norms in Machine Learning

This blog post is fantastic! I wanted to know why we should regularize and how to do it, and fortunately this article explains both.

Why do we want to regularize?

Generally speaking, regularization helps prevent overfitting and improves generalization. In other words, it reduces the test error and improves the model's performance on unseen data.

In this figure, the red line illustrates overfitting.

How can we address this problem from the point of view of a linear model?

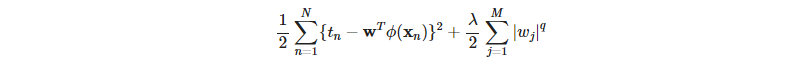

This is the loss function of a linear model, which we can regard as a sum of squared errors. When a regularization term is added, the loss function becomes the objective function:

objective function = loss function + regularization term
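A minimal pure-Python sketch of this objective for a linear model y = w · x. It assumes the common convention of halving both terms and weighting the penalty by a hypothetical parameter `lam` (λ), with exponent `q` on each weight; the function name `objective` is also just for illustration.

```python
def objective(w, X, t, lam, q=2):
    """Regularized sum-of-squares objective for a linear model y = w . x.

    Assumed convention: 0.5 * SSE + 0.5 * lam * sum(|w_j| ** q).
    """
    # Loss term: sum of squared errors between targets t_n and predictions w . x_n
    sse = sum((t_n - sum(w_j * x_j for w_j, x_j in zip(w, x_n))) ** 2
              for x_n, t_n in zip(X, t))
    # Regularization term: penalizes large weights (q = 2 is ridge, q = 1 is lasso)
    penalty = sum(abs(w_j) ** q for w_j in w)
    return 0.5 * sse + 0.5 * lam * penalty


# Perfect fit, so the objective equals the penalty alone: 0.5 * 1.0 * (1 + 4)
print(objective([1.0, 2.0], [[1, 0], [0, 1]], [1, 2], lam=1.0))  # 2.5
```

Setting `lam=0` recovers the plain sum-of-squares loss; a larger `lam` trades training fit for smaller weights.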

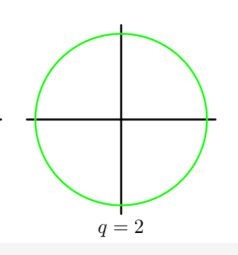

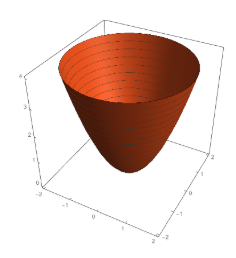

When q = 2, we get these figures:

q = 2 is a popular choice for two reasons: the squared penalty shrinks the weights, which reduces the complexity of the model, and it is differentiable everywhere (unlike q = 1, which has a kink at zero).
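Because the q = 2 penalty is differentiable everywhere, we can set the derivative of the objective to zero and solve for the weight directly. A minimal sketch, assuming a single-feature model t ≈ w·x with no bias term and the objective 0.5·Σ(t_n − w·x_n)² + 0.5·λ·w²; the helper name `ridge_1d` is hypothetical.

```python
def ridge_1d(x, t, lam):
    """Closed-form minimizer of 0.5*sum((t_n - w*x_n)**2) + 0.5*lam*w**2.

    The derivative -sum(x_n*(t_n - w*x_n)) + lam*w exists for every w,
    so setting it to zero gives w = sum(x_n*t_n) / (sum(x_n**2) + lam).
    """
    sxt = sum(xn * tn for xn, tn in zip(x, t))
    sxx = sum(xn * xn for xn in x)
    return sxt / (sxx + lam)


x = [1.0, 2.0, 3.0]
t = [2.0, 4.0, 6.0]
for lam in (0.0, 14.0, 42.0):
    # Larger lam shrinks the fitted weight toward zero: 2.0, 1.0, 0.5
    print(lam, ridge_1d(x, t, lam))
```

The shrinking weight is the "reduced complexity" described above: the stronger the penalty, the smaller the weight the model is allowed to use.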

About norms

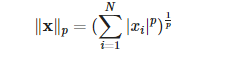

1. p-norm

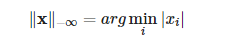

2. -∞-norm: the minimum absolute value in the vector (strictly speaking, not a true norm)


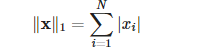

3. 1-norm: city-block (Manhattan) distance


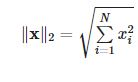

4. 2-norm: Euclidean distance


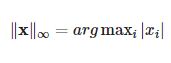

5. ∞-norm: the maximum absolute value in the vector

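The norms listed above can all be computed with one small helper. This is a minimal pure-Python sketch. The name `p_norm` is hypothetical, and using `math.inf` and `-math.inf` to select the ∞ and -∞ cases is our own convention, borrowed from how NumPy's `linalg.norm` treats its `ord` argument.

```python
import math


def p_norm(x, p):
    """Return (sum |x_i|**p) ** (1/p); p may also be math.inf or -math.inf."""
    abs_vals = [abs(v) for v in x]
    if p == math.inf:
        return max(abs_vals)  # inf-norm: largest absolute component
    if p == -math.inf:
        return min(abs_vals)  # -inf "norm": smallest absolute component
    if p <= 0:
        raise ValueError("p must be positive, or +/- math.inf")
    # For 0 < p < 1 this formula is not a true norm (triangle inequality fails)
    return sum(v ** p for v in abs_vals) ** (1.0 / p)


x = [3, -4]
for p in (1, 2, math.inf, -math.inf):
    # 1-norm 7.0 (city-block), 2-norm 5.0 (Euclidean), inf-norm 4, -inf "norm" 3
    print(p, p_norm(x, p))
```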