Kubernetes (k8s) deployment

Cluster plan

centos-test-ip-207-master 192.168.11.207

centos-test-ip-208 192.168.11.208

centos-test-ip-209 192.168.11.209

kubernetes 1.10.7

flannel flannel-v0.10.0-linux-amd64.tar

ETCD etcd-v3.3.8-linux-amd64.tar

CNI cni-plugins-amd64-v0.7.1

docker 18.03.1-ce

Package downloads

etcd:https://github.com/coreos/etcd/releases/

flannel:https://github.com/coreos/flannel/releases/

cni:https://github.com/containernetworking/plugins/releases

kubernetes:https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.10.md#v1107

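Note: the kubernetes 1.10 packages are also available here.

Link: https://pan.baidu.com/s/1_7EfOMlRkQSybEH_p6NtTw

Extraction code: 345b

Name resolution, disabling the firewall, disabling swap, and server time (all three nodes)

Name resolution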

vim /etc/hosts

192.168.11.207 centos-test-ip-207-master

192.168.11.208 centos-test-ip-208

192.168.11.209 centos-test-ip-209

Firewall
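The same entries can be added without an editor. The following is a minimal sketch, assuming the three nodes from the cluster plan; `render_hosts` and `add_hosts` are hypothetical helper names, and `add_hosts` only appends lines that are not already present, so it can be run repeatedly.

```shell
# Node inventory taken from the cluster plan (IP:hostname).
NODES="192.168.11.207:centos-test-ip-207-master
192.168.11.208:centos-test-ip-208
192.168.11.209:centos-test-ip-209"

# Print one "IP hostname" line per node.
render_hosts() {
  local entry
  for entry in $NODES; do
    printf '%s %s\n' "${entry%%:*}" "${entry#*:}"
  done
}

# Append only the entries missing from the given hosts file
# (hypothetical helper; the target defaults to /etc/hosts).
add_hosts() {
  local file="${1:-/etc/hosts}" line
  render_hosts | while IFS= read -r line; do
    grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done
}
```

Running `add_hosts` as root on each of the three machines would leave /etc/hosts with exactly one copy of each entry.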

systemctl stop firewalld

setenforce 0

Disable swap

swapoff -a

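vim /etc/fstab  # comment out the swap entry

Synchronize the server time zone  # time synchronization is optional

tzselect

Distribute the SSH public key  # from the master to the workers

ssh-keygen

ssh-copy-id

Install docker (all three nodes)

Remove any previously installed version

yum remove docker docker-common docker-selinux docker-engine

Install the driver packages that docker depends on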

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the yum repository  # pulling from the official repository is slow, so the Aliyun mirror is used

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

Choose a docker version to install

yum list docker-ce --showduplicates | sort -r

Install 18.03.1.ce

yum -y install docker-ce-18.03.1.ce

Start docker

systemctl start docker

Install the ETCD cluster

Run on all nodes

tar xvf etcd-v3.3.8-linux-amd64.tar.gz

cd etcd-v3.3.8-linux-amd64

cp etcd etcdctl /usr/bin

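mkdir -p /var/lib/etcd /etc/etcd  # create the required directories

etcd configuration files

The main files to edit are:

/usr/lib/systemd/system/etcd.service and /etc/etcd/etcd.conf

The leader/follower relationship among etcd members is not the same as the master/worker relationship among the kubernetes nodes.

The etcd cluster elects its own leader during startup and while running.

The three members are therefore named etcd-i, etcd-ii, and etcd-iii to reflect that they are peers.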
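Because the three etcd.conf files differ only in the member name and IP, they can be generated from one template. This is a sketch under that assumption, not part of etcd itself; `initial_cluster` and `render_etcd_conf` are hypothetical helper names, and the values are the ones used in this walkthrough.

```shell
# Member name -> IP, as used in this walkthrough.
ETCD_MEMBERS="etcd-i=192.168.11.207 etcd-ii=192.168.11.208 etcd-iii=192.168.11.209"

# Build the ETCD_INITIAL_CLUSTER value shared by all members.
initial_cluster() {
  local m out=""
  for m in $ETCD_MEMBERS; do
    out="${out:+$out,}${m%%=*}=http://${m#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Render /etc/etcd/etcd.conf for one member (hypothetical helper).
render_etcd_conf() {
  local name="$1" ip="$2"
  cat <<EOF
ETCD_NAME=$name
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://$ip:2379,http://127.0.0.1:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-token"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379,http://127.0.0.1:2379"
EOF
}

# Example: print the file for the second member.
render_etcd_conf etcd-ii 192.168.11.208
```

On each node the output would be redirected to /etc/etcd/etcd.conf with that node's own name and IP.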

207-master

cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

[Install]

WantedBy=multi-user.target

cat /etc/etcd/etcd.conf

# [member]

# Member name

ETCD_NAME=etcd-i

# Data directory

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# URLs to listen on for traffic from other etcd members

ETCD_LISTEN_PEER_URLS="http://192.168.11.207:2380"

# URLs to listen on for client traffic

ETCD_LISTEN_CLIENT_URLS="http://192.168.11.207:2379,http://127.0.0.1:2379"

#[cluster]

# Peer URLs advertised to the other etcd members

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.11.207:2380"

# Initial list of cluster members

ETCD_INITIAL_CLUSTER="etcd-i=http://192.168.11.207:2380,etcd-ii=http://192.168.11.208:2380,etcd-iii=http://192.168.11.209:2380"

# Initial cluster state; "new" creates a new cluster

ETCD_INITIAL_CLUSTER_STATE="new"

# Initial cluster token

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-token"

# Client URLs advertised to clients

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.11.207:2379,http://127.0.0.1:2379"

208

cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

[Install]

WantedBy=multi-user.target

cat /etc/etcd/etcd.conf

# [member]

# Member name

ETCD_NAME=etcd-ii

# Data directory

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# URLs to listen on for traffic from other etcd members

ETCD_LISTEN_PEER_URLS="http://192.168.11.208:2380"

# URLs to listen on for client traffic

ETCD_LISTEN_CLIENT_URLS="http://192.168.11.208:2379,http://127.0.0.1:2379"

#[cluster]

# Peer URLs advertised to the other etcd members

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.11.208:2380"

# Initial list of cluster members

ETCD_INITIAL_CLUSTER="etcd-i=http://192.168.11.207:2380,etcd-ii=http://192.168.11.208:2380,etcd-iii=http://192.168.11.209:2380"

# Initial cluster state; "new" creates a new cluster

ETCD_INITIAL_CLUSTER_STATE="new"

# Initial cluster token

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-token"

# Client URLs advertised to clients

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.11.208:2379,http://127.0.0.1:2379"

209

cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

[Install]

WantedBy=multi-user.target

cat /etc/etcd/etcd.conf

# [member]

# Member name

ETCD_NAME=etcd-iii

# Data directory

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# URLs to listen on for traffic from other etcd members

ETCD_LISTEN_PEER_URLS="http://192.168.11.209:2380"

# URLs to listen on for client traffic

ETCD_LISTEN_CLIENT_URLS="http://192.168.11.209:2379,http://127.0.0.1:2379"

#[cluster]

# Peer URLs advertised to the other etcd members

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.11.209:2380"

# Initial list of cluster members

ETCD_INITIAL_CLUSTER="etcd-i=http://192.168.11.207:2380,etcd-ii=http://192.168.11.208:2380,etcd-iii=http://192.168.11.209:2380"

# Initial cluster state; "new" creates a new cluster

ETCD_INITIAL_CLUSTER_STATE="new"

# Initial cluster token

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-token"

# Client URLs advertised to clients

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.11.209:2379,http://127.0.0.1:2379"

Start the ETCD cluster

Run on the master first, then on the workers

systemctl daemon-reload  # reload the unit files

systemctl start etcd.service

Check the cluster information

etcdctl member list

e8bd2d4d9a7cba8: name=etcd-ii peerURLs=http://192.168.11.208:2380 clientURLs=http://127.0.0.1:2379,http://192.168.11.208:2379 isLeader=true

50a675761b915629: name=etcd-i peerURLs=http://192.168.11.207:2380 clientURLs=http://127.0.0.1:2379,http://192.168.11.207:2379 isLeader=false

9a891df60a11686b: name=etcd-iii peerURLs=http://192.168.11.209:2380 clientURLs=http://127.0.0.1:2379,http://192.168.11.209:2379 isLeader=false

etcdctl cluster-health

member e8bd2d4d9a7cba8 is healthy: got healthy result from http://127.0.0.1:2379

member 50a675761b915629 is healthy: got healthy result from http://127.0.0.1:2379

member 9a891df60a11686b is healthy: got healthy result from http://127.0.0.1:2379

cluster is healthy

Install flannel

Run on all nodes

mkdir -p /opt/flannel/bin/

tar xvf flannel-v0.10.0-linux-amd64.tar.gz -C /opt/flannel/bin/

cat /usr/lib/systemd/system/flannel.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/opt/flannel/bin/flanneld -etcd-endpoints=http://192.168.11.207:2379,http://192.168.11.208:2379,http://192.168.11.209:2379 -etcd-prefix=coreos.com/network

ExecStartPost=/opt/flannel/bin/mk-docker-opts.sh -d /etc/docker/flannel_net.env -c

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

Set the flannel network configuration (subnet allocation; the ranges can be changed)  # run on the master only

[root@centos-test-ip-207-master ~]# etcdctl mk /coreos.com/network/config '{"Network":"172.18.0.0/16", "SubnetMin": "172.18.1.0", "SubnetMax": "172.18.254.0", "Backend": {"Type": "vxlan"}}'
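As a rough check of how many nodes this range can serve, the sketch below counts the per-host subnets between SubnetMin and SubnetMax. It assumes flannel's default SubnetLen of 24, which the configuration above does not set explicitly; `ip_to_int` and `subnet_count` are illustrative helper names, not part of flannel.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Count the per-host subnets between SubnetMin ($1) and SubnetMax ($2).
# The prefix length ($3) defaults to 24, flannel's default SubnetLen
# (an assumption; it is not set in the config above).
subnet_count() {
  local min max len="${3:-24}"
  min=$(ip_to_int "$1")
  max=$(ip_to_int "$2")
  echo $(( (max - min) / (1 << (32 - len)) + 1 ))
}

subnet_count 172.18.1.0 172.18.254.0   # prints 254
```

With these values there is room for 254 per-host /24 subnets, far more than the three machines used here.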

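To change the network range, delete the key with etcdctl rm /coreos.com/network/config and then run the configuration command again.

Download flannel

Run on all nodes

The flannel service depends on the flannel image, so download the image first. Run the following commands to pull it from Aliyun and create the image tag.

docker pull registry.cn-beijing.aliyuncs.com/k8s_images/flannel:v0.10.0-amd64

docker tag registry.cn-beijing.aliyuncs.com/k8s_images/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0

Note:

Configure docker

The flannel unit file contains this line:

ExecStartPost=/opt/flannel/bin/mk-docker-opts.sh -d /etc/docker/flannel_net.env -c

After flannel starts, it runs mk-docker-opts.sh, which generates the file /etc/docker/flannel_net.env.

flannel changes the docker network, and flannel_net.env holds the docker options that flannel generates, so the docker unit file has to be modified as well.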

cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service flannel.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

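# modified: pass the options generated by flannel (the -c switch of mk-docker-opts.sh writes them to DOCKER_OPTS)

ExecStart=/usr/bin/dockerd $DOCKER_OPTS

# added

EnvironmentFile=/etc/docker/flannel_net.env

ExecReload=/bin/kill -s HUP $MAINPID

# added

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT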

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

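WantedBy=multi-user.target

Note:

After: start docker only after flannel has started

EnvironmentFile: the file with docker's startup options, generated by flannel

ExecStart: adds the docker startup options

ExecStartPost: runs after docker starts and sets the host's iptables FORWARD policy to ACCEPT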
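To make the generated file less opaque, here is a simplified approximation of what mk-docker-opts.sh derives from flannel's subnet file when called with -c. It is a sketch, not the real script, which handles more options; `render_docker_opts` is a hypothetical name, and the variable names FLANNEL_SUBNET, FLANNEL_MTU, and FLANNEL_IPMASQ are assumed to be present in the subnet file that flanneld writes.

```shell
# Simplified approximation of mk-docker-opts.sh -c: turn flannel's
# subnet file into a single DOCKER_OPTS line for dockerd.
render_docker_opts() {
  local subnet_env="$1"
  # Load the FLANNEL_* variables in a subshell so they do not leak.
  (
    . "$subnet_env"
    # docker should masquerade only when flannel does not do it itself.
    ipmasq=true
    [ "$FLANNEL_IPMASQ" = "true" ] && ipmasq=false
    printf 'DOCKER_OPTS=" --bip=%s --ip-masq=%s --mtu=%s"\n' \
      "$FLANNEL_SUBNET" "$ipmasq" "$FLANNEL_MTU"
  )
}
```

For example, with an illustrative subnet file containing FLANNEL_SUBNET=172.18.30.1/24 and FLANNEL_MTU=1450, the function prints a DOCKER_OPTS line carrying --bip=172.18.30.1/24 and --mtu=1450, which is what dockerd needs to place its bridge inside the flannel subnet.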

Start flannel

Run on all nodes

systemctl daemon-reload

systemctl start flannel.service

systemctl restart docker.service

Install CNI

Run on all nodes

mkdir -p /opt/cni/bin /etc/cni/net.d

tar xvf cni-plugins-amd64-v0.7.1.tgz -C /opt/cni/bin

cat /etc/cni/net.d/10-flannel.conflist

{

  "name":"cni0",

  "cniVersion":"0.3.1",

  "plugins":[

    {

      "type":"flannel",

      "delegate":{

        "forceAddress":true,

        "isDefaultGateway":true

      }

    },

    {

      "type":"portmap",

      "capabilities":{

        "portMappings":true

      }

    }

  ]

}

Install the K8S cluster

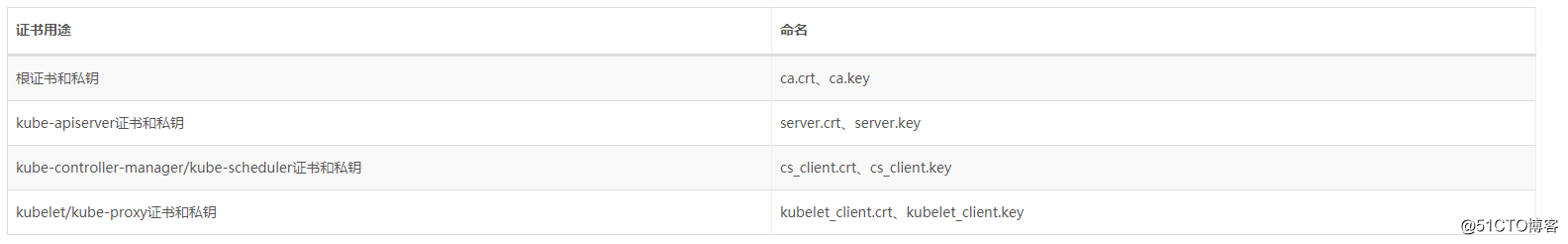

CA certificates

Run on all nodes

mkdir -p /etc/kubernetes/ca

207

cd /etc/kubernetes/ca/

Generate the CA certificate and private key

[root@centos-test-ip-207-master ca]# openssl genrsa -out ca.key 2048

[root@centos-test-ip-207-master ca]# openssl req -x509 -new -nodes -key ca.key -subj "/CN=k8s" -days 5000 -out ca.crt

Generate the kube-apiserver certificate and private key

[root@centos-test-ip-207-master ca]# cat master_ssl.conf

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = k8s

IP.1 = 172.18.0.1

IP.2 = 192.168.11.207

[root@centos-test-ip-207-master ca]# openssl genrsa -out apiserver-key.pem 2048

[root@centos-test-ip-207-master ca]# openssl req -new -key apiserver-key.pem -out apiserver.csr -subj "/CN=k8s" -config master_ssl.conf

[root@centos-test-ip-207-master ca]# openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out apiserver.pem -days 365 -extensions v3_req -extfile master_ssl.conf

Generate the kube-controller-manager/kube-scheduler certificate and private key

[root@centos-test-ip-207-master ca]# openssl genrsa -out cs_client.key 2048

[root@centos-test-ip-207-master ca]# openssl req -new -key cs_client.key -subj "/CN=k8s" -out cs_client.csr

[root@centos-test-ip-207-master ca]# openssl x509 -req -in cs_client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out cs_client.crt -days 5000

Copy the certificates to 208 and 209

[root@centos-test-ip-207-master ca]# scp ca.crt ca.key centos-test-ip-208:/etc/kubernetes/ca/

[root@centos-test-ip-207-master ca]# scp ca.crt ca.key centos-test-ip-209:/etc/kubernetes/ca/

Certificate setup on 208

/CN must be the node's own IP

cd /etc/kubernetes/ca/

[root@centos-test-ip-208 ca]# openssl genrsa -out kubelet_client.key 2048

[root@centos-test-ip-208 ca]# openssl req -new -key kubelet_client.key -subj "/CN=192.168.11.208" -out kubelet_client.csr

[root@centos-test-ip-208 ca]# openssl x509 -req -in kubelet_client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kubelet_client.crt -days 5000

Certificate setup on 209

/CN must be the node's own IP

cd /etc/kubernetes/ca/

[root@centos-test-ip-209 ca]# openssl genrsa -out kubelet_client.key 2048

[root@centos-test-ip-209 ca]# openssl req -new -key kubelet_client.key -subj "/CN=192.168.11.209" -out kubelet_client.csr

[root@centos-test-ip-209 ca]# openssl x509 -req -in kubelet_client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kubelet_client.crt -days 5000

Install k8s

207

[root@centos-test-ip-207-master ~]# tar xvf kubernetes-server-linux-amd64.tar.gz -C /opt

[root@centos-test-ip-207-master ~]# cd /opt/kubernetes/server/bin

[root@centos-test-ip-207-master bin]# cp -a `ls | grep -Ev '\.tar$|_tag$'` /usr/bin

[root@centos-test-ip-207-master bin]# mkdir -p /var/log/kubernetes

Configure kube-apiserver

[root@centos-test-ip-207-master bin]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=/etc/kubernetes/apiserver.conf

ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Configure apiserver.conf

[root@centos-test-ip-207-master bin]# cat /etc/kubernetes/apiserver.conf

KUBE_API_ARGS="\

--storage-backend=etcd3 \

--etcd-servers=http://192.168.11.207:2379,http://192.168.11.208:2379,http://192.168.11.209:2379 \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--service-cluster-ip-range=172.18.0.0/16 \

--service-node-port-range=1-65535 \

--kubelet-port=10250 \

--advertise-address=192.168.11.207 \

--allow-privileged=false \

--anonymous-auth=false \

--client-ca-file=/etc/kubernetes/ca/ca.crt \

--tls-private-key-file=/etc/kubernetes/ca/apiserver-key.pem \

--tls-cert-file=/etc/kubernetes/ca/apiserver.pem \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,NamespaceExists,SecurityContextDeny,ServiceAccount,DefaultStorageClass,ResourceQuota \

--logtostderr=true \

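--log-dir=/var/log/kubernetes \

--v=2"

Note:

# Explanation

--etcd-servers  # connect to the etcd cluster

--secure-port  # serve HTTPS on the secure port 6443

--client-ca-file, --tls-private-key-file, --tls-cert-file  # CA certificate settings

--enable-admission-plugins  # enable the admission control plugins

--anonymous-auth=false  # reject anonymous requests (true would accept them); set to false here for dashboard access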
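A mistyped path in a file like this is easy to miss, so it can help to read individual flags back out and check them. The sketch below assumes the one-flag-per-line layout shown above; `get_flag` and `check_paths` are hypothetical helper names, not kubernetes tooling.

```shell
# Print the value of one --flag from an args file such as
# /etc/kubernetes/apiserver.conf (hypothetical helper).
get_flag() {
  local file="$1" flag="$2"
  sed -n 's/^.*--'"$flag"'=\([^ "\\]*\).*$/\1/p' "$file" | head -n 1
}

# Report certificate and key files that are referenced but do not exist.
check_paths() {
  local file="$1" flag path rc=0
  for flag in client-ca-file tls-private-key-file tls-cert-file; do
    path=$(get_flag "$file" "$flag")
    [ -e "$path" ] || { echo "missing: --$flag=$path"; rc=1; }
  done
  return $rc
}
```

For example, `get_flag /etc/kubernetes/apiserver.conf log-dir` prints the configured log directory, and `check_paths /etc/kubernetes/apiserver.conf` lists any certificate file that has not been created yet.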

Configure kube-controller-manager

[root@centos-test-ip-207-master bin]# cat /etc/kubernetes/kube-controller-config.yaml

apiVersion: v1

kind: Config

users:

- name: controller

  user:

    client-certificate: /etc/kubernetes/ca/cs_client.crt

    client-key: /etc/kubernetes/ca/cs_client.key

clusters:

- name: local

  cluster:

    certificate-authority: /etc/kubernetes/ca/ca.crt

contexts:

- context:

    cluster: local

    user: controller

  name: default-context

current-context: default-context

Configure kube-controller-manager.service

[root@centos-test-ip-207-master bin]# cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

EnvironmentFile=/etc/kubernetes/controller-manager.conf

ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Configure controller-manager.conf

[root@centos-test-ip-207-master bin]# cat /etc/kubernetes/controller-manager.conf

KUBE_CONTROLLER_MANAGER_ARGS="\

--master=https://192.168.11.207:6443 \

--service-account-private-key-file=/etc/kubernetes/ca/apiserver-key.pem \

--root-ca-file=/etc/kubernetes/ca/ca.crt \

--cluster-signing-cert-file=/etc/kubernetes/ca/ca.crt \

--cluster-signing-key-file=/etc/kubernetes/ca/ca.key \

--kubeconfig=/etc/kubernetes/kube-controller-config.yaml \

--logtostderr=true \

--log-dir=/var/log/kubernetes \

--v=2"

Note:

master: the address of the master (API server) to connect to

service-account-private-key-file, root-ca-file, cluster-signing-cert-file, cluster-signing-key-file: CA certificate settings

kubeconfig: path to the kubeconfig file
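The kubeconfig for kube-controller-manager and the one for kube-scheduler differ only in the user name, so both can be produced from one template. This is a minimal sketch using the certificate paths from this walkthrough; `render_kubeconfig` is a hypothetical helper name. It also shows the YAML indentation that the files need.

```shell
# Render a client kubeconfig that differs only in the user name
# (hypothetical helper; paths are the ones used in this walkthrough).
render_kubeconfig() {
  local user="$1"
  cat <<EOF
apiVersion: v1
kind: Config
users:
- name: $user
  user:
    client-certificate: /etc/kubernetes/ca/cs_client.crt
    client-key: /etc/kubernetes/ca/cs_client.key
clusters:
- name: local
  cluster:
    certificate-authority: /etc/kubernetes/ca/ca.crt
contexts:
- context:
    cluster: local
    user: $user
  name: default-context
current-context: default-context
EOF
}

# Example: print the controller-manager variant.
render_kubeconfig controller
```

Redirecting `render_kubeconfig controller` and `render_kubeconfig scheduler` to the two file names used in this section would keep the files consistent with each other.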

Configure kube-scheduler

[root@centos-test-ip-207-master bin]# cat /etc/kubernetes/kube-scheduler-config.yaml

apiVersion: v1

kind: Config

users:

- name: scheduler

  user:

    client-certificate: /etc/kubernetes/ca/cs_client.crt

    client-key: /etc/kubernetes/ca/cs_client.key

clusters:

- name: local

  cluster:

    certificate-authority: /etc/kubernetes/ca/ca.crt

contexts:

- context:

    cluster: local

    user: scheduler

  name: default-context

current-context: default-context

[root@centos-test-ip-207-master bin]# cat /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

User=root

EnvironmentFile=/etc/kubernetes/scheduler.conf

ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@centos-test-ip-207-master bin]# cat /etc/kubernetes/scheduler.conf

KUBE_SCHEDULER_ARGS="\

--master=https://192.168.11.207:6443 \

--kubeconfig=/etc/kubernetes/kube-scheduler-config.yaml \

--logtostderr=true \

--log-dir=/var/log/kubernetes \

--v=2"

Start the master

systemctl daemon-reload

systemctl start kube-apiserver.service

systemctl start kube-controller-manager.service

systemctl start kube-scheduler.service

Check the logs

journalctl -xeu kube-apiserver --no-pager

journalctl -xeu kube-controller-manager --no-pager

journalctl -xeu kube-scheduler --no-pager

# add -f to follow the log in real time

Deploy K8S on the worker nodes

Run on all worker nodes

tar -zxvf kubernetes-server-linux-amd64.tar.gz -C /opt

cd /opt/kubernetes/server/bin

cp -a kubectl kubelet kube-proxy /usr/bin/

mkdir -p /var/log/kubernetes

cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# These kernel parameters make bridged traffic pass through the iptables filter rules. They can be skipped if not needed.

sysctl -p /etc/sysctl.d/k8s.conf  # apply the settings

208: configure kubelet

[root@centos-test-ip-208 ~]# cat /etc/kubernetes/kubelet-config.yaml

apiVersion: v1

kind: Config

users:

- name: kubelet

  user:

    client-certificate: /etc/kubernetes/ca/kubelet_client.crt

    client-key: /etc/kubernetes/ca/kubelet_client.key

clusters:

- cluster:

    certificate-authority: /etc/kubernetes/ca/ca.crt

    server: https://192.168.11.207:6443

  name: local

contexts:

- context:

    cluster: local

    user: kubelet

  name: default-context

current-context: default-context

preferences: {}

[root@centos-test-ip-208 ~]# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/kubelet.conf

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@centos-test-ip-208 ~]# cat /etc/kubernetes/kubelet.conf

KUBELET_ARGS="\

--kubeconfig=/etc/kubernetes/kubelet-config.yaml \

--pod-infra-container-image=registry.aliyuncs.com/archon/pause-amd64:3.0 \

--hostname-override=192.168.11.208 \

--network-plugin=cni \

--cni-conf-dir=/etc/cni/net.d \

--cni-bin-dir=/opt/cni/bin \

--logtostderr=true \

--log-dir=/var/log/kubernetes \

--v=2"

Note:

--hostname-override  # the node name; using the node's IP is recommended

#--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0 \

--pod-infra-container-image  # the base (pause) image for pods; the default is hosted by Google, so use a mirror inside China or go through a proxy

Alternatively, pull the image locally and retag it:

docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

docker tag registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0

--kubeconfig  # path to the kubeconfig file
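Since kubelet.conf is the same on every worker apart from --hostname-override, it can be generated per node. The following is a sketch with the values from this walkthrough; `render_kubelet_conf` is a hypothetical helper name.

```shell
# Render /etc/kubernetes/kubelet.conf for one worker; only the node IP
# passed as $1 changes between nodes (hypothetical helper).
render_kubelet_conf() {
  local node_ip="$1"
  cat <<EOF
KUBELET_ARGS="\\
--kubeconfig=/etc/kubernetes/kubelet-config.yaml \\
--pod-infra-container-image=registry.aliyuncs.com/archon/pause-amd64:3.0 \\
--hostname-override=$node_ip \\
--network-plugin=cni \\
--cni-conf-dir=/etc/cni/net.d \\
--cni-bin-dir=/opt/cni/bin \\
--logtostderr=true \\
--log-dir=/var/log/kubernetes \\
--v=2"
EOF
}

# Example: print the file for node 208.
render_kubelet_conf 192.168.11.208
```

On each worker the output would be redirected to /etc/kubernetes/kubelet.conf with that worker's own IP.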

Configure kube-proxy

[root@centos-test-ip-208 ~]# cat /etc/kubernetes/proxy-config.yaml

apiVersion: v1

kind: Config

users:

- name: proxy

  user:

    client-certificate: /etc/kubernetes/ca/kubelet_client.crt

    client-key: /etc/kubernetes/ca/kubelet_client.key

clusters:

- cluster:

    certificate-authority: /etc/kubernetes/ca/ca.crt

    server: https://192.168.11.207:6443

  name: local

contexts:

- context:

    cluster: local

    user: proxy

  name: default-context

current-context: default-context

preferences: {}

[root@centos-test-ip-208 ~]# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

Requires=network.service

[Service]

EnvironmentFile=/etc/kubernetes/proxy.conf

ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@centos-test-ip-208 ~]# cat /etc/kubernetes/proxy.conf

KUBE_PROXY_ARGS="\

--master=https://192.168.11.207:6443 \

--hostname-override=192.168.11.208 \

--kubeconfig=/etc/kubernetes/proxy-config.yaml \

--logtostderr=true \

--log-dir=/var/log/kubernetes \

--v=2"

209: configure kubelet

[root@centos-test-ip-209 ~]# cat /etc/kubernetes/kubelet-config.yaml

apiVersion: v1

kind: Config

users:

- name: kubelet

  user:

    client-certificate: /etc/kubernetes/ca/kubelet_client.crt

    client-key: /etc/kubernetes/ca/kubelet_client.key

clusters:

- cluster:

    certificate-authority: /etc/kubernetes/ca/ca.crt

    server: https://192.168.11.207:6443

  name: local

contexts:

- context:

    cluster: local

    user: kubelet

  name: default-context

current-context: default-context

preferences: {}

[root@centos-test-ip-209 ~]# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/kubelet.conf

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@centos-test-ip-209 ~]# cat /etc/kubernetes/kubelet.conf

KUBELET_ARGS="\

--kubeconfig=/etc/kubernetes/kubelet-config.yaml \

--pod-infra-container-image=registry.aliyuncs.com/archon/pause-amd64:3.0 \

--hostname-override=192.168.11.209 \

--network-plugin=cni \

--cni-conf-dir=/etc/cni/net.d \

--cni-bin-dir=/opt/cni/bin \

--logtostderr=true \

--log-dir=/var/log/kubernetes \

--v=2"

Note:

--hostname-override  # the node name; using the node's IP is recommended

#--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0 \

--pod-infra-container-image  # the base (pause) image for pods; the default is hosted by Google, so use a mirror inside China or go through a proxy

Alternatively, pull the image locally and retag it:

docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

docker tag registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0

--kubeconfig  # path to the kubeconfig file

Configure kube-proxy

[root@centos-test-ip-209 ~]# cat /etc/kubernetes/proxy-config.yaml

apiVersion: v1

kind: Config

users:

- name: proxy

  user:

    client-certificate: /etc/kubernetes/ca/kubelet_client.crt

    client-key: /etc/kubernetes/ca/kubelet_client.key

clusters:

- cluster:

    certificate-authority: /etc/kubernetes/ca/ca.crt

    server: https://192.168.11.207:6443

  name: local

contexts:

- context:

    cluster: local

    user: proxy

  name: default-context

current-context: default-context

preferences: {}

[root@centos-test-ip-209 ~]# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

Requires=network.service

[Service]

EnvironmentFile=/etc/kubernetes/proxy.conf

ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@centos-test-ip-209 ~]# cat /etc/kubernetes/proxy.conf

KUBE_PROXY_ARGS="\

--master=https://192.168.11.207:6443 \

--hostname-override=192.168.11.209 \

--kubeconfig=/etc/kubernetes/proxy-config.yaml \

--logtostderr=true \

--log-dir=/var/log/kubernetes \

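--v=2"

Note:

--hostname-override  # the node name; it must match the kubelet setting, so if kubelet sets it, kube-proxy must set it too

--master  # the address of the master to connect to

--kubeconfig  # path to the kubeconfig file

Start the nodes and check the logs  # note: the swap partition must be disabled

Run on all worker nodes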
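Because a mismatch between the two --hostname-override values is easy to introduce when copying files between nodes, a small check can compare them before the services are started. This is a sketch; `hostname_override` and `check_node_name` are hypothetical helper names, and the default paths are the ones used in this walkthrough.

```shell
# Extract the --hostname-override value from an args file.
hostname_override() {
  sed -n 's/^.*--hostname-override=\([^ "\\]*\).*$/\1/p' "$1" | head -n 1
}

# Succeed only if kubelet and kube-proxy use the same node name.
check_node_name() {
  local kubelet_conf="${1:-/etc/kubernetes/kubelet.conf}"
  local proxy_conf="${2:-/etc/kubernetes/proxy.conf}"
  local k p
  k=$(hostname_override "$kubelet_conf")
  p=$(hostname_override "$proxy_conf")
  if [ -n "$k" ] && [ "$k" = "$p" ]; then
    echo "ok: $k"
  else
    echo "mismatch: kubelet='$k' kube-proxy='$p'"
    return 1
  fi
}
```

Running `check_node_name` with no arguments on a worker compares the two files under /etc/kubernetes and returns a non-zero status when they disagree.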

systemctl daemon-reload

systemctl start kubelet.service

systemctl start kube-proxy.service

journalctl -xeu kubelet --no-pager

journalctl -xeu kube-proxy --no-pager

# add -f to follow the log in real time

Check the nodes from the master

[root@centos-test-ip-207-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.11.208 Ready <none> 1d v1.10.7

192.168.11.209 Ready <none> 1d v1.10.7

Cluster test

Create the nginx test files (master)

[root@centos-test-ip-207-master bin]# cat nginx-rc.yaml

apiVersion: v1

kind: ReplicationController

metadata:

  name: nginx-rc

  labels:

    name: nginx-rc

spec:

  replicas: 2

  selector:

    name: nginx-pod

  template:

    metadata:

      labels:

        name: nginx-pod

    spec:

      containers:

      - name: nginx

        image: nginx

        imagePullPolicy: IfNotPresent

        ports:

        - containerPort: 80

[root@centos-test-ip-207-master bin]# cat nginx-svc.yaml

apiVersion: v1

kind: Service

metadata:

  name: nginx-service

  labels:

    name: nginx-service

spec:

  type: NodePort

  ports:

  - port: 80

    protocol: TCP

    targetPort: 80

    nodePort: 30081

  selector:

    name: nginx-pod

Start

master(207)

kubectl create -f nginx-rc.yaml

kubectl create -f nginx-svc.yaml

# Check that the pods were created

[root@centos-test-ip-207-master bin]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-rc-d9kkc 1/1 Running 0 1d 172.18.30.2 192.168.11.209

nginx-rc-l9ctn 1/1 Running 0 1d 172.18.101.2 192.168.11.208

Note: open http://<node IP>:30081/ in a browser; if the nginx welcome page appears, the configuration is complete.
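The same check can be made from a shell instead of a browser. The sketch below assumes the two worker IPs and the nodePort 30081 used above; `nodeport_urls` and `check_nodeport` are hypothetical helper names, and `check_nodeport` needs curl and a running cluster.

```shell
# Worker IPs and NodePort as configured in this walkthrough.
NODE_IPS="192.168.11.208 192.168.11.209"
NODE_PORT=30081

# Print the URL to test on each node.
nodeport_urls() {
  local ip
  for ip in $NODE_IPS; do
    printf 'http://%s:%s/\n' "$ip" "$NODE_PORT"
  done
}

# Request every URL and report the HTTP status code
# (requires curl and a running cluster; not called here).
check_nodeport() {
  local url
  nodeport_urls | while IFS= read -r url; do
    printf '%s -> %s\n' "$url" \
      "$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")"
  done
}

nodeport_urls
```

A status of 200 from every node would indicate that kube-proxy is forwarding the NodePort to the nginx pods.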

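Delete the service and the nginx deployment  # if a configuration file was wrong, delete with the commands below and start over

kubectl delete -f nginx-svc.yaml

kubectl delete -f nginx-rc.yaml

Download and deploy the web UI (master)

Run on the master (207)

Download the dashboard yaml

wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

Edit the file kubernetes-dashboard.yaml

Change the image line: the default registry is blocked and cannot be reached from mainland China.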

#image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0

image: mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.8.3

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kube-system

spec:

  type: NodePort  # added: type: NodePort

  ports:

  - port: 443

    targetPort: 8443

    nodePort: 30000  # added: nodePort: 30000

  selector:

    k8s-app: kubernetes-dashboard

Create the access control yaml

dashboard-admin.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

  name: kubernetes-dashboard

  labels:

    k8s-app: kubernetes-dashboard

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: kubernetes-dashboard

  namespace: kube-system

Create and check

kubectl create -f kubernetes-dashboard.yaml

kubectl create -f dashboard-admin.yaml[[email protected] ~]# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

default nginx-rc-d9kkc 1/1 Running 0 1d 172.18.30.2 192.168.11.209

default nginx-rc-l9ctn 1/1 Running 0 1d 172.18.101.2 192.168.11.208

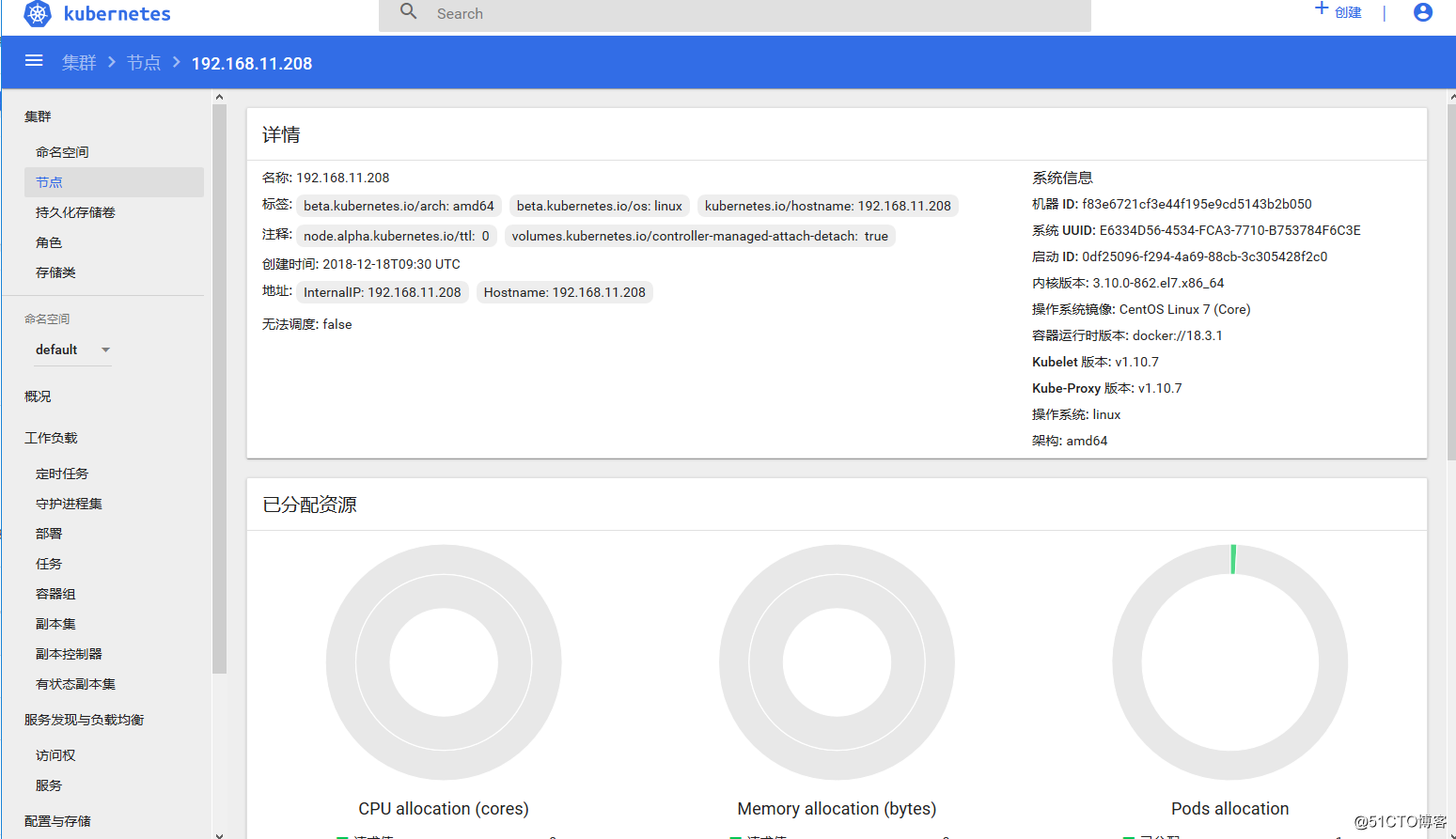

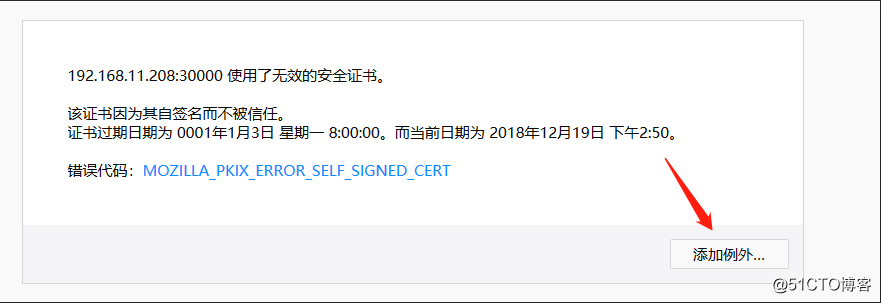

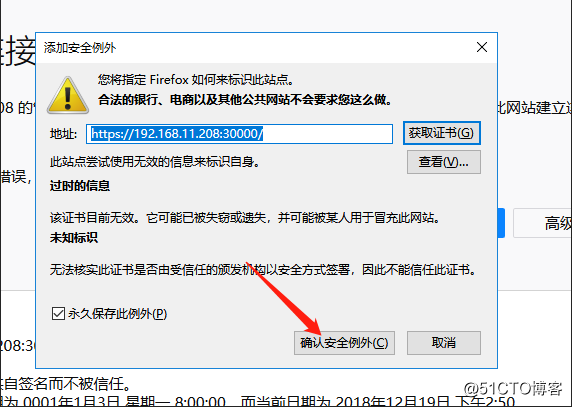

kube-system kubernetes-dashboard-66c9d98865-qgbgq 1/1 Running 0 20h 172.18.30.9 192.168.11.209訪問 # 火狐訪問 google 出現不了祕鑰介面

Note: access over HTTPS.

Open https://<node IP>:<configured nodePort> directly.

The page asks you to log in; we log in with a token.

[root@centos-test-ip-207-master ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') | grep token

Name: default-token-t8hbl

Type: kubernetes.io/service-account-token

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9..(many more characters)

# Copy the token string into the login page.
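To avoid copying the label and padding along with the token, the value can be cut out of the describe output. This is a small sketch; `extract_token` is a hypothetical helper name that reads `kubectl describe secret` output on standard input.

```shell
# Print only the token value from `kubectl describe secret` output
# supplied on stdin (hypothetical helper).
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}
```

It would be used as `kubectl -n kube-system describe secret <secret name> | extract_token`, where the secret name is the one shown in the Name: line above.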