Debugging Hadoop Programs in IDEA

阿新 • Published: 2018-12-25

1. Extract Hadoop to any directory

For example: D:\soft\dev\hadoop-2.7.2

2. Set the environment variables

HADOOP_HOME: D:\soft\dev\hadoop-2.7.2

HADOOP_BIN_PATH: %HADOOP_HOME%\bin

HADOOP_PREFIX: %HADOOP_HOME%

Append %HADOOP_HOME%\bin;%HADOOP_HOME%\sbin; to the end of Path.


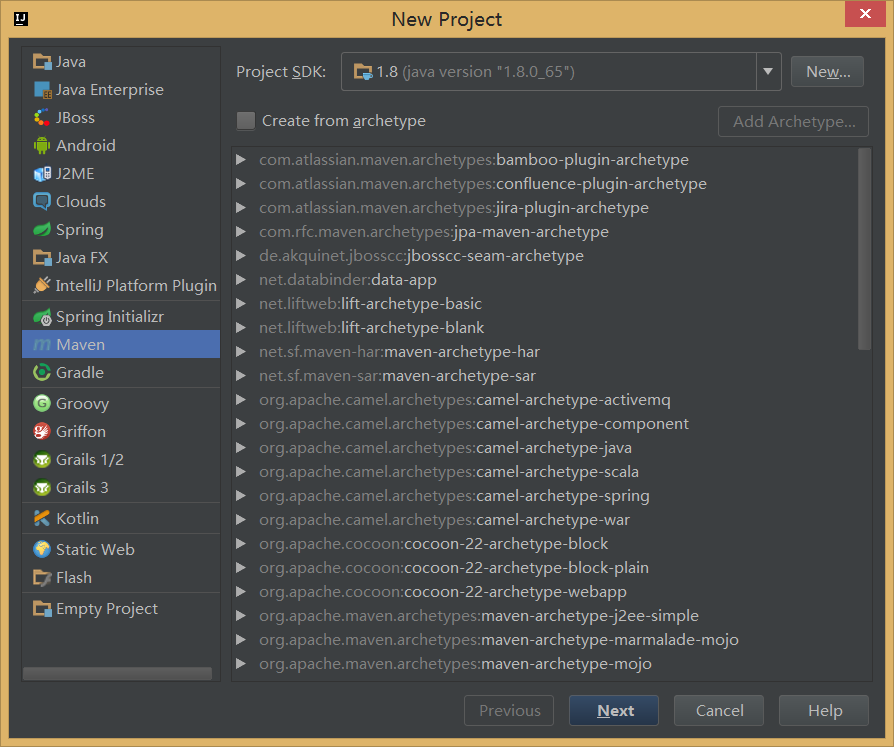

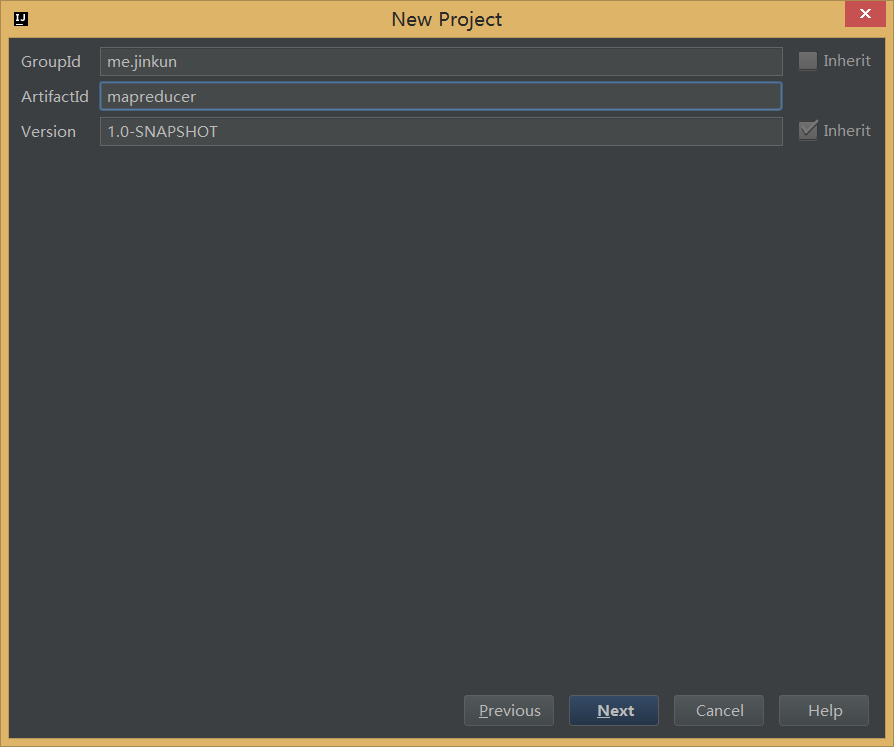

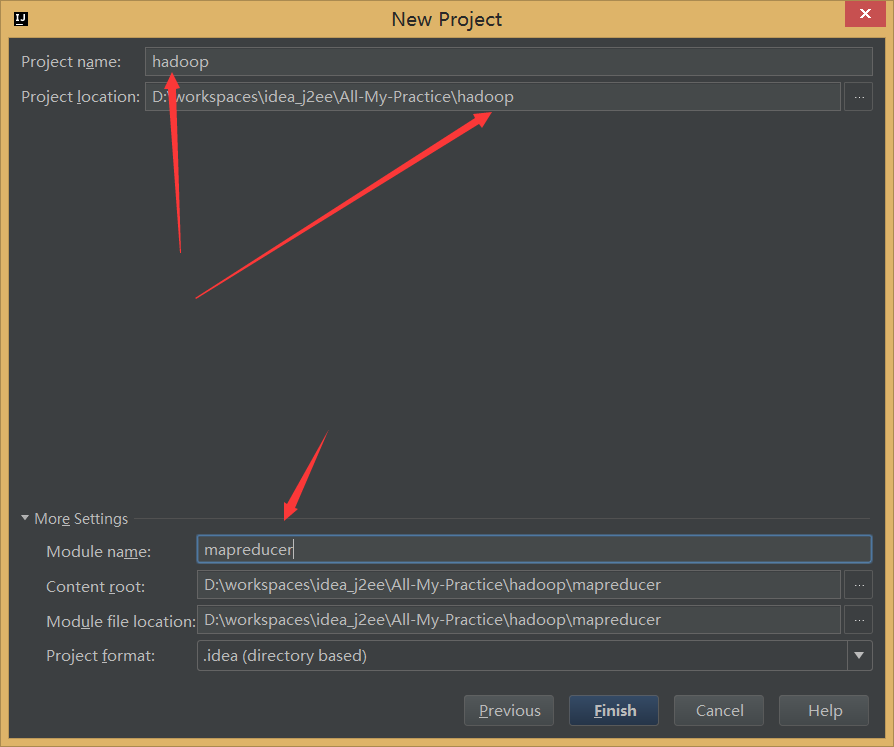

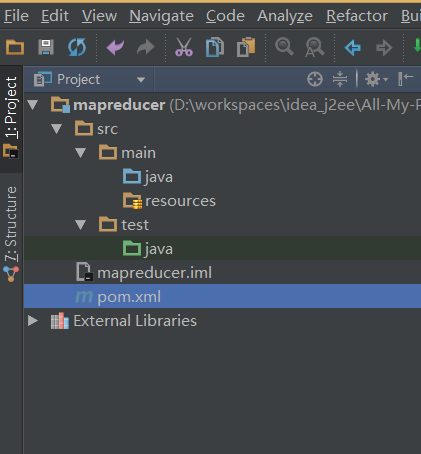
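A JVM only sees environment variables that existed when it (or the IDEA instance that launched it) was started, so it can help to check what the program will actually see. The sketch below is a hypothetical helper (`HadoopHomeCheck` is not part of Hadoop); it assumes the lookup order used by Hadoop 2.x's `Shell` class, where the `hadoop.home.dir` system property wins over the `HADOOP_HOME` environment variable.

```java
import java.io.File;

// Hypothetical helper: reports which Hadoop home directory this JVM would use.
public class HadoopHomeCheck {

    // Assumed lookup order: -Dhadoop.home.dir first, then the HADOOP_HOME variable.
    // Unlike Hadoop itself (which only checks for null), empty strings count as unset here.
    static String resolveHome(String sysProp, String envVar) {
        if (sysProp != null && !sysProp.isEmpty()) {
            return sysProp;
        }
        return (envVar != null && !envVar.isEmpty()) ? envVar : null;
    }

    // True if <home>\bin exists and is a directory.
    static boolean hasBinDir(String home) {
        return home != null && new File(home, "bin").isDirectory();
    }

    public static void main(String[] args) {
        String home = resolveHome(System.getProperty("hadoop.home.dir"), System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("HADOOP_HOME is not visible to this JVM; restart IDEA after setting it.");
        } else {
            System.out.println("Hadoop home = " + home + ", bin directory present: " + hasBinDir(home));
        }
    }
}
```

Running it from IDEA right after changing the variables shows quickly whether a restart is still needed.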

3. Create the project

3.1. Create a new Maven project

3.2. Add the dependencies

```xml
<dependency>
    <groupId>junit</groupId>
    ...
```

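Once Maven has resolved the dependencies, one quick sanity check is to load, by name, a few Hadoop classes that the WordCount code below relies on. This is a sketch: `ClasspathCheck` is a hypothetical name, and the probed class list is only an example to adjust to your own dependencies.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sanity check: are the Hadoop classes used by WordCount on the classpath?
public class ClasspathCheck {

    static boolean isPresent(String className) {
        try {
            // initialize=false: locate and load the class without running static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<String> required = Arrays.asList(
                "org.apache.hadoop.conf.Configuration",
                "org.apache.hadoop.mapreduce.Job",
                "org.apache.hadoop.mapreduce.Mapper",
                "org.apache.hadoop.hdfs.DistributedFileSystem");
        for (String name : required) {
            System.out.println((isPresent(name) ? "OK      " : "MISSING ") + name);
        }
    }
}
```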

3.3. Write the WordCount program

WcMapper.java

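```java
import java.io.IOException;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Map: emit (word, 1) for every space-separated word in the input line
public class WcMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // commons-lang split: separator ' ' only, empty tokens are dropped
        String[] words = StringUtils.split(value.toString(), ' ');
        for (String w : words) {
            context.write(new Text(w), new IntWritable(1));
        }
    }
}
```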

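The mapper's splitting step can be checked without a cluster. The plain-Java sketch below assumes `StringUtils.split(value.toString(), ' ')` behaves like the commons-lang version (split on the space character only, drop empty tokens) and emits one `(word, 1)` pair per token; `MapStep` and `mapLine` are hypothetical names.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for WcMapper.map(), without Hadoop types.
public class MapStep {

    // Emits (word, 1) for every non-empty token separated by ' '.
    static List<Map.Entry<String, Integer>> mapLine(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String w : line.split(" ")) {
            if (!w.isEmpty()) { // consecutive spaces yield empty strings; skip them
                out.add(new SimpleEntry<>(w, 1));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(mapLine("hello  world hello"));
        // prints [hello=1, world=1, hello=1]
    }
}
```

Like the original, this does not treat tabs as separators; if the input may contain tabs, splitting on the regex `\\s+` is a common alternative.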

WcReducer.java

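```java
import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Reduce: add up all the 1s emitted for the same word
public class WcReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable i : values) {
            sum = sum + i.get();
        }
        context.write(key, new IntWritable(sum));
    }
}
```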

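To see how `WcMapper` and `WcReducer` fit together, the sketch below simulates the whole job in memory: the map step emits a 1 per word, a `TreeMap` stands in for the shuffle (grouping values by key in sorted order, as the framework does before calling `reduce`), and the reduce step sums each group the way `WcReducer` does. The class name is hypothetical, and this only illustrates the data flow; it is not a substitute for running the job.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory simulation of the WordCount map -> shuffle -> reduce flow.
public class LocalWordCount {

    static Map<String, Integer> run(List<String> lines) {
        // Map + "shuffle": group every emitted 1 under its word; TreeMap keeps keys sorted.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (String w : line.split(" ")) { // assumed same tokenizing rule as WcMapper
                if (!w.isEmpty()) {
                    grouped.computeIfAbsent(w, k -> new ArrayList<>()).add(1);
                }
            }
        }
        // Reduce: sum the values of each group.
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int v : e.getValue()) {
                sum = sum + v;
            }
            counts.put(e.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("hello world", "hello hadoop")));
        // prints {hadoop=1, hello=2, world=1}
    }
}
```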

RunJob.java

public class RunJob {

public static void main(String[] args) throws Exception {

Configuration config = new Configuration();

//設定hdfs的通訊地址

config.set("fs.defaultFS", "hdfs://node1:8020");

//設定RN的主機

config.set("yarn.resourcemanager.hostname", "node1");

try {

FileSystem fs = FileSystem.get(config);

Job job = Job.getInstance(config);

job.setJarByClass(RunJob.class);

job.setJobName("wc");

job.setMapperClass(WcMapper.class);

job.setReducerClass(WcReducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path("/usr/input/wc.txt"));

Path outpath = new Path("/usr/output/wc");

if (fs.exists(outpath)) {

fs.delete(outpath, true);

}

FileOutputFormat.setOutputPath(job, outpath);

boolean f = job.waitForCompletion(true);

if (f) {

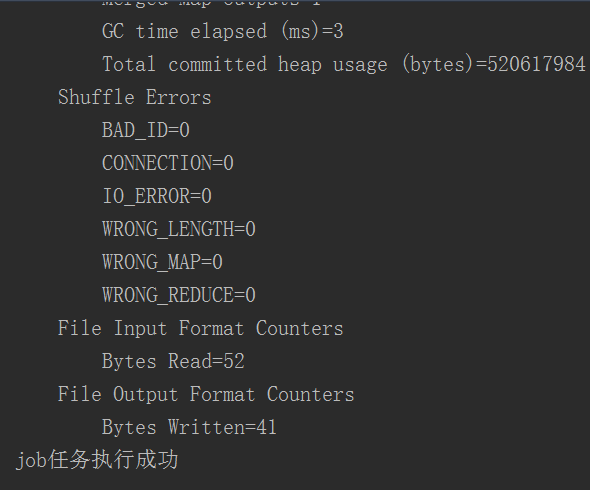

System.out.println("job任務執行成功");

}

} catch (Exception e) {

e.printStackTrace();

}

}

}- 1


Note: change these to your own hosts:

```java
// HDFS address (NameNode)
config.set("fs.defaultFS", "hdfs://node1:8020");
// Host of the YARN ResourceManager
config.set("yarn.resourcemanager.hostname", "node1");
```
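Rather than editing these literals whenever you switch clusters, one option is to derive both values from a single host name, for example one passed as a program argument in IDEA's Run Configuration. The sketch keeps the two property keys used above and the port 8020 from the original; `ClusterSettings` and `forHost` are hypothetical names, and in `RunJob` each returned entry would be applied with `config.set(key, value)`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that builds the two client settings RunJob hard-codes.
public class ClusterSettings {

    static Map<String, String> forHost(String host, int nameNodePort) {
        Map<String, String> settings = new LinkedHashMap<>();
        // HDFS entry point (NameNode RPC address)
        settings.put("fs.defaultFS", "hdfs://" + host + ":" + nameNodePort);
        // Host running the YARN ResourceManager (the same machine here, as in the original)
        settings.put("yarn.resourcemanager.hostname", host);
        return settings;
    }

    public static void main(String[] args) {
        // Falls back to node1 when no host is given on the command line.
        String host = args.length > 0 ? args[0] : "node1";
        for (Map.Entry<String, String> e : forHost(host, 8020).entrySet()) {
            System.out.println(e.getKey() + " = " + e.getValue());
        }
    }
}
```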

Logging configuration: log4j.properties (put it in src/main/resources so that it ends up on the classpath)

```properties
log4j.appender.console=org.apache.log4j.ConsoleAppender
log4j.appender.console.Target=System.out
log4j.appender.console.layout=org.apache.log4j.PatternLayout
log4j.appender.console.layout.ConversionPattern=%d{ABSOLUTE} %5p %c{1}:%L - %m%n
log4j.rootLogger=INFO, console
```


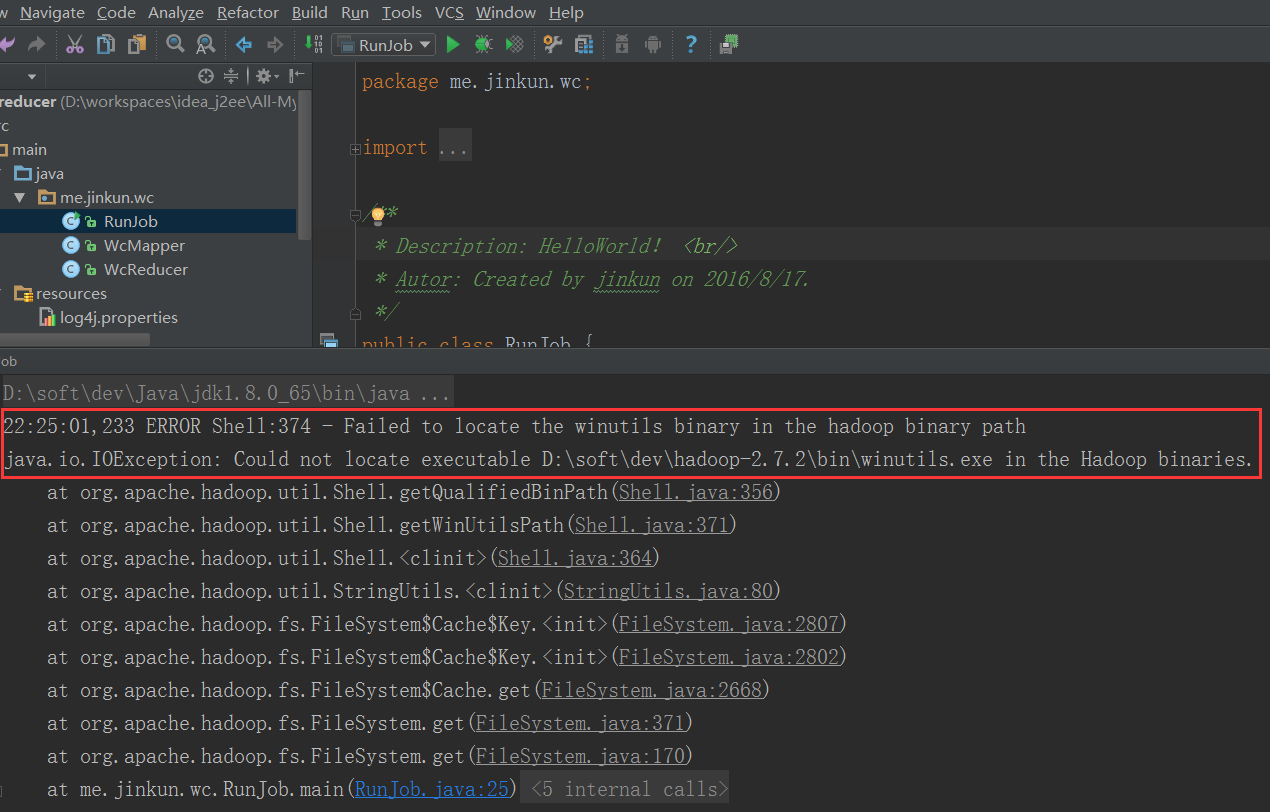

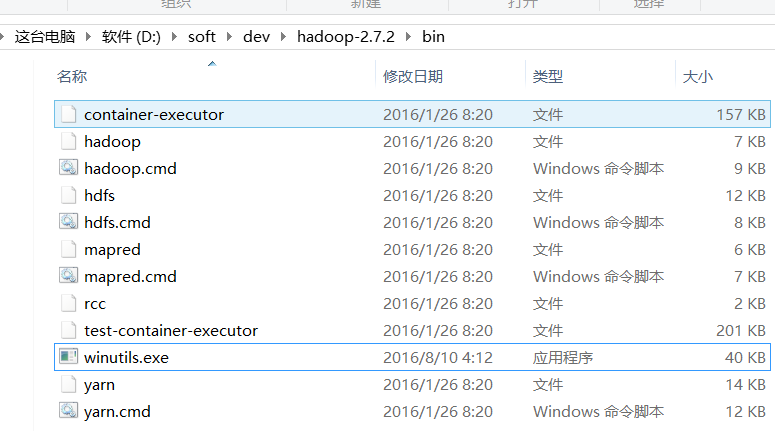

3.4. Run the WordCount program

Run it again:

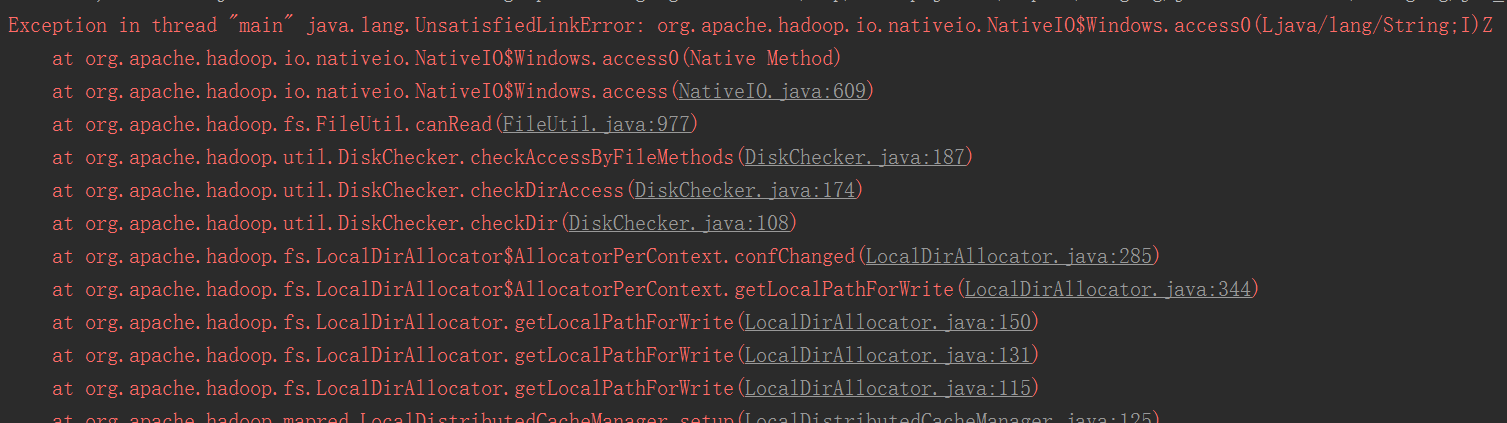

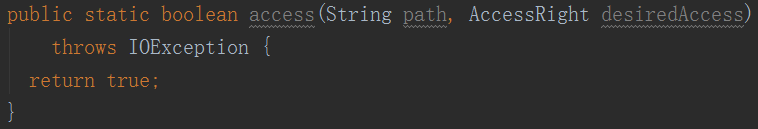

It fails with: `java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Ljava/lang/String;I)Z`

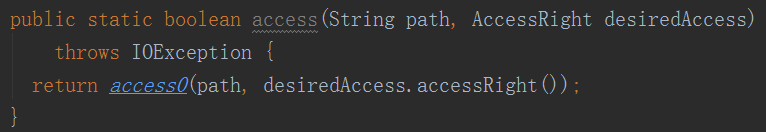

Modify the source of `org.apache.hadoop.io.nativeio.NativeIO`:

Change it to:

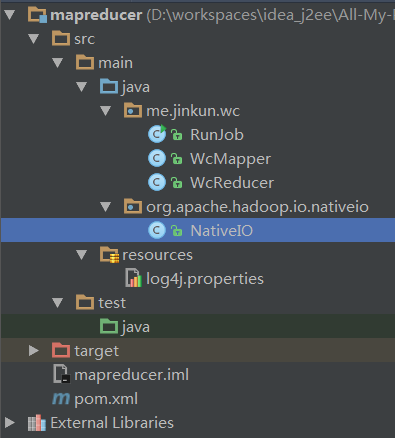

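Copy the modified NativeIO.java into the project's src directory, keeping its package structure (org/apache/hadoop/io/nativeio).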
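This workaround relies on class shadowing: when IDEA runs the program, the module's own compiled classes are by default placed ahead of the dependency JARs on the classpath, so a `NativeIO` compiled from `src` in the same package is loaded instead of the one inside `hadoop-common`. The copy therefore has to sit at a path that mirrors its package. The sketch below computes that path, assuming the standard Maven layout (`src/main/java`) and that the working directory is the project root; `ShadowSourcePath` is a hypothetical name. (A widely used form of the edit itself makes `NativeIO.Windows.access(...)` return `true` instead of calling `access0`; treat that as an assumption about what the screenshot shows.)

```java
import java.io.File;

// Hypothetical helper: where a shadow copy of a library class must live in a Maven project.
public class ShadowSourcePath {

    static String sourcePathFor(String className) {
        // Nested classes such as NativeIO$Windows live in their outer class's source file.
        int nested = className.indexOf('$');
        String outer = nested >= 0 ? className.substring(0, nested) : className;
        return "src/main/java/" + outer.replace('.', '/') + ".java";
    }

    public static void main(String[] args) {
        String path = sourcePathFor("org.apache.hadoop.io.nativeio.NativeIO$Windows");
        System.out.println(path);
        // prints src/main/java/org/apache/hadoop/io/nativeio/NativeIO.java
        // Resolved relative to the current working directory (assumed: the project root).
        System.out.println("exists: " + new File(path).isFile());
    }
}
```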

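3.5. Run the WordCount program again

If a permission exception is reported:

Edit hdfs-site.xml on the cluster (in Hadoop 2.x the current name of this property is dfs.permissions.enabled; dfs.permissions is still accepted as a deprecated alias):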

```xml
<property>
    <name>dfs.permissions</name>
    <value>false</value>
</property>
```


Then restart HDFS.