How we built the Google Cloud Infrastructure WebGL experience

Smooth transitions

Another goal was to make smooth transitions from one scene to another. The most important thing to remember when dealing with GPUs and 60 FPS is that any new data that has to be uploaded to the GPU takes time and can cause dropped frames. Textures in particular can be heavy to upload. There are various formats (PVRTC, DDS, ETC, …) optimized for fast decompression and low memory usage on the GPU. However, native support varies wildly across platforms, and in our testing their file sizes couldn't match those of regular JPGs. So we chose JPGs for their small network footprint and accepted some runtime cost, since a JPG has to be decoded and uploaded to the GPU as uncompressed pixel data.

This is an area we'd like to explore further, but for this project we tried to mitigate some of the runtime overhead by pre-uploading all textures and precompiling all materials (shaders) needed for the entire site during the initial load. In practice, this meant making every scene visible to the cameras at load time, forcing a single render, and then restoring every object's original visibility.
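The pre-upload step can be sketched roughly as follows. This is a minimal sketch, not the site's actual code: it assumes Three.js-style objects (an Object3D with visible, frustumCulled and traverse(), and a WebGLRenderer with compile() and render()), declared here as small structural interfaces so the snippet compiles on its own. prewarmScene is a hypothetical name, and temporarily disabling frustum culling is our own assumption, added so that objects outside the camera's view are drawn (and their textures uploaded) too.

```typescript
// Structural stand-ins for the Three.js members used (assumption: a real
// THREE.Object3D / THREE.WebGLRenderer would satisfy these shapes).
interface SceneNode {
  visible: boolean;
  frustumCulled: boolean;
  traverse(callback: (node: SceneNode) => void): void;
}

interface PrewarmRenderer<Camera> {
  compile(scene: SceneNode, camera: Camera): unknown;
  render(scene: SceneNode, camera: Camera): void;
}

// Hypothetical helper: push every texture and shader program that `scene`
// needs onto the GPU during loading, then restore the scene as it was.
function prewarmScene<Camera>(
  renderer: PrewarmRenderer<Camera>,
  scene: SceneNode,
  camera: Camera,
): void {
  // Remember each node's original state so it can be restored afterwards.
  const saved: Array<{ node: SceneNode; visible: boolean; frustumCulled: boolean }> = [];
  scene.traverse((node) => {
    saved.push({ node, visible: node.visible, frustumCulled: node.frustumCulled });
    // Make everything visible and disable culling so nothing is skipped.
    node.visible = true;
    node.frustumCulled = false;
  });

  renderer.compile(scene, camera); // compile shader programs for all materials
  renderer.render(scene, camera); // one real draw, which also uploads textures

  // Put every node back exactly as it was.
  for (const { node, visible, frustumCulled } of saved) {
    node.visible = visible;
    node.frustumCulled = frustumCulled;
  }
}
```

With several scenes, this would be called once per scene/camera pair while the site is loading.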

The quality selector & various performance tips

Building a WebGL site that needs to run at 60 FPS is no small feat. It's a constant compromise between look, feel, and responsiveness. Many factors come into play, such as not knowing the capabilities of the device the site will run on, the resolution it will run at, and so on. A visitor might have a MacBook hooked up to a 4K display, which is just never gonna fly for anything but a fairly static site. We tried to set sensible defaults, but we also explored adding a quality switcher so visitors could choose for themselves. Admittedly, this is probably not the most used feature of the site, but building it gave us some insight into which parameters matter for performance and how to change them at runtime without reloading the whole site. We did not want to make visitors choose a quality setting when they entered the site.

To keep it simple and avoid adding a ton of conditional code based on the settings, we chose to only tweak the rendering size (pixel ratio) and toggle anti-aliasing on or off.

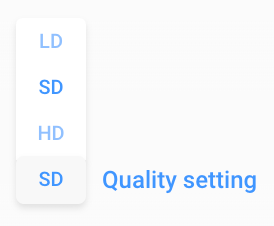

This resulted in three quality settings:

- Low Definition (LD): Turns off anti-aliasing and caps the pixel ratio at 1.

- Standard Definition (SD): Turns on anti-aliasing. Rather than using one fixed max pixel ratio, it adapts the cap to the window size and screen pixel density. For example, we raise the max pixel ratio slightly on 5K displays for extra sharpness, assuming that a device connected to this type of display is somewhat beefier than the average laptop.

- High Definition (HD): Caps the pixel ratio at 2.5, with anti-aliasing enabled.
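The three presets could be expressed as a single function that maps the selected quality to renderer settings. This is a sketch, not the site's code: the LD and HD caps come from the list above, but qualitySettings is a hypothetical name, and the SD constants (a default cap of 1.5, a raised cap of 2, and a 3840-physical-pixel width threshold) are placeholder values, since the actual adaptive rules aren't spelled out here.

```typescript
type Quality = "LD" | "SD" | "HD";

interface QualitySettings {
  antialias: boolean;
  pixelRatio: number;
}

// Hypothetical SD tuning values, for illustration only.
const SD_DEFAULT_MAX_PIXEL_RATIO = 1.5;
const SD_LARGE_DISPLAY_MAX_PIXEL_RATIO = 2;
const SD_LARGE_DISPLAY_MIN_PHYSICAL_WIDTH = 3840; // in physical pixels

// devicePixelRatio and windowWidth (CSS pixels) would come from
// window.devicePixelRatio and window.innerWidth in the browser.
function qualitySettings(
  quality: Quality,
  devicePixelRatio: number,
  windowWidth: number,
): QualitySettings {
  switch (quality) {
    case "LD":
      // No anti-aliasing, never more than one pixel per CSS pixel.
      return { antialias: false, pixelRatio: Math.min(devicePixelRatio, 1) };
    case "SD": {
      // Allow a slightly higher cap when the window covers a very wide,
      // high-density area (e.g. a large window on a 5K display).
      const physicalWidth = windowWidth * devicePixelRatio;
      const cap =
        physicalWidth >= SD_LARGE_DISPLAY_MIN_PHYSICAL_WIDTH
          ? SD_LARGE_DISPLAY_MAX_PIXEL_RATIO
          : SD_DEFAULT_MAX_PIXEL_RATIO;
      return { antialias: true, pixelRatio: Math.min(devicePixelRatio, cap) };
    }
    case "HD":
      return { antialias: true, pixelRatio: Math.min(devicePixelRatio, 2.5) };
  }
}
```

Capping with Math.min means a low-density screen is never rendered above its native resolution, whatever the preset.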

The tricky part was toggling anti-aliasing on and off. Anti-aliasing is a WebGL context creation attribute that can't be changed once the context exists, so toggling it currently requires us to set up a new THREE.WebGLRenderer() and clean up the old one.
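A sketch of such a swap, under stated assumptions: applyQuality, RendererHooks and ActiveRenderer are hypothetical names, and the renderer is described by a small interface covering only the THREE.WebGLRenderer members used (domElement, setPixelRatio(), setSize() and dispose()), so the snippet compiles without importing three. In a real setup, create would be (options) => new THREE.WebGLRenderer(options), mount would append the canvas to the page and unmount would call canvas.remove(). When only the pixel ratio changes, the existing renderer is reused.

```typescript
// Stand-in for the THREE.WebGLRenderer members used below (assumption).
interface RendererLike<Canvas> {
  readonly domElement: Canvas;
  setPixelRatio(ratio: number): void;
  setSize(width: number, height: number): void;
  dispose(): void;
}

// Hypothetical hooks supplied by the app (see the lead-in for real values).
interface RendererHooks<Canvas> {
  create(options: { antialias: boolean }): RendererLike<Canvas>;
  mount(canvas: Canvas): void;
  unmount(canvas: Canvas): void;
}

interface ActiveRenderer<Canvas> {
  renderer: RendererLike<Canvas>;
  antialias: boolean;
}

function applyQuality<Canvas>(
  current: ActiveRenderer<Canvas>,
  settings: { antialias: boolean; pixelRatio: number },
  hooks: RendererHooks<Canvas>,
  width: number,
  height: number,
): ActiveRenderer<Canvas> {
  if (settings.antialias === current.antialias) {
    // Only the pixel ratio changed: reuse the existing renderer and keep
    // the canvas in sync with the current window size.
    current.renderer.setPixelRatio(settings.pixelRatio);
    current.renderer.setSize(width, height);
    return current;
  }

  // antialias is fixed at context creation, so build a new renderer
  // (new canvas, new WebGL context) with the requested setting.
  const next = hooks.create({ antialias: settings.antialias });
  next.setPixelRatio(settings.pixelRatio);
  next.setSize(width, height);
  hooks.mount(next.domElement);

  // Clean up the old renderer: release its GPU resources, remove its canvas.
  current.renderer.dispose();
  hooks.unmount(current.renderer.domElement);

  return { renderer: next, antialias: settings.antialias };
}
```

Because the new renderer owns a fresh WebGL context, textures and shader programs have to be uploaded and compiled again, so it is presumably worth re-running the pre-upload step after a swap. Three.js also provides renderer.forceContextLoss() if you want the old context released right away rather than leaving that to the browser.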

For further performance tips, the responses in this Twitter thread by @mrdoob give some good quick hints.

Detecting device capabilities

Since we now had a way to tweak the device requirements, we wanted to explore whether we could automatically detect and set the quality based on how powerful a visitor's device is. Long story short: kinda, but it's a bit of a hack and there are too many false positives.

The approach relies on sniffing out the GFX card name using the WebGL extension WEBGL_debug_renderer_info and then correlating it with a performance score.

However, their project was limited to mobile devices, where it may be more reasonable to link certain GFX cards to a specific performance value: a mobile GPU is usually matched to the device's built-in screen resolution, whereas a desktop GPU may end up driving anything from a laptop panel to an external 4K display.

It is, however, possible to use this information if there's a known GFX card that is underpowered for your project even at very low resolutions; think of it like the minimum requirements for a game. To be worth the effort of testing each card's performance, not to mention writing the regular expressions needed to detect them all, it would have to be a GFX card that is used in a lot of devices.
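A sketch of that "minimum requirements" check, assuming a plain WebGL context: it reads the unmasked renderer string through WEBGL_debug_renderer_info and tests it against a blocklist of regular expressions. gpuRendererName, isBelowMinimumSpec and the ExampleVendor pattern are hypothetical placeholders; a real list would have to come from testing actual cards. When the extension isn't available, the sketch keeps the default quality rather than guessing.

```typescript
// Stand-ins for the parts of WebGLRenderingContext used (assumption: a real
// context obtained with canvas.getContext("webgl") fits these shapes).
interface DebugRendererInfo {
  readonly UNMASKED_RENDERER_WEBGL: number;
}

interface GLContextLike {
  getExtension(name: "WEBGL_debug_renderer_info"): DebugRendererInfo | null;
  getParameter(pname: number): unknown;
}

// Returns the unmasked GPU name, or null when the extension is unavailable.
function gpuRendererName(gl: GLContextLike): string | null {
  const info = gl.getExtension("WEBGL_debug_renderer_info");
  if (info === null) return null;
  const name = gl.getParameter(info.UNMASKED_RENDERER_WEBGL);
  return typeof name === "string" ? name : null;
}

// Hypothetical blocklist of GPUs too slow for the project even at a pixel
// ratio of 1. The entry below is a placeholder, not a benchmark result.
const BELOW_MINIMUM_GPUS: RegExp[] = [/ExampleVendor GPU 1\d\d\b/i];

function isBelowMinimumSpec(
  gl: GLContextLike,
  blocklist: RegExp[] = BELOW_MINIMUM_GPUS,
): boolean {
  const name = gpuRendererName(gl);
  // Unknown GPU: don't guess, keep the default quality.
  if (name === null) return false;
  return blocklist.some((pattern) => pattern.test(name));
}
```

A true result would only be used to start on the LD preset; it says nothing about how fast an unlisted GPU is.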

On top of that, iOS 11 introduced throttling of requestAnimationFrame() to 30 FPS in Low Power Mode. This is probably a sensible way to save battery, but there's no way for a site to tell intentional system-wide throttling apart from simply running on a slow device.

We decided that, for the time being, the best option was not to try to be too smart about automatically changing quality settings, not even based on a simple average frame rate tracking technique.
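For illustration, a simple average frame rate tracker of the kind mentioned above might look like the sketch below (FrameRateTracker and its 60-frame window are hypothetical choices, not code from the site). It also shows the ambiguity: in iOS Low Power Mode it would report about 30 FPS, exactly as it would on a genuinely slow device.

```typescript
// Hypothetical rolling average of frame times, fed from the timestamps
// that requestAnimationFrame passes to its callback.
class FrameRateTracker {
  private readonly windowSize: number;
  private readonly deltas: number[] = [];
  private last: number | null = null;

  constructor(windowSize = 60) {
    this.windowSize = windowSize;
  }

  // Record one frame; timestampMs is the rAF callback argument (milliseconds).
  tick(timestampMs: number): void {
    if (this.last !== null) {
      this.deltas.push(timestampMs - this.last);
      // Keep only the most recent `windowSize` frame times.
      if (this.deltas.length > this.windowSize) this.deltas.shift();
    }
    this.last = timestampMs;
  }

  // Average frames per second over the window, or null until two frames.
  averageFps(): number | null {
    if (this.deltas.length === 0) return null;
    const meanMs = this.deltas.reduce((sum, d) => sum + d, 0) / this.deltas.length;
    return meanMs > 0 ? 1000 / meanMs : null;
  }
}

// In the browser:
// const tracker = new FrameRateTracker();
// const loop = (t: number) => { tracker.tick(t); requestAnimationFrame(loop); };
// requestAnimationFrame(loop);
```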

Going beyond 60FPS by disabling VSync

It can be hard to track how small changes affect the performance of a WebGL project if you're well within the limits of your GPU and browser. Say you want to figure out how adding a new light to a scene impacts performance, but all you see is a smooth 60 FPS, with and without the new light. We found it helpful to disable Chrome's VSync-locked frame rate (60 FPS on a typical display) and just let it run as fast as it can. On macOS, you can do this by launching Chrome from the terminal (quit any running Chrome instance first, since the flag is only applied at launch):

```shell
open -a "Google Chrome" --args --disable-gpu-vsync
```

Be mindful that other tasks running on your laptop or in other tabs may affect the FPS... looking at you, Dropbox!

Chrome vs. Safari WebGL performance

This is more of an observation than a learning, but Safari's WebGL performance simply blows away Chrome's on 5K displays (and probably on lower-res displays too). We found that we could easily increase the pixel ratio to almost native display resolution in Safari and still hold 60 FPS; sadly, this is not the case with Chrome as of today.

Improvements to explore for the future

Right now, our biggest pain point when developing a WebGL-heavy site is all the intermediate steps between having an idea in Cinema 4D and actually previewing how that idea carries over into Three.js and the browser. A lot of good work has gone into solving some of these pain points, but we'd still like to find easier workflows that give higher parity between how materials, lights, and cameras look and behave in Cinema 4D and in Three.js. PBR materials and the work put into glTF go a long way toward smoothing this out, but there's still room for improvement. Our biggest wish for now would be direct glTF export from Cinema 4D.