Evaluating PlaidML and GPU Support for Deep Learning on a Windows 10 Notebook

PlaidML is a deep learning software platform that enables GPU support across different hardware vendors. One major scenario for PlaidML is shown in Figure 2, where PlaidML uses OpenCL to access GPUs made by NVIDIA, AMD, or Intel, and acts as the backend for

Let me use the configuration of my own Windows 10 notebook to illustrate this scenario. The notebook, made by ASUS in 2015, has the following setup:

- Intel Core i5-5200U CPU

- 2 built-in GPUs: Intel HD Graphics 5500 and AMD Radeon R5 M320

I use this notebook to explore deep learning for computer vision (feature extraction, multi-label classification, visual question answering, and so on), mainly with Keras over Tensorflow or Keras over Theano. However, as far as I can tell, both Tensorflow and Theano only support GPUs from NVIDIA, not GPUs from other hardware vendors. As a result, all my previous studies were conducted on the CPU, not on a GPU. PlaidML, by contrast, provides an environment that can use the built-in GPUs of my Windows 10 notebook to improve the performance of my deep learning programs. Note that, under the configuration of Keras over PlaidML

This post briefly describes the installation and configuration of PlaidML, then compares the performance of Keras over PlaidML on GPU with that of Keras over Tensorflow on CPU, using a simple convolutional neural network on the CIFAR-10 dataset.

1. Installation and Configuration

Installing PlaidML is pretty straightforward: follow the instructions on GitHub, or install the wheel file from PyPI with pip. After installation, running plaidml-setup will guide you through selecting the target GPU device. Figure 3 summarizes the setup process.

The next step is to define PlaidML as the backend for Keras as follows.

set "KERAS_BACKEND=plaidml.keras.backend"

Now we are ready to run our deep learning programs. There is no need to change our code!
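If you would rather not depend on the shell variable (for example, when launching from an IDE), the same setting can be made from Python. The snippet below is only a minimal sketch: it assumes that Keras reads KERAS_BACKEND when it is first imported, so the variable has to be set before that import. The keras import itself is left commented out so the snippet also runs on a machine without PlaidML installed.

```python
import os

# Select PlaidML as the Keras backend for this process only.
# This must happen before the first `import keras` in the program.
os.environ["KERAS_BACKEND"] = "plaidml.keras.backend"

# Uncomment once Keras and PlaidML are installed:
# import keras

print("KERAS_BACKEND =", os.environ["KERAS_BACKEND"])
```

The rest of a Keras script stays the same as before, which is the point of swapping only the backend.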

2. Experiment and Evaluation

Here I use cifar10_cnn.py from keras-team on GitHub, cut down to 10 epochs and with data augmentation turned off, to run the tests. Figure 4 shows the performance of this program on three backends:

- Tensorflow on CPU

- PlaidML on Intel HD Graphics 5500

- PlaidML on AMD Radeon R5 M320
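For reference, the two changes to cifar10_cnn.py mentioned above amount to something like the following. This is a sketch that assumes the script exposes its settings as the module-level variables `epochs` and `data_augmentation`, as the keras-team example did when I used it; check the names in your copy of the script.

```python
# Settings near the top of cifar10_cnn.py (variable names assumed from
# the keras-team example; the stock script trains for many more epochs
# with augmentation enabled).
epochs = 10                 # short run, enough to compare backends
data_augmentation = False   # train on the raw CIFAR-10 images only

print("epochs:", epochs, "| data augmentation:", data_augmentation)
```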

After 10 epochs, the accuracy is about the same on all three: 67%, 69%, and 68%, respectively. However, training with PlaidML on the Intel GPU is about 4 times faster than with Tensorflow on the CPU (16969/4314 ≈ 3.93), and training with PlaidML on the AMD GPU is about 9 times faster than with Tensorflow on the CPU (16969/1841 ≈ 9.22).
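The speedup figures are simply the CPU training time divided by each backend's training time. A small sketch of that arithmetic, using the three totals quoted above (the dictionary and variable names are mine, and the totals are in whatever unit Figure 4 reports):

```python
# Total training time for 10 epochs, as quoted in the text above.
train_time = {
    "Tensorflow on CPU": 16969,
    "PlaidML on Intel HD Graphics 5500": 4314,
    "PlaidML on AMD Radeon R5 M320": 1841,
}

baseline = train_time["Tensorflow on CPU"]

# Speedup = baseline time / backend time (higher means faster).
speedup = {name: baseline / t for name, t in train_time.items()}

for name, factor in speedup.items():
    print(f"{name}: {factor:.2f}x")
```

Rounded to two decimals, this gives 3.93x for the Intel GPU and 9.22x for the AMD GPU.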

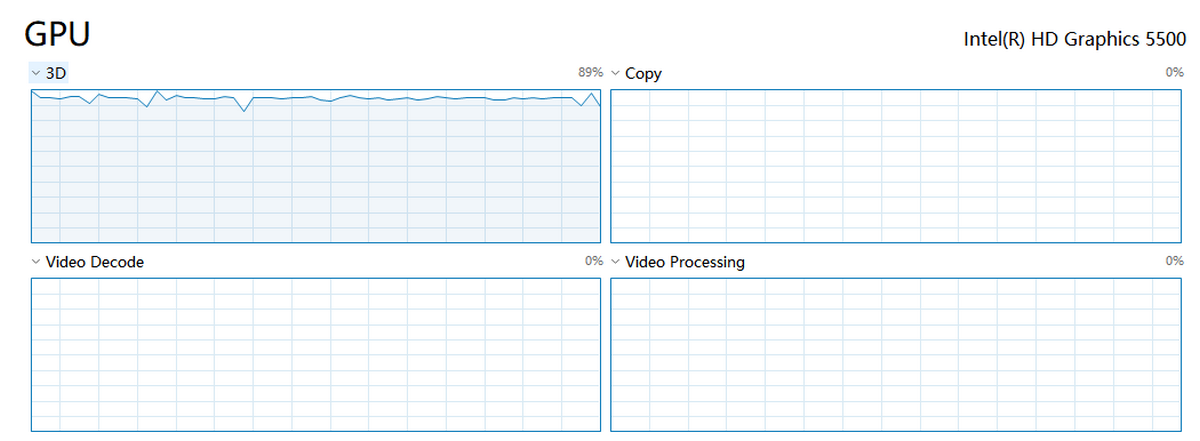

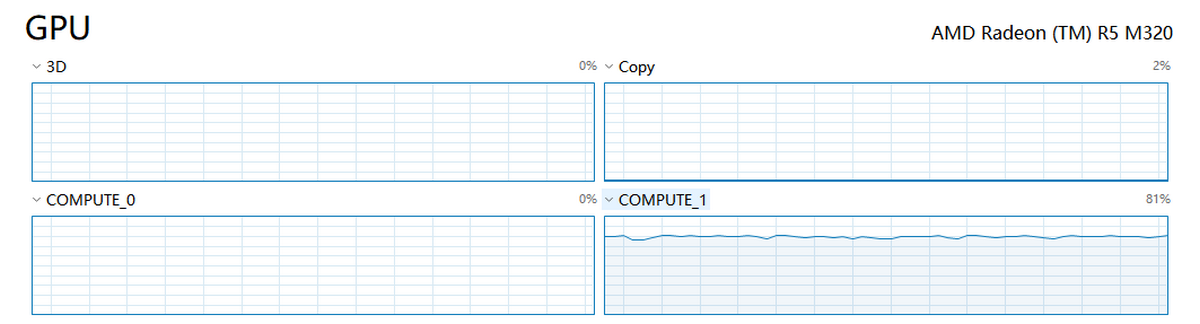

Task Manager on Windows 10 allows us to examine the usage of GPU as shown in Figure 5 and Figure 6. However, the meaning of tags might be somewhat confusing here.

3. Problems on Software/Hardware?!

I ran into two problems when using PlaidML 0.3.5:

(1) On Intel HD Graphics 5500, both small neural networks (such as cifar10_cnn.py) and large ones (such as MobileNet) might run into an access violation problem. [ref]

(2) On AMD Radeon R5 M320, a large neural network (e.g., VGG, Inception, ResNet, etc.) will run into a memory allocation problem. [ref]

4. Conclusions

Although there are some problems running PlaidML on both the Intel GPU and the AMD GPU of my notebook, I am still glad to see this solution for deep learning, and I hope the team behind it will keep improving the system.

Last Note: Intel acquired Vertex.AI, the company that created PlaidML, in August 2018.