Handmade mutex in 28 lines of go

Mutexes and other lockers is used to protect access to data from different computation threads. Without protection you can get inconsistent state called race condition. So if we want to create application that will work fine in multithreading environment we need an instrument to synchronize access to the data.

Should we create our own mutex instead of using existed from standard library? Yes! (Of course not.)

Where do we start?

Our mutex must match the sync.Locker interface to make it possible to replace any existed mutex or other locker with our implementation.

type Locker interface { Lock() Unlock()}Ok, here it is:

type Lock struct {}func (l *Lock) Lock() {}func (l *Lock) Unlock() {}What’s next?

Goroutines that are trying to obtain lock must “sleep” until it will be possible.

We have several possibilities to satisfy this requirement. One of them is an atomic.CompareAndSwap operation. Let’s look closer to it.

CAS compares the contents of a memory location with a given value and, only if they are the same, modifies the contents of that memory location to a new given value. This is done as a single atomic operation. © Wikipedia

Sounds like what we need.

First of all we need values that we will swap later.

const ( unlocked int32 = iota locked)

Then we need swap them.

import ( "atomic")

type Lock struct { state int32}func (l *Lock) Lock() { for !atomic.CompareAndSwapInt32(&lock.state, unlocked, locked) { }}func (l *Lock) Unlock() { for !atomic.CompareAndSwapInt32(&lock.state, locked, unlocked) { }}This approach is called spinlock:

Spinlock is a lock which causes a thread trying to acquire it to simply wait in a loop (“spin”) while repeatedly checking if the lock is available. © Wikipedia

Our Lock method will try to swap unlockedstate to locked until it will be possible.

Are we finished? Let’s check it with simple test:

import ( "testing")

func TestLock(t *testing.T) { mx := &Lock{} resource := make(map[int]int) done := make(chan struct{}) go func() { for i := 0; i < 10; i++ { mx.Lock() resource[i] = i time.Sleep(time.Millisecond) mx.Unlock() } done <- struct{}{} }() for i := 0; i < 10; i++ { mx.Lock() _ = resource[i] time.Sleep(time.Millisecond) mx.Unlock() } <-done}We do some concurrent reads and writes here. Let’s run it:

$ go test -cpu 1 -run Lock

But… Wait. Why test is running so long? Stop. SIGQUIT? Killed due to timeout?

Probably you asked yourself why we’ve added time.Sleep to test. It have done to illustrate how our solution will [not] work inside the one system thread.

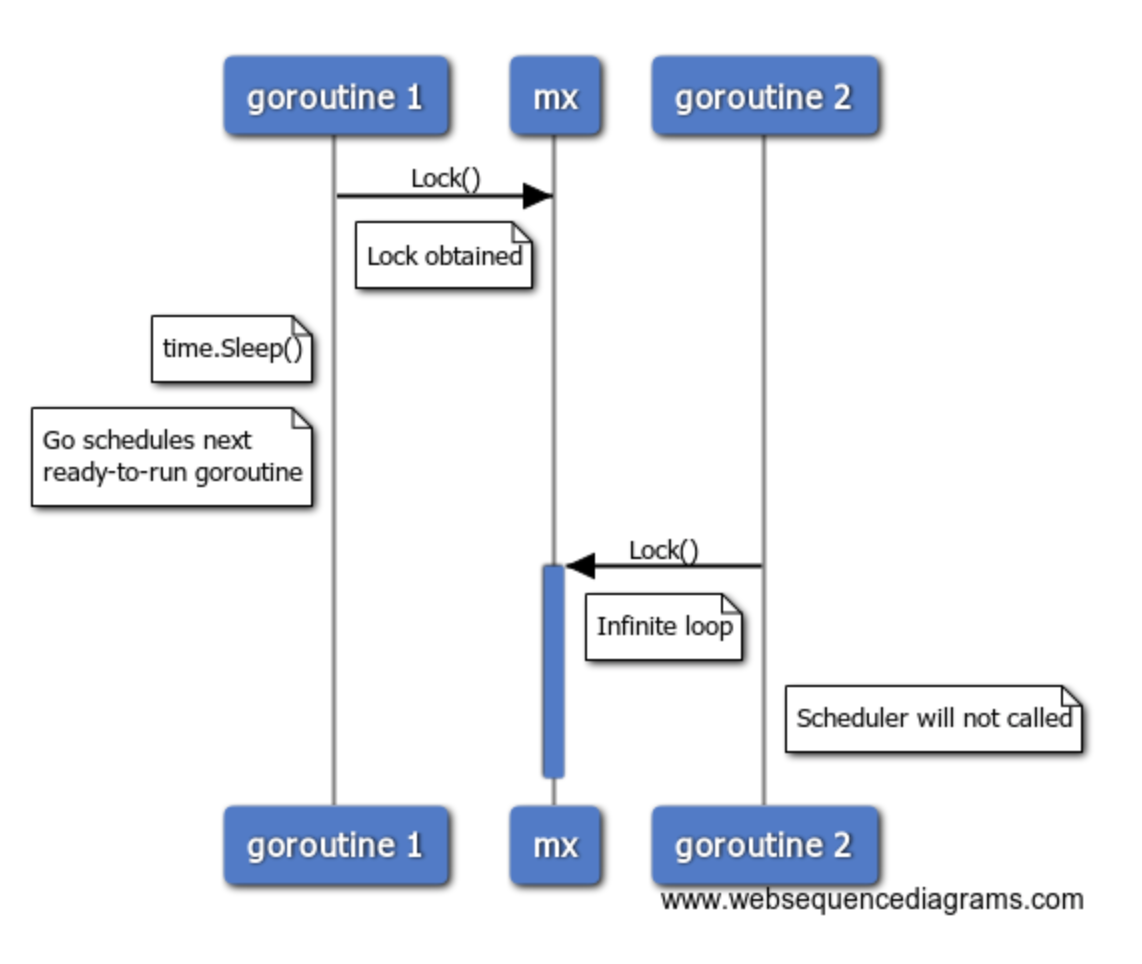

Let’s look closer to what’s happening here:

First goroutine obtained lock, updated resource and then called time.Sleep. After that Go scheduler somehow scheduled other goroutine for exectution. Second goroutine tries to get lock in loop. The problem is that the scheduler can’t interrupt goroutine execution at any time and returns to the first goroutine to unlock resource. Instead of this we got infinite loop.

We can fix it with runtime.Gosched function.

Gosched tells the scheduler that it can schedule next goroutine for execution (even if current and next goroutine are the same).

import ( "atomic" "runtime")

type Lock struct { state int32}func (l *Lock) Lock() { for !atomic.CompareAndSwapInt32(&lock.state, unlocked, locked) { runtime.Gosched() }}func (l *Lock) Unlock() { for !atomic.CompareAndSwapInt32(&lock.state, locked, unlocked) { runtime.Gosched() }}Run test again. Everything works. Even if we add flag -race to check code for data races.

And last but not least — benchmarks!

$ go test -bench=. -cpu 1,2,4

BenchmarkLock 100000000 15.0 ns/opBenchmarkLock-2 100000000 15.0 ns/opBenchmarkLock-4 100000000 15.0 ns/opBenchmarkMutex 100000000 15.3 ns/opBenchmarkMutex-2 100000000 15.1 ns/opBenchmarkMutex-4 100000000 15.4 ns/opBenchmarkWMutex 50000000 32.2 ns/opBenchmarkWMutex-2 50000000 32.4 ns/opBenchmarkWMutex-4 50000000 32.2 ns/opBenchmarkRMutex 100000000 15.7 ns/opBenchmarkRMutex-2 100000000 14.7 ns/opBenchmarkRMutex-4 100000000 15.4 ns/op

This post is an example of how you can use CompareAndSwap and Goshed. But don’t use this locker on production without good reason.

Thanks for reading. Comments are welcome.