Machine Learning with sklearn 0.19.0: Ensemble Learning: Boosting, Gradient Boosting (GBDT), and AdaBoost

阿新 • Published: 2019-01-01

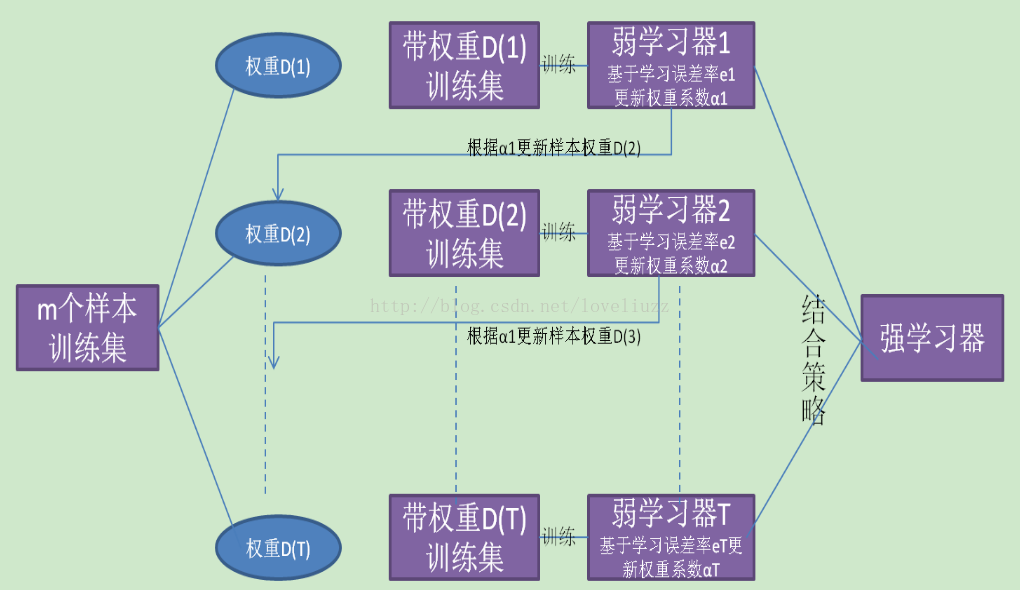

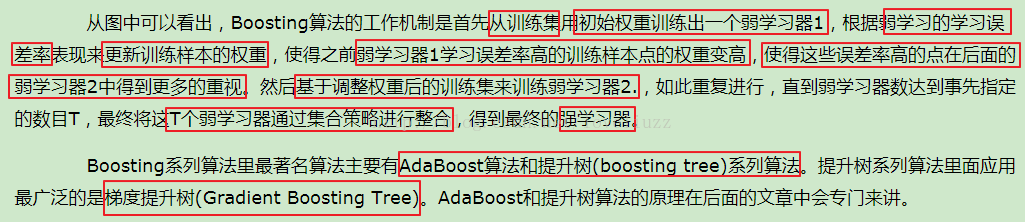

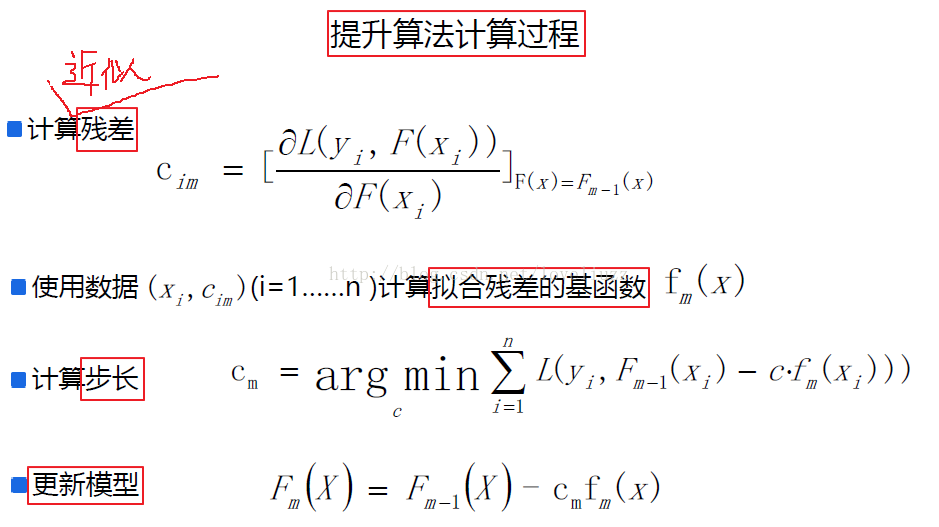

I. Principles of the boosting algorithm

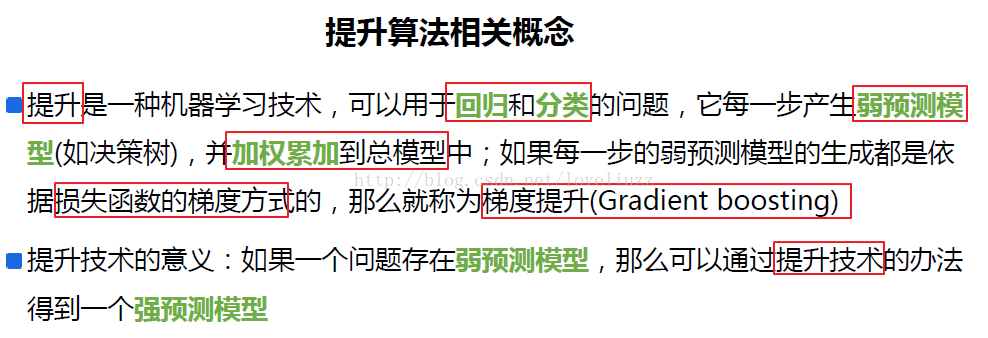

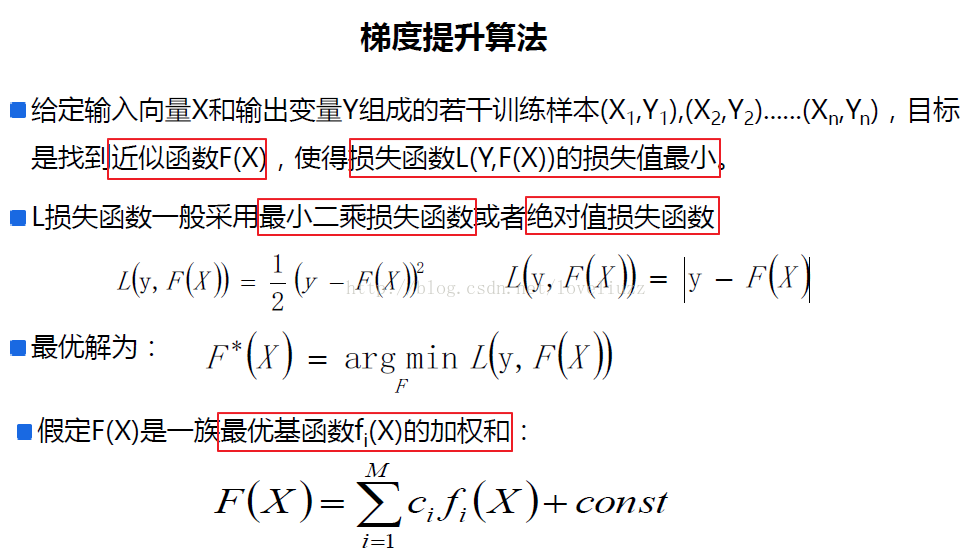

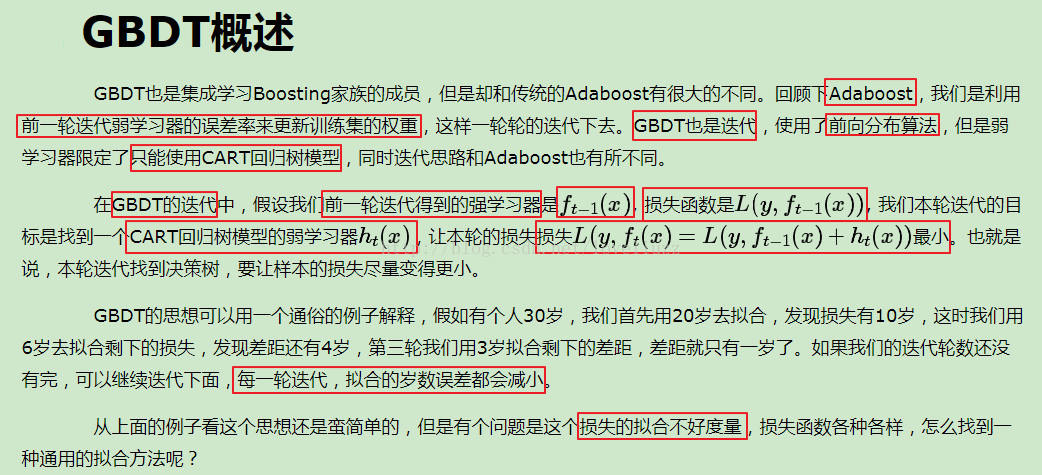

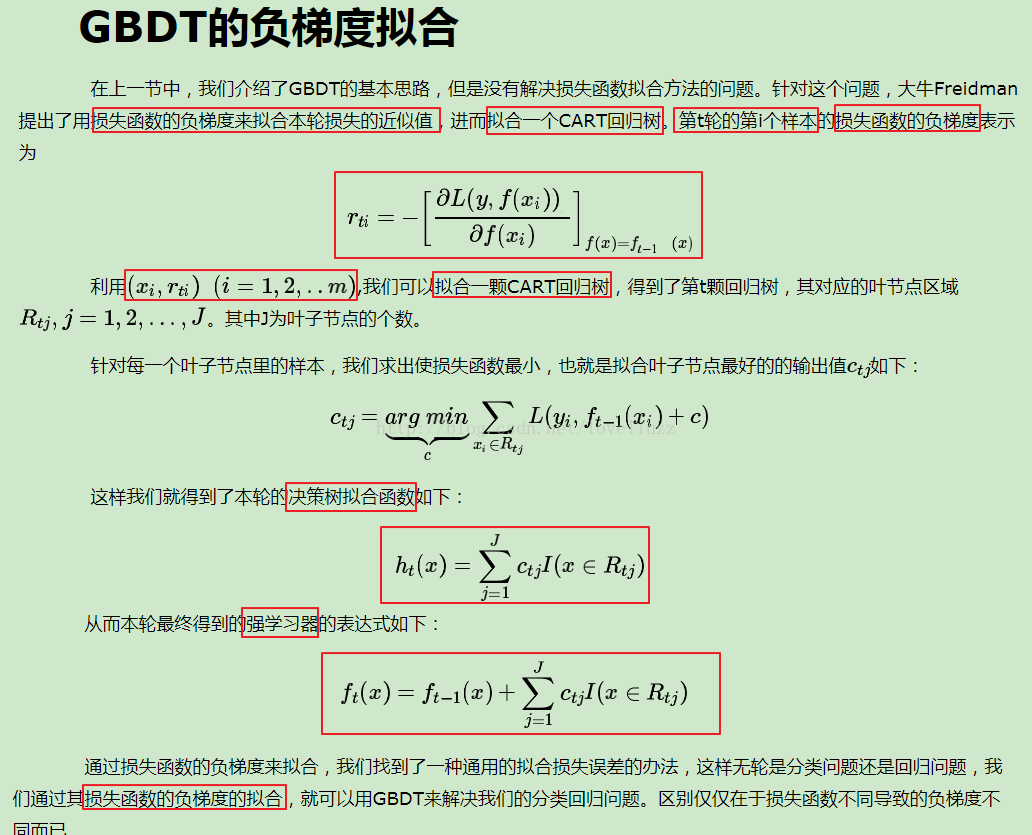

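II. Gradient boosting

For a detailed introduction to the gradient boosting algorithm, see this blog post: http://www.cnblogs.com/pinard/p/6140514.html

For an introduction to the corresponding sklearn classes and how to tune their parameters, see: http://www.cnblogs.com/pinard/p/6143927.html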

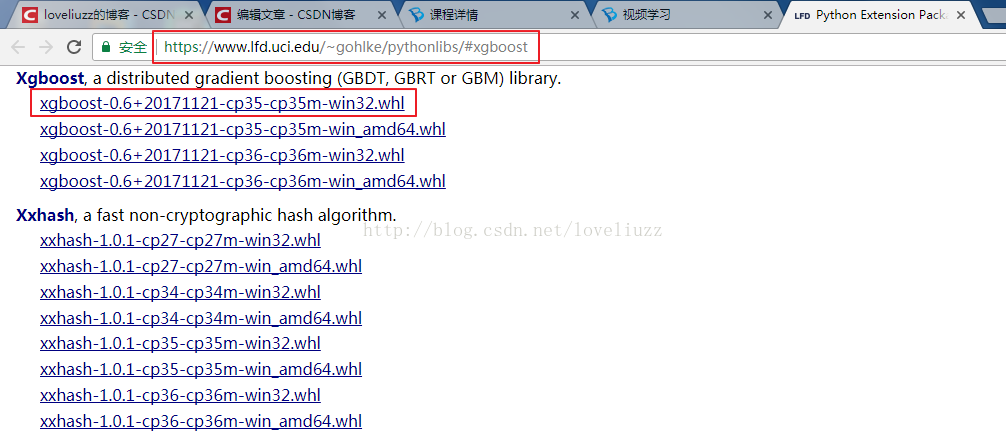

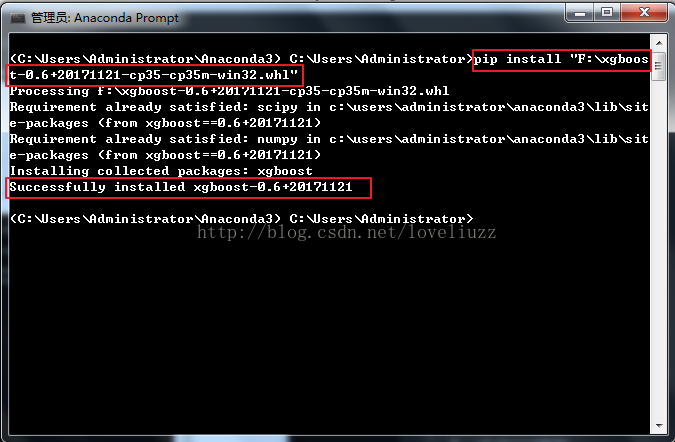
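As a rough illustration of the sklearn classes mentioned above, the sketch below fits a `GradientBoostingClassifier` and sets the parameters that are usually tuned first. The synthetic dataset and every parameter value are assumptions chosen for illustration, not settings taken from this post.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic data, purely for illustration (not from the original post).
X, y = make_classification(n_samples=500, n_features=10, n_informative=5,
                           random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

gbdt = GradientBoostingClassifier(
    n_estimators=100,    # number of boosting rounds (trees)
    learning_rate=0.1,   # shrinkage applied to each tree's contribution
    max_depth=3,         # depth of each regression tree
    subsample=0.8,       # fraction of samples drawn per tree (stochastic GB)
    random_state=0,
)
gbdt.fit(X_train, y_train)

test_accuracy = gbdt.score(X_test, y_test)
print("test accuracy: %.3f" % test_accuracy)
print("feature importances:", gbdt.feature_importances_.round(3))
```

`n_estimators` and `learning_rate` trade off against each other: a smaller learning rate generally needs more trees, so the two are usually tuned together.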

Installing xgboost

(1) Download the matching version from https://www.lfd.uci.edu/~gohlke/pythonlibs/#xgboost

(2) Install it from the Anaconda Prompt

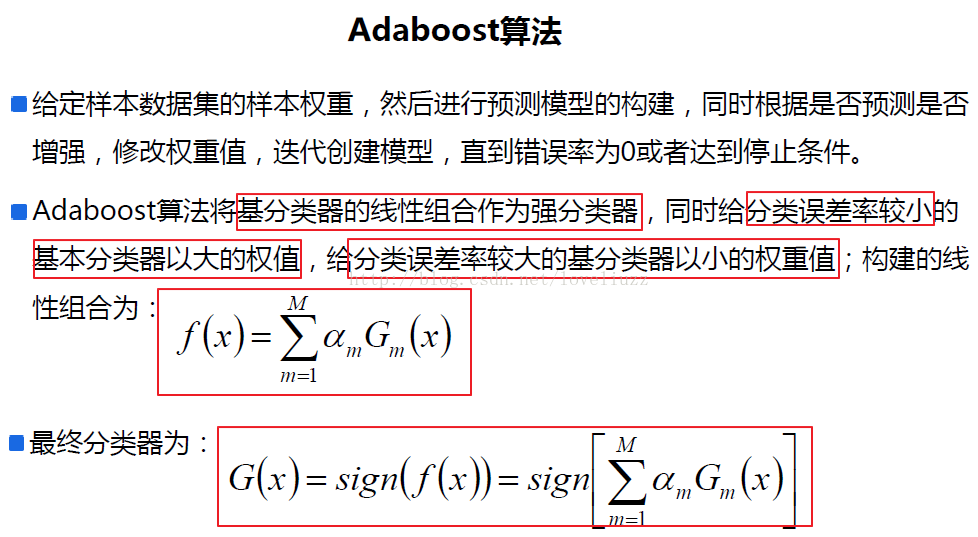

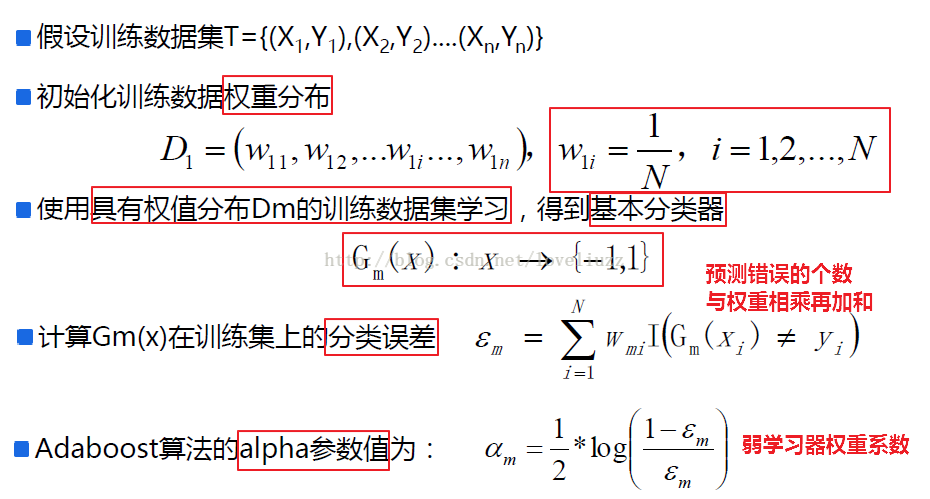

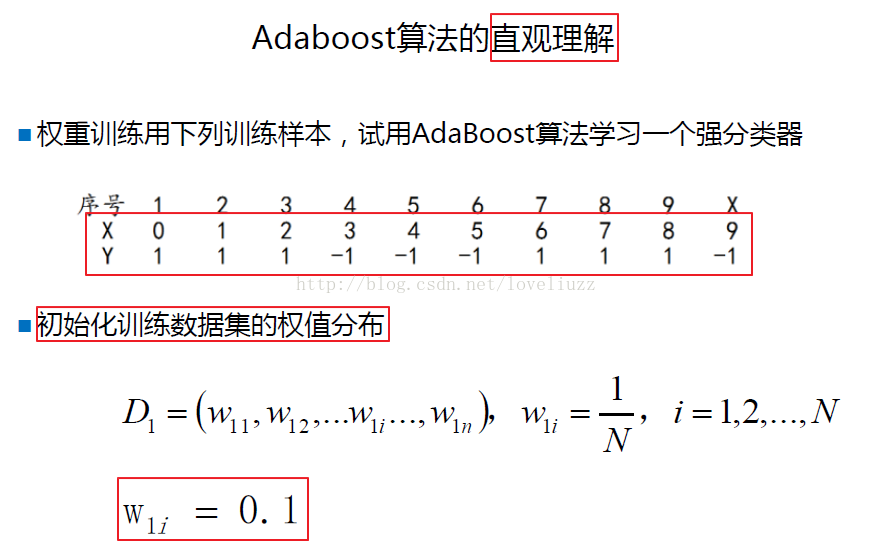

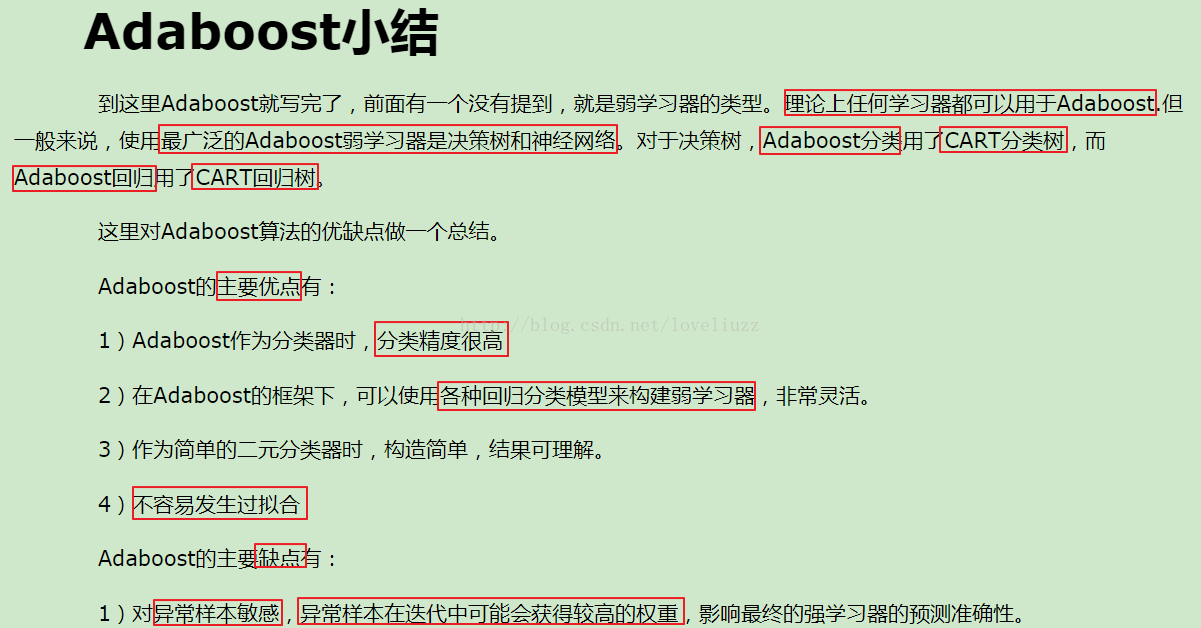

III. The AdaBoost algorithm

Note: for a detailed introduction to the AdaBoost algorithm, see this blog post: http://www.cnblogs.com/pinard/p/6133937.html

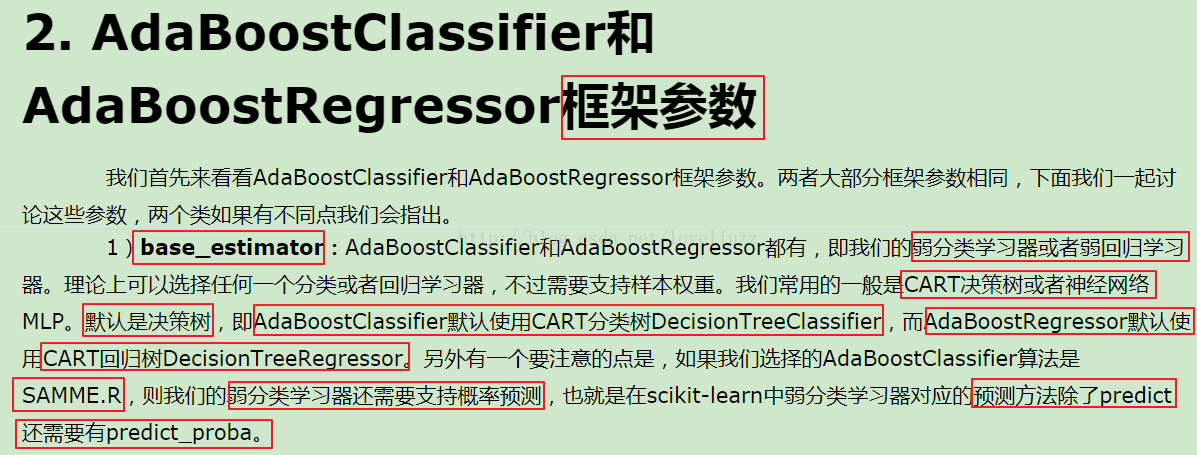

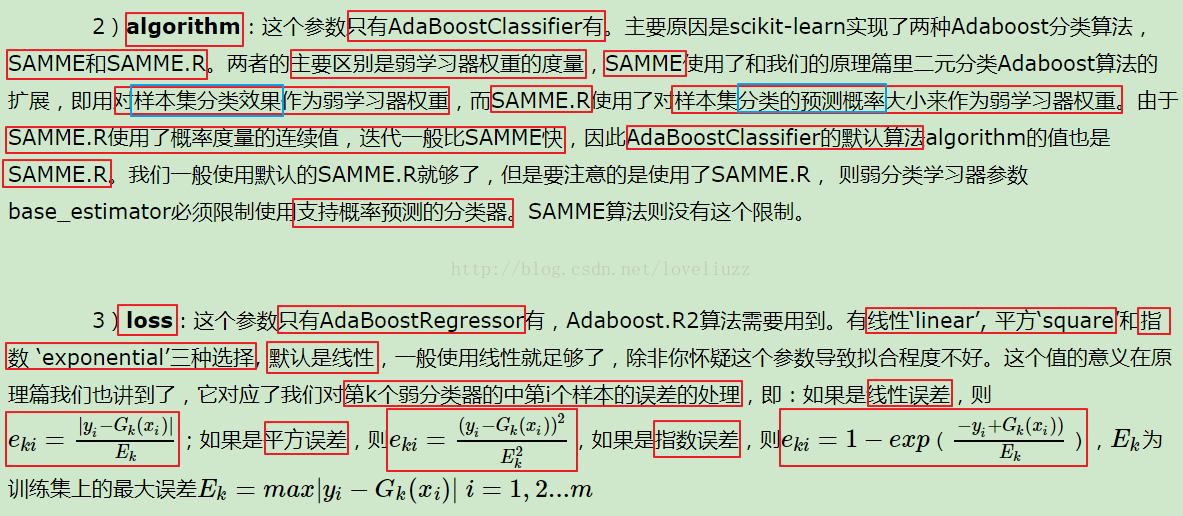

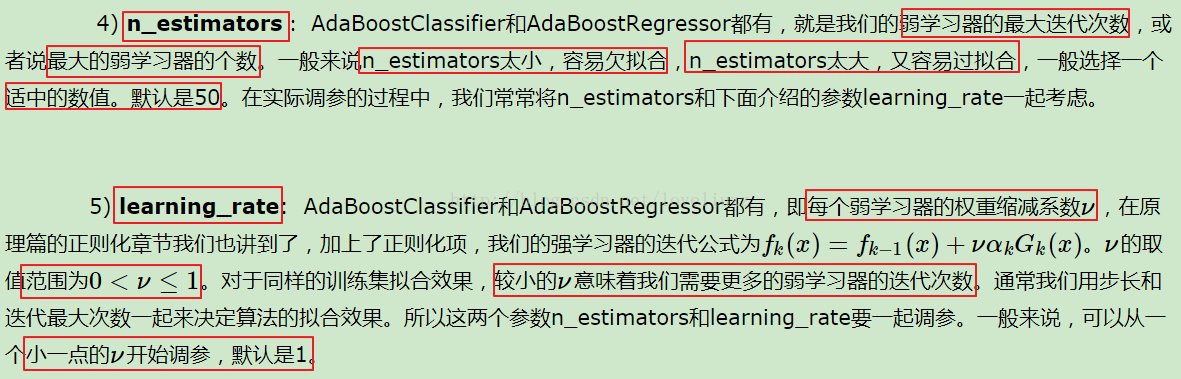

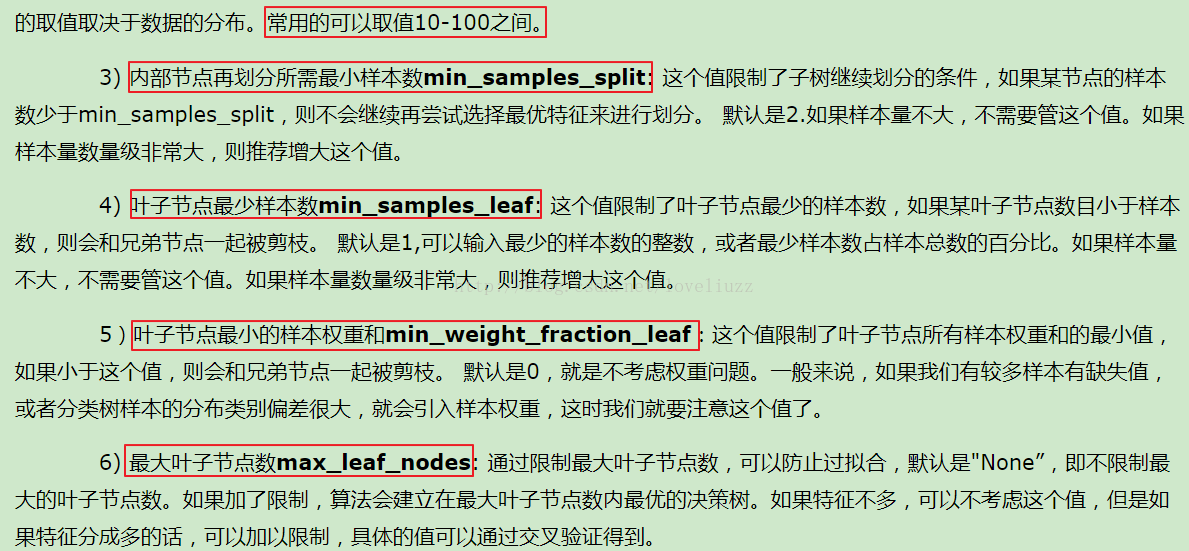
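To make the weight-update idea concrete, here is a minimal pure-Python sketch of the standard discrete AdaBoost procedure for binary labels in {-1, +1}, using one-dimensional threshold stumps as weak learners. The function names and the ten-point toy dataset are hypothetical and chosen only for illustration; the notation may differ from the figures above.

```python
import math


def stump_predict(x, threshold, polarity):
    """Threshold stump: returns `polarity` below the threshold, else -polarity."""
    return polarity if x < threshold else -polarity


def fit_adaboost(xs, ys, n_rounds):
    """Discrete AdaBoost with 1-D stumps; ys must contain -1 / +1 labels."""
    n = len(xs)
    weights = [1.0 / n] * n                      # start from uniform weights
    ordered = sorted(xs)
    thresholds = [(a + b) / 2 for a, b in zip(ordered[:-1], ordered[1:])]
    ensemble = []                                # (alpha, threshold, polarity)
    for _ in range(n_rounds):
        # Pick the stump with the smallest weighted error.
        best = None
        for t in thresholds:
            for p in (1, -1):
                err = sum(w for x, y, w in zip(xs, ys, weights)
                          if stump_predict(x, t, p) != y)
                if best is None or err < best[0]:
                    best = (err, t, p)
        err, t, p = best
        err = min(max(err, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # weight of this weak learner
        ensemble.append((alpha, t, p))
        # Raise the weights of misclassified samples, lower the others.
        weights = [w * math.exp(-alpha * y * stump_predict(x, t, p))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]   # renormalise to sum to 1
    return ensemble


def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump_predict(x, t, p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical toy data: no single stump separates it perfectly.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = fit_adaboost(xs, ys, n_rounds=3)
train_errors = sum(predict(ensemble, x) != y for x, y in zip(xs, ys))
print("stumps (alpha, threshold, polarity):", ensemble)
print("training errors:", train_errors)
```

Each round re-weights the samples so that the next stump concentrates on the points the previous ones got wrong; the final prediction is a weighted vote in which more accurate stumps receive a larger `alpha`.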

IV. The AdaBoost classes in sklearn

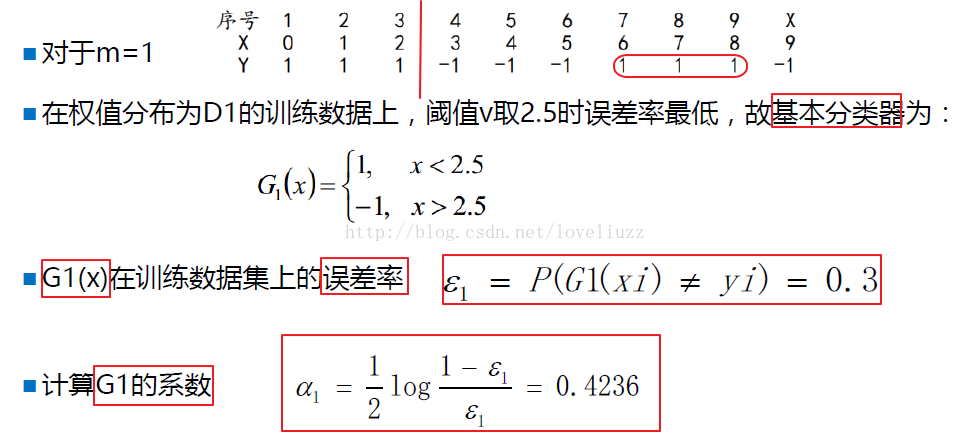

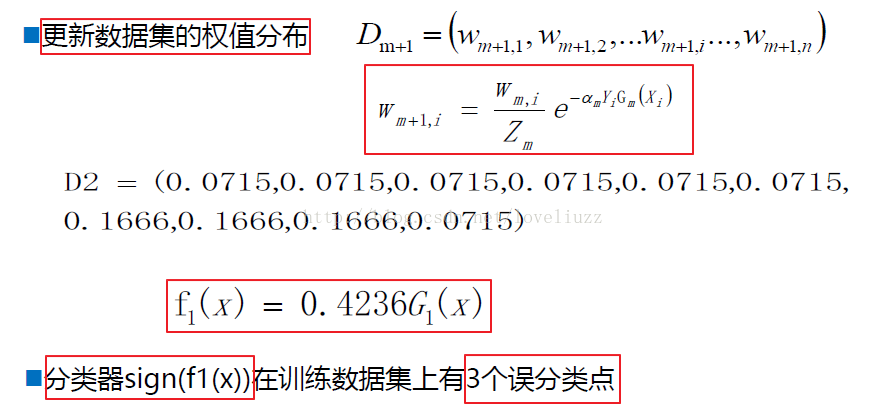

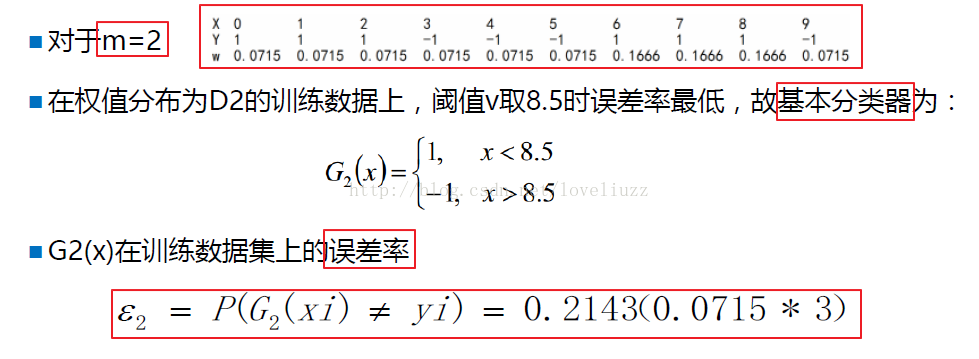

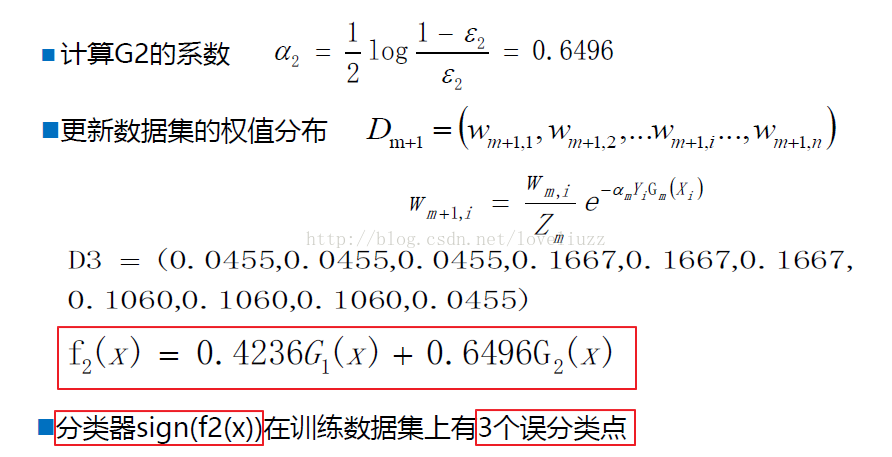

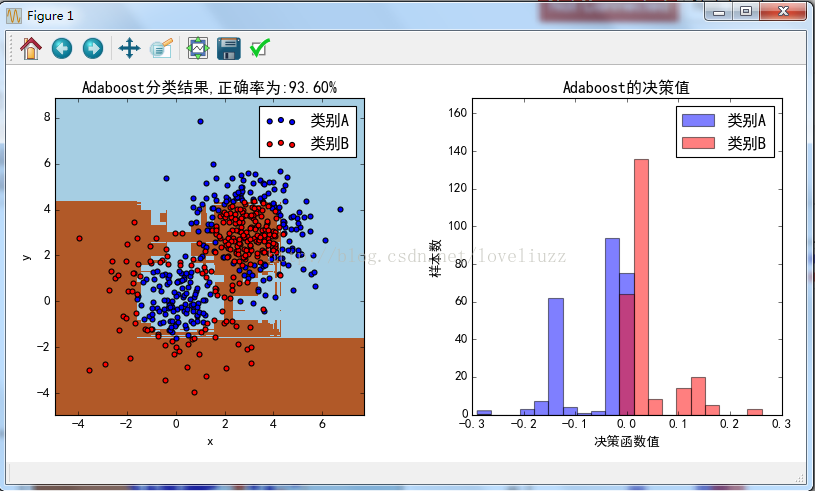
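A small sketch of how the main `AdaBoostClassifier` parameters fit together in practice. The synthetic data and the values of `n_estimators` and `learning_rate` are assumptions for illustration only. `staged_score` reports the accuracy after each boosting round, which is one way to judge how many weak learners are actually needed.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, purely for illustration (not from the original post).
X, y = make_classification(n_samples=400, n_features=8, n_informative=4,
                           random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1)

# The weak learner is passed positionally, so the call does not depend on
# whether the keyword is named base_estimator (older) or estimator (newer).
clf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1),
                         n_estimators=50, learning_rate=0.5, random_state=1)
clf.fit(X_train, y_train)

# Test accuracy after 1, 2, ..., k boosting rounds.
staged_scores = list(clf.staged_score(X_test, y_test))
best_round = max(range(len(staged_scores)),
                 key=staged_scores.__getitem__) + 1
print("rounds fitted:", len(clf.estimators_))
print("best test accuracy %.3f reached at round %d"
      % (staged_scores[best_round - 1], best_round))
```

If the staged accuracy flattens out early, a smaller `n_estimators` gives much the same model at lower cost.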

V. AdaBoost example

(1) Key points

(2) Example code

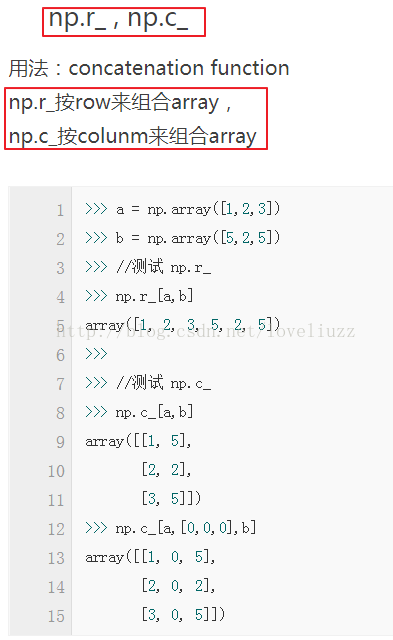

    #!/usr/bin/env python
    # -*- coding:utf-8 -*-
    # Author:ZhengzhengLiu

    # AdaBoost algorithm
    from sklearn.ensemble import AdaBoostClassifier
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.datasets import make_gaussian_quantiles
    import numpy as np
    import matplotlib.pyplot as plt

    # Create the data.
    # 2-D normal distribution split into two classes by quantile:
    # 200 samples, 2 features, covariance coefficient 2.
    X1, y1 = make_gaussian_quantiles(cov=2, n_samples=200, n_features=2,
                                     n_classes=2, random_state=1)
    # A second Gaussian dataset centred at (3, 3): 300 samples, covariance 1.5.
    X2, y2 = make_gaussian_quantiles(mean=(3, 3), cov=1.5, n_samples=300,
                                     n_features=2, n_classes=2, random_state=1)
    # Merge the two groups; the labels of the second group are flipped.
    X = np.concatenate((X1, X2))
    y = np.concatenate((y1, -y2 + 1))

    # Build the AdaBoost model.
    # Note: algorithm="SAMME.R" is valid in sklearn 0.19; recent scikit-learn
    # releases deprecate and then remove it, so drop the argument there.
    bdt = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1),
                             algorithm="SAMME.R", n_estimators=200)
    # With a lot of data, increase the base classifier's max_depth (or leave it
    # unlimited); the author suggests a depth in the range 10-100.
    # With little data, use a small tree depth or a small n_estimators.
    # n_estimators: number of iterations, i.e. maximum number of weak classifiers.
    # base_estimator: the weak classifier, here DecisionTreeClassifier;
    #     the default is a CART tree.
    # algorithm: SAMME or SAMME.R; the latter is a refinement that updates the
    #     weights from predicted probabilities, so the weak classifier must be
    #     able to compute probabilities.
    # learning_rate: typically 0 < v <= 1, default 1; a shrinkage
    #     (regularisation) factor.
    # loss (AdaBoostRegressor only): 'linear', 'square' or 'exponential';
    #     'linear' is usually sufficient.

    # Train.
    bdt.fit(X, y)

    plot_step = 0.02
    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    # meshgrid generates the grid of points to evaluate.
    xx, yy = np.meshgrid(np.arange(x_min, x_max, plot_step),
                         np.arange(y_min, y_max, plot_step))

    # Predict; np.c_ stacks the flattened arrays as columns.
    Z = bdt.predict(np.c_[xx.ravel(), yy.ravel()])
    # Restore the grid shape.
    Z = Z.reshape(xx.shape)

    # Plot.
    plot_colors = "br"
    class_names = "AB"

    plt.figure(figsize=(10, 5), facecolor="w")
    # Left subplot: decision regions and samples.
    plt.subplot(1, 2, 1)
    plt.pcolormesh(xx, yy, Z, cmap=plt.cm.Paired)
    for i, n, c in zip(range(2), class_names, plot_colors):
        mask = (y == i)
        plt.scatter(X[mask, 0], X[mask, 1], c=c, label="Class %s" % n)
    plt.xlim(x_min, x_max)
    plt.ylim(y_min, y_max)
    plt.legend(loc="upper right")
    plt.xlabel("x")
    plt.ylabel("y")
    plt.title("AdaBoost classification result, accuracy: %.2f%%"
              % (bdt.score(X, y) * 100))
    plt.savefig("adaboost_classification_result.png")

    # Values of the decision function on the training data.
    twoclass_out = bdt.decision_function(X)
    # Range used for the histograms.
    plot_range = (twoclass_out.min(), twoclass_out.max())

    # Right subplot: histogram of decision values per class.
    plt.subplot(1, 2, 2)
    for i, n, c in zip(range(2), class_names, plot_colors):
        plt.hist(twoclass_out[y == i], bins=20, range=plot_range,
                 facecolor=c, label="Class %s" % n, alpha=.5)
    ax_x1, ax_x2, ax_y1, ax_y2 = plt.axis()
    plt.axis((ax_x1, ax_x2, ax_y1, ax_y2 * 1.2))
    plt.legend(loc="upper right")
    plt.xlabel("Decision function value")
    plt.ylabel("Number of samples")
    plt.title("AdaBoost decision values")

    plt.tight_layout()
    plt.subplots_adjust(wspace=0.35)
    plt.savefig("adaboost_decision_values.png")
    plt.show()

VI. Comparison of classification algorithms

    #!/usr/bin/env python
    # -*- coding:utf-8 -*-
    # Author:ZhengzhengLiu

    # Comparison of classification algorithms
    import numpy as np
    import matplotlib.pyplot as plt
    from matplotlib.colors import ListedColormap
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler
    from sklearn.linear_model import LogisticRegressionCV
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.ensemble import (RandomForestClassifier, AdaBoostClassifier,
                                  GradientBoostingClassifier)
    # Generators for moon-shaped, circular and generic classification datasets.
    from sklearn.datasets import make_moons, make_circles, make_classification

    # A roughly linearly separable dataset with added uniform noise.
    X, y = make_classification(n_features=2, n_redundant=0, n_informative=2,
                               random_state=1, n_clusters_per_class=1)
    rng = np.random.RandomState(2)
    X += 2 * rng.uniform(size=X.shape)
    linearly_separable = (X, y)

    datasets = [make_moons(noise=0.3, random_state=0),
                make_circles(noise=0.2, factor=0.4, random_state=1),
                linearly_separable]

    names = ["Nearest Neighbors", "Logistic", "Decision Tree",
             "Random Forest", "AdaBoost", "GBDT"]
    classifiers = [
        KNeighborsClassifier(3),
        LogisticRegressionCV(),
        DecisionTreeClassifier(max_depth=5),
        RandomForestClassifier(max_depth=5, n_estimators=10, max_features=1),
        AdaBoostClassifier(n_estimators=10, learning_rate=1.5),
        GradientBoostingClassifier(n_estimators=10, learning_rate=1.5),
    ]

    # Plot: one row per dataset, one column for the raw data plus one per model.
    figure = plt.figure(figsize=(27, 9), facecolor="w")
    i = 1
    h = .02  # grid step
    for ds in datasets:
        X, y = ds
        X = StandardScaler().fit_transform(X)
        X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.4)

        x_min, x_max = X[:, 0].min() - .5, X[:, 0].max() + .5
        y_min, y_max = X[:, 1].min() - .5, X[:, 1].max() + .5
        xx, yy = np.meshgrid(np.arange(x_min, x_max, h),
                             np.arange(y_min, y_max, h))
        cm = plt.cm.RdBu
        cm_bright = ListedColormap(["r", "b", "y"])

        # First column: the dataset itself (test points are semi-transparent).
        ax = plt.subplot(len(datasets), len(classifiers) + 1, i)
        ax.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap=cm_bright)
        ax.scatter(X_test[:, 0], X_test[:, 1], c=y_test, cmap=cm_bright,
                   alpha=0.6)
        ax.set_xlim(xx.min(), xx.max())
        ax.set_ylim(yy.min(), yy.max())
        ax.set_xticks(())
        ax.set_yticks(())
        i += 1

        # One subplot per algorithm.
        for name, clf in zip(names, classifiers):
            ax = plt.subplot(len(datasets), len(classifiers) + 1, i)
            clf.fit(X_train, y_train)
            score = clf.score(X_test, y_test)

            # Use the decision function when available, otherwise the
            # predicted probability of class 1.
            if hasattr(clf, "decision_function"):
                Z = clf.decision_function(np.c_[xx.ravel(), yy.ravel()])
            else:
                Z = clf.predict_proba(np.c_[xx.ravel(), yy.ravel()])[:, 1]
            Z = Z.reshape(xx.shape)

            ax.contourf(xx, yy, Z, cmap=cm, alpha=.8)
            ax.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap=cm_bright)
            ax.scatter(X_test[:, 0], X_test[:, 1], c=y_test, cmap=cm_bright,
                       alpha=0.6)
            ax.set_xlim(xx.min(), xx.max())
            ax.set_ylim(yy.min(), yy.max())
            ax.set_xticks(())
            ax.set_yticks(())
            ax.set_title(name)
            # Test accuracy in the lower-right corner of the subplot.
            ax.text(xx.max() - .3, yy.min() + .3, ("%.2f" % score).lstrip("0"),
                    size=15, horizontalalignment="right")
            i += 1

    # Show the figure.
    figure.subplots_adjust(left=.02, right=.98)
    plt.savefig("classifier_comparison.png")
    plt.show()