Real-Time Stock Data Scraping with Python

阿新 • Published: 2019-01-01

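**I've been tinkering with stocks lately and wanted to follow real-time price moves without relying on what existing online trading software shows, so I decided to write my own Python tool that fetches current stock prices:**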

I. Getting data for all stocks listed on the Shanghai and Shenzhen exchanges

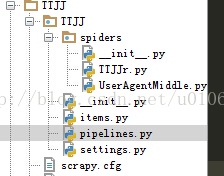

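I mainly scrape the names and codes of all Shanghai and Shenzhen stocks from the East Money website (eastmoney.com). There are many ways to crawl this kind of data; I used the Scrapy crawler framework (which I happened to be learning) and saved the results to a file named TTJJ.json. After creating a new Scrapy project called TTJJ, I added a User-Agent middleware file to avoid being banned by the server (not really necessary here, because my request rate is low enough that the server won't refuse me). The project layout is as follows: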

The main spider code, in TTJJr, is shown below:

```python
import re

from scrapy import Spider

from TTJJ.items import TTjjItem


class TTJJi(Spider):
    name = "TTJJ"
    allowed_domains = ["eastmoney.com"]
    start_urls = ["http://quote.eastmoney.com/stocklist.html#sh"]

    # Captures the link text of each <li>, e.g. "浦发银行(600000)"
    link_re = re.compile(r"<li>.*?<a.*?target=.*?>(.*?)</a>")

    def parse(self, response):
        # The page holds two <ul> lists: the first is Shanghai, the second Shenzhen
        lists = response.xpath('//div[@class="qox"]/div[@class="quotebody"]/div/ul')
        for ul, prefix in zip(lists, ("sh", "sz")):
            for text in self.link_re.findall(ul.get()):
                # Split on the last "(" so names containing "(" stay intact
                name, code = text.rsplit("(", 1)
                # Create a fresh item per stock instead of mutating one shared object
                item = TTjjItem()
                item["stockName"] = name  # Python 3 str is already Unicode
                item["stockCode"] = prefix + code.rstrip(")")
                self.logger.info(text)
                yield item
```
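The name/code extraction can be tried outside Scrapy. The sketch below reuses the spider's regular expression on a hand-written HTML fragment; the markup is a hypothetical stand-in for the East Money list items (the real page may differ), and `parse_stock_list` is a helper introduced only for illustration.

```python
import re

# Same pattern as the spider: captures the link text inside each <li>
LINK_RE = re.compile(r"<li>.*?<a.*?target=.*?>(.*?)</a>")


def parse_stock_list(ul_html, prefix):
    """Hypothetical helper: turn one <ul> of 'Name(code)' links into (name, code) pairs.

    prefix is "sh" for the Shanghai list and "sz" for the Shenzhen list.
    """
    stocks = []
    for text in LINK_RE.findall(ul_html):
        # Split on the last "(" and drop the closing ")" from the code
        name, code = text.rsplit("(", 1)
        stocks.append((name, prefix + code.rstrip(")")))
    return stocks


# Hypothetical markup shaped like the list items the spider expects
sample = (
    '<ul><li><a target="_blank" href="http://quote.eastmoney.com/sh600000.html">浦发银行(600000)</a></li>'
    '<li><a target="_blank" href="http://quote.eastmoney.com/sh600004.html">白云机场(600004)</a></li></ul>'
)

print(parse_stock_list(sample, "sh"))
# -> [('浦发银行', 'sh600000'), ('白云机场', 'sh600004')]
```

Because the pattern has no `re.DOTALL`, each `.*?` stops at a newline, so it relies on each `<li>` sitting on a single line, as in this sample.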

I found some UserAgentMiddle code online. Once it is registered in settings.py, Scrapy stops using its default User-Agent. The code is as follows:

```python
# -*- coding: utf-8 -*-
import random

from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware


class UserAgentMiddle(UserAgentMiddleware):
    """Downloader middleware that sends a random User-Agent with each request."""

    def __init__(self, user_agent=''):
        self.user_agent = user_agent

    def process_request(self, request, spider):
        ua = random.choice(self.user_agent_list)
        if ua:
            # Log the User-Agent currently in use
            spider.logger.info('Current UserAgent: %s', ua)
            # Overwrite any User-Agent already set on the request
            request.headers['User-Agent'] = ua

    # All entries below are Chrome user-agent strings; for more, see
    # http://www.useragentstring.com/pages/useragentstring.php
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
        "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]
```
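Registering the middleware in settings.py might look roughly like the fragment below. It is a sketch that assumes the class lives in a module named `TTJJ/middlewares.py` (the file name is not given here) and a Scrapy version where the built-in middleware sits at `scrapy.downloadermiddlewares.useragent.UserAgentMiddleware`. Mapping a middleware to `None` disables it; 400 is an arbitrary priority chosen so the custom one runs before the built-in slot of 500.

```python
# settings.py (fragment); the module path TTJJ.middlewares is an assumption
DOWNLOADER_MIDDLEWARES = {
    # Turn off Scrapy's built-in User-Agent middleware...
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": None,
    # ...and enable the random User-Agent middleware in its place
    "TTJJ.middlewares.UserAgentMiddle": 400,
}
```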

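In addition, items.py defines the storage format for the scraped data, pipelines.py defines how the data is processed (that is, saved to the JSON file), and some configuration lives in settings.py. The code for each is as follows: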