Deep Learning Basics (2): From the Multi-Layer Perceptron (MLP) to the Convolutional Neural Network (CNN)

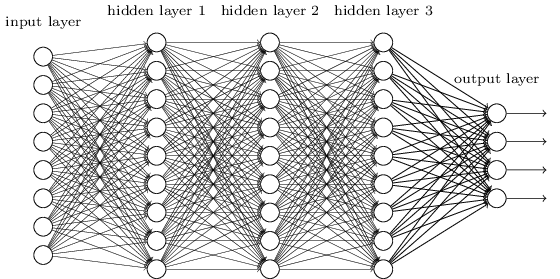

The classic multi-layer perceptron (Multi-Layer Perceptron, MLP) is structurally a fully-connected network of adjacent layers.

That is, every neuron in the network is connected to every neuron in adjacent layers.
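As a minimal sketch of what "fully-connected" means in practice, the plain-Python snippet below computes one dense layer and counts its parameters. The sigmoid activation, the tiny layer sizes, the 30-neuron hidden layer in the count, and the names `dense_forward` and `dense_param_count` are all illustrative assumptions, not taken from the text above.

```python
import math

def sigmoid(z):
    """Logistic activation, sigma(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_forward(weights, biases, inputs):
    """Forward pass of one fully-connected layer.

    weights[i][j] is the weight from input j to neuron i, so every
    neuron owns one weight for every input of the previous layer.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def dense_param_count(n_in, n_out):
    """One weight per (input, neuron) pair plus one bias per neuron."""
    return n_in * n_out + n_out

# Hypothetical toy layer: 4 inputs feeding 3 neurons.
weights = [
    [0.1, -0.2, 0.3, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.5, 0.5, 0.5, 0.5],
]
biases = [0.0, 0.0, -1.0]
outputs = dense_forward(weights, biases, [1.0, 2.0, 3.0, 4.0])

# A flattened 28*28 image feeding a hypothetical 30-neuron hidden layer.
mlp_params = dense_param_count(28 * 28, 30)
```

The parameter count grows with the product of the two layer sizes, which is the cost that local receptive fields and shared weights are meant to reduce.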

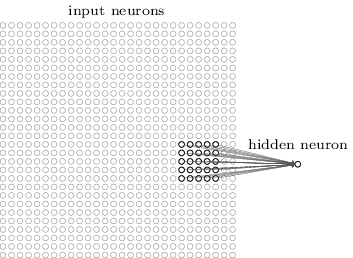

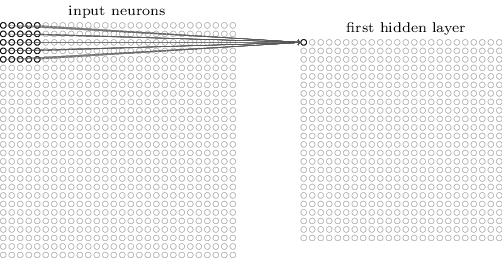

Local receptive fields

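In a fully-connected multi-layer perceptron, the input is treated as (or must first be converted into) a column vector. In a convolutional neural network, taking handwritten character recognition as the example, the input is no longer reshaped into a (28*28, 1) column vector but is instead treated as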

That region in the input image is called the local receptive field for the hidden neuron. It’s a little window on the input pixels. Each connection learns a weight (one single weight, that is, the entire

Shared weights and biases

Note the correspondence: the top-left corner maps to the top-left corner. Does that mean the last four columns go unused?
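The question about the last four columns can be checked with a few lines of Python. This sketch assumes a 28-pixel-wide input, a 5-pixel-wide receptive field, a stride of 1 and no padding; `window_positions` is a hypothetical helper name. Under those assumptions the last four columns never serve as the left edge of a window, but they are still covered by the windows that start further left.

```python
def window_positions(input_size, field_size, stride=1):
    """Offsets at which a field_size-wide window fits inside the input."""
    return list(range(0, input_size - field_size + 1, stride))

# Assumed sizes: 28-pixel-wide input, 5-pixel-wide receptive field.
positions = window_positions(28, 5)

# Collect every input column touched by at least one window.
covered = set()
for start in positions:
    covered.update(range(start, start + 5))

# Columns that can never be the left edge of a window.
never_left_edge = sorted(set(range(28)) - set(positions))
```

Here `positions` runs from 0 to 23, so the hidden layer is 24 neurons wide, and `never_left_edge` holds columns 24 to 27 even though `covered` contains all 28 columns. The same reasoning applies to rows.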

The output of the corresponding hidden-layer neuron is:

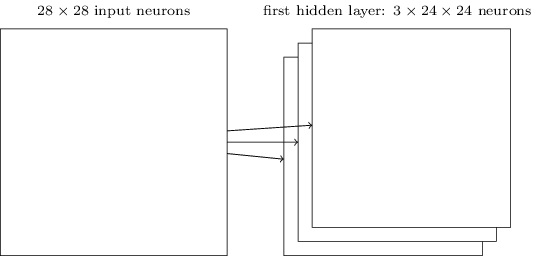
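To make the shared-weight computation concrete, here is a small plain-Python sketch. It assumes the usual form of the formula, in which hidden neuron (j, k) applies a sigmoid to the shared bias plus the weighted sum over its receptive field, with stride 1 and no padding. The function name `feature_map` and the toy data are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic activation, sigma(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def feature_map(image, weights, bias):
    """Apply one shared weight window and one shared bias to a square image.

    Hidden neuron (j, k) computes
        sigma(bias + sum over l, m of weights[l][m] * image[j + l][k + m])
    so every hidden neuron reuses the same weights and the same bias.
    """
    n = len(image)      # input is n x n
    f = len(weights)    # receptive field is f x f
    out = n - f + 1     # stride 1, no padding (assumed)
    return [
        [
            sigmoid(bias + sum(weights[l][m] * image[j + l][k + m]
                               for l in range(f) for m in range(f)))
            for k in range(out)
        ]
        for j in range(out)
    ]

# Hypothetical data: a blank 28x28 image with one bright top-left pixel,
# and a 5x5 window whose weights are all 1.
image = [[0.0] * 28 for _ in range(28)]
image[0][0] = 1.0
weights = [[1.0] * 5 for _ in range(5)]
hidden = feature_map(image, weights, bias=0.0)
```

Only the top-left hidden neuron sees the bright pixel, so `hidden[0][0]` differs from its neighbours while the rest of the 24×24 map stays at the sigmoid of zero.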

The network structure I’ve described so far can detect just a single kind of localized feature. To do image recognition we’ll need more than one feature map. And so a complete convolutional layer consists of several different feature maps:

Feature map: the shared weights and bias that define a feature map are often called a filter or kernel.

In the example shown, there are 3 feature maps. Each feature map is defined by a set of 5×5 shared weights, and a single shared bias. The result is that the network can detect 3 different kinds of features (feature detection), with each feature being detectable across the entire image.
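The bookkeeping for a layer of several feature maps can be sketched as follows. The snippet assumes a 28×28 input, stride 1 and no padding; the helper names `conv_layer_shape` and `conv_layer_param_count` are hypothetical.

```python
def conv_layer_shape(input_size, field_size, n_maps):
    """Output shape (maps, height, width) for stride 1 and no padding."""
    out = input_size - field_size + 1
    return (n_maps, out, out)

def conv_layer_param_count(field_size, n_maps):
    """Each feature map owns field_size^2 shared weights plus one shared bias."""
    return n_maps * (field_size * field_size + 1)

# The 3-feature-map example, applied to an assumed 28x28 input.
shape = conv_layer_shape(28, 5, 3)
params = conv_layer_param_count(5, 3)
```

With these assumptions the layer produces three 24×24 maps from only 3 × 26 = 78 parameters, because the weights are shared across every position in the image.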

I’ve shown just 3 feature maps, to keep the diagram above simple. However, in practice convolutional networks may use more (and perhaps many more) feature maps. One of the early convolutional networks, LeNet-5, used 6 feature maps, each associated with a 5×5 local receptive field, to recognize MNIST digits. So the example illustrated above is actually pretty close to LeNet-5. In the examples we develop later in the chapter we’ll use convolutional layers with 20 and 40 feature maps. Let’s take a quick peek at some of the features which are learned.