Predicting Age, Gender, and Emotion from Faces (python + keras) (Part 3)

阿新 • Published: 2019-01-04

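* Background *

Face recognition is now widely deployed and runs smoothly even on video streams, which matters a great deal for security applications and for consumer-facing products alike. After working through face recognition, I have moved on to predicting age, determining gender, and recognizing expressions from facial movements; later I may also look into attractiveness scoring and detecting whether someone is wearing glasses or a hat. Facial expression recognition is mainly applied in human-computer interaction, intelligent control, security, healthcare, and communications. Attractiveness prediction could feed into virtual makeup: customers preview themselves with the makeup applied and only have it done for real once they are satisfied, which is the outcome most likely to please them.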

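Implementation

Where does it run?

First, real-time recognition in a video stream: this reuses the face alignment step from face recognition, and once a face has been detected the detection result is passed to the prediction models.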

Second, directly on a still image: detect the face first, then predict.
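Both cases come down to turning each detected face rectangle into a crop that can be resized and fed to the model. A minimal sketch of that cropping step in plain Python follows. The function name `expand_box`, the 40% margin, and the example coordinates are assumptions for illustration and are not taken from demo.py.

```python
def expand_box(left, top, right, bottom, img_w, img_h, margin=0.4):
    """Grow a face rectangle by `margin` on each side and clamp it to the image.

    Coordinates are inclusive pixel indices, as a face detector would return them.
    The default margin of 0.4 is an illustrative assumption.
    """
    w = right - left + 1
    h = bottom - top + 1
    x1 = max(int(left - margin * w), 0)
    y1 = max(int(top - margin * h), 0)
    x2 = min(int(right + margin * w), img_w - 1)
    y2 = min(int(bottom + margin * h), img_h - 1)
    return x1, y1, x2, y2


# Hypothetical detection of a 100x100 face in a 640x480 frame.
box = expand_box(left=100, top=120, right=199, bottom=219, img_w=640, img_h=480)
print(box)  # (60, 80, 239, 259)
```

The crop `frame[y1:y2 + 1, x1:x2 + 1]` would then be resized to the model's input size before prediction. Keeping some context around the face is a design choice; the right margin depends on how the training crops were made.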

Code

demo.py

    import os
    import cv2
    import time
    import numpy as np
    import argparse
    import dlib
    from contextlib import contextmanager
    from wide_resnet import WideResNet
    from keras.utils.data_utils import get_file
    from keras.models import model_from_json

    # Pretrained weights for the age/gender model
    pretrained_model = "https://github.com/yu4u/age-gender-estimation/releases/download/v0.5/weights.18-4.06.hdf5"

wide_resnet.py

    # This code is imported from the following project: https://github.com/asmith26/wide_resnets_keras

    import logging
    import sys
    import numpy as np
    from keras.models import Model
    from keras.layers import Input, Activation, add, Dense, Flatten, Dropout
    from keras.layers.convolutional import Conv2D, AveragePooling2D
    from keras.layers.normalization import BatchNormalization
    from keras.regularizers import l2
    from keras import backend as K

    sys.setrecursionlimit(2 ** 20)
    np.random.seed(2 ** 10)


    class WideResNet:
        def __init__(self, image_size, depth=16, k=8):
            self._depth = depth
            self._k = k
            self._dropout_probability = 0
            self._weight_decay = 0.0005
            self._use_bias = False
            self._weight_init = "he_normal"

            # Legacy Keras backend call; newer Keras versions use
            # K.image_data_format() instead.
            if K.image_dim_ordering() == "th":
                logging.debug("image_dim_ordering = 'th'")
                self._channel_axis = 1
                self._input_shape = (3, image_size, image_size)
            else:
                logging.debug("image_dim_ordering = 'tf'")
                self._channel_axis = -1
                self._input_shape = (image_size, image_size, 3)

        # Wide residual network http://arxiv.org/abs/1605.07146
        def _wide_basic(self, n_input_plane, n_output_plane, stride):
            def f(net):
                # format of conv_params:
                #   [ [kernel_size=("kernel width", "kernel height"),
                #      strides="(stride_vertical,stride_horizontal)",
                #      padding="same" or "valid"] ]
                # B(3,3): original <<basic>> block
                conv_params = [[3, 3, stride, "same"],
                               [3, 3, (1, 1), "same"]]

                n_bottleneck_plane = n_output_plane

                # Residual block
                for i, v in enumerate(conv_params):
                    if i == 0:
                        if n_input_plane != n_output_plane:
                            # The shortcut below also starts from the activated tensor.
                            net = BatchNormalization(axis=self._channel_axis)(net)
                            net = Activation("relu")(net)
                            convs = net
                        else:
                            convs = BatchNormalization(axis=self._channel_axis)(net)
                            convs = Activation("relu")(convs)

                        convs = Conv2D(n_bottleneck_plane, kernel_size=(v[0], v[1]),
                                       strides=v[2],
                                       padding=v[3],
                                       kernel_initializer=self._weight_init,
                                       kernel_regularizer=l2(self._weight_decay),
                                       use_bias=self._use_bias)(convs)
                    else:
                        convs = BatchNormalization(axis=self._channel_axis)(convs)
                        convs = Activation("relu")(convs)
                        if self._dropout_probability > 0:
                            convs = Dropout(self._dropout_probability)(convs)
                        convs = Conv2D(n_bottleneck_plane, kernel_size=(v[0], v[1]),
                                       strides=v[2],
                                       padding=v[3],
                                       kernel_initializer=self._weight_init,
                                       kernel_regularizer=l2(self._weight_decay),
                                       use_bias=self._use_bias)(convs)

                # Shortcut Connection: identity function or 1x1 convolutional
                #  (depends on difference between input & output shape - this
                #   corresponds to whether we are using the first block in each
                #   group; see _layer() ).
                if n_input_plane != n_output_plane:
                    shortcut = Conv2D(n_output_plane, kernel_size=(1, 1),
                                      strides=stride,
                                      padding="same",
                                      kernel_initializer=self._weight_init,
                                      kernel_regularizer=l2(self._weight_decay),
                                      use_bias=self._use_bias)(net)
                else:
                    shortcut = net

                return add([convs, shortcut])

            return f

        # "Stacking Residual Units on the same stage"
        def _layer(self, block, n_input_plane, n_output_plane, count, stride):
            def f(net):
                # Only the first block of a group changes width or stride.
                net = block(n_input_plane, n_output_plane, stride)(net)
                for i in range(2, int(count + 1)):
                    net = block(n_output_plane, n_output_plane, stride=(1, 1))(net)
                return net

            return f

        # def create_model(self):
        def __call__(self):
            logging.debug("Creating model...")

            assert ((self._depth - 4) % 6 == 0)
            # Integer division keeps the block count an int under Python 3.
            n = (self._depth - 4) // 6

            inputs = Input(shape=self._input_shape)

            n_stages = [16, 16 * self._k, 32 * self._k, 64 * self._k]

            conv1 = Conv2D(filters=n_stages[0], kernel_size=(3, 3),
                           strides=(1, 1),
                           padding="same",
                           kernel_initializer=self._weight_init,
                           kernel_regularizer=l2(self._weight_decay),
                           use_bias=self._use_bias)(inputs)  # "One conv at the beginning (spatial size: 32x32)"

            # Add wide residual blocks
            block_fn = self._wide_basic
            conv2 = self._layer(block_fn, n_input_plane=n_stages[0], n_output_plane=n_stages[1], count=n, stride=(1, 1))(conv1)
            conv3 = self._layer(block_fn, n_input_plane=n_stages[1], n_output_plane=n_stages[2], count=n, stride=(2, 2))(conv2)
            conv4 = self._layer(block_fn, n_input_plane=n_stages[2], n_output_plane=n_stages[3], count=n, stride=(2, 2))(conv3)
            batch_norm = BatchNormalization(axis=self._channel_axis)(conv4)
            relu = Activation("relu")(batch_norm)

            # Classifier block: two softmax heads share the flattened features
            pool = AveragePooling2D(pool_size=(8, 8), strides=(1, 1), padding="same")(relu)
            flatten = Flatten()(pool)
            predictions_g = Dense(units=2, kernel_initializer=self._weight_init, use_bias=self._use_bias,
                                  kernel_regularizer=l2(self._weight_decay), activation="softmax",
                                  name="pred_gender")(flatten)
            predictions_a = Dense(units=101, kernel_initializer=self._weight_init, use_bias=self._use_bias,
                                  kernel_regularizer=l2(self._weight_decay), activation="softmax",
                                  name="pred_age")(flatten)
            model = Model(inputs=inputs, outputs=[predictions_g, predictions_a])

            return model


    def main():
        model = WideResNet(64)()
        model.summary()


    if __name__ == '__main__':
        main()
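To make the default configuration concrete, the size bookkeeping in `__call__` can be worked through by hand. The sketch below is plain Python and does not build the network. The helper name `wide_resnet_shapes` is hypothetical, and it assumes the channels-last ("tf") ordering and the 64x64 input used in `main()`.

```python
def wide_resnet_shapes(image_size=64, depth=16, k=8):
    """Reproduce the size arithmetic of WideResNet.__call__ without Keras."""
    assert (depth - 4) % 6 == 0, "depth must have the form 6n + 4"
    n = (depth - 4) // 6                      # residual blocks per group
    n_stages = [16, 16 * k, 32 * k, 64 * k]   # channels after conv1..conv4

    # The three groups use strides (1, 1), (2, 2), (2, 2); with "same"
    # padding a strided convolution yields ceil(size / stride).
    size = image_size
    for stride in (1, 2, 2):
        size = -(-size // stride)             # ceiling division

    # AveragePooling2D(pool_size=(8, 8), strides=(1, 1), padding="same")
    # keeps the spatial size, so Flatten sees size * size * channels values.
    flat_features = size * size * n_stages[3]
    return n, n_stages, size, flat_features


n, n_stages, size, flat = wide_resnet_shapes()
print(n, n_stages, size, flat)  # 2 [16, 128, 256, 512] 16 131072
```

With the defaults this gives 2 blocks per group, channel widths of 16, 128, 256 and 512, and a 16x16x512 feature map, so each of the two softmax heads is a dense layer over 131072 features.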

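Preparation

Environment: python3, TensorFlow-gpu, numpy, keras, dlib

Models: model.h5 (emotion prediction model), model.json (the emotion prediction model in JSON form), weights.18-4.06.hdf5 (gender and age prediction model)

[Model download](https://download.csdn.net/download/hpymiss/10490349)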
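Both heads of the gender and age model end in a softmax (`pred_gender` with 2 units, `pred_age` with 101 units), so the raw outputs still have to be turned into a label. One common approach, sketched below in plain Python, is to take the expected value of the age distribution over the bins 0 to 100. The function names are hypothetical, and the mapping of gender index 0 to "F" is an assumption that should be checked against how the weights were trained.

```python
def expected_age(age_probs):
    """Expected age under a softmax distribution over the bins 0..100."""
    assert len(age_probs) == 101
    return sum(age * p for age, p in enumerate(age_probs))


def gender_label(gender_probs, first_class="F", second_class="M"):
    """Pick a label from the 2-unit softmax; the class order is an assumption."""
    return first_class if gender_probs[0] > 0.5 else second_class


# Toy distribution: probability mass split evenly between ages 30 and 32.
age_probs = [0.0] * 101
age_probs[30] = 0.5
age_probs[32] = 0.5

print(expected_age(age_probs))    # 31.0
print(gender_label([0.2, 0.8]))   # M
```

Using the expectation rather than the most likely bin gives a smoother estimate when the probability mass is spread over neighbouring ages.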

Running

python demo.py

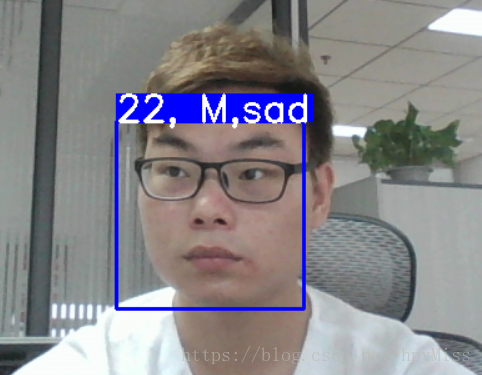

Results

Processing one frame takes less than a second, and the demo runs smoothly on a video stream.
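A per-frame figure like this can be checked by timing the processing function directly. The following is a minimal sketch using only the standard library. `measure_latency` and `fake_predict` are hypothetical stand-ins; in practice the callable would wrap face detection plus both model predictions.

```python
import time


def measure_latency(process_frame, frames):
    """Return (mean seconds per frame, frames per second) for `process_frame`."""
    start = time.perf_counter()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.perf_counter() - start
    mean = elapsed / count if count else 0.0
    fps = count / elapsed if elapsed > 0 else float("inf")
    return mean, fps


def fake_predict(frame):
    """Placeholder for detection and inference on one frame (assumption)."""
    time.sleep(0.01)


mean_s, fps = measure_latency(fake_predict, range(5))
print("mean latency: {:.3f} s, throughput: {:.1f} FPS".format(mean_s, fps))
```

Averaging over many frames matters because the first prediction usually includes one-off warm-up costs.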

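Shortcomings: the models are not yet accurate enough and need fine-tuning; how best to improve them still needs investigation.

Hardware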

- GPU:

    name: GeForce GTX 960M major: 5 minor: 0 memoryClockRate(GHz): 1.176
    pciBusID: 0000:02:00.0
    totalMemory: 4.00GiB freeMemory: 3.34GiB

- Processor (i7)