Deploying the Prometheus Operator Monitoring System on Kubernetes

Introduction to Prometheus Operator

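What each component does:

1. MetricServer: an aggregator of resource usage data for the Kubernetes cluster. It collects data for consumers inside the cluster, such as kubectl, HPA, and the scheduler.

2. PrometheusOperator: deploys and manages Prometheus, Alertmanager, and their configuration inside the cluster through custom resources.

3. NodeExporter: exposes the key metrics that describe the state of each node.

4. KubeStateMetrics: collects data about the resource objects in the Kubernetes cluster, which alerting rules can then be written against.

5. Prometheus: a system monitoring and alerting toolkit that stores the monitoring data. It uses a pull model to collect data from the apiserver, scheduler, controller-manager, and kubelet components over HTTP.

6. Grafana: a platform for visualizing statistics and monitoring data.

7. Alertmanager: delivers alert notifications by SMS or email.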

Preparing the deployment environment

Preparing the Kubernetes cluster

The cluster is a 3-node Kubernetes 1.13.0 cluster deployed with kubeadm (1 master node + 2 worker nodes). For the cluster deployment, see:

https://blog.csdn.net/networken/article/details/84991940

Prometheus Operator on GitHub:

https://github.com/coreos/prometheus-operator

Path that contains all of the Prometheus Operator YAML files:

https://github.com/coreos/prometheus-operator/tree/master/contrib/kube-prometheus/manifests

Clone the prometheus-operator repository locally:

git clone https://github.com/coreos/prometheus-operator.git

Copy the YAML files to a working directory:

cp -R prometheus-operator/contrib/kube-prometheus/manifests/ $HOME && cd $HOME/manifests

Deploy all of the YAML files in one step:

$ kubectl apply -f .
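One caveat, stated here as an assumption rather than something observed in this walkthrough: a single pass of `kubectl apply -f .` can report errors for custom resources such as ServiceMonitor if their CustomResourceDefinitions have not finished registering yet, and a second pass usually resolves this. A minimal sketch of a retry wrapper follows. The `retry` helper and the `RETRY_DELAY` variable are hypothetical names introduced only for illustration.

```shell
#!/bin/sh
# retry ATTEMPTS COMMAND [ARGS...]
# Hypothetical helper: rerun COMMAND until it succeeds or ATTEMPTS runs are used up.
# RETRY_DELAY (seconds to wait between attempts, default 5) is an illustrative name.
retry() {
  attempts=$1
  shift
  i=1
  until "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "${RETRY_DELAY:-5}"
  done
  return 0
}

# Example (run from $HOME/manifests against a reachable cluster):
#   retry 5 kubectl apply -f .
```

Because `kubectl apply` is idempotent, rerunning it over resources that were already created should leave them unchanged.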

Check the status of all pods. Some pods may fail to start because their images could not be pulled:

$ kubectl get all -n monitoring -o wide
NAME                                       READY   STATUS              RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
pod/grafana-6689854d5-xtj6c                1/1     Running             0          25m   10.244.1.219    k8s-node1    <none>           <none>
pod/kube-state-metrics-86bc74fd4c-9pzj7    0/4     ContainerCreating   0          25m   <none>          k8s-node1    <none>           <none>
pod/node-exporter-5992x                    0/2     ErrImagePull        0          24m   192.168.92.56   k8s-master   <none>           <none>
pod/node-exporter-9mnpg                    0/2     ErrImagePull        0          24m   192.168.92.58   k8s-node2    <none>           <none>
pod/node-exporter-xzgsv                    0/2     ContainerCreating   0          24m   192.168.92.57   k8s-node1    <none>           <none>
pod/prometheus-adapter-5cc8b5d556-n9nvw    0/1     ContainerCreating   0          25m   <none>          k8s-node1    <none>           <none>
pod/prometheus-operator-5cfb7f4c54-bzc29   0/1     ContainerCreating   0          25m   <none>          k8s-node1    <none>           <none>

Find the images that failed to pull:

$ kubectl describe pod node-exporter-5992x -n monitoring
......
Events:
  Type     Reason     Age                From                  Message
  ----     ------     ----               ----                  -------
  Normal   Scheduled  30m                default-scheduler     Successfully assigned monitoring/node-exporter-5992x to k8s-master
  Warning  Failed     24m                kubelet, k8s-master   Failed to pull image "quay.io/prometheus/node-exporter:v0.16.0": rpc error: code = Unknown desc = context canceled
  Warning  Failed     24m                kubelet, k8s-master   Error: ErrImagePull
  Normal   Pulling    24m                kubelet, k8s-master   pulling image "quay.io/coreos/kube-rbac-proxy:v0.4.0"
  Normal   Pulling    19m (x2 over 30m)  kubelet, k8s-master   pulling image "quay.io/prometheus/node-exporter:v0.16.0"
  Warning  Failed     19m                kubelet, k8s-master   Failed to pull image "quay.io/coreos/kube-rbac-proxy:v0.4.0": rpc error: code = Unknown desc = net/http: TLS handshake timeout
  Warning  Failed     19m                kubelet, k8s-master   Error: ErrImagePull

The Events show that two images failed to pull on the k8s-master node:

quay.io/coreos/kube-rbac-proxy:v0.4.0

quay.io/prometheus/node-exporter:v0.16.0
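Reading the Events by eye works for one pod, but the same information can be filtered out of the `kubectl describe` output. The sketch below assumes the event text keeps the `Failed to pull image "..."` wording shown above. `failed_images` is a hypothetical helper name.

```shell
#!/bin/sh
# failed_images: read `kubectl describe pod` output on stdin and print each
# image named in a 'Failed to pull image "..."' event once, in sorted order.
# Hypothetical helper; it relies on the event wording shown in this post.
failed_images() {
  grep -o 'Failed to pull image "[^"]*"' | cut -d'"' -f2 | sort -u
}

# Example (needs a reachable cluster):
#   kubectl describe pod node-exporter-5992x -n monitoring | failed_images
```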

Pulling the images from Alibaba Cloud

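Log in to the k8s-master node and pull the images manually. Alternatively, search for the images in the Alibaba Cloud or Docker Hub registries, pull them locally, and then retag them.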

Images that need to be pulled:

#node-exporter-daemonset.yaml

quay.io/prometheus/node-exporter:v0.16.0

quay.io/coreos/kube-rbac-proxy:v0.4.0

#kube-state-metrics-deployment.yaml

quay.io/coreos/kube-state-metrics:v1.4.0

quay.io/coreos/addon-resizer:1.0

#0prometheus-operator-deployment.yaml

quay.io/coreos/configmap-reload:v0.0.1

quay.io/coreos/prometheus-config-reloader:v0.26.0

quay.io/coreos/prometheus-operator:v0.26.0

#alertmanager-alertmanager.yaml

quay.io/prometheus/alertmanager:v0.15.3

#prometheus-adapter-deployment.yaml

quay.io/coreos/k8s-prometheus-adapter-amd64:v0.4.1

#prometheus-prometheus.yaml

quay.io/prometheus/prometheus:v2.5.0

#grafana-deployment.yaml

grafana/grafana:5.2.4

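The images above have already been pushed to an Alibaba Cloud registry. Prepare an image list file named imagepath.txt in the $HOME directory. For other image versions, search for them yourself.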

cat $HOME/imagepath.txt

quay.io/prometheus/node-exporter:v0.16.0

quay.io/coreos/kube-rbac-proxy:v0.4.0

......

Run the following script on every node to pull all of the images listed in imagepath.txt to the local machine:

wget -O- https://raw.githubusercontent.com/zhwill/LinuxShell/master/pull-aliyun-images.sh | sh
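The contents of pull-aliyun-images.sh are not reproduced in this post. As a rough sketch of what a pull-and-retag loop of this kind might look like, the code below assumes the mirror stores each image under its final path component with the same tag. The registry prefix `registry.example.com/mirror` is a placeholder, and `mirror_name` and `pull_via_mirror` are hypothetical names, not taken from that script.

```shell
#!/bin/sh
# mirror_name IMAGE MIRROR_PREFIX
# Map quay.io/prometheus/node-exporter:v0.16.0 to MIRROR_PREFIX/node-exporter:v0.16.0.
# Assumption: the mirror keeps only the last path component of the image name.
mirror_name() {
  printf '%s/%s\n' "$2" "${1##*/}"
}

# pull_via_mirror LIST_FILE MIRROR_PREFIX
# For each image in LIST_FILE: pull it from the mirror, tag it with the name
# the manifests expect, then drop the mirror tag.
pull_via_mirror() {
  list=$1
  mirror=$2
  while IFS= read -r image || [ -n "$image" ]; do
    # Skip blank lines and comment lines such as "#grafana-deployment.yaml".
    case $image in
      '' | '#'*) continue ;;
    esac
    src=$(mirror_name "$image" "$mirror")
    docker pull "$src" </dev/null &&
      docker tag "$src" "$image" &&
      docker rmi "$src" ||
      echo "failed: $image" >&2
  done <"$list"
}

# Example (placeholder registry, replace it with a real mirror):
#   pull_via_mirror "$HOME/imagepath.txt" registry.example.com/mirror
```

Retagging matters because the manifests reference the original quay.io names, so the kubelet only finds a local image whose tag matches exactly.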

Check the status of all pods again:

$ kubectl get pod -n monitoring -o wide
NAME                                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
alertmanager-main-0                    2/2     Running   2          19h   10.244.1.227    k8s-node1    <none>           <none>
alertmanager-main-1                    2/2     Running   2          18h   10.244.2.200    k8s-node2    <none>           <none>
alertmanager-main-2                    2/2     Running   2          17h   10.244.1.230    k8s-node1    <none>           <none>
grafana-6689854d5-xtj6c                1/1     Running   1          19h   10.244.1.233    k8s-node1    <none>           <none>
kube-state-metrics-75fd9687fc-dmmlw    4/4     Running   4          19h   10.244.2.205    k8s-node2    <none>           <none>
node-exporter-5992x                    2/2     Running   2          19h   192.168.92.56   k8s-master   <none>           <none>
node-exporter-9mnpg                    2/2     Running   2          19h   192.168.92.58   k8s-node2    <none>           <none>
node-exporter-xzgsv                    2/2     Running   2          19h   192.168.92.57   k8s-node1    <none>           <none>
prometheus-adapter-5cc8b5d556-n9nvw    1/1     Running   1          19h   10.244.1.234    k8s-node1    <none>           <none>
prometheus-k8s-0                       3/3     Running   11         19h   10.244.1.235    k8s-node1    <none>           <none>
prometheus-k8s-1                       3/3     Running   5          17h   10.244.2.207    k8s-node2    <none>           <none>
prometheus-operator-5cfb7f4c54-bzc29   1/1     Running   1          19h   10.244.1.229    k8s-node1    <none>           <none>

When every pod is in the Running state, the deployment has succeeded.
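Rather than scanning the table by eye, the check can be scripted. The sketch below treats a pod as healthy only when its STATUS is Running and both numbers in the READY column match. `not_ready_pods` is a hypothetical helper name.

```shell
#!/bin/sh
# not_ready_pods: read `kubectl get pod --no-headers` output on stdin and print
# the name of every pod that is not Running or has containers that are not ready.
# Hypothetical helper; column 2 is READY (for example 2/2) and column 3 is STATUS.
not_ready_pods() {
  awk '{ split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) print $1 }'
}

# Example (needs a reachable cluster); no output means every pod is ready:
#   kubectl get pod -n monitoring --no-headers | not_ready_pods
# kubectl can also block until the pods are ready:
#   kubectl wait --for=condition=Ready pod --all -n monitoring --timeout=300s
```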

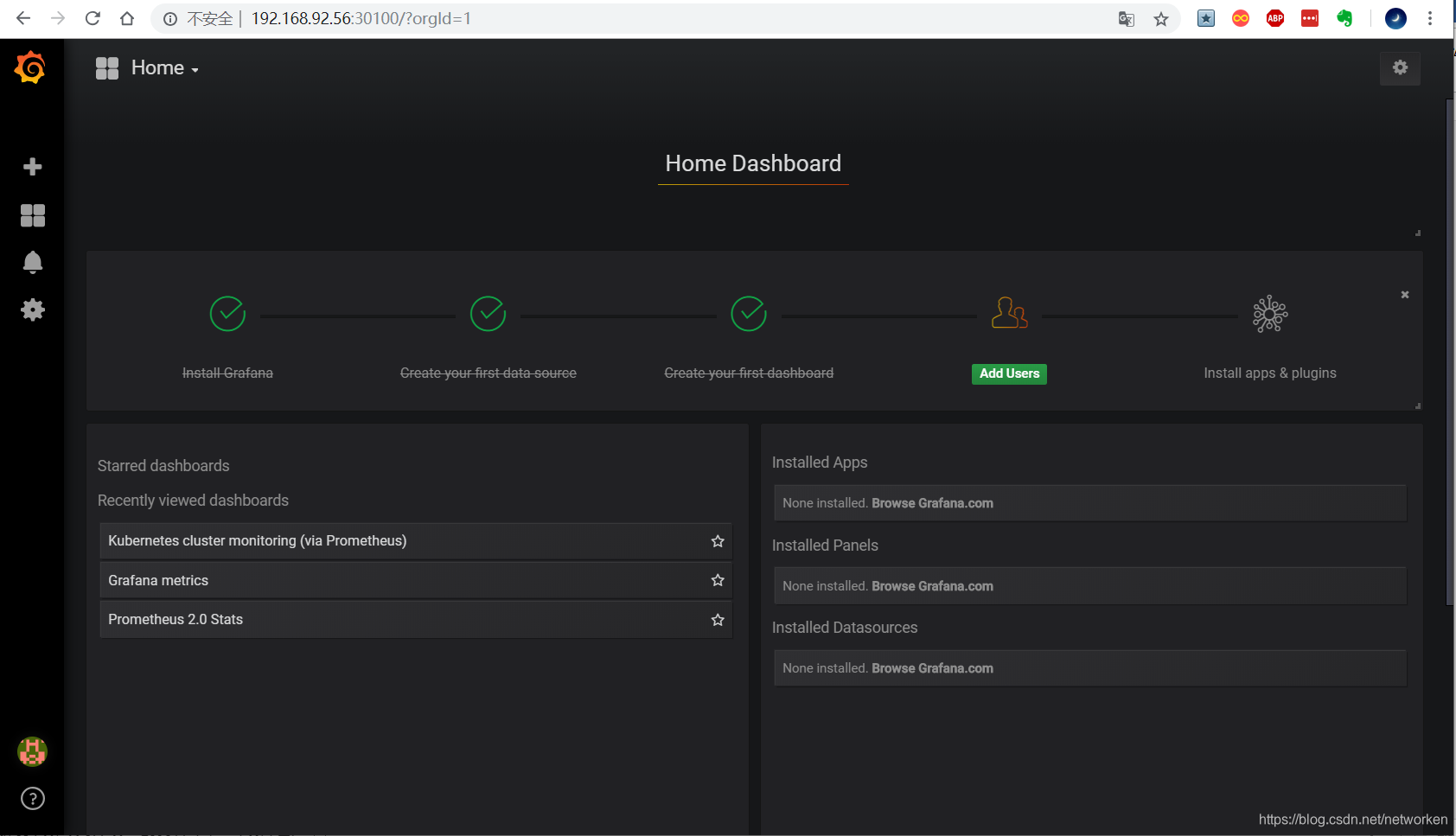

Accessing Grafana

Edit grafana-service.yaml so that Grafana is exposed through a NodePort service:

$ vim grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30100 # added
  selector:
    app: grafana

Apply the change with kubectl apply -f grafana-service.yaml, then check the port exposed by the grafana service:

$ kubectl get service -n monitoring
NAME      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
......
grafana   NodePort   10.107.56.143   <none>        3000:30100/TCP   20h
......

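Using the exposed port 30100, open http://192.168.92.56:30100 in a browser. Here 192.168.92.56 is the IP address of the master node, but the IP of any node works. Enter the username and password (admin/admin) to log in.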
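The node port can also be read from the service listing and checked from the command line before opening a browser. This is a minimal sketch: `node_port_of` is a hypothetical helper, and the curl example relies on Grafana's `/api/health` endpoint being reachable on the node port.

```shell
#!/bin/sh
# node_port_of SERVICE: read `kubectl get service` output on stdin and print the
# node port of SERVICE, taken from a PORT(S) value such as 3000:30100/TCP.
# Hypothetical helper; it assumes the default column layout shown above.
node_port_of() {
  awk -v svc="$1" '$1 == svc { split($5, p, "[:/]"); print p[2] }'
}

# Example (needs a reachable cluster):
#   port=$(kubectl get service -n monitoring | node_port_of grafana)
#   curl -fsS "http://192.168.92.56:${port}/api/health"
# The same value is available without text parsing:
#   kubectl get service grafana -n monitoring -o jsonpath='{.spec.ports[0].nodePort}'
```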

Edit prometheus-service.yaml to use NodePort as well, then access Prometheus from a browser in the same way.

$ vim prometheus-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30200
  selector:
    app: prometheus
    prometheus: k8s

Edit alertmanager-service.yaml to use NodePort as well, then access Alertmanager from a browser in the same way.

$ vim alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9093
    targetPort: web
    nodePort: 30300
  selector:
    alertmanager: main
    app: alertmanager
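As an alternative to editing the three service files by hand, the same change can be made with `kubectl patch`. The sketch below only builds the patch document. It assumes the default strategic merge patch, in which entries of the `ports` list are matched on their `port` value. `nodeport_patch` is a hypothetical helper name.

```shell
#!/bin/sh
# nodeport_patch SERVICE_PORT NODE_PORT
# Print a patch that switches a Service to type NodePort and pins the node port
# for the entry whose port equals SERVICE_PORT. Hypothetical helper.
nodeport_patch() {
  printf '{"spec":{"type":"NodePort","ports":[{"port":%s,"nodePort":%s}]}}\n' "$1" "$2"
}

# Examples with the ports used in this post (need a reachable cluster):
#   kubectl patch service grafana -n monitoring -p "$(nodeport_patch 3000 30100)"
#   kubectl patch service prometheus-k8s -n monitoring -p "$(nodeport_patch 9090 30200)"
#   kubectl patch service alertmanager-main -n monitoring -p "$(nodeport_patch 9093 30300)"
```

A patch changes only the live objects. The YAML files in $HOME/manifests keep their original contents, so editing the files remains the better choice when the manifests are the source of truth.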