Deploying a Rook + Ceph storage system on Kubernetes

Introduction to Rook

Rook website: https://rook.io

Persistent storage for containers

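Persistent storage is the main way to preserve a container's state. A storage plugin mounts a remote volume into the container, backed by the network or some other mechanism. Files created inside the container are therefore stored on a remote storage server, or distributed across multiple nodes, and have no tie to the current host. As a result, a new container started on any other host can request the same persistent volume and access the data stored in it.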

Because Kubernetes is loosely coupled by design, most storage projects, such as Ceph, GlusterFS and NFS, can provide persistent storage for Kubernetes.

The Ceph distributed storage system

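Ceph is a highly scalable distributed storage solution that provides object, file and block storage. Each storage node has the file system in which Ceph stores objects, plus a Ceph OSD (Object Storage Daemon) process. A Ceph cluster also runs Ceph MON (monitor) daemons, which keep the cluster highly available.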

Rook

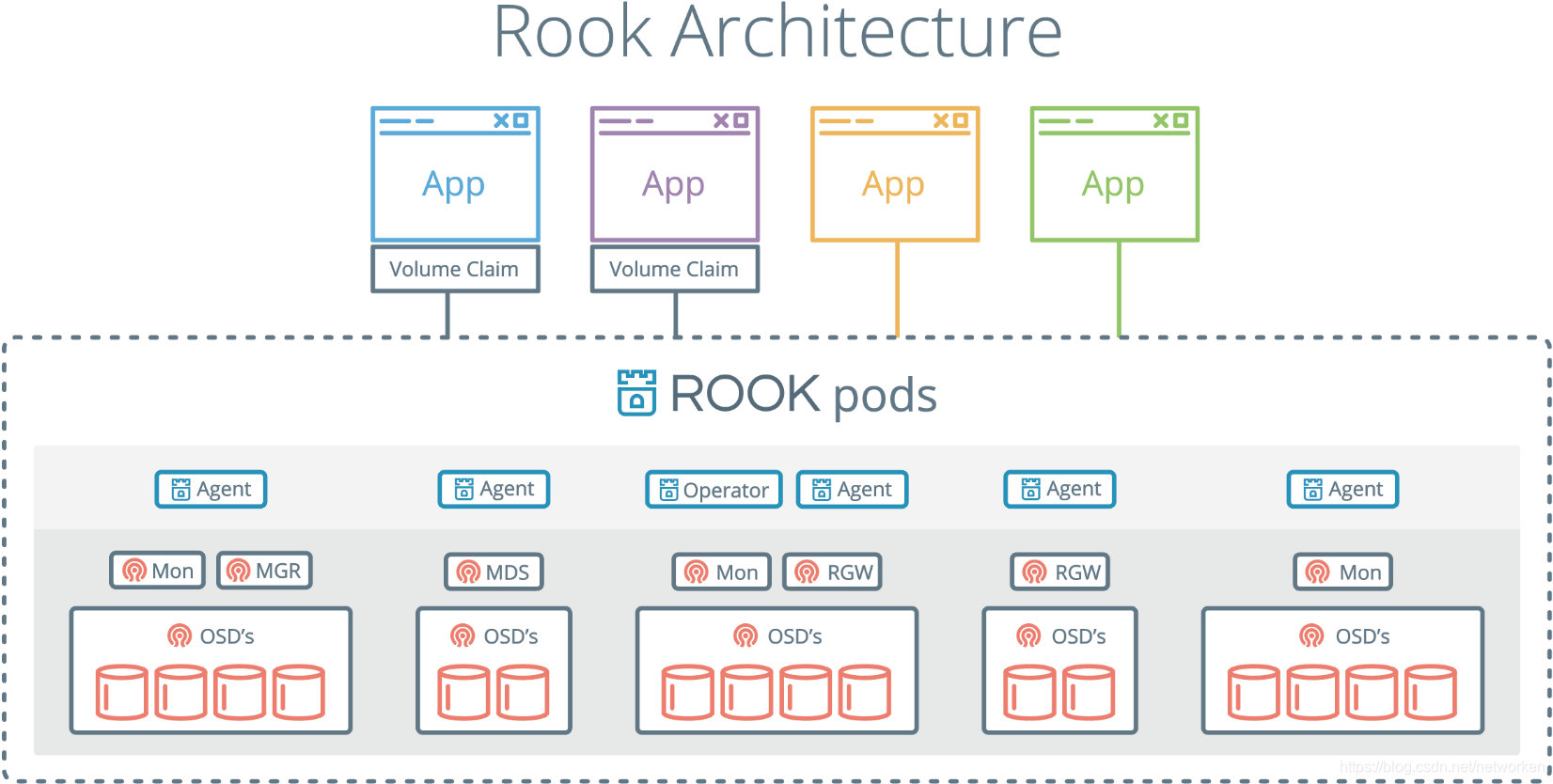

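Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework and support that let a variety of storage solutions integrate natively with cloud-native environments.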

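Rook turns storage software into self-managing, self-scaling and self-healing storage services. It does this by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring and resource management.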

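Rook carries out these tasks with the facilities of the underlying cloud-native container management, scheduling and orchestration platform.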

Rook currently supports Ceph, NFS, Minio Object Store and CockroachDB.

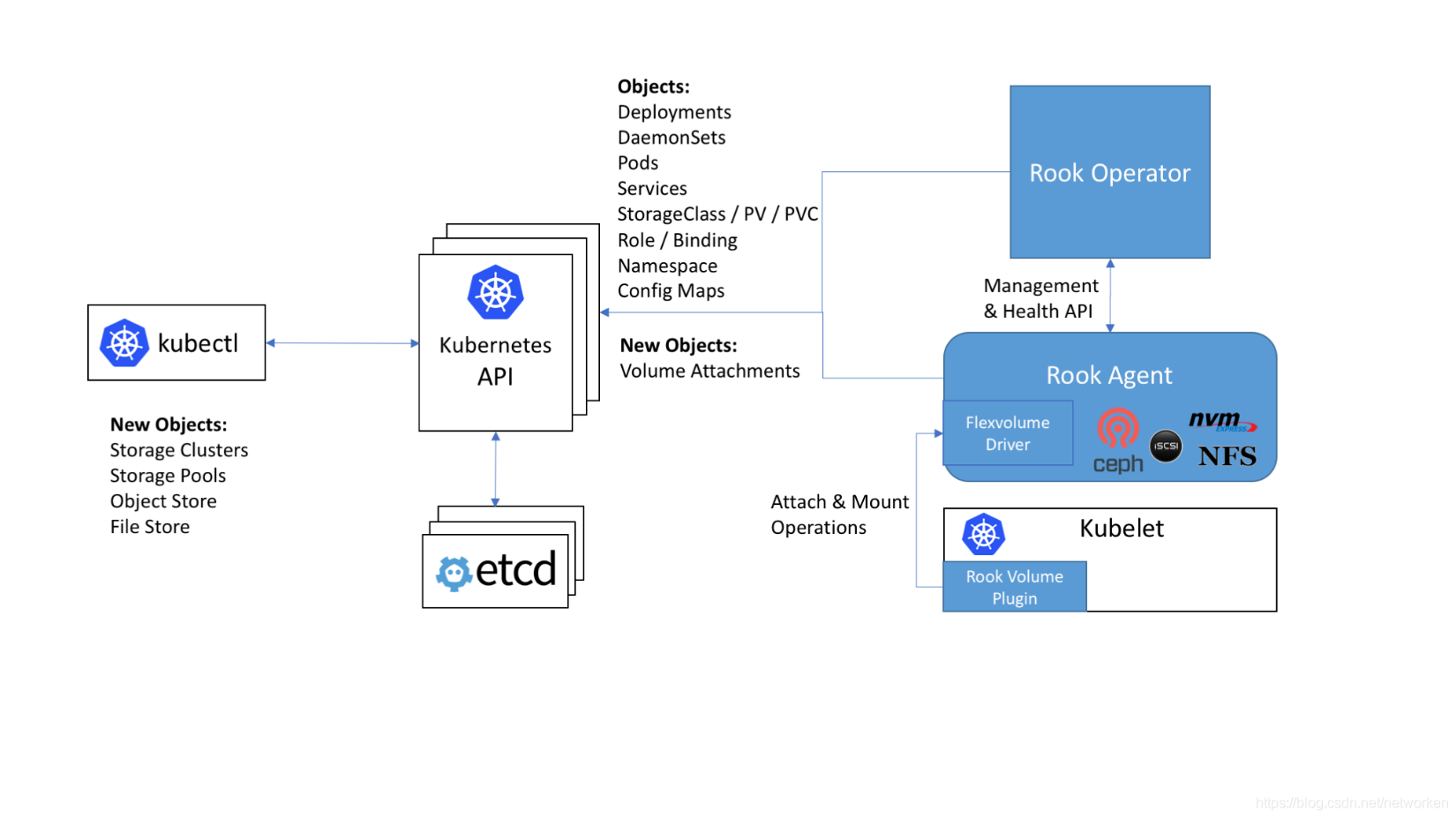

Rook uses Kubernetes primitives to run the Ceph storage system on Kubernetes. The diagram illustrates how Rook integrates Ceph with Kubernetes.

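With Rook running in the Kubernetes cluster, Kubernetes applications can mount block devices and file systems managed by Rook, or use the S3/Swift API for object storage. The Rook operator configures the storage components automatically and monitors the cluster so that the storage stays available and healthy.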

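The Rook operator is a simple container that has everything needed to bootstrap and monitor the storage cluster. It starts and monitors the Ceph monitor pods and the OSD daemons, which provide basic RADOS storage. It also manages the CRDs for pools, object stores (S3/Swift) and file systems by initializing the pods and other artifacts that those services need.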

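The operator monitors the storage daemons to keep the cluster healthy. Ceph mons are started or failed over when necessary, and other adjustments are made as the cluster grows or shrinks. The operator also watches for desired-state changes requested through the API service and applies them.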

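The Rook operator also creates the Rook agents, which are pods deployed on every Kubernetes node. Each agent configures a Flexvolume plugin that integrates with the Kubernetes volume controller. The agent handles all the storage operations required on its node, such as attaching network storage devices, mounting volumes and formatting file systems.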

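The rook container includes all the Ceph daemons and tools needed to manage and store data, and the data path is unchanged. Rook does not try to maintain full fidelity with Ceph. Many Ceph concepts, such as placement groups and CRUSH maps, are hidden so you don't have to worry about them. Instead, Rook gives administrators a simplified experience built around physical resources, pools, volumes, file systems and buckets. Advanced configuration can still be applied with the Ceph tools when needed.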

Rook is implemented in Go. Ceph is implemented in C++, with a highly optimized data path. We believe this combination offers the best of both worlds.

Preparing the environment

Rook project: https://github.com/rook/rook

Rook official documentation: https://rook.github.io/docs/rook/v0.9/ceph-quickstart.html

Preparing the Kubernetes cluster

The setup is a three-node Kubernetes 1.13.1 cluster deployed with kubeadm, with one master node and two worker nodes. For the cluster deployment, see:

https://blog.csdn.net/networken/article/details/84991940

Cluster nodes:

192.168.92.56 k8s-master
192.168.92.57 k8s-node1
192.168.92.58 k8s-node2

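Ceph's high-availability requirements call for at least three available nodes in the cluster. Here the master node has been configured to allow pods to run on it.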

How Rook uses storage

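By default, Rook uses all resources on all nodes, and the Rook operator automatically starts OSDs on every node. Rook watches for and discovers available devices using the following criteria: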

- The device has no partitions

- The device has no formatted file system

Rook will not use devices that do not meet these criteria. You can also edit the configuration file to specify which nodes or devices are used.
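You can check the two discovery criteria by hand before deploying. The function below is a minimal sketch and not Rook's actual discovery logic. The name candidate_disks is hypothetical. It assumes the raw output of util-linux lsblk -rn -o NAME,TYPE,PKNAME,FSTYPE, where awk's field splitting collapses the empty columns. As a result, field 3 is the filesystem type for a disk and the parent disk for a partition.

```shell
#!/usr/bin/env bash
# candidate_disks (hypothetical helper): print whole disks that have no
# partitions and no filesystem, i.e. the two criteria listed above.
# Simplified sketch, NOT Rook's discovery code.
# Input (stdin): output of  lsblk -rn -o NAME,TYPE,PKNAME,FSTYPE
# A disk has an empty PKNAME, so after awk collapses the blank columns its
# field 3 is FSTYPE; for a partition, field 3 is the parent disk name.
candidate_disks() {
  awk '
    $2 == "disk" { disks[$1] = 1; if ($3 != "") hasfs[$1] = 1 }
    $2 == "part" { parted[$3] = 1 }
    END { for (d in disks) if (!(d in hasfs) && !(d in parted)) print d }
  ' | sort
}
```

On a node, run lsblk -rn -o NAME,TYPE,PKNAME,FSTYPE | candidate_disks. After the new disk has been added, sdb should appear in the list.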

Adding a new disk

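Add a new 50 GB disk, /dev/sdb, to every node. It serves as the OSD disk that provides storage capacity. After adding it, rescan the disks so the host recognizes it: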

# Scan every SCSI host bus so newly attached disks are detected (run as root)
for host in /sys/class/scsi_host/*; do echo "- - -" > "$host/scan"; done
# Rescan the existing SCSI devices
for dev in /sys/class/scsi_device/*; do echo 1 > "$dev/device/rescan"; done
# List block devices; if sdb appears, the disk was added successfully
lsblk

Unless otherwise noted, all of the following steps are performed on the master node.

Deploying the Rook Operator

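Clone the Rook GitHub repository locally. This walkthrough uses the v0.9-era examples, and the repository layout has changed on newer branches, so you may need to check out the release-0.9 branch to find the same files: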

git clone https://github.com/rook/rook.git

cd rook/cluster/examples/kubernetes/ceph/

Apply the YAML file to deploy the Rook system components:

$ kubectl apply -f operator.yaml
namespace/rook-ceph-system created
customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/volumes.rook.io created
clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
role.rbac.authorization.k8s.io/rook-ceph-system created
clusterrole.rbac.authorization.k8s.io/rook-ceph-global created
clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
serviceaccount/rook-ceph-system created
rolebinding.rbac.authorization.k8s.io/rook-ceph-system created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created
deployment.apps/rook-ceph-operator created

As shown above, this creates the following resources:

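- namespace: rook-ceph-system. All Rook system pods (operator, agent, discover) are created in this namespace

- CRDs: five CRDs in the ceph.rook.io group (cephclusters, cephfilesystems, cephobjectstores, cephobjectstoreusers, cephblockpools), plus volumes.rook.io

- roles and cluster roles (with their bindings): RBAC access control for these resources

- serviceaccount: the ServiceAccount used by the pods that Rook creates

- deployment: rook-ceph-operator, which deploys the Rook Ceph components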

Deploying rook-ceph-operator triggers the creation of the agent and discover pods, which run as DaemonSets across the cluster.

The operator therefore creates two pods on every host in the cluster, rook-discover and rook-ceph-agent:

$ kubectl get pod -n rook-ceph-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
rook-ceph-agent-49w7t 1/1 Running 0 7m48s 192.168.92.57 k8s-node1 <none> <none>
rook-ceph-agent-dpxkq 1/1 Running 0 111s 192.168.92.58 k8s-node2 <none> <none>
rook-ceph-agent-wb6r8 1/1 Running 0 7m48s 192.168.92.56 k8s-master <none> <none>
rook-ceph-operator-85d64cfb99-2c78k 1/1 Running 0 9m3s 10.244.1.2 k8s-node1 <none> <none>
rook-discover-597sk 1/1 Running 0 7m48s 10.244.0.4 k8s-master <none> <none>
rook-discover-7h89z 1/1 Running 0 111s 10.244.2.2 k8s-node2 <none> <none>
rook-discover-hjdjt 1/1 Running 0 7m48s 10.244.1.3 k8s-node1 <none> <none>
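Instead of reading through the table, you can check readiness with a small filter. This is a sketch that assumes kubectl prints its default columns (NAME, READY, STATUS, ...). The name pods_not_ready is hypothetical.

```shell
#!/usr/bin/env bash
# pods_not_ready (hypothetical helper): read `kubectl get pod` output on stdin
# (header line included) and print every pod that is not Running with all of
# its containers ready. Returns 0 only when every listed pod is ready.
pods_not_ready() {
  awk '
    NR == 1 { next }                   # skip the header line
    {
      split($2, r, "/")                # READY column, e.g. "1/1" -> r[1]=1, r[2]=1
      if ($3 != "Running" || r[1] != r[2]) { print $1; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

Usage: kubectl -n rook-ceph-system get pod | pods_not_ready && echo "all pods ready". Alternatively, kubectl -n rook-ceph-system wait --for=condition=Ready pod --all --timeout=300s uses kubectl's built-in wait to block until the pods report Ready.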

Creating the Rook cluster

Once the Rook operator, agent and discover pods are all in the Running state, you can deploy the Rook cluster.

Apply the YAML file:

$ kubectl apply -f cluster.yaml
namespace/rook-ceph created
serviceaccount/rook-ceph-osd created
serviceaccount/rook-ceph-mgr created
role.rbac.authorization.k8s.io/rook-ceph-osd created
role.rbac.authorization.k8s.io/rook-ceph-mgr-system created
role.rbac.authorization.k8s.io/rook-ceph-mgr created
rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
cephcluster.ceph.rook.io/rook-ceph created

As shown above, this creates the following resources:

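- namespace: rook-ceph. All Ceph cluster pods are created in this namespace

- serviceaccount: the ServiceAccounts used by the Ceph cluster pods (rook-ceph-osd, rook-ceph-mgr)

- role & rolebinding: RBAC access control

- cluster: rook-ceph, the CephCluster resource that describes the Ceph cluster to create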

Once the Ceph cluster has been deployed, the following pods are visible. The number of OSDs depends on the number of nodes:

$ kubectl get pod -n rook-ceph -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
rook-ceph-mgr-a-8649f78d9b-hlg7t 1/1 Running 0 3h30m 10.244.2.6 k8s-node2 <none> <none>
rook-ceph-mon-a-7c7df4b5bb-984x8 1/1 Running 0 3h31m 10.244.0.5 k8s-master <none> <none>
rook-ceph-mon-b-7b9bc8b6c4-8trmz 1/1 Running 0 3h31m 10.244.1.4 k8s-node1 <none> <none>
rook-ceph-mon-c-54b5fb5955-5dgr7 1/1 Running 0 3h30m 10.244.2.5 k8s-node2 <none> <none>
rook-ceph-osd-0-b9bb5df49-gt4vs 1/1 Running 0 3h29m 10.244.0.7 k8s-master <none> <none>
rook-ceph-osd-1-9c6dbf797-2dg8p 1/1 Running 0 3h29m 10.244.2.8 k8s-node2 <none> <none>
rook-ceph-osd-2-867ddc447d-xkh7k 1/1 Running 0 3h29m 10.244.1.6 k8s-node1 <none> <none>
rook-ceph-osd-prepare-k8s-master-m8tvr 0/2 Completed 0 3h29m 10.244.0.6 k8s-master <none> <none>
rook-ceph-osd-prepare-k8s-node1-jf7qz 0/2 Completed 1 3h29m 10.244.1.5 k8s-node1 <none> <none>
rook-ceph-osd-prepare-k8s-node2-tcqdl 0/2 Completed 0 3h29m 10.244.2.7 k8s-node2 <none> <none>

The deployed Ceph cluster consists of:

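- Ceph Monitors: three ceph-mon daemons by default, configurable in cluster.yaml

- Ceph Mgr: one by default, configurable in cluster.yaml

- Ceph OSDs: started according to the configuration in cluster.yaml, by default on all available nodes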

Each of these Ceph components runs as a Kubernetes Deployment:

$ kubectl -n rook-ceph get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
rook-ceph-mgr-a 1/1 1 1 5h34m
rook-ceph-mon-a 1/1 1 1 5h36m
rook-ceph-mon-b 1/1 1 1 5h35m
rook-ceph-mon-c 1/1 1 1 5h35m
rook-ceph-osd-0 1/1 1 1 5h34m
rook-ceph-osd-1 1/1 1 1 5h34m
rook-ceph-osd-2 1/1 1 1 5h34m
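To confirm that the component counts match what cluster.yaml requested, you can tally the Deployments by type. This is a sketch that assumes the rook-ceph-<type>-<id> naming shown in the listing. The helper name count_components is hypothetical.

```shell
#!/usr/bin/env bash
# count_components (hypothetical helper): read `kubectl get deployment` output
# on stdin and print how many mon, mgr and osd Deployments exist.
count_components() {
  awk '
    NR > 1 && $1 ~ /^rook-ceph-(mon|mgr|osd)-/ {
      split($1, p, "-")               # rook-ceph-mon-a -> p[3] = "mon"
      n[p[3]]++
    }
    END { printf "mon=%d mgr=%d osd=%d\n", n["mon"], n["mgr"], n["osd"] }
  '
}
```

For the cluster in this article, kubectl -n rook-ceph get deployment | count_components reports three mons, one mgr and three OSDs. Other Deployments, such as a toolbox, are ignored by the name filter.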

Deleting the Ceph cluster

To delete the Ceph cluster you created, run:

$ kubectl delete -f cluster.yaml

After the Ceph cluster is deleted, its configuration data is left behind in /var/lib/rook/ on every node that ran Ceph components.

Before deploying a new Ceph cluster, delete this leftover data first. Otherwise the monitors will fail to start:

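$ cat clean-rook-dir.sh
#!/usr/bin/env bash
# Remove leftover Rook/Ceph state from every node (needs SSH access to each host)
hosts=(
  k8s-master
  k8s-node1
  k8s-node2
)
for host in "${hosts[@]}"; do
  ssh "$host" "rm -rf /var/lib/rook/*"
done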

Configuring the Ceph dashboard

The Ceph dashboard is enabled by default in cluster.yaml. Check the dashboard service:

$ kubectl get service -n rook-ceph
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
rook-ceph-mgr ClusterIP 10.107.77.188 <none> 9283/TCP 3h33m
rook-ceph-mgr-dashboard ClusterIP 10.96.135.98 <none> 8443/TCP 3h33m
rook-ceph-mon-a ClusterIP 10.105.153.93 <none> 6790/TCP 3h35m
rook-ceph-mon-b ClusterIP 10.105.107.254 <none> 6790/TCP 3h34m
rook-ceph-mon-c ClusterIP 10.104.1.238 <none> 6790/TCP 3h34m

The rook-ceph-mgr-dashboard service listens on port 8443. Create a NodePort service so the dashboard can be reached from outside the cluster:

kubectl apply -f rook/cluster/examples/kubernetes/ceph/dashboard-external-https.yaml

Check the port exposed by the NodePort service. Here it is 32483:

$ kubectl get service -n rook-ceph | grep dashboard
rook-ceph-mgr-dashboard ClusterIP 10.96.135.98 <none> 8443/TCP 3h37m
rook-ceph-mgr-dashboard-external-https NodePort 10.97.181.103 <none> 8443:32483/TCP 3h29m
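If you script against the dashboard, you can extract the NodePort from the PORT(S) column. This is a sketch that assumes the default kubectl get service column layout. The name dashboard_nodeport is hypothetical.

```shell
#!/usr/bin/env bash
# dashboard_nodeport (hypothetical helper): read `kubectl get service` output
# on stdin and print the NodePort of the external dashboard service.
dashboard_nodeport() {
  awk '$1 == "rook-ceph-mgr-dashboard-external-https" {
         split($5, a, "[:/]")   # PORT(S) "8443:32483/TCP" -> 8443, 32483, TCP
         print a[2]
       }'
}
```

Here, kubectl get service -n rook-ceph | dashboard_nodeport prints 32483. Querying the field directly avoids depending on the table layout: kubectl -n rook-ceph get service rook-ceph-mgr-dashboard-external-https -o jsonpath='{.spec.ports[0].nodePort}'.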

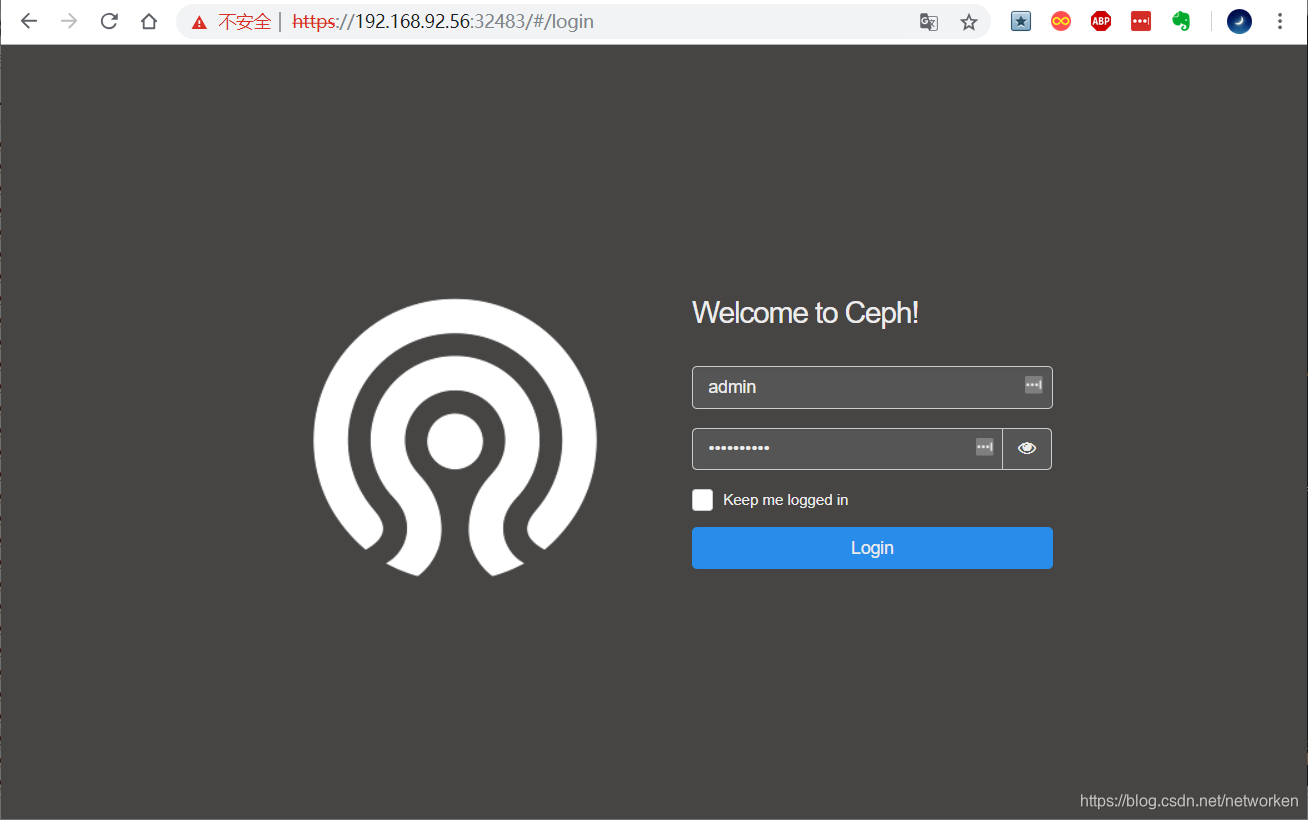

Retrieving the dashboard login username and password

$ MGR_POD=$(kubectl get pod -n rook-ceph | grep mgr | awk '{print $1}')
$ kubectl -n rook-ceph logs "$MGR_POD" | grep password
2019-01-03 05:44:00.585 7fced4782700 0 log_channel(audit) log [DBG] : from='client.4151 10.244.1.2:0/3446600469' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "8v2AbqHDj6", "target": ["mgr", ""], "format": "json"}]: dispatch

Look for the username and password fields. Here they are admin and 8v2AbqHDj6.
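The grep prints the whole audit line, but a sed filter can reduce it to just the password. This is a sketch that assumes the mgr log format shown above. The name extract_dashboard_password is hypothetical. If the credentials were logged more than once, the last value is printed.

```shell
#!/usr/bin/env bash
# extract_dashboard_password (hypothetical helper): read mgr log lines on stdin
# and print the value of the last "password" field found.
extract_dashboard_password() {
  sed -n 's/.*"password": "\([^"]*\)".*/\1/p' | tail -n 1
}
```

Usage: kubectl -n rook-ceph logs "$MGR_POD" | extract_dashboard_password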

Open a browser and enter the IP of any node followed by the NodePort. Here the master node's IP is used:

https://192.168.92.56:32483

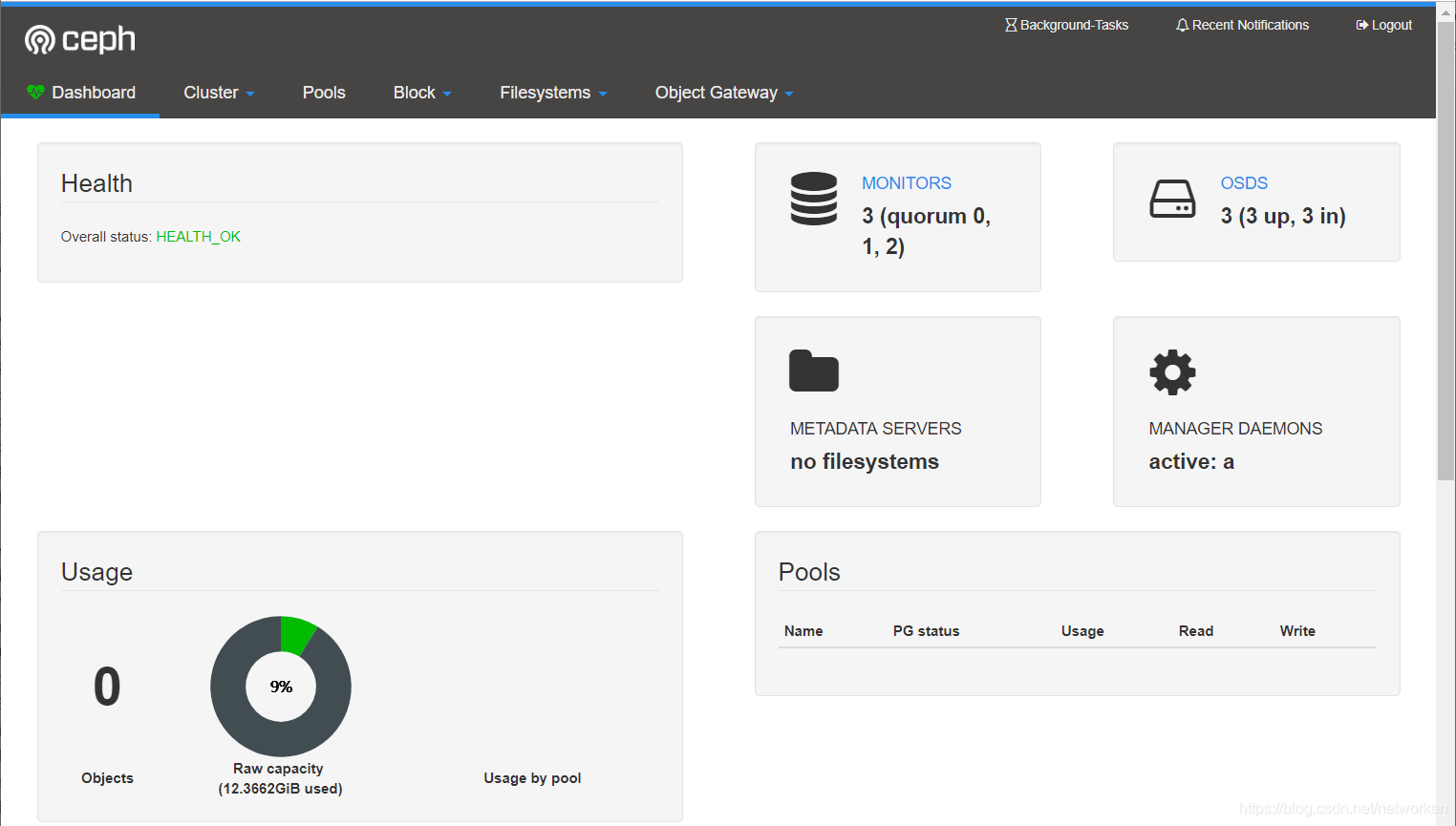

After logging in, the interface looks like this:

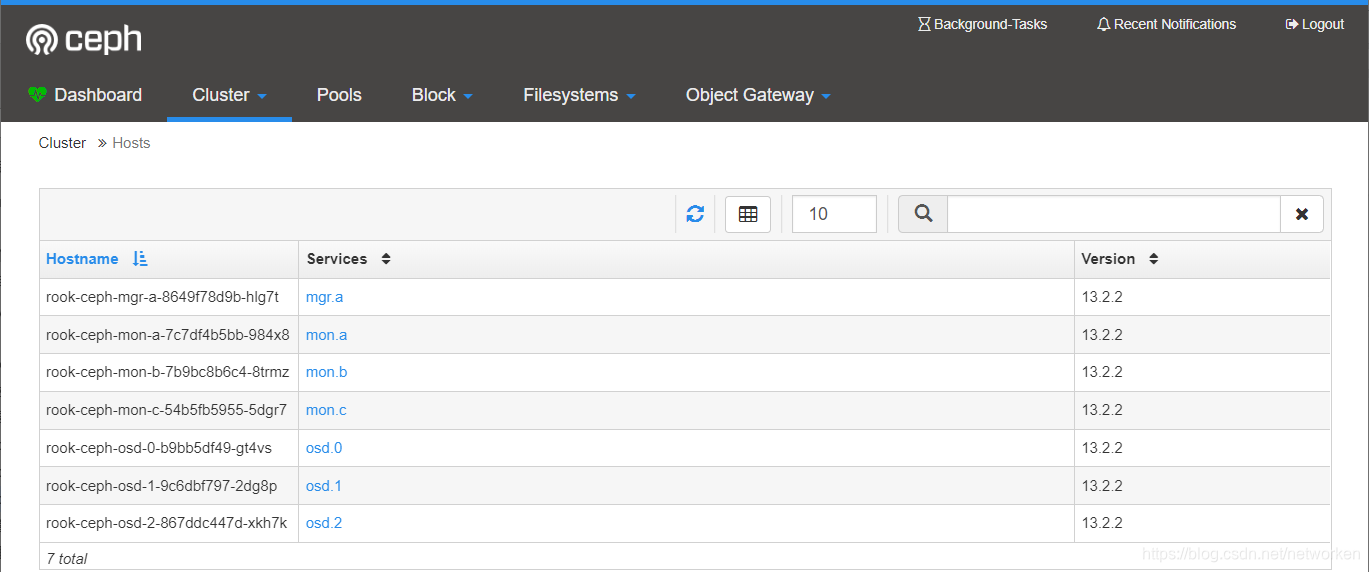

Viewing host status:

One mgr, three mons and three OSDs are running.

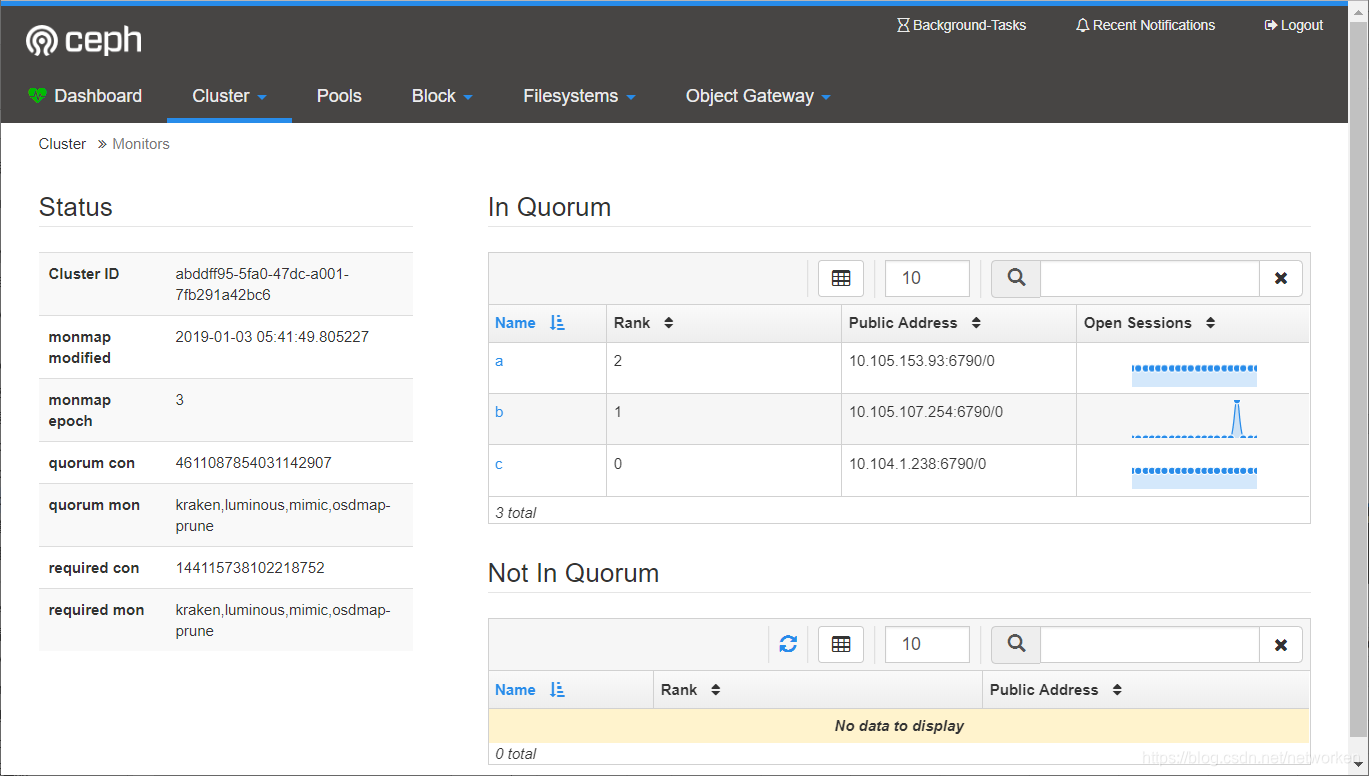

Viewing monitor status:

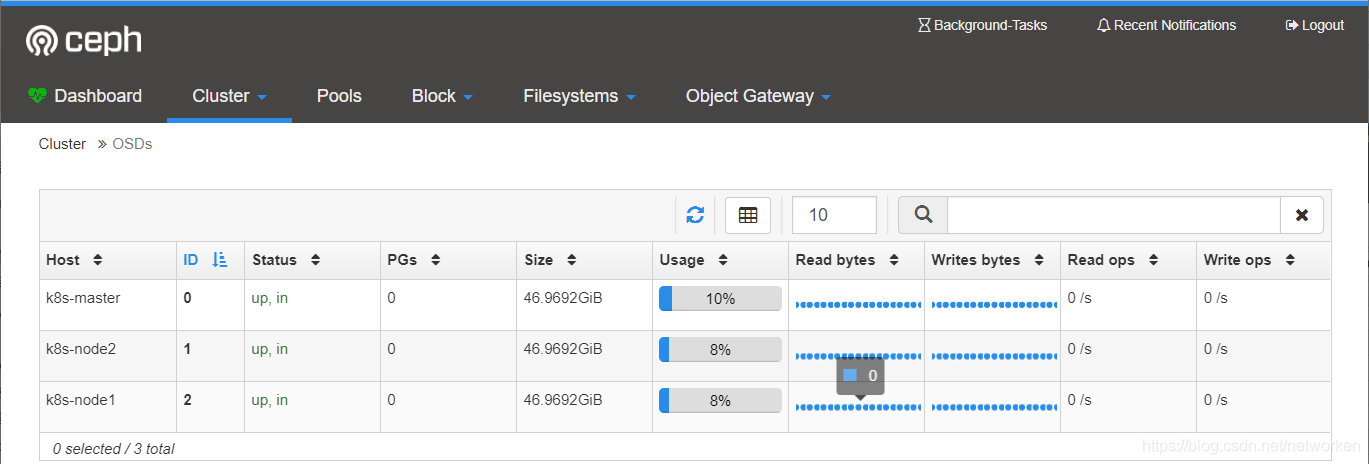

Viewing OSD status:

All three OSDs are healthy, each with 50 GB of capacity.

Deploying the Ceph toolbox

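A Ceph cluster started this way has Ceph authentication enabled by default. Even if you log into a pod that runs a Ceph component, you cannot query the cluster status or run CLI commands there. For that, deploy the Ceph toolbox: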

kubectl apply -f rook/cluster/examples/kubernetes/ceph/toolbox.yaml

Once it is deployed, the pod looks like this:

$ kubectl -n rook-ceph get pods -o wide | grep ceph-tools
rook-ceph-tools-76c7d559b6-8w7bk 1/1 Running 0 11s 192.168.92.58 k8s-node2 <none> <none>

You can then log into that pod and run Ceph CLI commands:

$ kubectl -n rook-ceph exec -it rook-ceph-tools-76c7d559b6-8w7bk -- bash
bash: warning: setlocale: LC_CTYPE: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_COLLATE: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_MESSAGES: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_NUMERIC: cannot change locale (en_US.UTF-8): No such file or directory
bash: warning: setlocale: LC_TIME: cannot change locale (en_US.UTF-8): No such file or directory
[root@k8s-node2 /]#
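The toolbox pod name ends in a generated suffix that changes whenever the pod is recreated. This sketch looks the name up from kubectl get pods output instead of hard-coding it. The helper tools_pod is hypothetical.

```shell
#!/usr/bin/env bash
# tools_pod (hypothetical helper): read `kubectl get pods` output on stdin and
# print the name of the first Ceph toolbox pod.
tools_pod() {
  awk '$1 ~ /^rook-ceph-tools-/ { print $1; exit }'
}
```

Usage: kubectl -n rook-ceph exec -it "$(kubectl -n rook-ceph get pods | tools_pod)" -- bash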

Check the Ceph cluster status:

[root@k8s-node2 /]# ceph status
  cluster:
    id: abddff95-5fa0-47dc-a001-7fb291a42bc6
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum c,b,a
    mgr: a(active)
    osd: 3 osds: 3 up, 3 in

  data:
    pools: 1 pools, 100 pgs
    objects: 0 objects, 0 B
    usage: 12 GiB used, 129 GiB / 141 GiB avail
    pgs: 100 active+clean
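For automated checks, you can reduce the same ceph status output to a pass/fail result: health must be HEALTH_OK and every OSD must be up and in. This is a sketch that assumes the output format shown above. Newer Ceph releases may append text such as "(since 2h)" to the osd line, and this parser does not handle that. The name ceph_healthy is hypothetical.

```shell
#!/usr/bin/env bash
# ceph_healthy (hypothetical helper): read `ceph status` output on stdin and
# succeed only if health is HEALTH_OK and every OSD is both up and in.
# Expects the osd line in the form "osd: 3 osds: 3 up, 3 in".
ceph_healthy() {
  awk '
    $1 == "health:" { health = $2 }
    $1 == "osd:"    { total = $2; up = $4; inside = $6 }
    END {
      ok = (health == "HEALTH_OK" && total > 0 && up == total && inside == total)
      exit (ok ? 0 : 1)
    }
  '
}
```

From the master node: kubectl -n rook-ceph exec rook-ceph-tools-76c7d559b6-8w7bk -- ceph status | ceph_healthy && echo healthy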

Inspect the Ceph configuration files:

[root@k8s-node2 /]# cd /etc/ceph/
[root@k8s-node2 ceph]# ll
total 12
-rw-r--r-- 1 root root 121 Jan 3 11:28 ceph.conf
-rw-r--r-- 1 root root 62 Jan 3 11:28 keyring
-rw-r--r-- 1 root root 92 Sep 24 18:15 rbdmap
[root@k8s-node2 ceph]# cat ceph.conf
[global]
mon_host = 10.104.1.238:6790,10.105.153.93:6790,10.105.107.254:6790

[client.admin]
keyring = /etc/ceph/keyring
[root@k8s-node2 ceph]# cat keyring
[client.admin]
key = AQBjoC1cXKJ7KBAA3ZnhWyxvyGa8+fnLFK7ykw==
[root@k8s-node2 ceph]# cat rbdmap
# RbdDevice Parameters
#poolname/imagename id=client,keyring=/etc/ceph/ceph.client.keyring

Providing RBD service with Rook

Rook can provide the following three types of storage:

- Block: Create block storage to be consumed by a pod

- Object: Create an object store that is accessible inside or outside the Kubernetes cluster

- Shared File System: Create a file system to be shared across multiple pods

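Before provisioning block storage, you need to create a StorageClass and a storage pool. Kubernetes needs both resources to interact with Rook and allocate persistent volumes (PVs).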

Providing RBD block devices in a Kubernetes cluster takes the following steps:

- Create the rbd-provisioner pod

- Create a StorageClass for RBD

- Create a PVC that uses the RBD StorageClass

- Create a pod that uses the RBD PVC

After Rook creates the Ceph cluster, Rook itself provides the rbd-provisioner service, so there is no need to deploy a separate provisioner.

Note: the relevant code is in pkg/operator/ceph/provisioner/provisioner.go

Creating the pool and StorageClass

The default contents of storageclass.yaml:

$ cat rook/cluster/examples/kubernetes/ceph/storageclass.yaml
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  replicated:
    size: 1
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: ceph.rook.io/block
parameters:
  blockPool: replicapool
  # Specify the namespace of the rook cluster from which to create volumes.
  # If not specified, it will use `rook` as the default namespace of the cluster.
  # This is also the namespace where the cluster will be
  clusterNamespace: rook-ceph
  # Specify the filesystem type of the volume. If not specified, it will use `ext4`.
  fstype: xfs
  # (Optional) Specify an existing Ceph user that will be used for mounting storage with this StorageClass.
  #mountUser: user1
  # (Optional) Specify an existing Kubernetes secret name containing just one key holding the Ceph user secret.
  # The secret must exist in each namespace(s) where the storage will be consumed.
  #mountSecret: ceph-user1-secret

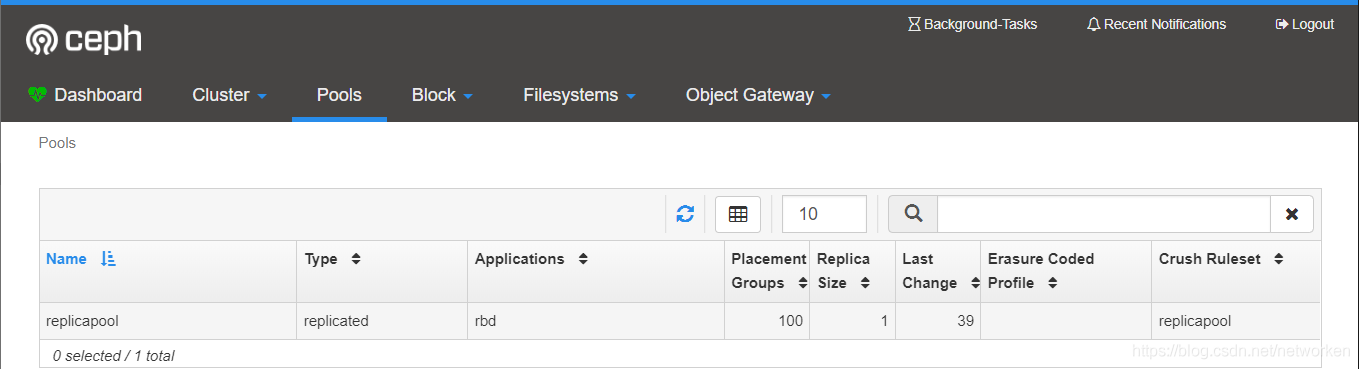

The configuration file defines a storage pool named replicapool and a StorageClass named rook-ceph-block.
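The default pool uses replicated size: 1, so each object is stored exactly once, and losing a single OSD loses the data on it. With three OSDs on three different nodes, a larger size such as 3 provides redundancy. This sketch generates the pool manifest with a chosen replica count. The helper block_pool_manifest is hypothetical, and its fields mirror storageclass.yaml.

```shell
#!/usr/bin/env bash
# block_pool_manifest (hypothetical helper): print a CephBlockPool manifest
# with the given name and replica count, mirroring storageclass.yaml.
# Usage: block_pool_manifest <name> <replica-count>
block_pool_manifest() {
  local name=$1 size=$2
  cat <<EOF
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: ${name}
  namespace: rook-ceph
spec:
  replicated:
    size: ${size}
EOF
}
```

Usage: block_pool_manifest replicapool 3 | kubectl apply -f -. Whether a running pool picks up a changed size depends on the Rook version, so it is safest to choose the size before the pool holds data.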

Apply the YAML file:

kubectl apply -f rook/cluster/examples/kubernetes/ceph/storageclass.yaml

Check the created StorageClass:

$ kubectl get storageclass
NAME PROVISIONER AGE
rook-ceph-block ceph.rook.io/block 171m

Log into the Ceph dashboard to view the created storage pool.

Using the storage

Following the official WordPress example, create a classic WordPress and MySQL application that consumes Rook block storage. Both applications use block volumes provisioned by Rook.

Look at the YAML configuration, taking wordpress.yaml as an example and focusing on the PVC definition and the volume mount:

$ cat rook/cluster/examples/kubernetes/wordpress.yaml
......
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: rook-ceph-block
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
......
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wp-pv-claim

The YAML file defines a PVC named wp-pv-claim that sets storageClassName to rook-ceph-block and requests 20Gi of storage. The last part defines a volume named wordpress-persistent-storage whose claimName is the PVC's name, and mounts that volume at /var/www/html in the pod.
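You can reuse the wp-pv-claim pattern for other workloads. This sketch emits an equivalent PVC for any name and size, with the StorageClass defaulting to rook-ceph-block. The helper pvc_manifest and the example claim name test-claim are hypothetical.

```shell
#!/usr/bin/env bash
# pvc_manifest (hypothetical helper): print a ReadWriteOnce PVC manifest.
# Usage: pvc_manifest <claim-name> <size> [storage-class]
# The storage class defaults to rook-ceph-block, as in wordpress.yaml.
pvc_manifest() {
  local name=$1 size=$2 sc=${3:-rook-ceph-block}
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${sc}
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: pvc_manifest test-claim 1Gi | kubectl apply -f -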

Start MySQL and WordPress:

kubectl apply -f rook/cluster/examples/kubernetes/mysql.yaml

kubectl apply -f rook/cluster/examples/kubernetes/wordpress.yaml

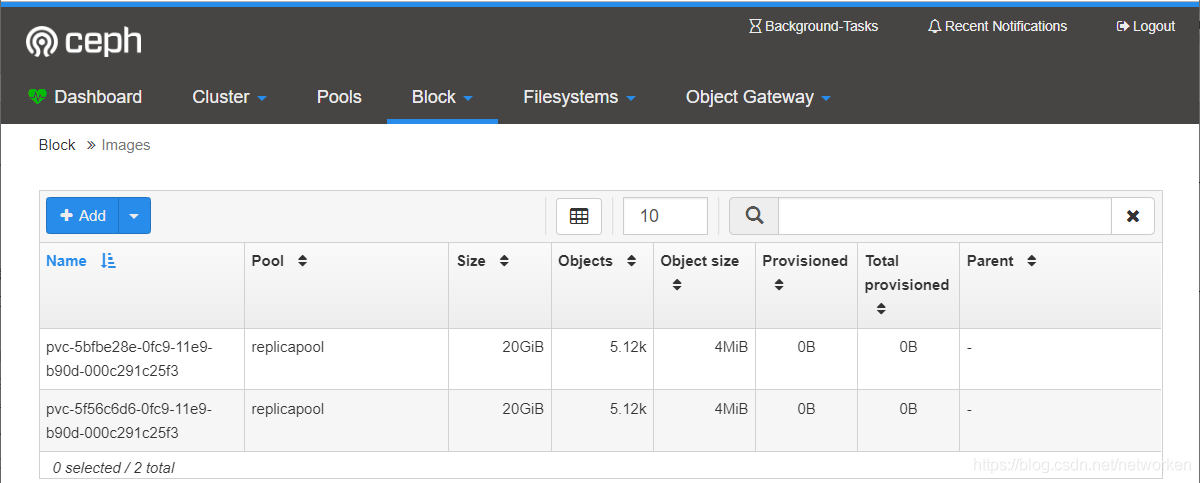

Each application creates a block storage volume and mounts it into its pod. Check the PVCs and PVs:

$ kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
mysql-pv-claim Bound pvc-5bfbe28e-0fc9-11e9-b90d-000c291c25f3 20Gi RWO rook-ceph-block 32m
wp-pv-claim Bound pvc-5f56c6d6-0fc9-11e9-b90d-000c291c25f3 20Gi RWO rook-ceph-block 32m
$ kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-5bfbe28e-0fc9-11e9-b90d-000c291c25f3 20Gi RWO Delete Bound default/mysql-pv-claim rook-ceph-block 32m
pvc-5f56c6d6-0fc9-11e9-b90d-000c291c25f3 20Gi RWO Delete Bound default/wp-pv-claim rook-ceph-block 32m

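Note: the PVs here are created automatically. When you submit a PVC that specifies a StorageClass, Kubernetes creates a matching PV from that StorageClass. This is the Dynamic Provisioning mechanism. PVs can be provisioned either statically or dynamically.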
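With this provisioner, the RBD image in replicapool has the same name as the PV, as the rbd info check on the Ceph side shows. This sketch maps a claim to its volume name from kubectl get pvc output, so you can inspect the image without copying the UUID by hand. The helper rbd_image_for_claim is hypothetical.

```shell
#!/usr/bin/env bash
# rbd_image_for_claim (hypothetical helper): read `kubectl get pvc` output on
# stdin and print the VOLUME (PV name) bound to the claim given as $1.
# In this setup the PV name is also the RBD image name in the replicapool pool.
rbd_image_for_claim() {
  awk -v c="$1" 'NR > 1 && $1 == c && $2 == "Bound" { print $3 }'
}
```

Usage: IMAGE=$(kubectl get pvc | rbd_image_for_claim mysql-pv-claim), then kubectl -n rook-ceph exec rook-ceph-tools-76c7d559b6-8w7bk -- rbd info -p replicapool "$IMAGE".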

Check from the Ceph cluster side:

$ kubectl -n rook-ceph exec -it rook-ceph-tools-76c7d559b6-8w7bk -- bash
......
[root@k8s-node2 /]# rbd info -p replicapool pvc-5bfbe28e-0fc9-11e9-b90d-000c291c25f3
rbd image 'pvc-5bfbe28e-0fc9-11e9-b90d-000c291c25f3':
	size 20 GiB in 5120 objects
	order 22 (4 MiB objects)
	id: 88156b8b4567
	block_name_prefix: rbd_data.88156b8b4567
	format: 2
	features: layering
	op_features:
	flags:
	create_timestamp: Fri Jan 4 02:35:12 2019
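The size line follows from the order. Order 22 means each RADOS object is 2^22 bytes = 4 MiB, so a 20 GiB image maps onto 20 × 1024 / 4 = 5120 objects. RBD images are thin-provisioned, so this count is an upper bound: objects are only created when data is written to them. A small sketch of the arithmetic (the helper names are hypothetical):

```shell
#!/usr/bin/env bash
# rbd_object_size_mib: object size in MiB for a given RBD order (object = 2^order bytes).
rbd_object_size_mib() {
  local order=$1
  echo $(( (1 << order) / (1 << 20) ))
}
# rbd_object_count: maximum number of objects backing an image of <size_gib> GiB.
rbd_object_count() {
  local size_gib=$1 order=$2
  echo $(( size_gib * (1 << 30) / (1 << order) ))
}
```

For the image above, rbd_object_size_mib 22 prints 4 and rbd_object_count 20 22 prints 5120, matching "size 20 GiB in 5120 objects".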

Log into the pod to check the RBD device:

$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
wordpress-7b6c4c79bb-t5pst 1/1 Running 0 135m 10.244.1.16 k8s-node1 <none> <none>
wordpress-mysql-6887bf844f-9pmg8 1/1 Running 0 135m 10.244.2.14 k8s-node2 <none> <none>
$ kubectl exec -it wordpress-7b6c4c79bb-t5pst -- bash
root@wordpress-7b6c4c79bb-t5pst:/var/www/html# mount | grep rbd
/dev/rbd0 on /var/www/html type xfs (rw,relatime,attr2,inode64,sunit=8192,swidth=8192,noquota)
root@wordpress-7b6c4c79bb-t5pst:/var/www/html# df -h
Filesystem Size Used Avail Use% Mounted on
......
/dev/rbd0 20G 59M 20G 1% /var/www/html
......
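You can script the mount check to confirm that a given path is backed by an RBD device. This is a sketch that assumes the Linux mount output format shown above. The helper rbd_mounted_at is hypothetical.

```shell
#!/usr/bin/env bash
# rbd_mounted_at (hypothetical helper): read `mount` output on stdin and
# succeed only if the path given as $1 is mounted from an RBD device (/dev/rbdN).
rbd_mounted_at() {
  awk -v p="$1" '
    $1 ~ /^\/dev\/rbd[0-9]+$/ && $2 == "on" && $3 == p { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

From the master node: kubectl exec wordpress-7b6c4c79bb-t5pst -- mount | rbd_mounted_at /var/www/html && echo "rbd mounted"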

Log into the Ceph dashboard to view the created images:

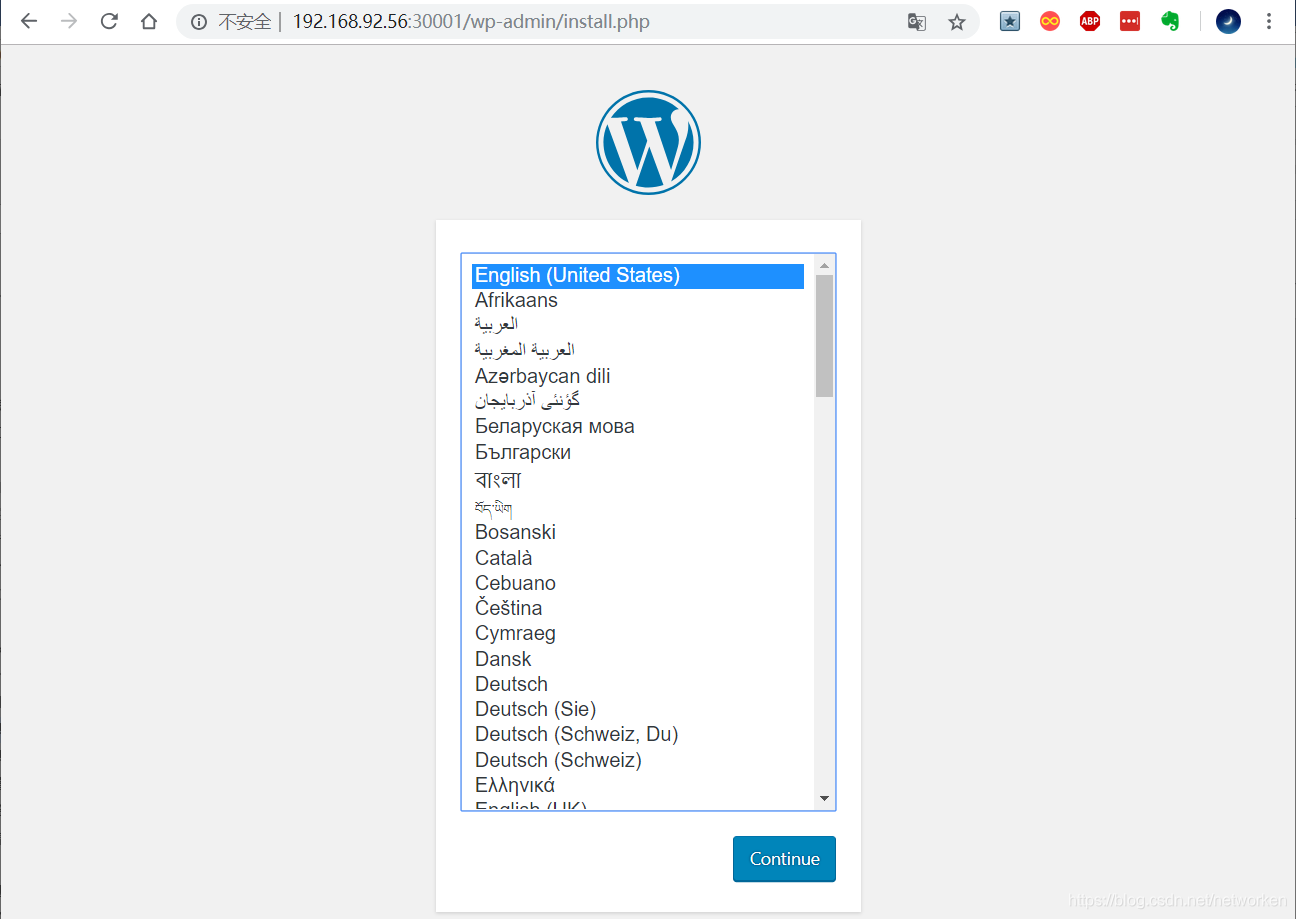

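Once the WordPress and MySQL pods are running, look up the WordPress service. Its EXTERNAL-IP stays <pending> because this cluster has no load balancer. The LoadBalancer service is also exposed on NodePort 30001, though, so you can browse to any node's IP on that port: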

$ kubectl get svc wordpress
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
wordpress LoadBalancer 10.98.178.189 <pending> 80:30001/TCP 136m

Accessing WordPress: