Preprocessing Data for Article Summarization with seq2seq (NLP)

阿新 • Published: 2019-01-05

Data preprocessing:

    import re
    import time

    import nltk
    import numpy as np
    import pandas as pd
    import tensorflow as tf
    from nltk.corpus import stopwords
    from tensorflow.python.layers.core import Dense
    from tensorflow.python.ops.rnn_cell_impl import _zero_state_tensors

    print('TensorFlow Version: {}'.format(tf.__version__))

    nltk.download('stopwords')

Output:

    TensorFlow Version: 1.8.0
    [nltk_data] Downloading package stopwords to
    [nltk_data]     C:\Users\Administrator\AppData\Roaming\nltk_data...
    [nltk_data]   Package stopwords is already up-to-date!

    reviews = pd.read_csv("Reviews.csv")

    # Keep only the first 10,000 rows
    reviews = reviews.iloc[0:10000, :]

    # Remove null values and unneeded features
    reviews = reviews.dropna()
    reviews = reviews.drop(['Id', 'ProductId', 'UserId', 'ProfileName',
                            'HelpfulnessNumerator', 'HelpfulnessDenominator',
                            'Score', 'Time'], axis=1)
    reviews = reviews.reset_index(drop=True)

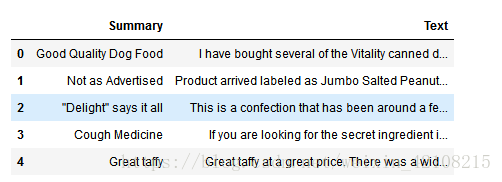

reviews.head()列印結果:

    # Inspecting some of the reviews
    for i in range(2):
        print("Review #", i+1)
        print(reviews.Summary[i])
        print(reviews.Text[i])
        print()

Output:

    Review # 1
    Good Quality Dog Food
    I have bought several of the Vitality canned dog food products and have found them all to be of good quality. The product looks more like a stew than a processed meat and it smells better. My Labrador is finicky and she appreciates this product better than most.

    Review # 2
    Not as Advertised
    Product arrived labeled as Jumbo Salted Peanuts...the peanuts were actually small sized unsalted. Not sure if this was an error or if the vendor intended to represent the product as "Jumbo".

    contractions = {
        "ain't": "am not",
        "aren't": "are not",
        "can't": "cannot",
        "can't've": "cannot have",
        "'cause": "because",
        "could've": "could have",
        "couldn't": "could not",
        "couldn't've": "could not have",
        "didn't": "did not",
        "doesn't": "does not",
        "don't": "do not",
        "hadn't": "had not",
        "hadn't've": "had not have",
        "hasn't": "has not",
        "haven't": "have not",
        "he'd": "he would",
        "he'd've": "he would have",
        "he'll": "he will",
        "he's": "he is",
        "how'd": "how did",
        "how'll": "how will",
        "how's": "how is",
        "i'd": "i would",
        "i'll": "i will",
        "i'm": "i am",
        "i've": "i have",
        "isn't": "is not",
        "it'd": "it would",
        "it'll": "it will",
        "it's": "it is",
        "let's": "let us",
        "ma'am": "madam",
        "mayn't": "may not",
        "might've": "might have",
        "mightn't": "might not",
        "must've": "must have",
        "mustn't": "must not",
        "needn't": "need not",
        "oughtn't": "ought not",
        "shan't": "shall not",
        "sha'n't": "shall not",
        "she'd": "she would",
        "she'll": "she will",
        "she's": "she is",
        "should've": "should have",
        "shouldn't": "should not",
        "that'd": "that would",
        "that's": "that is",
        "there'd": "there had",
        "there's": "there is",
        "they'd": "they would",
        "they'll": "they will",
        "they're": "they are",
        "they've": "they have",
        "wasn't": "was not",
        "we'd": "we would",
        "we'll": "we will",
        "we're": "we are",
        "we've": "we have",
        "weren't": "were not",
        "what'll": "what will",
        "what're": "what are",
        "what's": "what is",
        "what've": "what have",
        "where'd": "where did",
        "where's": "where is",
        "who'll": "who will",
        "who's": "who is",
        "won't": "will not",
        "wouldn't": "would not",
        "you'd": "you would",
        "you'll": "you will",
        "you're": "you are"
    }

    def clean_text(text, remove_stopwords=True):
        '''Remove unwanted characters and stopwords, and format the text
        so that fewer words lack a pretrained embedding.'''

        # Convert words to lower case
        text = text.lower()

        # Replace contractions with their longer forms
        text = text.split()
        new_text = []
        for word in text:
            if word in contractions:
                new_text.append(contractions[word])
            else:
                new_text.append(word)
        text = " ".join(new_text)

        # Format words and remove unwanted characters
        text = re.sub(r'https?:\/\/.*[\r\n]*', '', text, flags=re.MULTILINE)
        text = re.sub(r'\<a href', ' ', text)
        text = re.sub(r'&', '', text)
        text = re.sub(r'[_"\-;%()|+&=*%.,!?:#$@\[\]/]', ' ', text)
        text = re.sub(r'<br />', ' ', text)
        text = re.sub(r'\'', ' ', text)

        # Optionally, remove stop words
        if remove_stopwords:
            text = text.split()
            stops = set(stopwords.words("english"))
            text = [w for w in text if w not in stops]
            text = " ".join(text)

        return text

    # Clean the summaries and texts

    clean_summaries = []
    for summary in reviews.Summary:
        clean_summaries.append(clean_text(summary, remove_stopwords=False))
    print("Summaries are complete.")

    clean_texts = []
    for text in reviews.Text:
        clean_texts.append(clean_text(text))
    print("Texts are complete.")

    # Inspect the cleaned summaries and texts to ensure they have been cleaned well

    for i in range(2):
        print("Clean Review #", i+1)
        print(clean_summaries[i])
        print(clean_texts[i])
        print()

Output:

    Clean Review # 1
    good quality dog food
    bought several vitality canned dog food products found good quality product looks like stew processed meat smells better labrador finicky appreciates product better

    Clean Review # 2
    not as advertised
    product arrived labeled jumbo salted peanuts peanuts actually small sized unsalted sure error vendor intended represent product jumbo
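To make the order of operations in clean_text easier to follow, here is a minimal sketch that runs the same steps (lower-casing, contraction expansion, punctuation stripping, optional stopword removal) on two made-up sentences. The names toy_clean_text, TOY_CONTRACTIONS and TOY_STOPWORDS are hypothetical; the tiny dictionary and stopword set only stand in for the full contractions table and the NLTK list so the snippet runs without any downloads. Unlike clean_text, the sketch always collapses repeated spaces.

```python
import re

# Hypothetical miniature stand-ins for the contractions dict and the NLTK stopword list
TOY_CONTRACTIONS = {"don't": "do not", "it's": "it is"}
TOY_STOPWORDS = {"i", "it", "is", "the", "do", "not", "this"}


def toy_clean_text(text, remove_stopwords=True):
    """Simplified version of the cleaning steps, for illustration only."""
    # 1. Lower-case everything
    text = text.lower()
    # 2. Expand contractions token by token (tokens are split on whitespace)
    words = [TOY_CONTRACTIONS.get(w, w) for w in text.split()]
    text = " ".join(words)
    # 3. Replace punctuation and apostrophes with spaces
    text = re.sub(r'[_"\-;%()|+&=*.,!?:#$@\[\]/]', ' ', text)
    text = re.sub(r"'", ' ', text)
    # 4. Optionally drop stopwords; split/join also collapses repeated spaces
    words = text.split()
    if remove_stopwords:
        words = [w for w in words if w not in TOY_STOPWORDS]
    return " ".join(words)


summary = toy_clean_text("It's GREAT!", remove_stopwords=False)
review = toy_clean_text("I don't like the taste, it's too sweet.")
print(summary)
print(review)
```

Because the contraction lookup works on whitespace-separated tokens, a contraction that is immediately followed by punctuation (for example "don't,") is not expanded; its apostrophe is simply replaced by a space in the next step.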

    def count_words(count_dict, text):
        for sentence in text:
            for word in sentence.split():  # count how many times each word appears in the text
                if word not in count_dict:
                    count_dict[word] = 1
                else:
                    count_dict[word] += 1

    # Find the number of times each word was used and the size of the vocabulary
    word_counts = {}

    count_words(word_counts, clean_summaries)
    count_words(word_counts, clean_texts)

    print("Size of Vocabulary:", len(word_counts))

    # Load Conceptnet Numberbatch's (CN) embeddings, similar to GloVe, but probably better

    # (https://github.com/commonsense/conceptnet-numberbatch)
    embeddings_index = {}
    with open('numberbatch-en-17.04b.txt', encoding='utf-8') as f:
        for line in f:
            values = line.split(' ')
            word = values[0]
            embedding = np.asarray(values[1:], dtype='float32')
            embeddings_index[word] = embedding

    print('Word embeddings:', len(embeddings_index))  # total number of words that have an embedding

    # Find the number of words that are missing from CN, and are used more than our threshold.

    missing_words = 0
    threshold = 20

    for word, count in word_counts.items():
        if count > threshold:
            if word not in embeddings_index:
                missing_words += 1

    missing_ratio = round(missing_words/len(word_counts), 4)*100

    print("Number of words missing from CN:", missing_words)
    print("Percent of words that are missing from vocabulary: {}%".format(missing_ratio))

    # Need to use 300 for embedding dimensions to match CN's vectors.
    embedding_dim = 300
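The code that follows reads vocab_to_int, but this post never shows how that dictionary is built. The sketch below is one plausible construction, not the author's confirmed code. It assumes a word is kept when it is frequent enough or already has a pretrained vector, and that special tokens are appended at the end. Only "<UNK>" and "<EOS>" are actually referenced later in the post; "<PAD>" and "<GO>" are added here as an assumption because seq2seq models commonly need them. All names prefixed with toy_ and the function build_vocab are hypothetical.

```python
# Toy stand-ins for word_counts, embeddings_index and threshold from the post
toy_word_counts = {"dog": 25, "food": 30, "labrador": 3, "zzyzx": 1}
toy_embeddings_index = {"dog": None, "food": None, "labrador": None}
toy_threshold = 20


def build_vocab(word_counts, embeddings_index, threshold,
                special_tokens=("<UNK>", "<PAD>", "<EOS>", "<GO>")):
    """Map words to consecutive integer ids.

    Assumed rule: keep a word if it occurs at least `threshold` times
    or if a pretrained embedding exists for it.
    """
    vocab_to_int = {}
    for word, count in word_counts.items():
        if count >= threshold or word in embeddings_index:
            vocab_to_int[word] = len(vocab_to_int)
    # Special tokens get the ids right after the regular words
    for token in special_tokens:
        vocab_to_int[token] = len(vocab_to_int)
    # Reverse mapping, handy for turning model output back into words
    int_to_vocab = {i: w for w, i in vocab_to_int.items()}
    return vocab_to_int, int_to_vocab


toy_vocab_to_int, toy_int_to_vocab = build_vocab(
    toy_word_counts, toy_embeddings_index, toy_threshold)
print(toy_vocab_to_int)
```

With the real data one would call the same function on word_counts, embeddings_index and threshold and bind the first return value to vocab_to_int. Rare words without a pretrained vector (such as "zzyzx" in the toy data) are left out and are later encoded as "<UNK>".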

    nb_words = len(vocab_to_int)

    # Create matrix with default values of zero
    word_embedding_matrix = np.zeros((nb_words, embedding_dim), dtype=np.float32)
    for word, i in vocab_to_int.items():
        if word in embeddings_index:
            word_embedding_matrix[i] = embeddings_index[word]
        else:
            # If word not in CN, create a random embedding for it
            new_embedding = np.array(np.random.uniform(-1.0, 1.0, embedding_dim))
            embeddings_index[word] = new_embedding
            word_embedding_matrix[i] = new_embedding

    # Check if value matches len(vocab_to_int)
    print(len(word_embedding_matrix))

    def convert_to_ints(text, word_count, unk_count, eos=False):
        '''Convert words in text to an integer.
        If word is not in vocab_to_int, use UNK's integer.
        Total the number of words and UNKs.
        Add EOS token to the end of texts'''
        ints = []
        for sentence in text:
            sentence_ints = []
            for word in sentence.split():
                word_count += 1
                if word in vocab_to_int:
                    sentence_ints.append(vocab_to_int[word])
                else:
                    sentence_ints.append(vocab_to_int["<UNK>"])
                    unk_count += 1
            if eos:
                sentence_ints.append(vocab_to_int["<EOS>"])  # UNKs are handled above; EOS is appended here
            ints.append(sentence_ints)
        return ints, word_count, unk_count

    # Apply convert_to_ints to clean_summaries and clean_texts

    word_count = 0
    unk_count = 0

    int_summaries, word_count, unk_count = convert_to_ints(clean_summaries, word_count, unk_count)
    int_texts, word_count, unk_count = convert_to_ints(clean_texts, word_count, unk_count, eos=True)

    unk_percent = round(unk_count/word_count, 4)*100

    print("Total number of words in headlines:", word_count)
    print("Total number of UNKs in headlines:", unk_count)
    print("Percent of words that are UNK: {}%".format(unk_percent))

    def create_lengths(text):
        '''Create a data frame of the sentence lengths from a text'''
        lengths = []
        for sentence in text:
            lengths.append(len(sentence))  # record each sentence's length so the longest can be found for RNN training
        return pd.DataFrame(lengths, columns=['counts'])

    lengths_summaries = create_lengths(int_summaries)
    lengths_texts = create_lengths(int_texts)

    print("Summaries:")
    print(lengths_summaries.describe())
    print()
    print("Texts:")
    print(lengths_texts.describe())

Output:

    Summaries:
                 counts
    count  10000.000000
    mean       4.138000
    std        2.658508
    min        0.000000
    25%        2.000000
    50%        4.000000
    75%        5.000000
    max       30.000000

    Texts:
                 counts
    count  10000.000000
    mean      40.029800
    std       39.191837
    min        1.000000
    25%       18.000000
    50%       28.000000
    75%       48.000000
    max      783.000000
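The statistics above show a long tail (the longest text has 783 tokens while 75% have 48 or fewer), which is why the filtering step later in the post caps the lengths. The post does not say how its limits were chosen; one common approach, shown here purely as an assumption, is to pick a high percentile of the length distribution. The helper nearest_rank_percentile and the toy data are hypothetical.

```python
def nearest_rank_percentile(values, q):
    """Nearest-rank percentile: the smallest value such that at least
    q percent of the data is less than or equal to it (q is an integer 1..100)."""
    ordered = sorted(values)
    # Integer ceiling of q * n / 100 avoids floating-point rounding surprises
    rank = max(1, (q * len(ordered) + 99) // 100)
    return ordered[rank - 1]


# Hypothetical sentence lengths standing in for lengths_texts.counts
toy_lengths = [3, 5, 8, 12, 20, 21, 30, 45, 80, 400]

for q in (50, 90, 100):
    print("{}th percentile: {}".format(q, nearest_rank_percentile(toy_lengths, q)))

# A cutoff that keeps about 90% of the sentences and drops the extreme outlier
toy_max_length = nearest_rank_percentile(toy_lengths, 90)
kept = [n for n in toy_lengths if n <= toy_max_length]
```

With the data frames from this post, np.percentile(lengths_texts.counts, 90) would serve the same purpose; NumPy interpolates between neighbouring values by default, so its result can differ slightly from the nearest-rank value.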

    def unk_counter(sentence):
        '''Counts the number of times UNK appears in a sentence.'''
        unk_count = 0
        for word in sentence:
            if word == vocab_to_int["<UNK>"]:
                unk_count += 1
        return unk_count

    # Sort the summaries and texts by the length of the texts, shortest to longest

    # Limit the length of summaries and texts based on the min and max ranges.
    # Remove reviews that include too many UNKs

    sorted_summaries = []  # filled by the combined sort-and-filter loop below
    sorted_texts = []
    max_text_length = 84
    max_summary_length = 13
    min_length = 2
    unk_text_limit = 1
    unk_summary_limit = 0

    for length in range(min(lengths_texts.counts), max_text_length):
        for count, words in enumerate(int_summaries):
            if (len(int_summaries[count]) >= min_length and
                len(int_summaries[count]) <= max_summary_length and
                len(int_texts[count]) >= min_length and
                unk_counter(int_summaries[count]) <= unk_summary_limit and
                unk_counter(int_texts[count]) <= unk_text_limit and
                length == len(int_texts[count])
               ):
                sorted_summaries.append(int_summaries[count])
                sorted_texts.append(int_texts[count])

    # Compare lengths to ensure they match
    print(len(sorted_summaries))
    print(len(sorted_texts))

Output:

    6814
    6814
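The nested loop above rescans every review once per candidate length, so it makes roughly 80 passes over the data. The sketch below is an alternative formulation, not the post's code: it filters in a single pass and then relies on a stable sort, which should give the same ordering (shortest text first, ties in original order). The function sort_and_filter, the toy data and the choice of 0 as the UNK id are assumptions made for illustration; the default limits are copied from the post.

```python
# Toy integer-encoded pairs; 0 plays the role of vocab_to_int["<UNK>"] in this sketch
TOY_UNK = 0
toy_summaries = [[5, 6], [7], [8, 9], [0, 4], [3, 4, 5]]
toy_texts = [[1, 2, 3, 4], [1, 2], [1, 2, 3], [1, 2, 3], [1, 0, 0]]


def sort_and_filter(summaries, texts, min_length=2, max_summary_length=13,
                    max_text_length=84, unk_summary_limit=0, unk_text_limit=1,
                    unk_id=TOY_UNK):
    """Keep pairs that pass the length and UNK limits, ordered by text length."""
    kept = []
    for summary, text in zip(summaries, texts):
        if (min_length <= len(summary) <= max_summary_length
                # range(..., max_text_length) in the post excludes the upper bound
                and min_length <= len(text) < max_text_length
                and summary.count(unk_id) <= unk_summary_limit
                and text.count(unk_id) <= unk_text_limit):
            kept.append((summary, text))
    # list.sort is stable, so pairs with equal text length keep their original order
    kept.sort(key=lambda pair: len(pair[1]))
    return [s for s, _ in kept], [t for _, t in kept]


toy_sorted_summaries, toy_sorted_texts = sort_and_filter(toy_summaries, toy_texts)
print(len(toy_sorted_summaries))
print(len(toy_sorted_texts))
```

In the toy data, the second pair is dropped because its summary is too short, the fourth because its summary contains an UNK, and the fifth because its text contains two UNKs; the two remaining pairs come out ordered by text length.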