TensorFlow: freezing a trained model (baking the weights into the graph) and using it for prediction

阿新 • Published: 2019-01-08

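Machine learning has two main phases: training and prediction. This tutorial freezes a trained model and saves it. Freezing means fusing the model's graph structure and its weights into a single artifact, so the frozen model can be used as soon as it is loaded.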
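As a rough mental model of what "fusing the weights into the graph" means, the toy sketch below uses plain Python dicts to stand in for a graph and a checkpoint. The function `freeze`, the node dictionaries, and the sample weights are all hypothetical illustrations, not TensorFlow APIs or data from the demo.

```python
# Toy illustration only: plain dicts stand in for a graph and a checkpoint.
# None of these names are TensorFlow APIs.

def freeze(nodes, checkpoint):
    """Return a copy of `nodes` in which every variable carries its trained value."""
    frozen = []
    for node in nodes:
        if node["op"] == "Variable":
            # Bake the trained weight into the graph as a constant node.
            frozen.append({"name": node["name"], "op": "Const",
                           "value": checkpoint[node["name"]]})
        else:
            frozen.append(dict(node))
    return frozen


# Hypothetical graph: the structure lives here, the weight values live elsewhere.
graph = [
    {"name": "x", "op": "Placeholder"},
    {"name": "W", "op": "Variable"},
    {"name": "y", "op": "MatMul", "inputs": ["x", "W"]},
]
weights = {"W": [[0.5], [0.25]]}  # hypothetical checkpoint contents

frozen_graph = freeze(graph, weights)
```

After this step the single `frozen_graph` structure holds both the topology and the values, which is why a frozen model needs no separate weight-loading step.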

A simple demo walks through the process.

I. First, run the script tiny_model.py

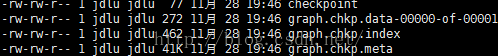

The script tiny_model.py:

    # -*- coding: utf-8 -*-
    import tensorflow as tf
    import numpy as np

    with tf.variable_scope('Placeholder'):
        inputs_placeholder = tf.placeholder(tf.float32, name='inputs_placeholder', shape=[None, 10])
        labels_placeholder = tf.placeholder(tf.float32, name='labels_placeholder', shape=[None, 1])

    with tf.variable_scope('NN'):
        W1 = tf.get_variable('W1', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b1 = tf.get_variable('b1', shape=[1], initializer=tf.constant_initializer(0.1))
        W2 = tf.get_variable('W2', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b2 = tf.get_variable('b2', shape=[1], initializer=tf.constant_initializer(0.1))

        a = tf.nn.relu(tf.matmul(inputs_placeholder, W1) + b1)
        a2 = tf.nn.relu(tf.matmul(inputs_placeholder, W2) + b2)

        y = tf.div(tf.add(a, a2), 2)

    with tf.variable_scope('Loss'):
        loss = tf.reduce_sum(tf.square(y - labels_placeholder) / 2)

    with tf.variable_scope('Accuracy'):
        predictions = tf.greater(y, 0.5, name="predictions")
        correct_predictions = tf.equal(predictions, tf.cast(labels_placeholder, tf.bool), name="correct_predictions")
        accuracy = tf.reduce_mean(tf.cast(correct_predictions, tf.float32))

    adam = tf.train.AdamOptimizer(learning_rate=1e-3)
    train_op = adam.minimize(loss)

    # generate_data: the label is 1 when the ten inputs sum to more than 45
    inputs = np.random.choice(10, size=[10000, 10])
    labels = (np.sum(inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('inputs.shape:', inputs.shape)
    print('labels.shape:', labels.shape)

    test_inputs = np.random.choice(10, size=[100, 10])
    test_labels = (np.sum(test_inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('test_inputs.shape:', test_inputs.shape)
    print('test_labels.shape:', test_labels.shape)

    batch_size = 32
    epochs = 10

    batches = []
    print("%d items in batch of %d gives us %d full batches and %d batches of %d items" % (
        len(inputs),
        batch_size,
        len(inputs) // batch_size,
        int(len(inputs) % batch_size != 0),  # one trailing partial batch, if any
        len(inputs) % batch_size)            # size of that partial batch
    )
    for i in range(len(inputs) // batch_size):
        batch = [inputs[batch_size*i:batch_size*i+batch_size], labels[batch_size*i:batch_size*i+batch_size]]
        batches.append(list(batch))
    # Append the leftover samples as a final, smaller batch
    if (i + 1) * batch_size < len(inputs):
        batch = [inputs[batch_size*(i + 1):], labels[batch_size*(i + 1):]]
        batches.append(list(batch))
    print("Number of batches: %d" % len(batches))
    # batches[k] is [inputs, labels], so the batch size is the length of batches[k][0]
    print("Size of full batch: %d" % len(batches[0][0]))
    print("Size of final batch: %d" % len(batches[-1][0]))

    global_count = 0

    with tf.Session() as sess:
        # sv = tf.train.Supervisor()
        # with sv.managed_session() as sess:
        # initialize_all_variables is deprecated; tf.global_variables_initializer() is its replacement
        sess.run(tf.initialize_all_variables())
        for i in range(epochs):
            for batch in batches:
                # print(batch[0].shape, batch[1].shape)
                train_loss, _ = sess.run([loss, train_op], feed_dict={
                    inputs_placeholder: batch[0],
                    labels_placeholder: batch[1]
                })
                # print('train_loss: %d' % train_loss)

                if global_count % 100 == 0:
                    acc = sess.run(accuracy, feed_dict={
                        inputs_placeholder: test_inputs,
                        labels_placeholder: test_labels
                    })
                    print('accuracy: %f' % acc)
                global_count += 1

        acc = sess.run(accuracy, feed_dict={
            inputs_placeholder: test_inputs,
            labels_placeholder: test_labels
        })
        print("final accuracy: %f" % acc)

        # The model must be saved while the session is still open
        saver = tf.train.Saver()
        last_chkp = saver.save(sess, 'results/graph.chkp')
        # sv.saver.save(sess, 'results/graph.chkp')

    for op in tf.get_default_graph().get_operations():
        print(op.name)

Note: saver.save must be called inside the session, because the graph is only live inside a session and only then can the parameters be written out. After save runs, the checkpoint files are written with the prefix results/graph.chkp.
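The batching arithmetic in tiny_model.py (10000 samples, batches of 32, one smaller final batch) can be checked with a small standalone sketch. The helper `make_batches` is a hypothetical name introduced here for illustration; it uses plain lists rather than the NumPy arrays of the script.

```python
def make_batches(items, batch_size):
    """Split `items` into consecutive batches; the last one may be smaller."""
    return [items[start:start + batch_size]
            for start in range(0, len(items), batch_size)]


samples = list(range(10000))          # stand-in for the 10000 training rows
batches = make_batches(samples, 32)

# divmod gives the number of full batches and the size of the leftover batch.
full, remainder = divmod(len(samples), 32)
print("full batches:", full)
print("leftover items:", remainder)
print("total batches:", len(batches))
```

Slicing with a step of `batch_size` handles the leftover samples automatically, so no separate branch for the final batch is needed.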

II. Putting the files above together, the steps to produce a usable model are:

1. Restore the graph we saved.
2. Open a Session and load the weights the graph requires.
3. Remove the metadata that is irrelevant to prediction.
4. Serialize the processed model and save it.

Run freeze.py, for example with `python freeze.py --model_folder results`:

    # -*- coding: utf-8 -*-
    import os, argparse
    import tensorflow as tf
    from tensorflow.python.framework import graph_util

    dir = os.path.dirname(os.path.realpath(__file__))

    def freeze_graph(model_folder):
        # Retrieve the full path of the latest checkpoint
        checkpoint = tf.train.get_checkpoint_state(model_folder)
        input_checkpoint = checkpoint.model_checkpoint_path

        # Build the full file name of the frozen graph
        absolute_model_folder = "/".join(input_checkpoint.split('/')[:-1])
        output_graph = absolute_model_folder + "/frozen_model.pb"

        # Before exporting the graph we must specify the output node(s), i.e. the
        # node(s) whose value we want at inference time. The variable is a
        # comma-separated string because there can be several output nodes.
        # The output node names come from the model definition. Only once they are
        # given can freezing decide which nodes are needed to compute the output
        # and which can be dropped, so output_node_names must be adapted to each network.
        output_node_names = "Accuracy/predictions"

        # Clear the devices so TensorFlow can choose where to place ops at load time
        clear_devices = True

        # Import the meta graph and retrieve a Saver
        saver = tf.train.import_meta_graph(input_checkpoint + '.meta', clear_devices=clear_devices)

        # Retrieve the protobuf graph definition
        graph = tf.get_default_graph()
        input_graph_def = graph.as_graph_def()

        # Start a session and restore the graph weights.
        # The trained parameters are loaded here, so the model saved at the end
        # contains the graph with the parameters already baked in; that is why it
        # is called "frozen".
        with tf.Session() as sess:
            saver.restore(sess, input_checkpoint)

            # Use a built-in TF helper to convert variables to constants
            output_graph_def = graph_util.convert_variables_to_constants(
                sess,
                input_graph_def,
                output_node_names.split(",")  # split on commas for convenience
            )

            # Finally, serialize the output graph and write it to disk
            with tf.gfile.GFile(output_graph, "wb") as f:
                f.write(output_graph_def.SerializeToString())
            print("%d ops in the final graph." % len(output_graph_def.node))

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument("--model_folder", type=str, help="Model folder to export")
        args = parser.parse_args()

        freeze_graph(args.model_folder)

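Note: freezing requires the names of the output nodes. A network can be fairly complex; once the output node names are given, freezing keeps only the subgraph needed to compute those nodes and discards everything unrelated. Since the purpose of freezing a model is to run prediction afterwards, output_node_names is normally the prediction target.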
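The idea of keeping only the subgraph that the output node depends on can be sketched as a backward traversal over a dependency map. This is a simplified illustration, not TensorFlow's implementation: `required_nodes` is a hypothetical helper, and the `inputs_of` map is a hand-written, abbreviated stand-in loosely modelled on the demo network (names such as `NN/y`, `Loss/loss`, and `train_op` are assumed for illustration).

```python
def required_nodes(inputs_of, output_names):
    """Return every node name reachable backwards from the output nodes."""
    keep, stack = set(), list(output_names)
    while stack:
        name = stack.pop()
        if name in keep:
            continue
        keep.add(name)
        # Visit the nodes this node reads from; leaf nodes have no entry.
        stack.extend(inputs_of.get(name, []))
    return keep


# Hypothetical, simplified dependency map: node -> nodes it reads from.
inputs_of = {
    "Accuracy/predictions": ["NN/y"],
    "NN/y": ["Placeholder/inputs_placeholder", "NN/W1", "NN/b1"],
    "Loss/loss": ["NN/y", "Placeholder/labels_placeholder"],
    "train_op": ["Loss/loss"],
}

kept = required_nodes(inputs_of, ["Accuracy/predictions"])
print(sorted(kept))
```

In this toy map, the loss, the optimizer step, and the labels placeholder are never reached from `Accuracy/predictions`, so they would be dropped, which mirrors why training-only nodes do not need to survive in a model frozen for prediction.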

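III. Load the frozen model. Note that this model already contains both the graph and the corresponding parameters, so no parameters need to be loaded separately; the model is ready to use as soon as it is loaded.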

    # -*- coding: utf-8 -*-
    import argparse
    import tensorflow as tf

    def load_graph(frozen_graph_filename):
        # Parse the serialized graph_def file
        with tf.gfile.GFile(frozen_graph_filename, "rb") as f:
            graph_def = tf.GraphDef()
            graph_def.ParseFromString(f.read())

        # Import the graph_def into a new graph
        with tf.Graph().as_default() as graph:
            tf.import_graph_def(
                graph_def,
                input_map=None,
                return_elements=None,
                name="prefix",
                op_dict=None,
                producer_op_list=None
            )
        return graph

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument("--frozen_model_filename", default="results/frozen_model.pb", type=str, help="Frozen model file to import")
        args = parser.parse_args()

        # Load the graph whose parameters have already been frozen in
        graph = load_graph(args.frozen_model_filename)

        # List the operations:
        # op.values() gives the list of tensors the op produces
        # op.name gives the op's name
        # Input and output nodes are operations too, so we can read their names
        for op in graph.get_operations():
            print(op.name, op.values())
            # prefix/Placeholder/inputs_placeholder
            # ...
            # prefix/Accuracy/predictions

        # To predict we need the tensor to feed, so we need that tensor's name.
        # prefix/Placeholder/inputs_placeholder is only the op name;
        # prefix/Placeholder/inputs_placeholder:0 is the tensor name.
        x = graph.get_tensor_by_name('prefix/Placeholder/inputs_placeholder:0')
        y = graph.get_tensor_by_name('prefix/Accuracy/predictions:0')

        with tf.Session(graph=graph) as sess:
            y_out = sess.run(y, feed_dict={
                x: [[3, 5, 7, 4, 5, 1, 1, 1, 1, 1]]  # sum < 45
            })
            # predictions is a boolean tensor, so the expected result is [[False]]
            print(y_out)
        print("finish")

Notes:

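1. At prediction time, once the frozen model is loaded, we only need to identify the input tensor and the target tensor.

2. Pay attention to the difference between tensor names and op names.

prefix/Placeholder/inputs_placeholder is only the name of the operation; prefix/Placeholder/inputs_placeholder:0 is the name of the tensor.

x = graph.get_tensor_by_name('prefix/Placeholder/inputs_placeholder:0') must be given the tensor name.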

3. To list the names of the ops in the graph together with the tensors they produce, use code like this:

    # List the operations:
    # op.values() gives the list of tensors the op produces
    # op.name gives the op's name
    # Input and output nodes are operations too, so we can read their names
    for op in graph.get_operations():
        print(op.name, op.values())
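The op-name versus tensor-name distinction from note 2 follows the `<op_name>:<output_index>` convention. The sketch below is a plain-Python illustration of that convention; `split_tensor_name` is a hypothetical helper written for this example, not a TensorFlow function.

```python
def split_tensor_name(tensor_name):
    """Split '<op_name>:<output_index>' into (op_name, output_index)."""
    op_name, sep, index = tensor_name.rpartition(":")
    if not sep or not index.isdigit():
        # No ':<index>' suffix means this is an operation name only.
        raise ValueError("%r is an operation name, not a tensor name" % tensor_name)
    return op_name, int(index)


op_name, output_index = split_tensor_name("prefix/Placeholder/inputs_placeholder:0")
print(op_name, output_index)

try:
    split_tensor_name("prefix/Placeholder/inputs_placeholder")
    rejected = False
except ValueError:
    rejected = True
print("op name rejected:", rejected)
```

The `:0` suffix selects the first output of the operation; an op with several outputs would expose them as `:0`, `:1`, and so on.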

The example above saves the model with Saver(). The model can also be saved with sv = tf.train.Supervisor():

    # -*- coding: utf-8 -*-
    import tensorflow as tf
    import numpy as np

    with tf.variable_scope('Placeholder'):
        inputs_placeholder = tf.placeholder(tf.float32, name='inputs_placeholder', shape=[None, 10])
        labels_placeholder = tf.placeholder(tf.float32, name='labels_placeholder', shape=[None, 1])

    with tf.variable_scope('NN'):
        W1 = tf.get_variable('W1', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b1 = tf.get_variable('b1', shape=[1], initializer=tf.constant_initializer(0.1))
        W2 = tf.get_variable('W2', shape=[10, 1], initializer=tf.random_normal_initializer(stddev=1e-1))
        b2 = tf.get_variable('b2', shape=[1], initializer=tf.constant_initializer(0.1))

        a = tf.nn.relu(tf.matmul(inputs_placeholder, W1) + b1)
        a2 = tf.nn.relu(tf.matmul(inputs_placeholder, W2) + b2)

        y = tf.div(tf.add(a, a2), 2)

    with tf.variable_scope('Loss'):
        loss = tf.reduce_sum(tf.square(y - labels_placeholder) / 2)

    with tf.variable_scope('Accuracy'):
        predictions = tf.greater(y, 0.5, name="predictions")
        correct_predictions = tf.equal(predictions, tf.cast(labels_placeholder, tf.bool), name="correct_predictions")
        accuracy = tf.reduce_mean(tf.cast(correct_predictions, tf.float32))

    adam = tf.train.AdamOptimizer(learning_rate=1e-3)
    train_op = adam.minimize(loss)

    # generate_data: the label is 1 when the ten inputs sum to more than 45
    inputs = np.random.choice(10, size=[10000, 10])
    labels = (np.sum(inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('inputs.shape:', inputs.shape)
    print('labels.shape:', labels.shape)

    test_inputs = np.random.choice(10, size=[100, 10])
    test_labels = (np.sum(test_inputs, axis=1) > 45).reshape(-1, 1).astype(np.float32)
    print('test_inputs.shape:', test_inputs.shape)
    print('test_labels.shape:', test_labels.shape)

    batch_size = 32
    epochs = 10

    batches = []
    print("%d items in batch of %d gives us %d full batches and %d batches of %d items" % (
        len(inputs),
        batch_size,
        len(inputs) // batch_size,
        int(len(inputs) % batch_size != 0),  # one trailing partial batch, if any
        len(inputs) % batch_size)            # size of that partial batch
    )
    for i in range(len(inputs) // batch_size):
        batch = [inputs[batch_size*i:batch_size*i+batch_size], labels[batch_size*i:batch_size*i+batch_size]]
        batches.append(list(batch))
    # Append the leftover samples as a final, smaller batch
    if (i + 1) * batch_size < len(inputs):
        batch = [inputs[batch_size*(i + 1):], labels[batch_size*(i + 1):]]
        batches.append(list(batch))
    print("Number of batches: %d" % len(batches))
    # batches[k] is [inputs, labels], so the batch size is the length of batches[k][0]
    print("Size of full batch: %d" % len(batches[0][0]))
    print("Size of final batch: %d" % len(batches[-1][0]))

    global_count = 0

    # with tf.Session() as sess:
    sv = tf.train.Supervisor()
    with sv.managed_session() as sess:
        # sess.run(tf.initialize_all_variables())
        for i in range(epochs):
            for batch in batches:
                # print(batch[0].shape, batch[1].shape)
                train_loss, _ = sess.run([loss, train_op], feed_dict={
                    inputs_placeholder: batch[0],
                    labels_placeholder: batch[1]
                })
                # print('train_loss: %d' % train_loss)

                if global_count % 100 == 0:
                    acc = sess.run(accuracy, feed_dict={
                        inputs_placeholder: test_inputs,
                        labels_placeholder: test_labels
                    })
                    print('accuracy: %f' % acc)
                global_count += 1

        acc = sess.run(accuracy, feed_dict={
            inputs_placeholder: test_inputs,
            labels_placeholder: test_labels
        })
        print("final accuracy: %f" % acc)

        # The model must be saved while the session is still open
        # saver = tf.train.Saver()
        # last_chkp = saver.save(sess, 'results/graph.chkp')
        sv.saver.save(sess, 'results/graph.chkp')

    for op in tf.get_default_graph().get_operations():
        print(op.name)

Note: when sv = tf.train.Supervisor() is used, the variables no longer need to be initialized by hand, because the Supervisor runs the initialization itself. Comment out sess.run(tf.initialize_all_variables()); otherwise an error is raised.