BP神經網路python簡單實現

BP神經網路的原理在網上有很詳細的說明,這裡就不打算細說,這篇文章主要簡單的方式設計及實現BP神經網路,並簡單測試下在恆等計算(編碼)作測試。

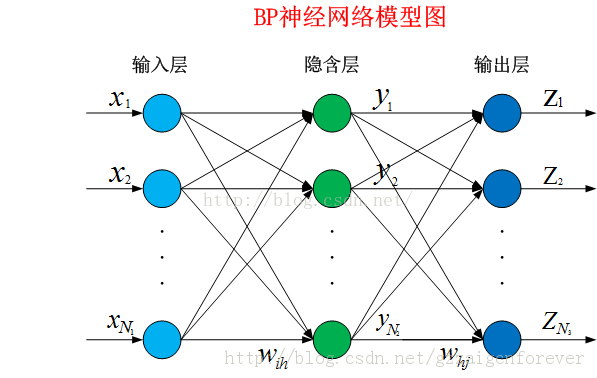

BP神經網路模型圖如下

BP神經網路基本思想

BP神經網路學習過程由資訊的下向傳遞和誤差的反向傳播兩個過程組成

正向傳遞:由模型圖中的資料x從輸入層到最後輸出層z的過程。

反向傳播:在訓練階段,如果正向傳遞過程中發現輸出的值與期望的傳有誤差,由將誤差從輸出層返傳回輸入層的過程。返回的過程主要是修改每一層每個連線的權值w,達到減少誤的過程。

BP神經網路設計

設計思路是將神經網路分為神經元、網路層及整個網路三個層次。

首先是定義使用sigmoid函式作為啟用函式

def logistic(x):

return 1 / (1 + np.exp(-x))

def logistic_derivative(x):

return logistic(x) * (1 - logistic(x))神經元的設計

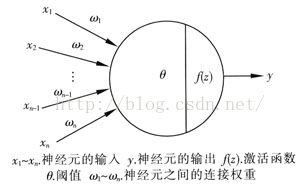

由神經元的設計圖可知,BP神經網路可拆解成是神經元的集合。

神經元主要功能:

- 計算資料,輸出結果。

- 更新各連線權值。

- 向上一層反饋權值更新值,實現反饋功能。

注意到:

程式碼是新增衝量功能(+0.9 * self.last_weight_add), 這可以加速收斂,如果有興趣可把它去掉看看訓練結果。weight_add = self.input * self.deltas_item * learning_rate + 0.9 * self.last_weight_add#新增衝量

神經元設計程式碼如下:

class Neuron: def __init__(self, len_input): # 輸入的初始引數, 隨機取很小的值(<0.1) self.weights = np.random.random(len_input) * 0.1 # 當前例項的輸入 self.input = np.ones(len_input) # 對下一層的輸出值 self.output = 1 # 誤差項 self.deltas_item = 0 # 上一次權重增加的量,記錄起來方便後面擴充套件時可考慮增加衝量 self.last_weight_add = 0 def calc_output(self, x): # 計算輸出值 self.input = x self.output = logistic(np.dot(self.weights.T, self.input)) return self.output def get_back_weight(self): # 獲取反饋差值 return self.weights * self.deltas_item def update_weight(self, target=0, back_weight=0, learning_rate=0.1, layer="OUTPUT"): # 更新權傳 if layer == "OUTPUT": self.deltas_item = (target - self.output) * logistic_derivative(self.output) elif layer == "HIDDEN": self.deltas_item = back_weight * logistic_derivative(self.output) weight_add = self.input * self.deltas_item * learning_rate + 0.9 * self.last_weight_add#新增衝量 self.weights += weight_add self.last_weight_add = weight_add

網路層設計

管理一個網路層的程式碼,分為隱藏層和輸出層。 (輸入層可直接用輸入資料,不簡單實現。)

網路層主要管理自己層的神經元,所以封裝的結果與神經元的介面一樣。對向實現自己的功能。

同時為了方便處理,添加了他下一層的引用。

class NetLayer:

'''

網路層封裝

管理當前網路層的神經元列表

'''

def __init__(self, len_node, in_count):

'''

:param len_node: 當前層的神經元數

:param in_count: 當前層的輸入數

'''

# 當前層的神經元列表

self.neurons = [Neuron(in_count) for _ in range(len_node)]

# 記錄下一層的引用,方便遞迴操作

self.next_layer = None

def calc_output(self, x):

output = np.array([node.calc_output(x) for node in self.neurons])

if self.next_layer is not None:

return self.next_layer.calc_output(output)

return output

def get_back_weight(self):

return sum([node.get_back_weight() for node in self.neurons])

def update_weight(self, learning_rate, target):

'''

更新當前網路層及之後層次的權重

使用了遞迴來操作,所以要求外面呼叫時必須從網路層的第一層(輸入層的下一層)來呼叫

:param learning_rate: 學習率

:param target: 輸出值

'''

layer = "OUTPUT"

back_weight = np.zeros(len(self.neurons))

if self.next_layer is not None:

back_weight = self.next_layer.update_weight(learning_rate, target)

layer = "HIDDEN"

for i, node in enumerate(self.neurons): target_item = 0 if len(target) <= i else target[i]

node.update_weight(target= target_item, back_weight=back_weight[i], learning_rate=learning_rate, layer=layer)

return self.get_back_weight()BP神經網路實現

管理整個網路,對外提供訓練介面及預測介面。

構建網路引數為一列表, 第一個元素程式碼輸入引數個數, 最後一個程式碼輸出神經元個數,中間的為各個隱藏層中的神經元的個數。

由於各層間程式碼鏈式儲存, 所以layers[0]操作就程式碼了整個網路。

lass NetLayer:

'''''

網路層封裝

管理當前網路層的神經元列表

'''

def __init__(self, len_node, in_count):

'''''

:param len_node: 當前層的神經元數

:param in_count: 當前層的輸入數

'''

# 當前層的神經元列表

self.neurons = [Neuron(in_count) for _ in range(len_node)]

# 記錄下一層的引用,方便遞迴操作

self.next_layer = None

def calc_output(self, x):

output = np.array([node.calc_output(x) for node in self.neurons])

if self.next_layer is not None:

return self.next_layer.calc_output(output)

return output

def get_back_weight(self):

return sum([node.get_back_weight() for node in self.neurons])

def update_weight(self, learning_rate, target):

'''''

更新當前網路層及之後層次的權重

使用了遞迴來操作,所以要求外面呼叫時必須從網路層的第一層(輸入層的下一層)來呼叫

:param learning_rate: 學習率

:param target: 輸出值

'''

layer = "OUTPUT"

back_weight = np.zeros(len(self.neurons))

if self.next_layer is not None:

back_weight = self.next_layer.update_weight(learning_rate, target)

layer = "HIDDEN"

for i, node in enumerate(self.neurons):

target_item = 0 if len(target)<i else target[i]

node.update_weight(target=target_item, back_weight = back_weight[i], learning_rate = learning_rate, layer = layer)

return self.get_back_weight()

class NeuralNetWork:

def __init__(self, layers):

self.layers = []

self.construct_network(layers)

pass

def construct_network(self, layers):

last_layer = None

for i, layer in enumerate(layers):

if i == 0:

continue

cur_layer = NetLayer(layer, layers[i-1])

self.layers.append(cur_layer)

if last_layer is not None:

last_layer.next_layer = cur_layer

last_layer = cur_layer

def fit(self, x_train, y_train, learning_rate=0.1, epochs=100000, shuffle=False):

'''

訓練網路, 預設按順序來訓練

方法 1:按訓練資料順序來訓練

方法 2: 隨機選擇測試

:param x_train: 輸入資料

:param y_train: 輸出資料

:param learning_rate: 學習率

:param epochs:權重更新次數

:param shuffle:隨機取資料訓練

'''

indices = np.arange(len(x_train))

for _ in range(epochs):

if shuffle:

np.random.shuffle(indices)

for i in indices:

self.layers[0].calc_output(x_train[i])

self.layers[0].update_weight(learning_rate, y_train[i])

pass

def predict(self, x):

return self.layers[0].calc_output(x)

測試程式碼

測試資料中輸出資料和輸出資料一樣。測試AutoEncoder自動編碼器。(AutoEncoder不瞭解的可網上找一下。)

if __name__ == '__main__':

print("test neural network")

data = np.array([[1, 0, 0, 0, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0, 0, 0],

[0, 0, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 1, 0, 0, 0, 0],

[0, 0, 0, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 0, 0],

[0, 0, 0, 0, 0, 0, 1, 0],

[0, 0, 0, 0, 0, 0, 0, 1]])

np.set_printoptions(precision=3, suppress=True)

for item in range(10):

network = NeuralNetWork([8, 3, 8])

# 讓輸入資料與輸出資料相等

network.fit(data, data, learning_rate=0.1, epochs=10000)

print("\n\n", item, "result")

for item in data:

print(item, network.predict(item))結果輸出

效果還不錯,達到了預想的結果。

問題:可測試結果中有 0.317(已經標紅), 是由於把8個數據編碼成3個數據有點勉強。 如果網路改成[8,4,8]就能夠不出現這樣的結果。 大家可以試一下。

/Library/Frameworks/Python.framework/Versions/3.4/bin/python3.4 /XXXX/機器學習/number/NeuralNetwork.py

test neural network

0 result

[1 0 0 0 0 0 0 0] [ 0.987 0. 0.005 0. 0. 0.01 0.004 0. ]

[0 1 0 0 0 0 0 0] [ 0. 0.985 0. 0.006 0. 0.025 0. 0.008]

[0 0 1 0 0 0 0 0] [ 0.007 0. 0.983 0. 0.007 0.027 0. 0. ]

[0 0 0 1 0 0 0 0] [ 0. 0.005 0. 0.985 0.007 0.02 0. 0. ]

[0 0 0 0 1 0 0 0] [ 0. 0. 0.005 0.005 0.983 0.013 0. 0. ]

[0 0 0 0 0 1 0 0] [ 0.016 0.017 0.02 0.018 0.018 <span style="color:#ff0000;">0.317</span> 0.023 0.017]

[0 0 0 0 0 0 1 0] [ 0.006 0. 0. 0. 0. 0.026 0.984 0.006]

[0 0 0 0 0 0 0 1] [ 0. 0.005 0. 0. 0. 0.01 0.004 0.985]

1 result

[1 0 0 0 0 0 0 0] [ 0.983 0. 0. 0.007 0.007 0. 0. 0.027]

[0 1 0 0 0 0 0 0] [ 0. 0.986 0.004 0. 0. 0. 0.005 0.01 ]

[0 0 1 0 0 0 0 0] [ 0. 0.005 0.985 0. 0.005 0. 0. 0.026]

[0 0 0 1 0 0 0 0] [ 0.005 0. 0. 0.983 0. 0.006 0. 0.015]

[0 0 0 0 1 0 0 0] [ 0.005 0. 0.004 0. 0.987 0. 0. 0.01 ]

[0 0 0 0 0 1 0 0] [ 0. 0. 0. 0.006 0. 0.984 0.005 0.018]

[0 0 0 0 0 0 1 0] [ 0. 0.008 0. 0. 0. 0.006 0.984 0.027]

[0 0 0 0 0 0 0 1] [ 0.018 0.017 0.025 0.018 0.016 0.018 0.017 <span style="color:#ff0000;">0.317]</span>

2 result

[1 0 0 0 0 0 0 0] [ 0.966 0. 0.016 0.014 0. 0. 0. 0. ]

[0 1 0 0 0 0 0 0] [ 0. 0.969 0. 0.016 0. 0. 0. 0.014]

[0 0 1 0 0 0 0 0] [ 0.012 0. 0.969 0. 0. 0.013 0. 0. ]

[0 0 0 1 0 0 0 0] [ 0.014 0.014 0. 0.969 0. 0. 0. 0. ]

[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.962 0.016 0.02 0. ]

[0 0 0 0 0 1 0 0] [ 0. 0. 0.02 0. 0.016 0.963 0. 0. ]

[0 0 0 0 0 0 1 0] [ 0. 0. 0. 0. 0.012 0. 0.969 0.011]

[0 0 0 0 0 0 0 1] [ 0. 0.014 0. 0. 0. 0. 0.016 0.966]

3 result

[1 0 0 0 0 0 0 0] [ 0.983 0. 0. 0.007 0.027 0. 0. 0.007]

[0 1 0 0 0 0 0 0] [ 0. 0.986 0.004 0. 0.01 0.005 0. 0. ]

[0 0 1 0 0 0 0 0] [ 0. 0.006 0.984 0.006 0.026 0. 0. 0. ]

[0 0 0 1 0 0 0 0] [ 0.005 0. 0.004 0.987 0.01 0. 0. 0. ]

[0 0 0 0 1 0 0 0] [ 0.019 0.017 0.024 0.016 <span style="color:#ff0000;">0.317</span> 0.017 0.018 0.018]

[0 0 0 0 0 1 0 0] [ 0. 0.008 0. 0. 0.026 0.984 0.006 0. ]

[0 0 0 0 0 0 1 0] [ 0. 0. 0. 0. 0.019 0.005 0.984 0.007]

[0 0 0 0 0 0 0 1] [ 0.005 0. 0. 0. 0.014 0. 0.005 0.983]

4 result

[1 0 0 0 0 0 0 0] [ 0.969 0.014 0. 0. 0. 0. 0.014 0. ]

[0 1 0 0 0 0 0 0] [ 0.014 0.966 0.016 0. 0. 0. 0. 0. ]

[0 0 1 0 0 0 0 0] [ 0. 0.011 0.969 0. 0. 0.012 0. 0. ]

[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.966 0. 0. 0.013 0.016]

[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.963 0.016 0. 0.02 ]

[0 0 0 0 0 1 0 0] [ 0. 0. 0.02 0. 0.016 0.963 0. 0. ]

[0 0 0 0 0 0 1 0] [ 0.016 0. 0. 0.014 0. 0. 0.969 0. ]

[0 0 0 0 0 0 0 1] [ 0. 0. 0. 0.011 0.012 0. 0. 0.969]

5 result

[1 0 0 0 0 0 0 0] [ 0.966 0. 0.016 0. 0. 0.018 0. 0. ]

[0 1 0 0 0 0 0 0] [ 0. 0.969 0.012 0. 0. 0. 0.011 0. ]

[0 0 1 0 0 0 0 0] [ 0.015 0.018 0.964 0. 0. 0. 0. 0. ]

[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.968 0.013 0. 0. 0.013]

[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0.015 0.965 0.015 0. 0. ]

[0 0 0 0 0 1 0 0] [ 0.013 0. 0. 0. 0.013 0.968 0. 0. ]

[0 0 0 0 0 0 1 0] [ 0. 0.018 0. 0. 0. 0. 0.965 0.014]

[0 0 0 0 0 0 0 1] [ 0. 0. 0. 0.018 0. 0. 0.015 0.967]

6 result

[1 0 0 0 0 0 0 0] [ 0.983 0.006 0. 0.005 0. 0. 0. 0.016]

[0 1 0 0 0 0 0 0] [ 0.006 0.983 0. 0. 0. 0.005 0. 0.017]

[0 0 1 0 0 0 0 0] [ 0. 0. 0.987 0.005 0. 0. 0.004 0.01 ]

[0 0 0 1 0 0 0 0] [ 0.007 0. 0.007 0.983 0. 0. 0. 0.027]

[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.987 0.005 0.004 0.01 ]

[0 0 0 0 0 1 0 0] [ 0. 0.007 0. 0. 0.008 0.983 0. 0.027]

[0 0 0 0 0 0 1 0] [ 0. 0. 0.005 0. 0.005 0. 0.985 0.026]

[0 0 0 0 0 0 0 1] [ 0.018 0.018 0.017 0.017 0.017 0.017 0.025 <span style="color:#ff0000;">0.317</span>]

7 result

[1 0 0 0 0 0 0 0] [ 0.969 0. 0. 0. 0.014 0. 0. 0.015]

[0 1 0 0 0 0 0 0] [ 0. 0.963 0.02 0. 0. 0.017 0. 0. ]

[0 0 1 0 0 0 0 0] [ 0. 0.012 0.969 0. 0. 0. 0.011 0. ]

[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.969 0.011 0.013 0. 0. ]

[0 0 0 0 1 0 0 0] [ 0.014 0. 0. 0.016 0.966 0. 0. 0. ]

[0 0 0 0 0 1 0 0] [ 0. 0.016 0. 0.02 0. 0.962 0. 0. ]

[0 0 0 0 0 0 1 0] [ 0. 0. 0.016 0. 0. 0. 0.966 0.014]

[0 0 0 0 0 0 0 1] [ 0.015 0. 0. 0. 0. 0. 0.014 0.969]

8 result

[1 0 0 0 0 0 0 0] [ 0.966 0.016 0.013 0. 0. 0. 0. 0. ]

[0 1 0 0 0 0 0 0] [ 0.011 0.969 0. 0. 0. 0. 0.012 0. ]

[0 0 1 0 0 0 0 0] [ 0.014 0. 0.969 0.015 0. 0. 0. 0. ]

[0 0 0 1 0 0 0 0] [ 0. 0. 0.015 0.969 0. 0.014 0. 0. ]

[0 0 0 0 1 0 0 0] [ 0. 0. 0. 0. 0.963 0. 0.016 0.02 ]

[0 0 0 0 0 1 0 0] [ 0. 0. 0. 0.013 0. 0.966 0. 0.016]

[0 0 0 0 0 0 1 0] [ 0. 0.02 0. 0. 0.016 0. 0.963 0. ]

[0 0 0 0 0 0 0 1] [ 0. 0. 0. 0. 0.012 0.011 0. 0.969]

9 result

[1 0 0 0 0 0 0 0] [ 0.969 0. 0. 0. 0. 0.011 0. 0.011]

[0 1 0 0 0 0 0 0] [ 0. 0.968 0. 0. 0. 0. 0.018 0.015]

[0 0 1 0 0 0 0 0] [ 0. 0. 0.965 0. 0.015 0. 0.015 0. ]

[0 0 0 1 0 0 0 0] [ 0. 0. 0. 0.966 0.018 0.016 0. 0. ]

[0 0 0 0 1 0 0 0] [ 0. 0. 0.013 0.013 0.968 0. 0. 0. ]

[0 0 0 0 0 1 0 0] [ 0.018 0. 0. 0.014 0. 0.964 0. 0. ]

[0 0 0 0 0 0 1 0] [ 0. 0.013 0.013 0. 0. 0. 0.968 0. ]

[0 0 0 0 0 0 0 1] [ 0.018 0.014 0. 0. 0. 0. 0. 0.965]

Process finished with exit code 0