Image Classification with TensorFlow and VGG16

1. Introduction to VGG-16

VGG was proposed in the paper Very Deep Convolutional Networks for Large-Scale Image Recognition. The model achieves 92.7% top-5 test accuracy on ImageNet, a dataset of more than 14 million images belonging to 1000 classes.

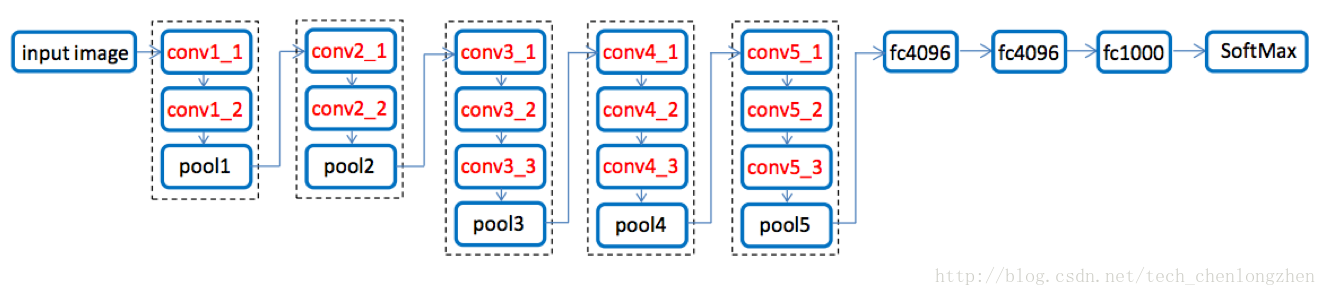

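VGG-16 is a deep convolutional neural network. The 16 refers to its depth: 13 convolutional layers plus 3 fully connected layers. It performs well on tasks such as image classification.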

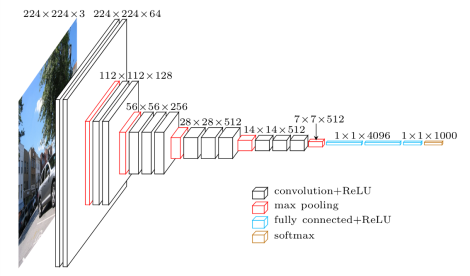

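The macro-architecture of VGG16 is shown in the accompanying figure. The code is defined in the TensorFlow file vgg16.py. Note that it includes a preprocessing layer, which takes an RGB image with pixel values in the range 0-255 and subtracts the mean pixel values (computed over the entire ImageNet training set).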
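The preprocessing step described above can be sketched in a few lines of NumPy. This is only an illustration, not the code from vgg16.py: the function name `subtract_mean` is hypothetical, and the three channel means are the values commonly quoted for the ImageNet training set, so check them against the file you downloaded.

```python
import numpy as np

# Per-channel RGB means commonly quoted for the ImageNet training set.
# Assumed values: verify them against the vgg16.py you are using.
IMAGENET_RGB_MEAN = np.array([123.68, 116.779, 103.939], dtype=np.float32)


def subtract_mean(images):
    """Zero-center a batch of RGB images shaped (N, H, W, 3), values in 0-255."""
    images = np.asarray(images, dtype=np.float32)
    # Broadcasting applies the three channel means to every pixel.
    return images - IMAGENET_RGB_MEAN


# Dummy uniform gray image standing in for a real 224x224 input.
batch = np.full((1, 224, 224, 3), 128.0, dtype=np.float32)
centered = subtract_mean(batch)
print(centered.shape, centered[0, 0, 0])
```

Doing the subtraction inside the model, rather than in a separate script, means callers can feed raw 0-255 pixel values directly.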

2. Files

Model weights - vgg16_weights.npz

TensorFlow model - vgg16.py

Class names (mapping from model output index to class name) - imagenet_classes.py

Example input image - laska.png

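We used a dedicated tool to convert the Caffe weights that the original authors made publicly available on their GitHub profile, then did some post-processing to make sure the model conforms to TensorFlow conventions. The result is the ready-to-use weight file vgg16_weights.npz.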
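An .npz file is a NumPy archive of named arrays, so it can be inspected without TensorFlow. The sketch below is an assumption about how such a file can be listed, not the loader from vgg16.py: it writes a tiny stand-in archive (the file name `demo_weights.npz` and the helper `list_weights` are hypothetical) and prints each array's index, name, and shape in sorted-name order.

```python
import os
import tempfile

import numpy as np

# Build a tiny stand-in archive with four arrays; the real
# vgg16_weights.npz is far larger and is not needed for this demo.
path = os.path.join(tempfile.mkdtemp(), "demo_weights.npz")
np.savez(
    path,
    fc8_b=np.zeros((1000,), dtype=np.float32),
    fc8_W=np.zeros((4096, 1000), dtype=np.float32),
    conv1_1_b=np.zeros((64,), dtype=np.float32),
    conv1_1_W=np.zeros((3, 3, 3, 64), dtype=np.float32),
)


def list_weights(npz_path):
    """Return (index, name, shape) for every array, in sorted-name order."""
    with np.load(npz_path) as weights:
        names = sorted(weights.files)
        return [(i, name, weights[name].shape) for i, name in enumerate(names)]


entries = list_weights(path)
for index, name, shape in entries:
    print(index, name, shape)
```

Sorting the names puts each `_W` array before its `_b` array, because uppercase `W` sorts before lowercase `b`. That matches the weight-then-bias ordering of the listing in the run logs.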

Download all the files into the same folder, then run python vgg16.py

- Code of vgg16.py:

import tensorflow as tf

3. Running and testing

Test 1:

Input image: laska.png

Output:

2018-03-23 11:04:38.311802: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2018-03-23 11:04:38.311873: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

0 conv1_1_W (3, 3, 3, 64)

1 conv1_1_b (64,)

2 conv1_2_W (3, 3, 64, 64)

3 conv1_2_b (64,)

4 conv2_1_W (3, 3, 64, 128)

5 conv2_1_b (128,)

6 conv2_2_W (3, 3, 128, 128)

7 conv2_2_b (128,)

8 conv3_1_W (3, 3, 128, 256)

9 conv3_1_b (256,)

10 conv3_2_W (3, 3, 256, 256)

11 conv3_2_b (256,)

12 conv3_3_W (3, 3, 256, 256)

13 conv3_3_b (256,)

14 conv4_1_W (3, 3, 256, 512)

15 conv4_1_b (512,)

16 conv4_2_W (3, 3, 512, 512)

17 conv4_2_b (512,)

18 conv4_3_W (3, 3, 512, 512)

19 conv4_3_b (512,)

20 conv5_1_W (3, 3, 512, 512)

21 conv5_1_b (512,)

22 conv5_2_W (3, 3, 512, 512)

23 conv5_2_b (512,)

24 conv5_3_W (3, 3, 512, 512)

25 conv5_3_b (512,)

26 fc6_W (25088, 4096)

27 fc6_b (4096,)

28 fc7_W (4096, 4096)

29 fc7_b (4096,)

30 fc8_W (4096, 1000)

31 fc8_b (1000,)

class_name **weasel**: step 0.693385839462

class_name polecat, fitch, foulmart, foumart, Mustela putorius: step 0.175387635827

class_name mink: step 0.12208583951

class_name black-footed ferret, ferret, Mustela nigripes: step 0.00887066219002

class_name otter: step 0.000121083263366

The classification result is weasel.
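The five lines at the end of the log are the five most probable classes. A minimal sketch of how such a top-5 list can be produced from raw scores follows. The six class names and scores are made up for illustration (the real model outputs 1000 scores), the helpers `softmax` and `top_k` are hypothetical, and the print format merely mimics the log above.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D array of scores."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()


def top_k(probs, class_names, k=5):
    """Return the k most probable (name, probability) pairs, best first."""
    order = np.argsort(probs)[::-1][:k]
    return [(class_names[i], float(probs[i])) for i in order]


# Hypothetical six-class example; the real model outputs 1000 scores.
names = ["weasel", "polecat", "mink", "black-footed ferret", "otter", "cup"]
logits = np.array([5.0, 3.6, 3.2, 0.6, -3.0, -6.0])

probs = softmax(logits)
results = top_k(probs, names)
for name, p in results:
    print("class_name %s: step %s" % (name, p))
```

The top entry is the predicted class. The remaining entries show how the probability mass is spread, which is useful when several classes look alike, as with weasel, polecat, and mink here.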

Test 2:

The input image contains multiple scenes.

Output:

2018-03-23 11:15:22.718228: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2018-03-23 11:15:22.718297: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

0 conv1_1_W (3, 3, 3, 64)

1 conv1_1_b (64,)

2 conv1_2_W (3, 3, 64, 64)

3 conv1_2_b (64,)

4 conv2_1_W (3, 3, 64, 128)

5 conv2_1_b (128,)

6 conv2_2_W (3, 3, 128, 128)

7 conv2_2_b (128,)

8 conv3_1_W (3, 3, 128, 256)

9 conv3_1_b (256,)

10 conv3_2_W (3, 3, 256, 256)

11 conv3_2_b (256,)

12 conv3_3_W (3, 3, 256, 256)

13 conv3_3_b (256,)

14 conv4_1_W (3, 3, 256, 512)

15 conv4_1_b (512,)

16 conv4_2_W (3, 3, 512, 512)

17 conv4_2_b (512,)

18 conv4_3_W (3, 3, 512, 512)

19 conv4_3_b (512,)

20 conv5_1_W (3, 3, 512, 512)

21 conv5_1_b (512,)

22 conv5_2_W (3, 3, 512, 512)

23 conv5_2_b (512,)

24 conv5_3_W (3, 3, 512, 512)

25 conv5_3_b (512,)

26 fc6_W (25088, 4096)

27 fc6_b (4096,)

28 fc7_W (4096, 4096)

29 fc7_b (4096,)

30 fc8_W (4096, 1000)

31 fc8_b (1000,)

class_name alp: step 0.830908000469

class_name church, church building: step 0.0817768126726

class_name castle: step 0.024959910661

class_name valley, vale: step 0.0158758834004

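class_name monastery: step 0.0100631769747

The top-5 results include alp, church, castle, valley, and monastery, so every scene in the image was recognized. This is a very good result: although most of the individual probabilities are low, the full set of categories is present.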
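The weight shapes printed at the start of each run also determine the size of the model. As a worked check, the sketch below rebuilds the 32 shapes from that listing and adds up their elements. It also shows where the 25088 in fc6_W comes from, assuming the standard 224x224 input and five 2x2 max-pooling stages, neither of which appears in the log itself.

```python
from math import prod

# (input channels, output channels) of the 13 conv layers, read off the log.
conv_channels = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]
# (inputs, outputs) of the 3 fully connected layers, read off the log.
fc_sizes = [(25088, 4096), (4096, 4096), (4096, 1000)]

shapes = []
for c_in, c_out in conv_channels:
    shapes += [(3, 3, c_in, c_out), (c_out,)]  # 3x3 kernel plus bias
for n_in, n_out in fc_sizes:
    shapes += [(n_in, n_out), (n_out,)]        # weight matrix plus bias

total_params = sum(prod(shape) for shape in shapes)

# Assumption: a 224x224 input halved by five 2x2 pooling stages.
side = 224 // 2 ** 5
flattened = side * side * 512

print(len(shapes), total_params, flattened)
```

Under these assumptions the total is roughly 138 million parameters, and most of them sit in fc6, the first fully connected layer.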

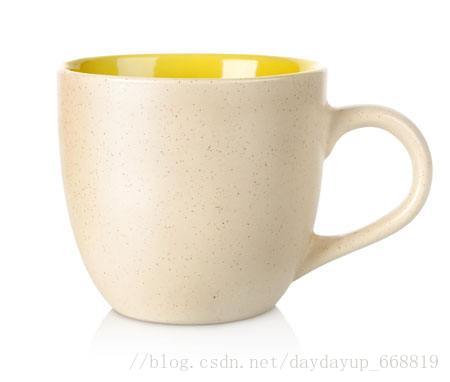

Test 3:

Input image:

Output:

2018-03-23 11:34:50.490069: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2018-03-23 11:34:50.490137: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

0 conv1_1_W (3, 3, 3, 64)

1 conv1_1_b (64,)

2 conv1_2_W (3, 3, 64, 64)

3 conv1_2_b (64,)

4 conv2_1_W (3, 3, 64, 128)

5 conv2_1_b (128,)

6 conv2_2_W (3, 3, 128, 128)

7 conv2_2_b (128,)

8 conv3_1_W (3, 3, 128, 256)

9 conv3_1_b (256,)

10 conv3_2_W (3, 3, 256, 256)

11 conv3_2_b (256,)

12 conv3_3_W (3, 3, 256, 256)

13 conv3_3_b (256,)

14 conv4_1_W (3, 3, 256, 512)

15 conv4_1_b (512,)

16 conv4_2_W (3, 3, 512, 512)

17 conv4_2_b (512,)

18 conv4_3_W (3, 3, 512, 512)

19 conv4_3_b (512,)

20 conv5_1_W (3, 3, 512, 512)

21 conv5_1_b (512,)

22 conv5_2_W (3, 3, 512, 512)

23 conv5_2_b (512,)

24 conv5_3_W (3, 3, 512, 512)

25 conv5_3_b (512,)

26 fc6_W (25088, 4096)

27 fc6_b (4096,)

28 fc7_W (4096, 4096)

29 fc7_b (4096,)

30 fc8_W (4096, 1000)

31 fc8_b (1000,)

class_name cup: step 0.543631911278

class_name coffee mug: step 0.364796578884

class_name pitcher, ewer: step 0.0259610358626

class_name eggnog: step 0.0117611540481

class_name water jug: step 0.00806392729282

The classification result is cup.

Test 4:

Input image:

Output:

2018-03-23 11:37:23.573090: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2018-03-23 11:37:23.573159: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

0 conv1_1_W (3, 3, 3, 64)

1 conv1_1_b (64,)

2 conv1_2_W (3, 3, 64, 64)

3 conv1_2_b (64,)

4 conv2_1_W (3, 3, 64, 128)

5 conv2_1_b (128,)

6 conv2_2_W (3, 3, 128, 128)

7 conv2_2_b (128,)

8 conv3_1_W (3, 3, 128, 256)

9 conv3_1_b (256,)

10 conv3_2_W (3, 3, 256, 256)

11 conv3_2_b (256,)

12 conv3_3_W (3, 3, 256, 256)

13 conv3_3_b (256,)

14 conv4_1_W (3, 3, 256, 512)

15 conv4_1_b (512,)

16 conv4_2_W (3, 3, 512, 512)

17 conv4_2_b (512,)

18 conv4_3_W (3, 3, 512, 512)

19 conv4_3_b (512,)

20 conv5_1_W (3, 3, 512, 512)

21 conv5_1_b (512,)

22 conv5_2_W (3, 3, 512, 512)

23 conv5_2_b (512,)

24 conv5_3_W (3, 3, 512, 512)

25 conv5_3_b (512,)

26 fc6_W (25088, 4096)

27 fc6_b (4096,)

28 fc7_W (4096, 4096)

29 fc7_b (4096,)

30 fc8_W (4096, 1000)

31 fc8_b (1000,)

class_name cellular telephone, cellular phone, cellphone, cell, mobile phone: step 0.465327292681

class_name iPod: step 0.10543012619

class_name radio, wireless: step 0.0810257941484

class_name hard disc, hard disk, fixed disk: step 0.0789099931717

class_name modem: step 0.0603163056076

The classification result is cellular telephone.

Test 5:

Input image:

Output:

2018-03-23 11:40:40.956946: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2018-03-23 11:40:40.957016: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

0 conv1_1_W (3, 3, 3, 64)

1 conv1_1_b (64,)

2 conv1_2_W (3, 3, 64, 64)

3 conv1_2_b (64,)

4 conv2_1_W (3, 3, 64, 128)

5 conv2_1_b (128,)

6 conv2_2_W (3, 3, 128, 128)

7 conv2_2_b (128,)

8 conv3_1_W (3, 3, 128, 256)

9 conv3_1_b (256,)

10 conv3_2_W (3, 3, 256, 256)

11 conv3_2_b (256,)

12 conv3_3_W (3, 3, 256, 256)

13 conv3_3_b (256,)

14 conv4_1_W (3, 3, 256, 512)

15 conv4_1_b (512,)

16 conv4_2_W (3, 3, 512, 512)

17 conv4_2_b (512,)

18 conv4_3_W (3, 3, 512, 512)

19 conv4_3_b (512,)

20 conv5_1_W (3, 3, 512, 512)

21 conv5_1_b (512,)

22 conv5_2_W (3, 3, 512, 512)

23 conv5_2_b (512,)

24 conv5_3_W (3, 3, 512, 512)

25 conv5_3_b (512,)

26 fc6_W (25088, 4096)

27 fc6_b (4096,)

28 fc7_W (4096, 4096)

29 fc7_b (4096,)

30 fc8_W (4096, 1000)

31 fc8_b (1000,)

class_name water bottle: step 0.75726544857

class_name pop bottle, soda bottle: step 0.0976340323687

class_name nipple: step 0.0622750669718

class_name water jug: step 0.0233819428831

class_name soap dispenser: step 0.0179366543889

The classification result is water bottle.

References