Learning the Louvain Community Detection Algorithm (my Java implementation + test data)

For everyone's convenience, I have put the data directly on GitHub:

Algorithm overview:

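Louvain is a modularity-based community detection algorithm. It performs well in both speed and quality, and it can discover hierarchical community structure. Its objective is to maximize the modularity of the partition of the whole network.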

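The modularity of a network partition measures how good the partition is. It is the fraction of edge weight that falls inside communities minus the fraction expected if the edges were placed at random. Its value lies in [-1/2, 1), and it is defined as follows:

$$Q=\frac{1}{2m}\sum_{i,j}\left[A_{ij}-\frac{k_i k_j}{2m}\right]\delta(c_i,c_j)$$

In this formula, A_ij is the weight of the edge between nodes i and j (for an unweighted graph every edge weight can be taken as 1), and k_i is the sum of the weights of the edges incident to node i (for an unweighted graph, the degree of node i).

m is the sum of all edge weights in the graph, c_i is the community that node i belongs to, and δ(c_i, c_j) is 1 if c_i = c_j and 0 otherwise.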
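To make the definition concrete, here is a small sketch that evaluates Q = 1/(2m) Σ_ij [A_ij − k_i k_j/(2m)] δ(c_i, c_j) literally over all node pairs. The class `ModularityDemo` and the dense adjacency matrix are assumptions made for illustration; they are not part of the original code. The example graph is two triangles {0,1,2} and {3,4,5} joined by the single edge 2-3 (m = 7). Splitting it into the two triangles gives Q = 5/14.

```java
// Hypothetical helper class (not from the original post): computes Q from the
// pairwise definition using a dense, symmetric adjacency matrix a[i][j] = A_ij.
final class ModularityDemo {

    // Q = 1/(2m) * sum over i,j of [A_ij - k_i * k_j / (2m)] * delta(c_i, c_j)
    static double modularity(double[][] a, int[] community) {
        int n = a.length;
        double[] k = new double[n]; // k_i = sum_j A_ij (weighted degree)
        double twoM = 0.0;          // 2m = sum of all A_ij
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                k[i] += a[i][j];
            }
            twoM += k[i];
        }
        double q = 0.0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                if (community[i] == community[j]) { // delta(c_i, c_j) = 1
                    q += a[i][j] - k[i] * k[j] / twoM;
                }
            }
        }
        return q / twoM;
    }

    // Two triangles {0,1,2} and {3,4,5} joined by the single edge 2-3 (unweighted).
    static double[][] twoTriangles() {
        int[][] edges = {{0, 1}, {0, 2}, {1, 2}, {3, 4}, {3, 5}, {4, 5}, {2, 3}};
        double[][] a = new double[6][6];
        for (int[] e : edges) {
            a[e[0]][e[1]] = 1.0;
            a[e[1]][e[0]] = 1.0;
        }
        return a;
    }

    public static void main(String[] args) {
        int[] community = {0, 0, 0, 1, 1, 1};
        System.out.println(modularity(twoTriangles(), community)); // about 0.357 (= 5/14)
    }
}
```

Because this version visits all n² pairs, it is only practical as a reference check on small graphs.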

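The modularity formula can be simplified as follows:

$$Q=\sum_{c}\left[\frac{\Sigma_{in}}{2m}-\left(\frac{\Sigma_{tot}}{2m}\right)^2\right]$$

Here Σ_in is the sum of the weights of the edges inside community c, counted once from each endpoint (that is, Σ_{i,j∈c} A_ij, so every internal edge is counted twice), and Σ_tot is the sum of the weights of the edges incident to the nodes of c (that is, Σ_{i∈c} k_i).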
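The simplified form needs only one pass over the edge list plus one pass over the communities. The sketch below shows this. `SimplifiedModularity` is a hypothetical helper, and it assumes an undirected edge list without self-loops. On the same two-triangle graph it returns the same 5/14 as the pairwise definition.

```java
// Hypothetical helper (not from the original post): Q from per-community sums.
// Assumes an undirected edge list without self-loops; w[e] is the weight of edges[e].
final class SimplifiedModularity {

    // Q = sum over c of [ Sigma_in(c)/(2m) - (Sigma_tot(c)/(2m))^2 ]
    static double modularity(int[][] edges, double[] w, int[] community, int nCommunities) {
        double[] sigmaIn = new double[nCommunities];  // internal weight, each edge counted twice
        double[] sigmaTot = new double[nCommunities]; // sum of k_i over the nodes of c
        double twoM = 0.0;
        for (int e = 0; e < edges.length; e++) {
            int cu = community[edges[e][0]];
            int cv = community[edges[e][1]];
            sigmaTot[cu] += w[e]; // the edge adds w to the degree of both endpoints
            sigmaTot[cv] += w[e];
            twoM += 2 * w[e];
            if (cu == cv) {
                sigmaIn[cu] += 2 * w[e]; // counted once from each endpoint
            }
        }
        double q = 0.0;
        for (int c = 0; c < nCommunities; c++) {
            double share = sigmaTot[c] / twoM;
            q += sigmaIn[c] / twoM - share * share;
        }
        return q;
    }

    public static void main(String[] args) {
        int[][] edges = {{0, 1}, {0, 2}, {1, 2}, {3, 4}, {3, 5}, {4, 5}, {2, 3}};
        double[] w = {1, 1, 1, 1, 1, 1, 1};
        System.out.println(modularity(edges, w, new int[]{0, 0, 0, 1, 1, 1}, 2)); // about 0.357 (= 5/14)
    }
}
```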

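Our goal is to find the community of each node so that the modularity of the resulting partition is as large as possible.

The idea of the Louvain algorithm is simple: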

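1) Treat every node in the graph as its own community. At this point the number of communities equals the number of nodes;

2) For each node i, try assigning i to the community of each of its neighbors in turn. For each candidate, compute the modularity change ΔQ between before and after the move, and record the neighbor with the largest ΔQ. If max ΔQ > 0, move i into that neighbor's community; otherwise leave i where it is;

3) Repeat 2) until no node changes community;

4) Compress the graph: collapse every community into a single new node. The weights of edges between nodes of the same community become the weight of a self-loop on the new node, and the weights of edges between communities become the weights of edges between the new nodes;

5) Repeat from 1) on the compressed graph until the modularity of the whole graph no longer changes.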
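Step 4 amounts to summing the adjacency matrix by blocks. In the minimal sketch below, the dense matrix and the class name `Aggregation` are assumptions for illustration. The diagonal entry b[c][c] plays the role of the new node's self-loop. Because it collects every intra-community edge from both directions, each super-node's degree equals Σ_tot of its community and the total 2m does not change.

```java
// Hypothetical helper (not from the original post) for step 4 on a dense,
// symmetric weight matrix a; community[i] must lie in [0, nc).
final class Aggregation {

    // b[c][d] = sum of a[i][j] over i in c and j in d.
    // The diagonal b[c][c] is the self-loop of super-node c. It collects every
    // intra-community edge twice (once as a[i][j], once as a[j][i]), which keeps
    // each super-node's degree equal to Sigma_tot(c) and leaves 2m unchanged.
    static double[][] aggregate(double[][] a, int[] community, int nc) {
        double[][] b = new double[nc][nc];
        for (int i = 0; i < a.length; i++) {
            for (int j = 0; j < a.length; j++) {
                b[community[i]][community[j]] += a[i][j];
            }
        }
        return b;
    }

    public static void main(String[] args) {
        int[][] edges = {{0, 1}, {0, 2}, {1, 2}, {3, 4}, {3, 5}, {4, 5}, {2, 3}};
        double[][] a = new double[6][6];
        for (int[] e : edges) {
            a[e[0]][e[1]] = 1.0;
            a[e[1]][e[0]] = 1.0;
        }
        double[][] b = aggregate(a, new int[]{0, 0, 0, 1, 1, 1}, 2);
        System.out.println(java.util.Arrays.deepToString(b)); // [[6.0, 1.0], [1.0, 6.0]]
    }
}
```

The `rebuildGraph` method in the Java code later in this post does not store the diagonal. Instead it keeps each super-node's total degree in `node_weight`, which is all that the gain computation needs.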

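When writing the code, keep a few points in mind:

* In step 2, you must try to move every node, not only isolated (singleton) nodes; nodes whose current community already contains more than one node must be tried as well. I misread this part of the paper at first, and my implementation broke because of it.

* In step 3 (repeating step 2), do not rebuild the graph after a single pass over the nodes. Keep looping over all nodes until a complete pass changes no node's community, which means the current network is stable; only then rebuild the graph.

* For how to compute the modularity gain, read on below.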
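Steps 2) and 3), together with the first two notes above, can be sketched as one loop. This is a hypothetical, dense-matrix helper (`LocalMoving` is not from the original post) that does O(n) work per node visit, so it suits only small examples; the author's `try_move_i` walks the adjacency list instead. Each node is first removed from its own community, so that community is always a candidate and non-singleton nodes are handled too. Candidates are scored with the relative gain k_{i,in} − k_i·Σ_tot/(2m) that is derived further on in this post. Sweeps continue until a full sweep moves no node.

```java
import java.util.Arrays;

// Hypothetical helper (not from the original post): local-moving phase on a
// dense symmetric weight matrix, O(n) work per node visit. Illustration only.
final class LocalMoving {

    // Returns a community label for every node after sweeping in the given order
    // until one full sweep moves no node.
    static int[] localMoving(double[][] a, int[] order) {
        int n = a.length;
        double[] k = new double[n];   // weighted degree k_i
        double twoM = 0.0;            // 2m
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                k[i] += a[i][j];
            }
            twoM += k[i];
        }
        int[] comm = new int[n];      // step 1: every node is its own community
        double[] tot = new double[n]; // Sigma_tot of each community
        for (int i = 0; i < n; i++) {
            comm[i] = i;
            tot[i] = k[i];
        }
        double[] kIn = new double[n]; // k_{i,in} for each community (scratch)
        boolean moved = true;
        while (moved) {               // step 3: repeat until stable
            moved = false;
            for (int i : order) {     // step 2: try every node, singleton or not
                int old = comm[i];
                tot[old] -= k[i];     // take i out of its community first
                Arrays.fill(kIn, 0.0);
                for (int j = 0; j < n; j++) {
                    if (j != i && a[i][j] != 0) {
                        kIn[comm[j]] += a[i][j];
                    }
                }
                // Rejoining the old community is the baseline; only a strictly larger gain moves i.
                int best = old;
                double bestGain = kIn[old] - k[i] * tot[old] / twoM;
                for (int c = 0; c < n; c++) {
                    if (kIn[c] == 0) {
                        continue;     // not a neighbouring community
                    }
                    double gain = kIn[c] - k[i] * tot[c] / twoM;
                    if (gain > bestGain) {
                        best = c;
                        bestGain = gain;
                    }
                }
                tot[best] += k[i];
                comm[i] = best;
                if (best != old) {
                    moved = true;
                }
            }
        }
        return comm;
    }

    // Two triangles {0,1,2} and {3,4,5} joined by the single edge 2-3 (unweighted).
    static double[][] twoTriangles() {
        int[][] edges = {{0, 1}, {0, 2}, {1, 2}, {3, 4}, {3, 5}, {4, 5}, {2, 3}};
        double[][] a = new double[6][6];
        for (int[] e : edges) {
            a[e[0]][e[1]] = 1.0;
            a[e[1]][e[0]] = 1.0;
        }
        return a;
    }

    public static void main(String[] args) {
        int[] comm = localMoving(twoTriangles(), new int[]{0, 1, 2, 3, 4, 5});
        System.out.println(Arrays.toString(comm)); // [1, 1, 1, 5, 5, 5]
    }
}
```

The labels themselves are arbitrary. What matters is that each triangle ends up with a single label. Every move strictly increases Q, so the loop terminates, at least in exact arithmetic.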

The process is illustrated in the figure below:

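As you can see, Louvain is a heuristic greedy algorithm that improves the modularity through heuristic local updates. This raises a few issues:

1: When trying to assign node i to a neighboring community, actually moving i and recomputing the modularity from scratch would make the algorithm hard to run efficiently.

2: For this problem, a greedy algorithm can only guarantee a local optimum, not a global optimum.

3: In what order should we try assigning node i to its neighboring communities?

......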

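The paper that introduced the algorithm answers the first question. We do not need to actually add node i to a neighboring community and recompute the modularity. Instead, the paper gives a formula for the modularity gain when node i is moved into community c:

$$\Delta Q=\left[\frac{\Sigma_{in}+2k_{i,in}}{2m}-\left(\frac{\Sigma_{tot}+k_i}{2m}\right)^2\right]-\left[\frac{\Sigma_{in}}{2m}-\left(\frac{\Sigma_{tot}}{2m}\right)^2-\left(\frac{k_i}{2m}\right)^2\right]$$

Here Σ_in is the sum of the weights of the edges whose two endpoints are both in c (counted once from each endpoint), Σ_tot is the sum of the weights of the edges incident to nodes in c, k_i is the weighted degree of node i, k_{i,in} is the sum of the weights of the edges from i to nodes in c, and m is the total edge weight.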
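A quick numerical check helps confirm the gain formula. The hypothetical helper below evaluates the bracketed form for an isolated node i (no self-loop). It compares the result with the expanded form k_{i,in}/m − Σ_tot·k_i/(2m²). In the two-triangle graph (triangles {0,1,2} and {3,4,5} joined by edge 2-3, m = 7), moving node 2 into {0,1} has Σ_in = 2, Σ_tot = 4, k_i = 3 and k_{i,in} = 2. Both forms give 8/49. Recomputing Q directly before the move (38/196) and after it (70/196) gives the same difference.

```java
// Hypothetical helper (not from the original post): gain of moving an isolated node i
// (no self-loop) into community C, with Sigma_in counting each internal edge once
// from each endpoint.
final class GainCheck {

    // Bracketed form: [after the move] - [before the move]
    static double deltaQ(double sigmaIn, double sigmaTot, double ki, double kiIn, double m) {
        double twoM = 2 * m;
        double after = (sigmaIn + 2 * kiIn) / twoM - Math.pow((sigmaTot + ki) / twoM, 2);
        double before = sigmaIn / twoM - Math.pow(sigmaTot / twoM, 2) - Math.pow(ki / twoM, 2);
        return after - before;
    }

    // The same quantity after expanding the squares: k_{i,in}/m - Sigma_tot * k_i / (2 m^2)
    static double deltaQExpanded(double sigmaTot, double ki, double kiIn, double m) {
        return kiIn / m - sigmaTot * ki / (2 * m * m);
    }

    public static void main(String[] args) {
        // node 2 -> {0,1}: Sigma_in = 2, Sigma_tot = 4, k_i = 3, k_{i,in} = 2, m = 7
        System.out.println(deltaQ(2, 4, 3, 2, 7));      // about 0.1633 (= 8/49)
        System.out.println(deltaQExpanded(4, 3, 2, 7)); // about 0.1633 (= 8/49)
    }
}
```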

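However, evaluating this full formula for every candidate community is still more work than necessary and would still slow the algorithm down.

Note that we only use the modularity gain to decide whether node i should move to community c. We do not need the exact modularity value; we only need to know whether the current operation increases the modularity.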

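This leads to the relative gain formula:

$$\Delta Q' = k_{i,in}-\frac{\Sigma_{tot}\,k_i}{2m} = m\,\Delta Q$$

(Expanding the squares in the gain formula gives ΔQ = k_{i,in}/m − Σ_tot·k_i/(2m²); multiplying by the positive constant m gives ΔQ'.)

The relative gain can be larger than 1 and is not the actual increase in modularity. Its sign, however, tells whether the operation increases the modularity. Because every candidate is scaled by the same positive constant m, the community with the largest ΔQ' is also the one with the largest ΔQ. Each candidate costs only a multiplication and a subtraction, which greatly reduces the work per move.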
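The claim that ΔQ' ranks candidates exactly like ΔQ can be checked directly. The sketch below is a hypothetical helper that is not part of either implementation in this post. It picks the best candidate community under each score. For node 2 of the two-triangle graph (k_2 = 3, 2m = 14), the candidates are {0,1} (k_in = 2, Σ_tot = 4) and {3,4,5} (k_in = 1, Σ_tot = 7). Both scores choose {0,1}, and ΔQ' = 8/7 = 7·ΔQ.

```java
// Hypothetical helper (not from the original post): compares the exact gain dQ
// with the relative gain dQ' = m * dQ when picking the best candidate community.
final class RelativeGain {

    // dQ' = k_{i,in} - Sigma_tot * k_i / (2m)
    static double relativeGain(double kiIn, double sigmaTot, double ki, double twoM) {
        return kiIn - sigmaTot * ki / twoM;
    }

    // dQ = k_{i,in} / m - Sigma_tot * k_i / (2 m^2)
    static double exactGain(double kiIn, double sigmaTot, double ki, double twoM) {
        double m = twoM / 2;
        return kiIn / m - sigmaTot * ki / (2 * m * m);
    }

    // Index of the candidate with the largest positive gain, or -1 if none is positive.
    static int best(double[] kiIn, double[] sigmaTot, double ki, double twoM, boolean useRelative) {
        int best = -1;
        double bestGain = 0.0;
        for (int c = 0; c < kiIn.length; c++) {
            double g = useRelative ? relativeGain(kiIn[c], sigmaTot[c], ki, twoM)
                                   : exactGain(kiIn[c], sigmaTot[c], ki, twoM);
            if (g > bestGain) {
                best = c;
                bestGain = g;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[] kiIn = {2, 1};     // candidates: {0,1} and {3,4,5}
        double[] sigmaTot = {4, 7};
        System.out.println(best(kiIn, sigmaTot, 3, 14, true));  // 0
        System.out.println(best(kiIn, sigmaTot, 3, 14, false)); // 0
    }
}
```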

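On the second question: the algorithm indeed cannot guarantee a global optimum. Its heuristic rule is reasonable, though, so in practice it usually produces a good approximation.

To calibrate the result, we can also run the algorithm several times with different node orders and keep the result with the highest modularity.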
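Keeping the best of several randomized runs is a small wrapper around any randomized partitioner. The reference code later in this post does the same with its `nRandomStarts` loop. The sketch below is a hypothetical helper that passes one seeded `Random` to every run and keeps the highest-scoring partition. In real use, the partitioner would be one Louvain run and the score would be its modularity. The `main` method below uses a toy stand-in for both.

```java
import java.util.Arrays;
import java.util.Random;
import java.util.function.Function;
import java.util.function.ToDoubleFunction;

// Hypothetical helper (not from the original post): keep the best of several randomized runs.
final class BestOfRuns {

    // Calls partitioner `runs` times with one shared, seeded Random and returns the
    // partition whose score is highest (the first one wins ties).
    static int[] bestOf(int runs, long seed, Function<Random, int[]> partitioner,
                        ToDoubleFunction<int[]> score) {
        Random random = new Random(seed);
        int[] best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int r = 0; r < runs; r++) {
            int[] candidate = partitioner.apply(random);
            double s = score.applyAsDouble(candidate);
            if (s > bestScore) {
                best = candidate;
                bestScore = s;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Toy stand-in: random labels for 4 nodes, scored by how many nodes share node 0's label.
        int[] best = bestOf(10, 100L,
                rnd -> new int[]{rnd.nextInt(2), rnd.nextInt(2), rnd.nextInt(2), rnd.nextInt(2)},
                p -> Arrays.stream(p).filter(x -> x == p[0]).count());
        System.out.println(Arrays.toString(best));
    }
}
```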

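The third question already came up under the second: in what order should nodes be processed when looking for a community for node i? Increasing order, random order, or some other rule? I think this has to be settled experimentally.

The paper also mentions some orderings that can make the result more accurate. My view is that if you run the algorithm several times and keep the best result, a random order is a fairly stable and accurate choice.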
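For a random order to be truly random, every permutation should be equally likely. A common shortcut is to swap each position with an index drawn from the whole range [0, n), but that shortcut does not produce a uniform permutation. The Fisher-Yates shuffle below does. `NodeOrder.randomOrder` is a hypothetical name; for `List`s, `java.util.Collections.shuffle(list, random)` provides the same kind of shuffle.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical helper (not from the original post): uniformly random node order.
final class NodeOrder {

    // Fisher-Yates: position i is swapped with a random index in [0, i].
    static int[] randomOrder(int n, Random random) {
        int[] order = new int[n];
        for (int i = 0; i < n; i++) {
            order[i] = i;
        }
        for (int i = n - 1; i > 0; i--) {
            int j = random.nextInt(i + 1); // 0 <= j <= i
            int tmp = order[i];
            order[i] = order[j];
            order[j] = tmp;
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(randomOrder(8, new Random(42))));
    }
}
```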

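Enough talk. Below is my Java implementation of Louvain for reference. The code is heavily commented and suitable for learning.

After my code comes the implementation by Ludo Waltman and Nees Jan van Eck (their Modularity Optimizer). It has the same time and space complexity as mine but a much smaller constant factor, so it runs much faster. Its results are also more accurate because it keeps the best of 10 random starts, which makes it suitable for practical use.

My Java code (time complexity O(e) per sweep over all nodes, space complexity O(e)):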

Edge.java:

```java
package myLouvain;

public class Edge implements Cloneable {

    int v;          // index of the node at the other end of this edge
    double weight;  // weight of this edge
    int next;       // index of the next edge of the same source node (-1 ends the list)

    Edge() {}

    @Override
    public Object clone() {
        Edge temp = null;
        try {
            temp = (Edge) super.clone(); // shallow copy
        } catch (CloneNotSupportedException e) {
            e.printStackTrace();
        }
        return temp;
    }
}
```
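The `Edge` objects together with `head` and `next` form a linked "forward star" adjacency list. `head[u]` holds the index of the most recently added edge of u, each edge's `next` points to the edge added before it, and -1 ends the list. The sketch below is a hypothetical variant that stores the same three fields in parallel arrays instead of `Edge` objects. It shows how `addEdge` and the `for (j = head[i]; j != -1; j = edge[j].next)` loops in Louvain.java walk the list.

```java
import java.util.Arrays;

// Hypothetical variant (not from the original post): forward-star adjacency list
// with parallel arrays instead of Edge objects.
final class ForwardStar {
    final int[] head;      // head[u]: most recently added edge of u, -1 if none
    final int[] to;        // to[e]: target node of edge e
    final int[] next;      // next[e]: previous edge of the same source node, -1 ends the list
    final double[] weight; // weight[e]: weight of edge e
    int top = 0;           // number of edge slots in use

    ForwardStar(int nNodes, int maxEdges) {
        head = new int[nNodes];
        Arrays.fill(head, -1);
        to = new int[maxEdges];
        next = new int[maxEdges];
        weight = new double[maxEdges];
    }

    // Same idea as addEdge in Louvain.java: prepend edge u -> v to u's list.
    void addEdge(int u, int v, double w) {
        to[top] = v;
        weight[top] = w;
        next[top] = head[u];
        head[u] = top++;
    }

    // Weighted degree of u, walking head[u] -> next -> ... -> -1.
    double degree(int u) {
        double sum = 0.0;
        for (int e = head[u]; e != -1; e = next[e]) {
            sum += weight[e];
        }
        return sum;
    }

    public static void main(String[] args) {
        ForwardStar g = new ForwardStar(3, 4);
        g.addEdge(0, 1, 1.0);
        g.addEdge(1, 0, 1.0);
        g.addEdge(0, 2, 2.5);
        g.addEdge(2, 0, 2.5);
        for (int e = g.head[0]; e != -1; e = g.next[e]) {
            System.out.println("0 -> " + g.to[e] + " (w = " + g.weight[e] + ")");
        }
        System.out.println(g.degree(0)); // 3.5
    }
}
```

The edges of a node come back in reverse insertion order, which is harmless for Louvain because every neighbor is visited anyway.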

Louvain.java:

```java
package myLouvain;

import java.io.BufferedReader;
import java.io.File;
import java.io.FileInputStream;
import java.io.InputStreamReader;
import java.util.ArrayList;
import java.util.Random;

public class Louvain implements Cloneable {

    int n;                         // number of nodes in the current graph
    int m;                         // number of edges; doubled in init() because each edge is stored in both directions
    int cluster[];                 // cluster[i]: cluster that node i belongs to
    Edge edge[];                   // adjacency list (edge pool)
    int head[];                    // head[u]: index of u's first edge, -1 if none
    int top;                       // number of edge slots in use
    double resolution;             // 1/(2m), constant for the whole run
    double node_weight[];          // weighted degree of each node
    double totalEdgeWeight;        // total edge weight (2m)
    double[] cluster_weight;       // Sigma_tot of each cluster
    double eps = 1e-14;            // tolerance
    int global_n;                  // original number of nodes
    int global_cluster[];          // final result: cluster of each original node
    Edge[] new_edge;               // adjacency list of the rebuilt graph
    int[] new_head;
    int new_top = 0;
    final int iteration_time = 3;  // maximum number of levels
    Edge global_edge[];            // original adjacency list: stored once, never modified, not used in the computation
    int global_head[];
    int global_top = 0;
    double[] edgeWeightPerCluster; // scratch for try_move_i: weight from node i to each cluster
    boolean[] visCluster;          // scratch for try_move_i: cluster already evaluated

    void addEdge(int u, int v, double weight) {
        if (edge[top] == null)
            edge[top] = new Edge();
        edge[top].v = v;
        edge[top].weight = weight;
        edge[top].next = head[u];
        head[u] = top++;
    }

    void addNewEdge(int u, int v, double weight) {
        if (new_edge[new_top] == null)
            new_edge[new_top] = new Edge();
        new_edge[new_top].v = v;
        new_edge[new_top].weight = weight;
        new_edge[new_top].next = new_head[u];
        new_head[u] = new_top++;
    }

    void addGlobalEdge(int u, int v, double weight) {
        if (global_edge[global_top] == null)
            global_edge[global_top] = new Edge();
        global_edge[global_top].v = v;
        global_edge[global_top].weight = weight;
        global_edge[global_top].next = global_head[u];
        global_head[u] = global_top++;
    }

    // Input format: first line "n m", then one "u v [weight]" line per undirected edge.
    void init(String filePath) {
        try {
            String encoding = "UTF-8";
            File file = new File(filePath);
            if (file.isFile() && file.exists()) { // check that the file exists
                InputStreamReader read = new InputStreamReader(new FileInputStream(file), encoding); // respect the encoding
                BufferedReader bufferedReader = new BufferedReader(read);
                String lineTxt = bufferedReader.readLine();
                ////// preprocessing
                String cur2[] = lineTxt.trim().split("\\s+");
                global_n = n = Integer.parseInt(cur2[0]);
                m = Integer.parseInt(cur2[1]);
                m *= 2;
                edge = new Edge[m];
                head = new int[n];
                for (int i = 0; i < n; i++)
                    head[i] = -1;
                top = 0;
                global_edge = new Edge[m];
                global_head = new int[n];
                for (int i = 0; i < n; i++)
                    global_head[i] = -1;
                global_top = 0;
                global_cluster = new int[n];
                for (int i = 0; i < global_n; i++)
                    global_cluster[i] = i;
                node_weight = new double[n];
                totalEdgeWeight = 0.0;
                while ((lineTxt = bufferedReader.readLine()) != null) {
                    if (lineTxt.trim().isEmpty())
                        continue; // skip blank lines
                    String cur[] = lineTxt.trim().split("\\s+");
                    int u = Integer.parseInt(cur[0]);
                    int v = Integer.parseInt(cur[1]);
                    double curw = (cur.length > 2) ? Double.parseDouble(cur[2]) : 1.0;
                    addEdge(u, v, curw);
                    addEdge(v, u, curw);
                    addGlobalEdge(u, v, curw);
                    addGlobalEdge(v, u, curw);
                    totalEdgeWeight += 2 * curw;
                    node_weight[u] += curw;
                    if (u != v) {
                        node_weight[v] += curw;
                    }
                }
                resolution = 1 / totalEdgeWeight;
                bufferedReader.close();
            } else {
                System.out.println("File not found");
            }
        } catch (Exception e) {
            System.out.println("Error while reading the file");
            e.printStackTrace();
        }
    }

    void init_cluster() {
        cluster = new int[n];
        for (int i = 0; i < n; i++) { // one node per cluster
            cluster[i] = i;
        }
    }

    boolean try_move_i(int i) { // try to move node i into a better cluster
        for (int j = head[i]; j != -1; j = edge[j].next) {
            int l = cluster[edge[j].v]; // l: cluster of the neighbor
            edgeWeightPerCluster[l] += edge[j].weight; // k_{i,in} for cluster l
        }
        int bestCluster = -1;     // best cluster found so far
        double maxx_deltaQ = 0.0; // largest relative gain found so far
        cluster_weight[cluster[i]] -= node_weight[i]; // take i out of its own cluster
        for (int j = head[i]; j != -1; j = edge[j].next) {
            int l = cluster[edge[j].v]; // l: cluster of the neighbor
            if (visCluster[l]) // evaluate each neighboring cluster only once
                continue;
            visCluster[l] = true;
            // relative gain: k_{i,in} - k_i * Sigma_tot / (2m)
            double cur_deltaQ = edgeWeightPerCluster[l];
            cur_deltaQ -= node_weight[i] * cluster_weight[l] * resolution;
            if (cur_deltaQ > maxx_deltaQ) {
                bestCluster = l;
                maxx_deltaQ = cur_deltaQ;
            }
            edgeWeightPerCluster[l] = 0; // reset the scratch entry for the next call
        }
        for (int j = head[i]; j != -1; j = edge[j].next) {
            visCluster[cluster[edge[j].v]] = false; // reset the scratch flags for the next call
        }
        if (maxx_deltaQ < eps) { // no positive gain: stay in the current cluster
            bestCluster = cluster[i];
        }
        cluster_weight[bestCluster] += node_weight[i];
        if (bestCluster != cluster[i]) { // i moved
            cluster[i] = bestCluster;
            return true;
        }
        return false;
    }

    void rebuildGraph() { // rebuild the graph
        /// first relabel the clusters as 0..new_n-1
        int[] change = new int[n];
        int change_size = 0;
        boolean vis[] = new boolean[n];
        for (int i = 0; i < n; i++) {
            if (vis[cluster[i]])
                continue;
            vis[cluster[i]] = true;
            change[change_size++] = cluster[i];
        }
        int[] index = new int[n]; // index[c]: node id of cluster c in the new graph
        for (int i = 0; i < change_size; i++)
            index[change[i]] = i;
        int new_n = change_size; // size of the new graph
        new_edge = new Edge[m];
        new_head = new int[new_n];
        new_top = 0;
        double new_node_weight[] = new double[new_n]; // new node weights
        for (int i = 0; i < new_n; i++)
            new_head[i] = -1;
        @SuppressWarnings("unchecked")
        ArrayList<Integer>[] nodeInCluster = new ArrayList[new_n]; // nodes of each cluster
        for (int i = 0; i < new_n; i++)
            nodeInCluster[i] = new ArrayList<Integer>();
        for (int i = 0; i < n; i++) {
            nodeInCluster[index[cluster[i]]].add(i);
        }
        boolean visindex[] = new boolean[new_n]; // visindex[v]: edge u->v already added for the current u
        double delta_w[] = new double[new_n];    // accumulated weight of edge u->v
        for (int u = 0; u < new_n; u++) { // process all nodes of one cluster together
            for (int i = 0; i < nodeInCluster[u].size(); i++) {
                int t = nodeInCluster[u].get(i);
                for (int k = head[t]; k != -1; k = edge[k].next) {
                    int j = edge[k].v;
                    int v = index[cluster[j]];
                    if (u != v) { // intra-cluster edges are dropped; their weight stays in node_weight
                        if (!visindex[v]) {
                            addNewEdge(u, v, 0);
                            visindex[v] = true;
                        }
                        delta_w[v] += edge[k].weight;
                    }
                }
                new_node_weight[u] += node_weight[t];
            }
            for (int k = new_head[u]; k != -1; k = new_edge[k].next) {
                int v = new_edge[k].v;
                new_edge[k].weight = delta_w[v];
                delta_w[v] = 0;      // reset for the next u
                visindex[v] = false;
            }
        }
        // update the answer: map every original node to its new super-node
        for (int i = 0; i < global_n; i++) {
            global_cluster[i] = index[cluster[global_cluster[i]]];
        }
        // replace the current graph with the rebuilt one
        top = new_top;
        for (int i = 0; i < m; i++) {
            edge[i] = new_edge[i];
        }
        for (int i = 0; i < new_n; i++) {
            node_weight[i] = new_node_weight[i];
            head[i] = new_head[i];
        }
        n = new_n;
        init_cluster();
    }

    void print() {
        for (int i = 0; i < global_n; i++) {
            System.out.println(i + ": " + global_cluster[i]);
        }
        System.out.println("-------");
    }

    void louvain() {
        init_cluster();
        int count = 0;       // number of levels processed
        boolean update_flag; // whether any node moved in this level
        Random random = new Random();
        do { // outer loop: one graph rebuild per iteration
            // print(); // print the cluster list
            count++;
            cluster_weight = new double[n];
            for (int j = 0; j < n; j++) { // Sigma_tot of each cluster
                cluster_weight[cluster[j]] += node_weight[j];
            }
            edgeWeightPerCluster = new double[n]; // scratch arrays for try_move_i
            visCluster = new boolean[n];
            int[] order = new int[n]; // random visiting order (Fisher-Yates shuffle)
            for (int i = 0; i < n; i++)
                order[i] = i;
            for (int i = n - 1; i > 0; i--) {
                int j = random.nextInt(i + 1);
                int temp = order[i];
                order[i] = order[j];
                order[j] = temp;
            }
            int enum_time = 0; // consecutive nodes tried without a move; reaching n means stable
            int point = 0;     // position in order
            update_flag = false;
            do {
                int i = order[point];
                point = (point + 1) % n;
                if (try_move_i(i)) { // try node i
                    enum_time = 0;
                    update_flag = true;
                } else {
                    enum_time++;
                }
            } while (enum_time < n);
            if (!update_flag) // nothing moved: converged
                break;
            rebuildGraph(); // also records this level's moves in global_cluster
            if (count > iteration_time) // level limit reached
                break;
        } while (true);
    }
}
```

Main.java:

```java
package myLouvain;

import java.io.BufferedWriter;
import java.io.FileWriter;
import java.io.IOException;
import java.util.ArrayList;

public class Main {

    // Writes nodes and links as JSON for the D3 visualization.
    public static void writeOutputJson(String fileName, Louvain a) throws IOException {
        BufferedWriter bufferedWriter = new BufferedWriter(new FileWriter(fileName));
        bufferedWriter.write("{\n\"nodes\": [\n");
        for (int i = 0; i < a.global_n; i++) {
            bufferedWriter.write("{\"id\": \"" + i + "\", \"group\": " + a.global_cluster[i] + "}");
            if (i + 1 != a.global_n)
                bufferedWriter.write(",");
            bufferedWriter.write("\n");
        }
        bufferedWriter.write("],\n\"links\": [\n");
        boolean first = true; // comma before every link except the first, so the JSON stays valid
        for (int i = 0; i < a.global_n; i++) {
            for (int j = a.global_head[i]; j != -1; j = a.global_edge[j].next) {
                int k = a.global_edge[j].v;
                if (!first)
                    bufferedWriter.write(",\n");
                first = false;
                bufferedWriter.write("{\"source\": \"" + i + "\", \"target\": \"" + k + "\", \"value\": 1}");
            }
        }
        bufferedWriter.write("\n]\n}\n");
        bufferedWriter.close();
    }

    // One line per node: the cluster it belongs to.
    static void writeOutputCluster(String fileName, Louvain a) throws IOException {
        BufferedWriter bufferedWriter = new BufferedWriter(new FileWriter(fileName));
        for (int i = 0; i < a.global_n; i++) {
            bufferedWriter.write(Integer.toString(a.global_cluster[i]));
            bufferedWriter.write("\n");
        }
        bufferedWriter.close();
    }

    // One line per cluster: the nodes it contains.
    static void writeOutputCircle(String fileName, Louvain a) throws IOException {
        BufferedWriter bufferedWriter = new BufferedWriter(new FileWriter(fileName));
        @SuppressWarnings("unchecked")
        ArrayList<Integer>[] list = new ArrayList[a.global_n];
        for (int i = 0; i < a.global_n; i++) {
            list[i] = new ArrayList<Integer>();
        }
        for (int i = 0; i < a.global_n; i++) {
            list[a.global_cluster[i]].add(i);
        }
        for (int i = 0; i < a.global_n; i++) {
            if (list[i].size() == 0)
                continue;
            for (int j = 0; j < list[i].size(); j++)
                bufferedWriter.write(list[i].get(j) + " ");
            bufferedWriter.write("\n");
        }
        bufferedWriter.close();
    }

    public static void main(String[] args) throws IOException {
        Louvain a = new Louvain();
        double beginTime = System.currentTimeMillis();
        a.init("/home/eason/Louvain/testdata.txt");
        a.louvain();
        double endTime = System.currentTimeMillis();
        writeOutputJson("/var/www/html/Louvain/miserables.json", a); // output for the D3 visualization
        writeOutputCluster("/home/eason/Louvain/cluster.txt", a);    // cluster of each node
        writeOutputCircle("/home/eason/Louvain/circle.txt", a);      // nodes of each cluster
        System.out.format("program running time: %f seconds%n", (endTime - beginTime) / 1000.0);
    }
}
```

The partition that Louvain produces for the 16-node graph shown earlier, visualized with D3:

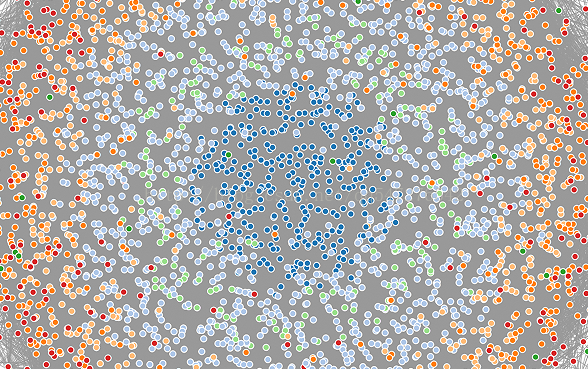

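Running my Louvain implementation on an undirected graph with about 4,000 nodes and 80,000 edges (Facebook data) takes a little over 1 second, and the partition is reasonable. Visualization (there are so many edges that they all clump together):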

//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

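Below is the implementation by Ludo Waltman and Nees Jan van Eck, pasted here for convenience (time complexity O(e) per local-moving pass, space complexity O(e)):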

ModularityOptimizer.java:

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.FileReader;
import java.io.FileWriter;
import java.io.IOException;
import java.util.Arrays;
import java.util.Random;

public class ModularityOptimizer {

    public static void main(String[] args) throws IOException {
        boolean update;
        double modularity, maxModularity, resolution, resolution2;
        int algorithm, i, j, modularityFunction, nClusters, nIterations, nRandomStarts;
        int[] cluster;
        double beginTime, endTime;
        Network network;
        Random random;
        String inputFileName, outputFileName;

        inputFileName = "/home/eason/Louvain/facebook_combined.txt";
        outputFileName = "/home/eason/Louvain/answer.txt";
        modularityFunction = 1; // 1 = standard modularity
        resolution = 1.0;
        algorithm = 1;          // 1 = Louvain (the only algorithm branch kept below)
        nRandomStarts = 10;     // number of random starts; the best result is kept
        nIterations = 3;        // maximum Louvain iterations per random start

        System.out.println("Modularity Optimizer version 1.2.0 by Ludo Waltman and Nees Jan van Eck");
        System.out.println();
        System.out.println("Reading input file...");
        System.out.println();
        network = readInputFile(inputFileName, modularityFunction);
        System.out.format("Number of nodes: %d%n", network.getNNodes());
        System.out.format("Number of edges: %d%n", network.getNEdges() / 2);
        System.out.println();
        System.out.println("Running " + ((algorithm == 1) ? "Louvain algorithm" : ((algorithm == 2) ? "Louvain algorithm with multilevel refinement" : "smart local moving algorithm")) + "...");
        System.out.println();
        resolution2 = ((modularityFunction == 1) ? (resolution / network.getTotalEdgeWeight()) : resolution);
        beginTime = System.currentTimeMillis();
        cluster = null;
        nClusters = -1;
        maxModularity = Double.NEGATIVE_INFINITY;
        random = new Random(100);
        for (i = 0; i < nRandomStarts; i++) {
            if (nRandomStarts > 1)
                System.out.format("Random start: %d%n", i + 1);
            network.initSingletonClusters(); // reset: every node in its own cluster
            j = 0;
            update = true;
            do {
                if (nIterations > 1)
                    System.out.format("Iteration: %d%n", j + 1);
                if (algorithm == 1)
                    update = network.runLouvainAlgorithm(resolution2, random);
                j++;
                modularity = network.calcQualityFunction(resolution2);
                if (nIterations > 1)
                    System.out.format("Modularity: %.4f%n", modularity);
            } while ((j < nIterations) && update);
            if (modularity > maxModularity) { // keep the best random start
                cluster = network.getClusters();
                nClusters = network.getNClusters();
                maxModularity = modularity;
            }
            if (nRandomStarts > 1) {
                if (nIterations == 1)
                    System.out.format("Modularity: %.8f%n", modularity);
                System.out.println();
            }
        }
        endTime = System.currentTimeMillis();
        if (nRandomStarts == 1) {
            if (nIterations > 1)
                System.out.println();
            System.out.format("Modularity: %.8f%n", maxModularity);
        } else
            System.out.format("Maximum modularity in %d random starts: %f%n", nRandomStarts, maxModularity);
        System.out.format("Number of communities: %d%n", nClusters);
        System.out.format("Elapsed time: %.4f seconds%n", (endTime - beginTime) / 1000.0);
        System.out.println();
        System.out.println("Writing output file...");
        System.out.println();
        writeOutputFile(outputFileName, cluster);
    }

    private static Network readInputFile(String fileName, int modularityFunction) throws IOException {
        BufferedReader bufferedReader;
        double[] edgeWeight1, edgeWeight2, nodeWeight;
        int i, j, nEdges, nLines, nNodes;
        int[] firstNeighborIndex, neighbor, nNeighbors, node1, node2;
        Network network;
        String[] splittedLine;

        // first pass: count the lines
        bufferedReader = new BufferedReader(new FileReader(fileName));
        nLines = 0;
        while (bufferedReader.readLine() != null)
            nLines++;
        bufferedReader.close();

        // second pass: read "node1 node2 [weight]" lines
        bufferedReader = new BufferedReader(new FileReader(fileName));
        node1 = new int[nLines];
        node2 = new int[nLines];
        edgeWeight1 = new double[nLines];
        i = -1; // largest node id seen so far
        bufferedReader.readLine(); // the first line is skipped (treated as a header); slot 0 stays "0 0" and is ignored below
        for (j = 1; j < nLines; j++) {
            splittedLine = bufferedReader.readLine().split(" ");
            node1[j] = Integer.parseInt(splittedLine[0]);
            if (node1[j] > i)
                i = node1[j];
            node2[j] = Integer.parseInt(splittedLine[1]);
            if (node2[j] > i)
                i = node2[j];
            edgeWeight1[j] = (splittedLine.length > 2) ? Double.parseDouble(splittedLine[2]) : 1;
        }
        nNodes = i + 1;
        bufferedReader.close();

        // Lines with node1 >= node2 are ignored, so every edge must appear at least once
        // with the smaller id first; each kept edge is stored in both directions.
        nNeighbors = new int[nNodes];
        for (i = 0; i < nLines; i++)
            if (node1[i] < node2[i]) {
                nNeighbors[node1[i]]++;
                nNeighbors[node2[i]]++;
            }
        firstNeighborIndex = new int[nNodes + 1];
        nEdges = 0;
        for (i = 0; i < nNodes; i++) {
            firstNeighborIndex[i] = nEdges;
            nEdges += nNeighbors[i];
        }
        firstNeighborIndex[nNodes] = nEdges;
        neighbor = new int[nEdges];
        edgeWeight2 = new double[nEdges];
        Arrays.fill(nNeighbors, 0);
        for (i = 0; i < nLines; i++)
            if (node1[i] < node2[i]) {
                j = firstNeighborIndex[node1[i]] + nNeighbors[node1[i]];
                neighbor[j] = node2[i];
                edgeWeight2[j] = edgeWeight1[i];
                nNeighbors[node1[i]]++;
                j = firstNeighborIndex[node2[i]] + nNeighbors[node2[i]];
                neighbor[j] = node1[i];
                edgeWeight2[j] = edgeWeight1[i];
                nNeighbors[node2[i]]++;
            }
        nodeWeight = new double[nNodes]; // node weight = weighted degree
        for (i = 0; i < nEdges; i++)
            nodeWeight[neighbor[i]] += edgeWeight2[i];
        network = new Network(nNodes, firstNeighborIndex, neighbor, edgeWeight2, nodeWeight);
        return network;
    }

    private static void writeOutputFile(String fileName, int[] cluster) throws IOException {
        BufferedWriter bufferedWriter;
        int i;
        bufferedWriter = new BufferedWriter(new FileWriter(fileName));
        for (i = 0; i < cluster.length; i++) {
            bufferedWriter.write(Integer.toString(cluster[i]));
            bufferedWriter.newLine();
        }
        bufferedWriter.close();
    }
}
```

Network.java:

```java
import java.io.Serializable;
import java.util.Random;

public class Network implements Cloneable, Serializable {

    private static final long serialVersionUID = 1;

    private int nNodes;
    private int[] firstNeighborIndex; // neighbors of node i: neighbor[firstNeighborIndex[i] .. firstNeighborIndex[i + 1] - 1]
    private int[] neighbor;
    private double[] edgeWeight;
    private double[] nodeWeight;
    private int nClusters;
    private int[] cluster;
    private double[] clusterWeight;
    private int[] nNodesPerCluster;
    private int[][] nodePerCluster;
    private boolean clusteringStatsAvailable;

    public Network(int nNodes, int[] firstNeighborIndex, int[] neighbor, double[] edgeWeight, double[] nodeWeight) {
        this(nNodes, firstNeighborIndex, neighbor, edgeWeight, nodeWeight, null);
    }

    // Note: the cluster argument is not used in this version.
    public Network(int nNodes, int[] firstNeighborIndex, int[] neighbor, double[] edgeWeight, double[] nodeWeight,
            int[] cluster) {
        int i, nEdges;
        this.nNodes = nNodes;
        this.firstNeighborIndex = firstNeighborIndex;
        this.neighbor = neighbor;
        if (edgeWeight == null) {
            nEdges = neighbor.length;
            this.edgeWeight = new double[nEdges];
            for (i = 0; i < nEdges; i++)
                this.edgeWeight[i] = 1;
        } else
            this.edgeWeight = edgeWeight;
        if (nodeWeight == null) {
            this.nodeWeight = new double[nNodes];
            for (i = 0; i < nNodes; i++)
                this.nodeWeight[i] = 1;
        } else
            this.nodeWeight = nodeWeight;
    }

    public int getNNodes() {
        return nNodes;
    }

    public int getNEdges() {
        return neighbor.length;
    }

    public double getTotalEdgeWeight() { // total edge weight (each undirected edge counted twice)
        double totalEdgeWeight;
        int i;
        totalEdgeWeight = 0;
        for (i = 0; i < neighbor.length; i++)
            totalEdgeWeight += edgeWeight[i];
        return totalEdgeWeight;
    }

    public double[] getEdgeWeights() {
        return edgeWeight;
    }

    public double[] getNodeWeights() {
        return nodeWeight;
    }

    public int getNClusters() {
        return nClusters;
    }

    public int[] getClusters() {
        return cluster;
    }

    public void initSingletonClusters() {
        int i;
        nClusters = nNodes;
        cluster = new int[nNodes];
        for (i = 0; i < nNodes; i++)
            cluster[i] = i;
        deleteClusteringStats();
    }

    // Relabels every node with the cluster that its current cluster got in the reduced network.
    public void mergeClusters(int[] newCluster) {
        int i, j, k;
        if (cluster == null)
            return;
        i = 0;
        for (j = 0; j < nNodes; j++) {
            k = newCluster[cluster[j]];
            if (k > i)
                i = k;
            cluster[j] = k;
        }
        nClusters = i + 1;
        deleteClusteringStats();
    }

    // Builds the aggregated network: one node per cluster; edges inside a cluster are dropped.
    public Network getReducedNetwork() {
        double[] reducedNetworkEdgeWeight1, reducedNetworkEdgeWeight2;
        int i, j, k, l, m, reducedNetworkNEdges1, reducedNetworkNEdges2;
        int[] reducedNetworkNeighbor1, reducedNetworkNeighbor2;
        Network reducedNetwork;
        if (cluster == null)
            return null;
        if (!clusteringStatsAvailable)
            calcClusteringStats();
        reducedNetwork = new Network();
        reducedNetwork.nNodes = nClusters;
        reducedNetwork.firstNeighborIndex = new int[nClusters + 1];
        reducedNetwork.nodeWeight = new double[nClusters];
        reducedNetworkNeighbor1 = new int[neighbor.length];
        reducedNetworkEdgeWeight1 = new double[edgeWeight.length];
        reducedNetworkNeighbor2 = new int[nClusters - 1];
        reducedNetworkEdgeWeight2 = new double[nClusters];
        reducedNetworkNEdges1 = 0;
        for (i = 0; i < nClusters; i++) {
            reducedNetworkNEdges2 = 0;
            for (j = 0; j < nodePerCluster[i].length; j++) {
                k = nodePerCluster[i][j]; // k: id of the j-th node in cluster i
                for (l = firstNeighborIndex[k]; l < firstNeighborIndex[k + 1]; l++) {
                    m = cluster[neighbor[l]]; // m: cluster of the neighbor of k stored at position l
                    if (m != i) {
                        if (reducedNetworkEdgeWeight2[m] == 0) {
                            reducedNetworkNeighbor2[reducedNetworkNEdges2] = m;
                            reducedNetworkNEdges2++;
                        }
                        reducedNetworkEdgeWeight2[m] += edgeWeight[l];
                    }
                }
                reducedNetwork.nodeWeight[i] += nodeWeight[k];
            }
            for (j = 0; j < reducedNetworkNEdges2; j++) {
                reducedNetworkNeighbor1[reducedNetworkNEdges1 + j] = reducedNetworkNeighbor2[j];
                reducedNetworkEdgeWeight1[reducedNetworkNEdges1 + j] = reducedNetworkEdgeWeight2[reducedNetworkNeighbor2[j]];
                reducedNetworkEdgeWeight2[reducedNetworkNeighbor2[j]] = 0;
            }
            reducedNetworkNEdges1 += reducedNetworkNEdges2;
            reducedNetwork.firstNeighborIndex[i + 1] = reducedNetworkNEdges1;
        }
        reducedNetwork.neighbor = new int[reducedNetworkNEdges1];
        reducedNetwork.edgeWeight = new double[reducedNetworkNEdges1];
        System.arraycopy(reducedNetworkNeighbor1, 0, reducedNetwork.neighbor, 0, reducedNetworkNEdges1);
        System.arraycopy(reducedNetworkEdgeWeight1, 0, reducedNetwork.edgeWeight, 0, reducedNetworkNEdges1);
        return reducedNetwork;
    }

    // Modularity of the current clustering (resolution = 1/(2m) for standard modularity).
    public double calcQualityFunction(double resolution) {
        double qualityFunction, totalEdgeWeight;
        int i, j, k;
        if (cluster == null)
            return Double.NaN;
        if (!clusteringStatsAvailable)
            calcClusteringStats();
        qualityFunction = 0;
        totalEdgeWeight = 0;
        for (i = 0; i < nNodes; i++) {
            j = cluster[i];
            for (k = firstNeighborIndex[i]; k < firstNeighborIndex[i + 1]; k++) {
                if (cluster[neighbor[k]] == j)
                    qualityFunction += edgeWeight[k];
                totalEdgeWeight += edgeWeight[k];
            }
        }
        for (i = 0; i < nClusters; i++)
            qualityFunction -= clusterWeight[i] * clusterWeight[i] * resolution;
        qualityFunction /= totalEdgeWeight;
        return qualityFunction;
    }

    public boolean runLocalMovingAlgorithm(double resolution) {
        return runLocalMovingAlgorithm(resolution, new Random());
    }

    public boolean runLocalMovingAlgorithm(double resolution, Random random) {
        boolean update;
        double maxQualityFunction, qualityFunction;
        double[] clusterWeight, edgeWeightPerCluster;
        int bestCluster, i, j, k, l, nNeighboringClusters, nStableNodes, nUnusedClusters;
        int[] neighboringCluster, newCluster, nNodesPerCluster, nodeOrder, unusedCluster;
        if ((cluster == null) || (nNodes == 1))
            return false;
        update = false;
        clusterWeight = new double[nNodes];
        nNodesPerCluster = new int[nNodes];
        for (i = 0; i < nNodes; i++) {
            clusterWeight[cluster[i]] += nodeWeight[i];
            nNodesPerCluster[cluster[i]]++;
        }
        nUnusedClusters = 0;
        unusedCluster = new int[nNodes];
        for (i = 0; i < nNodes; i++)
            if (nNodesPerCluster[i] == 0) {
                unusedCluster[nUnusedClusters] = i;
                nUnusedClusters++;
            }
        nodeOrder = new int[nNodes]; // random visiting order
        for (i = 0; i < nNodes; i++)
            nodeOrder[i] = i;
        for (i = 0; i < nNodes; i++) {
            j = random.nextInt(nNodes);
            k = nodeOrder[i];
            nodeOrder[i] = nodeOrder[j];
            nodeOrder[j] = k;
        }
        edgeWeightPerCluster = new double[nNodes];
        neighboringCluster = new int[nNodes - 1];
        nStableNodes = 0;
        i = 0;
        do {
            j = nodeOrder[i];
            nNeighboringClusters = 0;
            for (k = firstNeighborIndex[j]; k < firstNeighborIndex[j + 1]; k++) {
                l = cluster[neighbor[k]];
                if (edgeWeightPerCluster[l] == 0) {
                    neighboringCluster[nNeighboringClusters] = l;
                    nNeighboringClusters++;
                }
                edgeWeightPerCluster[l] += edgeWeight[k];
            }
            // take node j out of its cluster
            clusterWeight[cluster[j]] -= nodeWeight[j];
            nNodesPerCluster[cluster[j]]--;
            if (nNodesPerCluster[cluster[j]] == 0) {
                unusedCluster[nUnusedClusters] = cluster[j];
                nUnusedClusters++;
            }
            bestCluster = -1;
            maxQualityFunction = 0;
            for (k = 0; k < nNeighboringClusters; k++) {
                l = neighboringCluster[k];
                qualityFunction = edgeWeightPerCluster[l] - nodeWeight[j] * clusterWeight[l] * resolution; // relative gain
                if ((qualityFunction > maxQualityFunction)
                        || ((qualityFunction == maxQualityFunction) && (l < bestCluster))) {
                    bestCluster = l;
                    maxQualityFunction = qualityFunction;
                }
                edgeWeightPerCluster[l] = 0;
            }
            if (maxQualityFunction == 0) { // no positive gain: put the node in an empty cluster
                bestCluster = unusedCluster[nUnusedClusters - 1];
                nUnusedClusters--;
            }
            clusterWeight[bestCluster] += nodeWeight[j];
            nNodesPerCluster[bestCluster]++;
            if (bestCluster == cluster[j])
                nStableNodes++;
            else {
                cluster[j] = bestCluster;
                nStableNodes = 1;
                update = true;
            }
            i = (i < nNodes - 1) ? (i + 1) : 0;
        } while (nStableNodes < nNodes); // keep going until every node is stable
        newCluster = new int[nNodes]; // relabel the non-empty clusters as 0..nClusters-1
        nClusters = 0;
        for (i = 0; i < nNodes; i++)
            if (nNodesPerCluster[i] > 0) {
                newCluster[i] = nClusters;
                nClusters++;
            }
        for (i = 0; i < nNodes; i++)
            cluster[i] = newCluster[cluster[i]];
        deleteClusteringStats();
        return update;
    }

    public boolean runLouvainAlgorithm(double resolution) {
        return runLouvainAlgorithm(resolution, new Random());
    }

    // One local-moving phase, then recursion on the reduced network.
    public boolean runLouvainAlgorithm(double resolution, Random random) {
        boolean update, update2;
        Network reducedNetwork;
        if ((cluster == null) || (nNodes == 1))
            return false;
        update = runLocalMovingAlgorithm(resolution, random);
        if (nClusters < nNodes) {
            reducedNetwork = getReducedNetwork();
            reducedNetwork.initSingletonClusters();
            update2 = reducedNetwork.runLouvainAlgorithm(resolution, random);
            if (update2) {
                update = true;
                mergeClusters(reducedNetwork.getClusters());
            }
        }
        deleteClusteringStats();
        return update;
    }

    private Network() {
    }

    private void calcClusteringStats() {
        int i, j;
        clusterWeight = new double[nClusters];
        nNodesPerCluster = new int[nClusters];
        nodePerCluster = new int[nClusters][];
        for (i = 0; i < nNodes; i++) {
            clusterWeight[cluster[i]] += nodeWeight[i];
            nNodesPerCluster[cluster[i]]++;
        }
        for (i = 0; i < nClusters; i++) {
            nodePerCluster[i] = new int[nNodesPerCluster[i]];
            nNodesPerCluster[i] = 0;
        }
        for (i = 0; i < nNodes; i++) {
            j = cluster[i];
            nodePerCluster[j][nNodesPerCluster[j]] = i;
            nNodesPerCluster[j]++;
        }
        clusteringStatsAvailable = true;
    }

    private void deleteClusteringStats() {
        clusterWeight = null;
        nNodesPerCluster = null;
        nodePerCluster = null;
        clusteringStatsAvailable = false;
    }
}
```

Finally, here is the link to the test data. It includes social-network data from Facebook, Amazon and others, for your debugging:

http://snap.stanford.edu/data/#socnets
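My `Louvain.init` expects a first line "n m" followed by one "u v [weight]" line per edge, and it indexes nodes directly, so every id must be smaller than n. Files downloaded from SNAP may start with `#` comment lines, may use tabs, and may have non-contiguous node ids. The sketch below is a hypothetical converter (`SnapToLouvainInput` is not part of either implementation). It skips comments and blank lines, remaps ids to 0..n-1 in order of first appearance, and prepends the header. It assumes each undirected edge appears once in the input.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical converter (not from the original post): SNAP-style edge list ->
// "n m" header + "u v [w]" lines with node ids remapped to 0..n-1.
final class SnapToLouvainInput {

    // Dense id of a raw node label; assigns the next free id on first sight.
    private static int denseId(Map<String, Integer> ids, String label) {
        Integer id = ids.get(label);
        if (id == null) {
            id = ids.size();
            ids.put(label, id);
        }
        return id;
    }

    static List<String> convert(List<String> snapLines) {
        Map<String, Integer> ids = new HashMap<>();
        List<String> body = new ArrayList<>();
        for (String raw : snapLines) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue; // skip blank and comment lines
            }
            String[] f = line.split("\\s+"); // tabs or spaces
            int u = denseId(ids, f[0]);
            int v = denseId(ids, f[1]);
            body.add(f.length > 2 ? u + " " + v + " " + f[2] : u + " " + v);
        }
        List<String> out = new ArrayList<>();
        out.add(ids.size() + " " + body.size()); // header: number of nodes, number of edges
        out.addAll(body);
        return out;
    }

    public static void main(String[] args) {
        List<String> in = Arrays.asList("# Undirected graph", "10 20", "20\t30", "", "10 30 2.5");
        System.out.println(convert(in)); // [3 3, 0 1, 1 2, 0 2 2.5]
    }
}
```

Writing the returned lines to a file, for example with `java.nio.file.Files.write(path, lines)`, produces input that `init` can read.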

If anything I wrote is wrong, corrections are welcome. Let's learn from each other!