DSSM & Multi-view DSSM TensorFlow實現

阿新 • • 發佈:2019-01-10

1. 資料

DSSM,對於輸入資料是Query對,即Query短句和相應的展示,展示中分點選和未點選,分別為正負樣,同時對於點選的先後順序,也是有不同賦值,具體可參考論文。

對於我的Query資料本人無權開放,還請自行尋找資料。

2. word hashing

原文使用3-grams,對於中文,我使用了uni-gram,因為中文字身字有一定代表意義(也有論文拆筆畫),對於每個gram都使用one-hot編碼代替,最終可以大大降低短句維度。

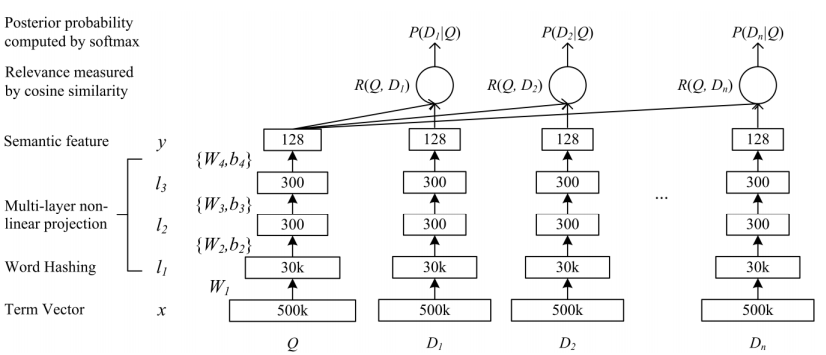

3. 結構

結構圖:

- 把條目對映成低維向量。

- 計算查詢和文件的cosine相似度。

3.1 輸入

這裡使用了TensorBoard視覺化,所以定義了name_scope:

with 3.2 全連線層

我使用三層的全連線層,對於每一層全連線層,除了神經元不一樣,其他都一樣,所以可以寫一個函式複用。

def add_layer(inputs, in_size, out_size, activation_function=None):

wlimit = np.sqrt(6.0 / (in_size + out_size))

Weights = tf.Variable(tf.random_uniform([in_size, out_size], -wlimit, wlimit))

biases = tf.Variable(tf.random_uniform([out_size], -wlimit, wlimit))

Wx_plus_b = tf.matmul(inputs, Weights) + biases

if 其中,對於權重和Bias,使用了按照論文的特定的初始化方式:

wlimit = np.sqrt(6.0 / (in_size + out_size))

Weights = tf.Variable(tf.random_uniform([in_size, out_size], -wlimit, wlimit))

biases = tf.Variable(tf.random_uniform([out_size], -wlimit, wlimit))- Batch Normalization

def batch_normalization(x, phase_train, out_size):

"""

Batch normalization on convolutional maps.

Ref.: http://stackoverflow.com/questions/33949786/how-could-i-use-batch-normalization-in-tensorflow

Args:

x: Tensor, 4D BHWD input maps

out_size: integer, depth of input maps

phase_train: boolean tf.Varialbe, true indicates training phase

scope: string, variable scope

Return:

normed: batch-normalized maps

"""

with tf.variable_scope('bn'):

beta = tf.Variable(tf.constant(0.0, shape=[out_size]),

name='beta', trainable=True)

gamma = tf.Variable(tf.constant(1.0, shape=[out_size]),

name='gamma', trainable=True)

batch_mean, batch_var = tf.nn.moments(x, [0], name='moments')

ema = tf.train.ExponentialMovingAverage(decay=0.5)

def mean_var_with_update():

ema_apply_op = ema.apply([batch_mean, batch_var])

with tf.control_dependencies([ema_apply_op]):

return tf.identity(batch_mean), tf.identity(batch_var)

mean, var = tf.cond(phase_train,

mean_var_with_update,

lambda: (ema.average(batch_mean), ema.average(batch_var)))

normed = tf.nn.batch_normalization(x, mean, var, beta, gamma, 1e-3)

return normed單層

with tf.name_scope('FC1'):

# 啟用函式在BN之後,所以此處為None

query_l1 = add_layer(query_batch, TRIGRAM_D, L1_N, activation_function=None)

doc_positive_l1 = add_layer(doc_positive_batch, TRIGRAM_D, L1_N, activation_function=None)

doc_negative_l1 = add_layer(doc_negative_batch, TRIGRAM_D, L1_N, activation_function=None)

with tf.name_scope('BN1'):

query_l1 = batch_normalization(query_l1, on_train, L1_N)

doc_l1 = batch_normalization(tf.concat([doc_positive_l1, doc_negative_l1], axis=0), on_train, L1_N)

doc_positive_l1 = tf.slice(doc_l1, [0, 0], [query_BS, -1])

doc_negative_l1 = tf.slice(doc_l1, [query_BS, 0], [-1, -1])

query_l1_out = tf.nn.relu(query_l1)

doc_positive_l1_out = tf.nn.relu(doc_positive_l1)

doc_negative_l1_out = tf.nn.relu(doc_negative_l1)

······合併負樣本

with tf.name_scope('Merge_Negative_Doc'):

# 合併負樣本,tile可選擇是否擴充套件負樣本。

doc_y = tf.tile(doc_positive_y, [1, 1])

for i in range(NEG):

for j in range(query_BS):

# slice(input_, begin, size)切片API

doc_y = tf.concat([doc_y, tf.slice(doc_negative_y, [j * NEG + i, 0], [1, -1])], 0)3.3 計算cos相似度

with tf.name_scope('Cosine_Similarity'):

# Cosine similarity

# query_norm = sqrt(sum(each x^2))

query_norm = tf.tile(tf.sqrt(tf.reduce_sum(tf.square(query_y), 1, True)), [NEG + 1, 1])

# doc_norm = sqrt(sum(each x^2))

doc_norm = tf.sqrt(tf.reduce_sum(tf.square(doc_y), 1, True))

prod = tf.reduce_sum(tf.multiply(tf.tile(query_y, [NEG + 1, 1]), doc_y), 1, True)

norm_prod = tf.multiply(query_norm, doc_norm)

# cos_sim_raw = query * doc / (||query|| * ||doc||)

cos_sim_raw = tf.truediv(prod, norm_prod)

# gamma = 20

cos_sim = tf.transpose(tf.reshape(tf.transpose(cos_sim_raw), [NEG + 1, query_BS])) * 203.4 定義損失函式

with tf.name_scope('Loss'):

# Train Loss

# 轉化為softmax概率矩陣。

prob = tf.nn.softmax(cos_sim)

# 只取第一列,即正樣本列概率。

hit_prob = tf.slice(prob, [0, 0], [-1, 1])

loss = -tf.reduce_sum(tf.log(hit_prob))

tf.summary.scalar('loss', loss)3.5選擇優化方法

with tf.name_scope('Training'):

# Optimizer

train_step = tf.train.AdamOptimizer(FLAGS.learning_rate).minimize(loss)## 3.6 開始訓練

# 建立一個Saver物件,選擇性儲存變數或者模型。

saver = tf.train.Saver()

# with tf.Session(config=config) as sess:

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

train_writer = tf.summary.FileWriter(FLAGS.summaries_dir + '/train', sess.graph)

start = time.time()

for step in range(FLAGS.max_steps):

batch_id = step % FLAGS.epoch_steps

sess.run(train_step, feed_dict=feed_dict(True, True, batch_id % FLAGS.pack_size, 0.5))Multi-view DSSM實現同理,可以參考GitHub:multi_view_dssm_v3