機器學習SVM--基於手寫字型識別

阿新 • • 發佈:2019-01-11

每一行代表一個手寫字型影象,最大值為16,大小64,然後最後一列為該圖片的標籤值。

import numpy as np

from sklearn import svm

import matplotlib.colors

import matplotlib.pyplot as plt

from PIL import Image

from sklearn.metrics import accuracy_score

import os

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from time import time

def show_acc(a, b, tip):

acc = a.ravel() == b.ravel()

print('%s acc :%.2f%%' % (tip, 100*np.mean(acc)))

def save_image(image, i):

# 由於optdigits資料集的畫素最大是16,所以這裡對其reshape

image *= 16.9

# 影象取反為了好觀察

image = 255 - image

# 轉化為影象的uint8格式

a = image.astype(np.uint8)

output_path = './/handwriting'

if not os.path.exists(output_path):

os.mkdir(output_path)

Image.fromarray(a).save(output_path + ('//%d.jpg' % i))

if __name__ == '__main__':

# 開始載入訓練資料集

data = np.loadtxt('optdigits.tra', dtype=np.float, delimiter=',')

# 最後一列得到的是該手寫字型圖片的label

x, y = np.split(data, (-1,), axis=1)

# 64x64大小

images = x.reshape(-1, 8, 8)

y = y.ravel().astype(np.int)

# 載入測試資料集

data_test = np.loadtxt('optdigits.tes', dtype=np.float, delimiter=',')

x_test, y_test = np.split(data_test, (-1,), axis=1)

images_test = x_test.reshape(-1, 8, 8)

y_test = y_test.ravel().astype(np.int)

plt.figure(figsize=(15, 15), facecolor='w')

for index, image in enumerate(images[:16]):

plt.subplot(4, 8, index+1)

plt.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')

plt.title('trian image:%i' %y[index])

for index, image in enumerate(images_test[:16]):

plt.subplot(4, 8, index+17)

plt.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')

save_image(image.copy(), index)

plt.title('test image:%i' %y[index])

plt.tight_layout(1.5)

plt.show()

params = {'C':np.logspace(0, 3, 7), 'gamma':np.logspace(-5, 0, 11)}

model = svm.SVC(C=10, kernel='rbf', gamma=0.001)

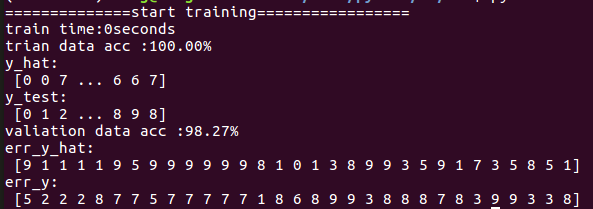

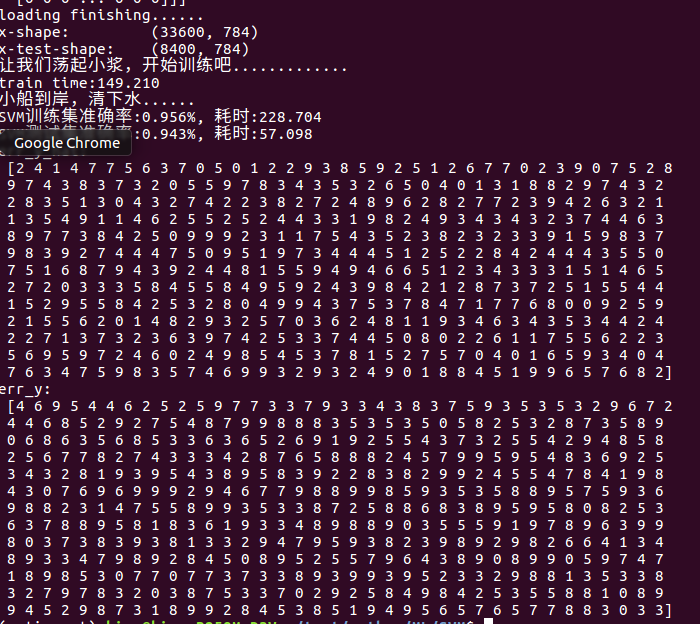

print('==============start training=================')

start = time()

model.fit(x, y)

end = time()

train_time = end - start

print('train time:%dseconds' % train_time)

y_hat = model.predict(x)

show_acc(y, y_hat, 'trian data')

y_hat_test = model.predict(x_test)

print('y_hat:\n', y_hat)

print('y_test:\n', y_test)

show_acc(y_test, y_hat_test, 'valiation data')

# 測試集裡面錯分的資料

# 測試集裡面和預測值不同的影象

err_images = images_test[y_test != y_hat_test]

# 預測裡面和測試不同的預測值

err_y_hat = y_hat_test[y_test != y_hat_test]

# 測試裡面和預測不同的測試值

err_y = y_test[y_test != y_hat_test]

print('err_y_hat:\n', err_y_hat)

print('err_y:\n', err_y)

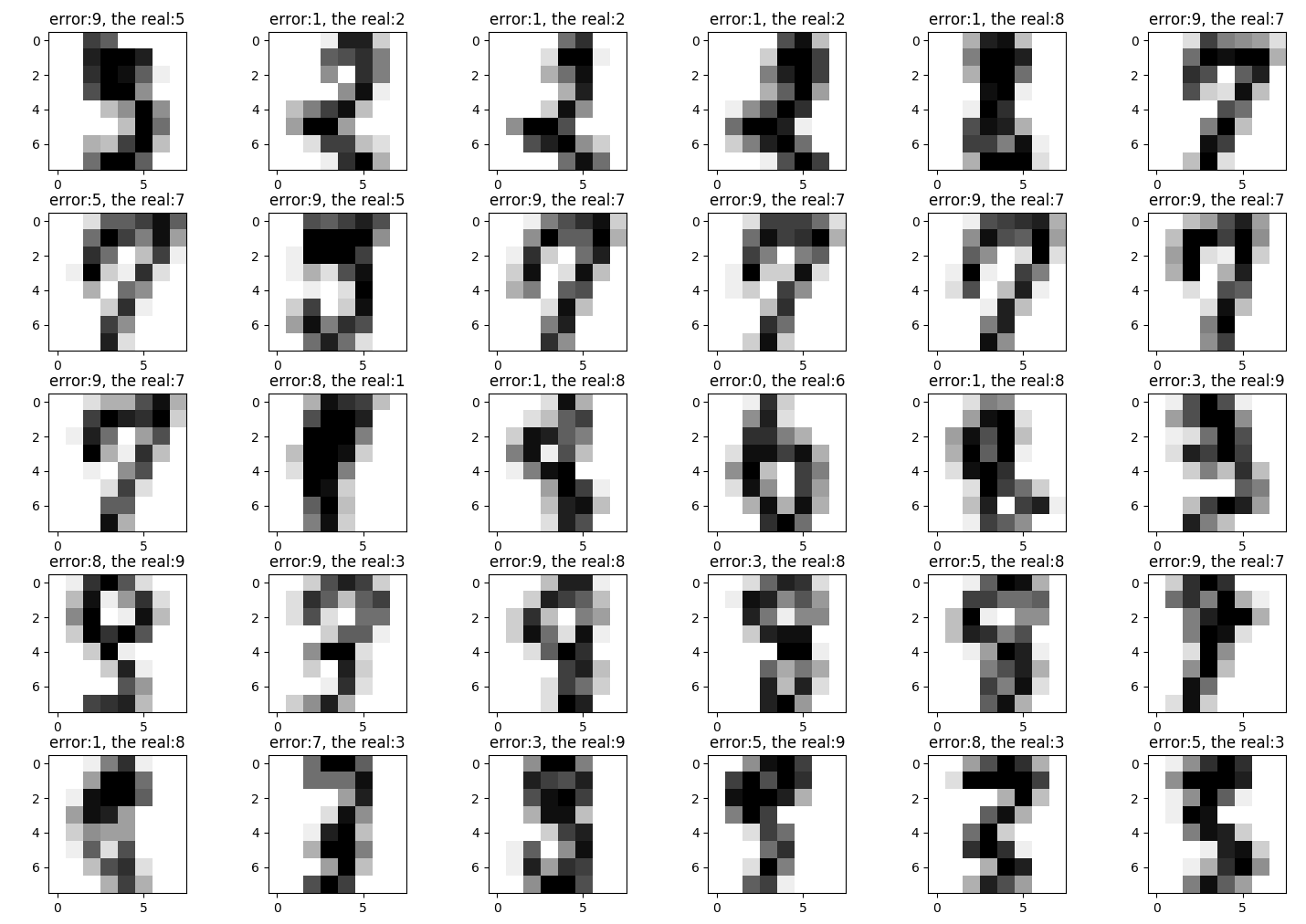

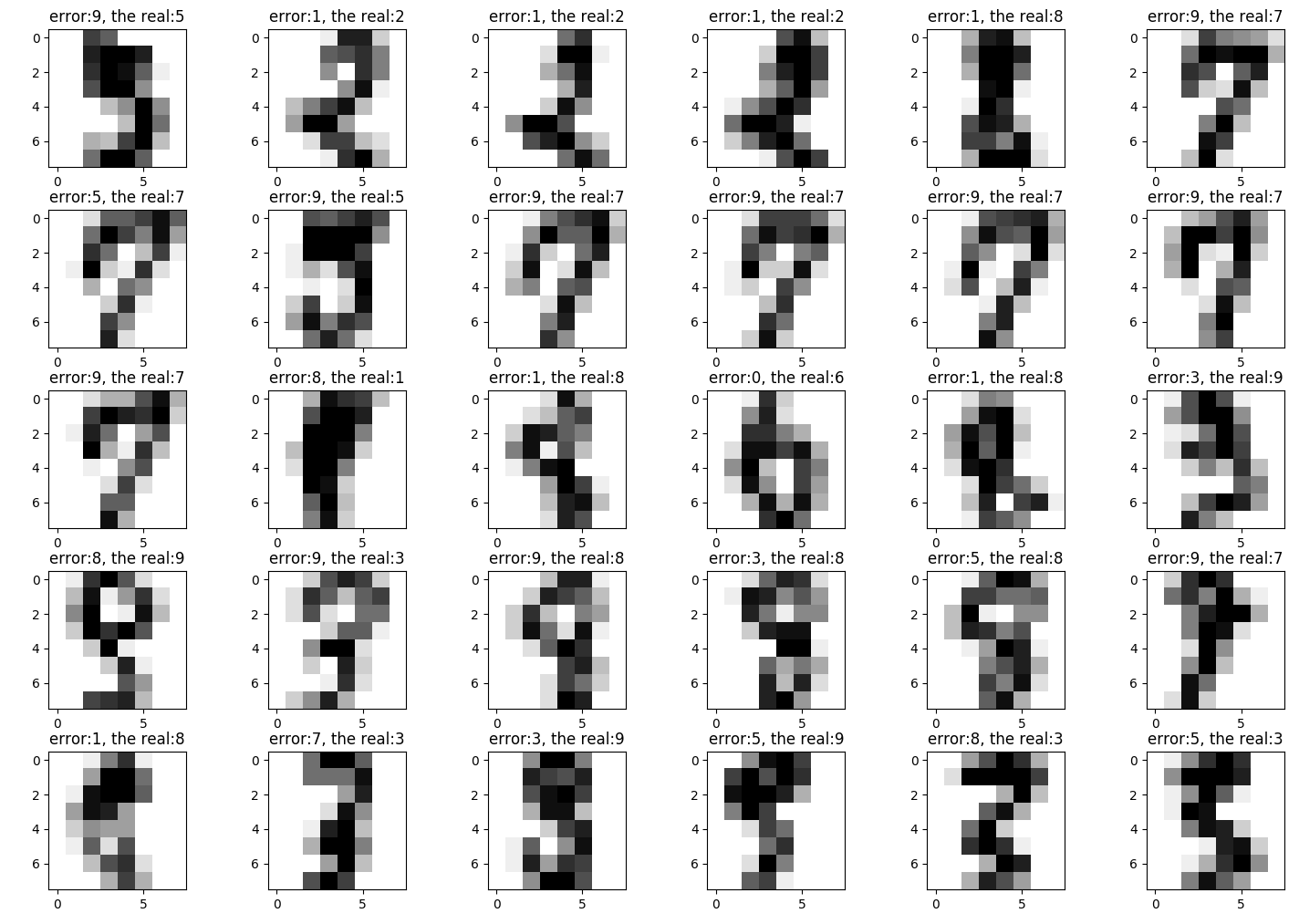

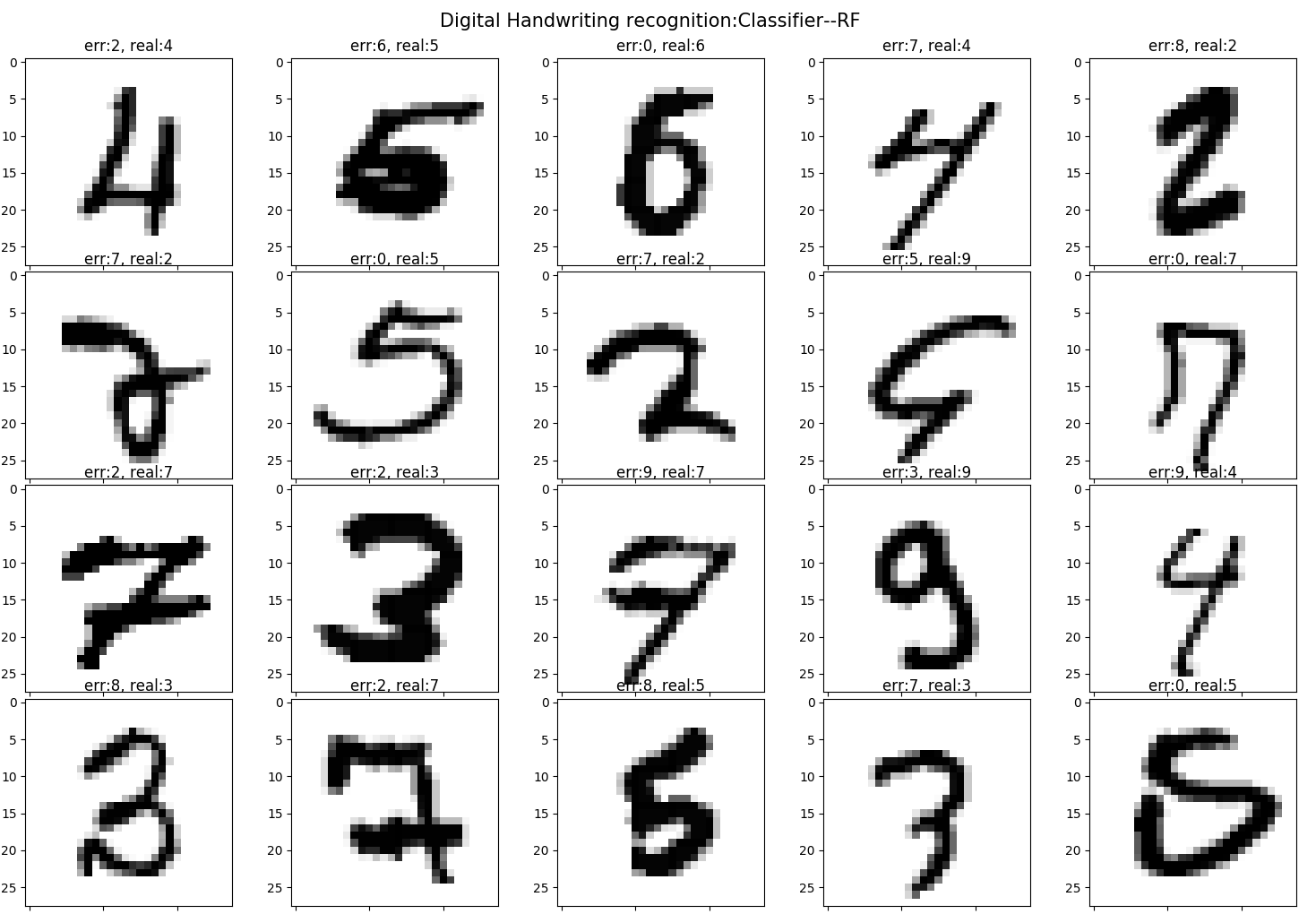

plt.figure(figsize=(15, 15), facecolor='w')

for index, image in enumerate(err_images):

if index >= 30:

break

plt.subplot(5, 6, index+1)

plt.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')

plt.title('error:%i, the real:%i' % (err_y_hat[index], err_y[index]))

plt.tight_layout(4)

plt.show()

接著我們更換訓練方法,修改程式:

# model = svm.SVC(C=10, kernel='rbf', gamma=0.001)

model = GridSearchCV(svm.SVC(kernel='rbf'), param_grid=params, cv=3)

訓練時間要長很多,但準確率並沒有提升。。。。

接著我們使用經典的MNIST資料集來做實驗:

import numpy as np

from sklearn import svm

import matplotlib.colors

import matplotlib.pyplot as plt

from PIL import Image

from sklearn.metrics import accuracy_score

import pandas as pd

import os

import csv

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

from time import time

from pprint import pprint

import warnings

def show_acc(a, b, tip):

acc = a.ravel() == b.ravel()

print('%s acc :%.2f%%' % (tip, 100*np.mean(acc)))

def save_image(image, i):

# 影象取反為了好觀察

image = 255 - image

# 轉化為影象的uint8格式

a = image.astype(np.uint8)

output_path = './/handwriting'

if not os.path.exists(output_path):

os.mkdir(output_path)

Image.fromarray(a).save(output_path + ('//%d.jpg' % i))

def save_model(model):

data_test_hat = model.predict(data_test)

with open('Prediction.csv', 'wt') as f:

writer = csv.writer(f)

writer.writerow(['ImageId', 'Label'])

for i, d in enumerate(data_test_hat):

writer.writerow([i, d])

if __name__ == '__main__':

warnings.filterwarnings('ignore')

classifier_type = 'RF'

print('loading train data......')

start = time()

data = pd.read_csv('MNIST.train.csv', header=0, dtype=np.int)

print('loading finishing......')

# 讀取標籤值

y = data['label'].values

x = data.values[:, 1:]

print('the images numbers:%d, the pixs of images:%d' % (x.shape))

# reshape成28x28的格式,還原成原始的影象格式

images = x.reshape(-1, 28, 28)

y = y.ravel()

print(images)

print(y)

print('loading test data......')

start = time()

data_test = pd.read_csv('MNIST.test.csv', header=0, dtype=np.int)

data_test = data_test.values

images_test_result = data_test.reshape(-1, 28, 28)

print('data-test:\t', data_test)

print('images-test-result:\t', images_test_result)

print('loading finishing......')

np.random.seed(0)

x, x_test, y, y_test = train_test_split(x, y, train_size=0.8, random_state=1)

images = x.reshape(-1, 28, 28)

images_test = x_test.reshape(-1, 28, 28)

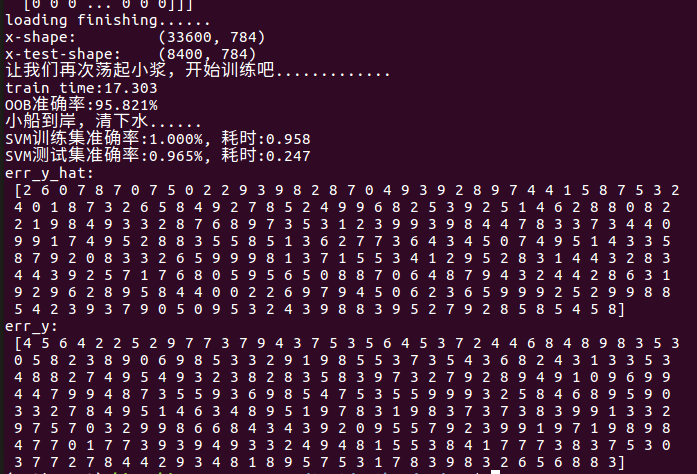

print('x-shape:\t', x.shape)

print('x-test-shape:\t', x_test.shape)

# 顯示我們使用的部分訓練資料和測試資料

plt.figure(figsize=(15, 9), facecolor='w')

for index, image in enumerate(images[:16]):

plt.subplot(4, 8, index+1)

plt.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')

plt.title('train data:%d' % (y[index]))

for index, image in enumerate(images_test_result[:16]):

plt.subplot(4, 8, index+17)

plt.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')

save_image(image.copy(), index)

plt.title('test data')

plt.tight_layout()

plt.show()

if classifier_type == 'SVM':

model = svm.SVC(C=3000, kernel='rbf', gamma=1e-10)

print('讓我們蕩起小漿,開始訓練吧.............')

t_start = time()

model.fit(x, y)

t_end = time()

print('train time:%.3f' % (t_end - t_start))

print('小船到岸,清下水......')

# print('最優分類器:', model.best_estimator_)

# print('最優引數:\t', model.best_params_)

# print('model.cv_results_ = \n', model.cv_results_)

t = time()

y_hat = model.predict(x)

t = time() - t

print('SVM訓練集準確率:%.3f%%, 耗時:%.3f' %(accuracy_score(y, y_hat), t))

t = time()

y_hat_test = model.predict(x_test)

t = time() - t

print('SVM測試集準確率:%.3f%%, 耗時:%.3f' %(accuracy_score(y_test, y_hat_test), t))

save_model(model)

elif classifier_type == 'RF':

rfc = RandomForestClassifier(100, criterion='gini', min_samples_split=2, min_impurity_split=1e-10, bootstrap=True, oob_score=True)

print('讓我們再次蕩起小漿,開始訓練吧.............')

t = time()

rfc.fit(x, y)

print('train time:%.3f' % (time() - t))

print('OOB準確率:%.3f%%' %(rfc.oob_score_*100))

print('小船到岸,清下水......')

t = time()

y_hat = rfc.predict(x)

t = time() - t

print('SVM訓練集準確率:%.3f%%, 耗時:%.3f' %(accuracy_score(y, y_hat), t))

t = time()

y_hat_test = rfc.predict(x_test)

t = time() - t

print('SVM測試集準確率:%.3f%%, 耗時:%.3f' %(accuracy_score(y_test, y_hat_test), t))

save_model(rfc)

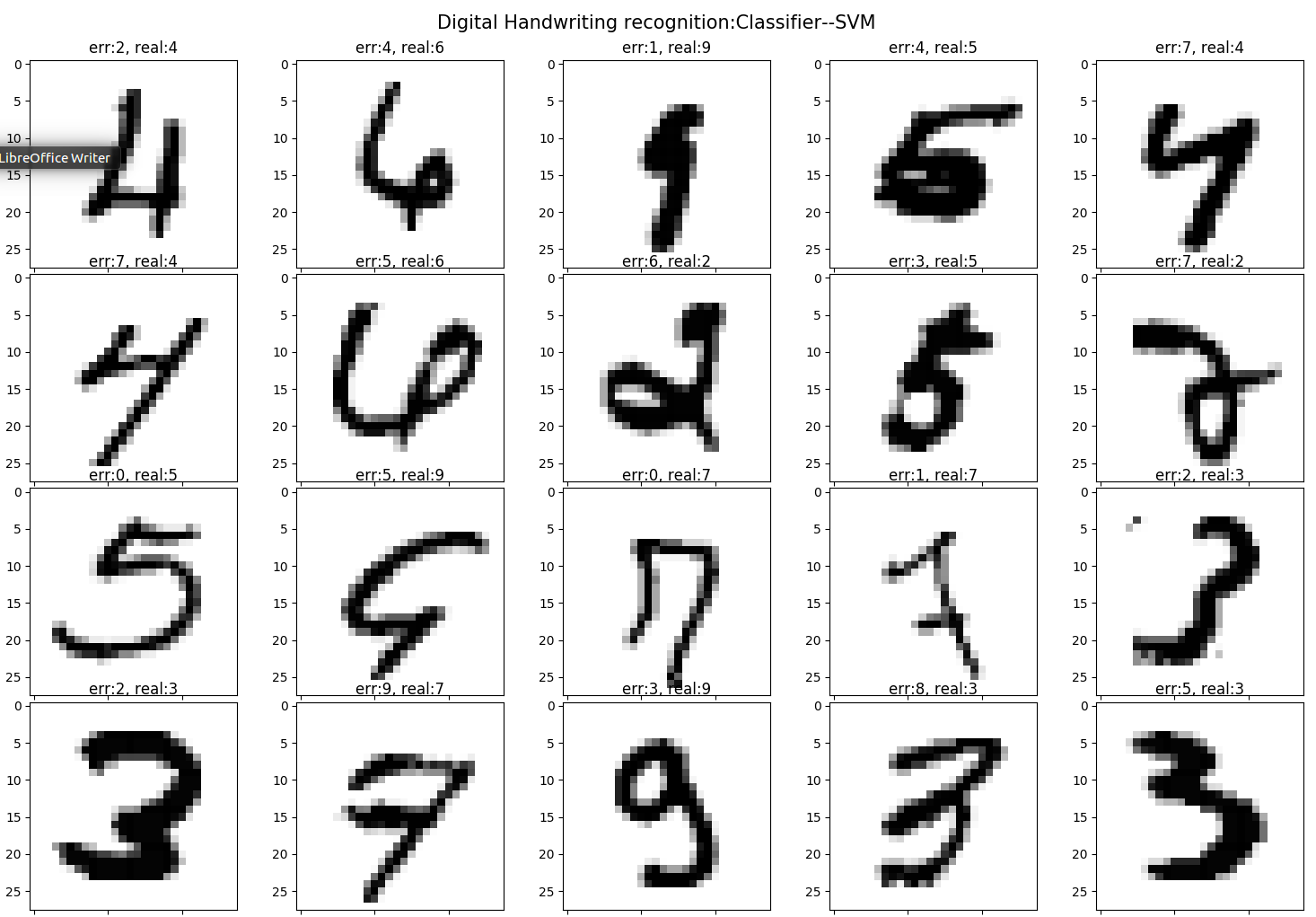

err = (y_test != y_hat_test)

err_images = images_test[err]

err_y_hat = y_hat_test[err]

err_y = y_test[err]

print('err_y_hat:\n', err_y_hat)

print('err_y:\n', err_y)

plt.figure(figsize=(15, 15), facecolor='w')

for index, image in enumerate(err_images):

if index >= 20:

break

plt.subplot(4, 5, index+1)

plt.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')

plt.title('err:%i, real:%i' % (err_y_hat[index], err_y[index]))

plt.suptitle('Digital Handwriting recognition:Classifier--%s' % classifier_type, fontsize=15)

plt.tight_layout(rect=(0, 0, 1, 0.94))

plt.show()

相對來說,SVM和隨機森林演算法效果都已經不錯,但隨機森林表現的要好一點,分析可能是SVM還需要調參。