無人駕駛之高階車道線檢測-AdvanceLane_finding_release

本篇部落格整個專案原始碼:github

引言

前面我們介紹車道線檢測的處理方法:車道線檢測之lanelines-detection

在文章末尾,我們分析了該演算法的魯棒性,當時我們提出了一些解決方法,比如說:

- 角度濾波器:濾除極小銳角或極大鈍角的線段

- 選取黃色的色調,並用白色代替

- 在邊緣檢測前,放大一些特徵

但是上述演算法還存在一個問題:在彎道處無法檢測車道線,因此本篇部落格將分享一種高階的車道線檢測技術,同時也是Udacity無人駕駛的第四個專案。

本專案主要實現如下幾個功能:

- 攝像頭校正

- 對原始影象做校正

- 使用影象處理技術來獲得一個二值化的影象

- 應用角度變換來獲得二值化影象的birds-eye view(鳥瞰圖)

- 檢測車道畫素(histogram)並獲得車道邊界

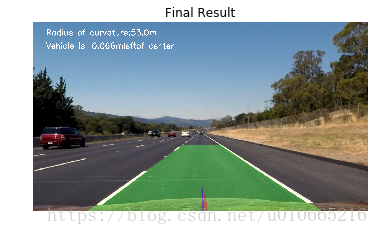

- 計算車道彎曲率及基於車道中心的車輛位置

- 在原始影象上包裹住車道邊界,用綠色色塊表示

- 將車道線進行視覺化顯示,並實時計算車道曲率及車輛位置

NOTE:關於視訊流的處理請參考Advance-Lanefinding

為了講解方便,本部分程式碼,我選取了一幀來說明

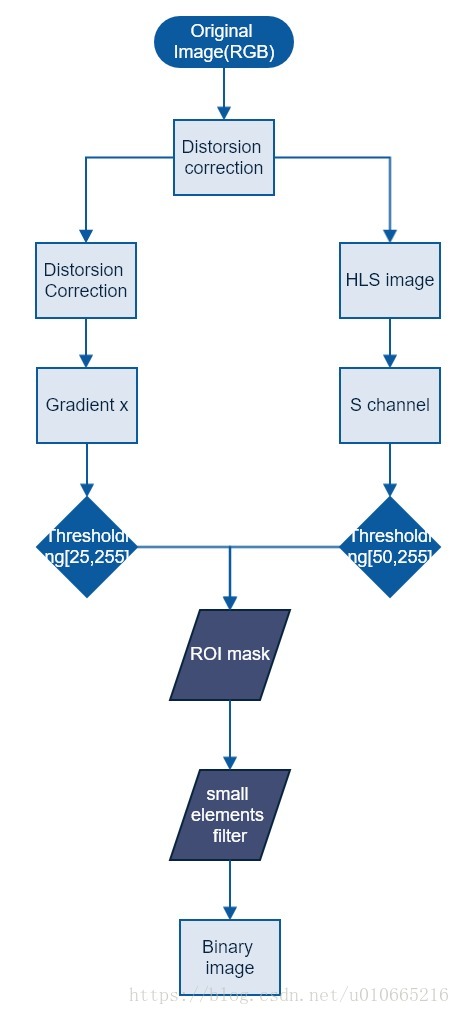

獲取車道線的演算法流程圖

本專案中,我們檢測車道線的演算法流程圖如下所示:

專案實現及程式碼註解

# import some libs we need import numpy as np import cv2 import glob import matplotlib.pyplot as plt from moviepy.editor import VideoFileClip from IPython.display import HTML from skimage import morphology from collections import deque %matplotlib inline

為了接下來的程式碼中,視覺化輸出的結果,這裡我們實現一個影象顯示演算法

#Display showMe = 0 def display(img,title,color=1): ''' func:display image img: rgb or grayscale title:figure title color:show image in color(1) or grayscale(0) ''' if color: plt.imshow(img) else: plt.imshow(img,cmap='gray') plt.title(title) plt.axis('off') plt.show()

Calibration Camera

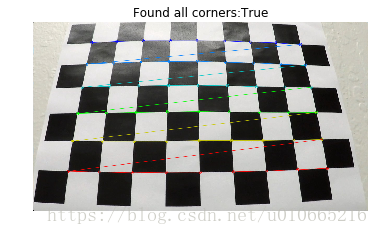

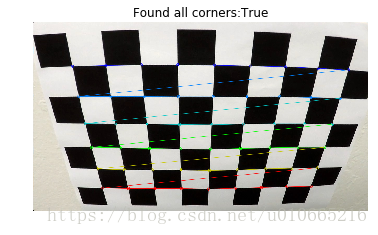

def cameracalibration(folder,nx,ny,showMe=0):

'''

使用opencv的findChessBoardCorners函式來找到所有角落的(x,y)座標

folder:校準影象的目錄

nx:x軸方向期望的角落個數

ny:y軸方向期望的角落個數

返回一個字典:

ret:校正的RMS誤差

mtx:攝像頭矩陣

dist:畸變係數

rvecs:旋轉向量

tvecs:平移向量

'''

#儲存物體實際三維空間座標及影象中影象的座標

objpoints = []#3D

imgpoints = []

objp = np.zeros((nxny,3),np.float32)

#print(objp.shape)

objp[:,:2] = np.mgrid[0:nx,0:ny].T.reshape(-1,2)

assert len(folder)!=0

#print(len(folder))

for fname in folder:

img = cv2.imread(fname)

img = cv2.cvtColor(img,cv2.COLOR_BGR2RGB)

gray = cv2.cvtColor(img,cv2.COLOR_RGB2GRAY)

ret,corners = cv2.findChessboardCorners(gray,(nx,ny))

img_sz = gray.shape[::-1]#倒序輸出

#print(corners)

#if ret is True , find corners

if ret:

imgpoints.append(corners)

objpoints.append(objp)

if showMe:

draw_corners = cv2.drawChessboardCorners(img,(nx,ny),corners,ret)

display(draw_corners,'Found all corners:{}'.format(ret))

if len(objpoints)==len(imgpoints) and len(objpoints)!=0:

ret,mtx,dist,rvecs,tvecs = cv2.calibrateCamera(objpoints,imgpoints,img_sz,None,None)

return {'ret':ret,'cameraMatrix':mtx,'distorsionCoeff':dist,\

'rotationVec':rvecs,'translationVec':tvecs}

else:

raise Error('Camera Calibration failed')

def correction(image,calib_params,showMe=0):

'''

失真矯正

calib_params:由攝像頭矯正返回的矯正引數

'''

corrected = cv2.undistort(image,calib_params['cameraMatrix'],calib_params['distorsionCoeff'],\

None,calib_params['cameraMatrix'])

if showMe:

display(image,'Original',color=1)

display(corrected,'After correction',color=1)

return corrected接下來我們來看看,尋找棋盤角點及實際場景下校正的結果

nx = 9 ny = 6 folder_calibration = glob.glob("camera_cal/calibration[1-3].jpg") #print(folder_calibration) calib_params = camera_calibration(folder_calibration,nx,ny,showMe=1) print('RMS Error of Camera calibration:{:.3f}'.format(calib_params['ret'])) print('This number must be between 0.1 and 1.0') imgs_tests = glob.glob("test_images/.jpg") original_img = np.random.choice(imgs_tests) original_img = cv2.imread("test_images/straight_lines2.jpg") original_img = cv2.cvtColor(original_img,cv2.COLOR_BGR2RGB) corr_img = correction(original_img,calib_params,showMe=1)

RMS Error of Camera calibration:1.208

This number must be between 0.1 and 1.0

如上圖所示,攝像頭首先通過標準黑白棋盤來獲得校正係數,然後再通過校正係數來對車輛前置攝像頭拍攝的圖片來應用校正係數。

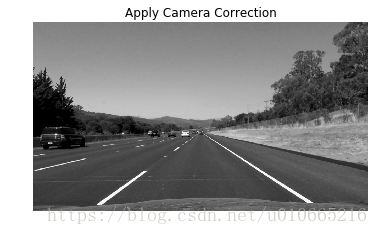

接下來,我們獲取影象的灰度圖

gray_ex = cv2.cvtColor(corr_img,cv2.COLOR_RGB2GRAY)

display(gray_ex,'Apply Camera Correction',color=0)Threshold Binary

獲得了影象的灰度圖後,我們再沿著X軸方向求梯度

def directional_gradient(img,direction='x',thresh=[0,255]):

'''

使用Opencv Sobel運算元來求方向梯度

img:Grayscale

direction:x or y axis

thresh:apply threshold on pixel intensity of gradient image

output is binary image

'''

if direction=='x':

sobel = cv2.Sobel(img,cv2.CV_64F,1,0)

elif direction=='y':

sobel = cv2.Sobel(img,cv2.CV_64F,0,1)

sobel_abs = np.absolute(sobel)#absolute value

scaled_sobel = np.uint8(sobel_abs255/np.max(sobel_abs))

binary_output = np.zeros_like(sobel)

binary_output[(scaled_sobel>=thresh[0])&(scaled_sobel<=thresh[1])] = 1

return binary_output

gradx_thresh = [25,255]

gradx = directional_gradient(gray_ex,direction='x',thresh = gradx_thresh)

display(gradx,'Gradient x',color=0)Color Transform

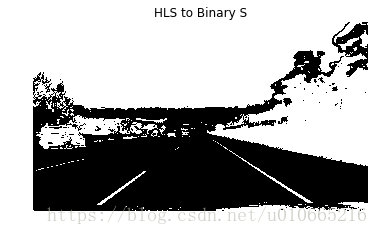

然後我們將undistorted image 轉換成HLS,並只保留S通道,二值化

def color_binary(img,dst_format='HLS',ch=2,ch_thresh=[0,255]):

'''

Color thresholding on channel ch

img:RGB

dst_format:destination format(HLS or HSV)

ch_thresh:pixel intensity threshold on channel ch

output is binary image

'''

if dst_format =='HSV':

img = cv2.cvtColor(img,cv2.COLOR_RGB2HSV)

ch_binary = np.zeros_like(img[:,:,int(ch-1)])

ch_binary[(img[:,:,int(ch-1)]>=ch_thresh[0])&(img[:,:,int(ch-1)]<=ch_thresh[1])] = 1

else:

img = cv2.cvtColor(img,cv2.COLOR_RGB2HLS)

ch_binary = np.zeros_like(img[:,:,int(ch-1)])

ch_binary[(img[:,:,int(ch-1)]>=ch_thresh[0])&(img[:,:,int(ch-1)]<=ch_thresh[1])] = 1

return ch_binary

ch_thresh = [50,255]

ch3_hls_binary = color_binary(corr_img,dst_format='HLS',ch=3,ch_thresh=ch_thresh)

display(ch3_hls_binary,'HLS to Binary S',color=0)接下來我們將Gradx與Binary S進行結合

combined_output = np.zeros_like(gradx)

combined_output[((gradx==1)|(ch3_hls_binary==1))] = 1

display(combined_output,'Combined output',color=0)接下來我們再來應用ROI mask來去除無關的背景資訊

mask = np.zeros_like(combined_output)

vertices = np.array([[(100,720),(545,470),(755,470),(1290,720)]],dtype=np.int32)

cv2.fillPoly(mask,vertices,1)

masked_image = cv2.bitwise_and(combined_output,mask)

display(masked_image,'Masked',color=0)從上圖我們可以看到有些散亂的小點,這些點很明顯是噪聲,我們可以利用morphology.remove_small_objects()函式來去除這些雜亂點

min_sz = 50

cleaned = morphology.remove_small_objects(masked_image.astype('bool'),min_size=min_sz,connectivity=2)

display(cleaned,'cleaned',color=0)Perspective Transform

這一步我將利用前面的ImageProcess類中的birds_eye()來實現將undistorted image to a ‘birds eye view’ of the road.

這樣操作後將有利於後面我們來擬合直線及測量曲率

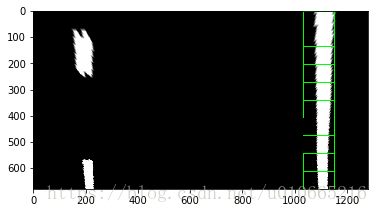

def birdView(img,M): ''' Transform image to birdeye view img:binary image M:transformation matrix return a wraped image ''' img_sz = (img.shape[1],img.shape[0]) img_warped = cv2.warpPerspective(img,M,img_sz,flags = cv2.INTER_LINEAR) return img_warped def perspective_transform(src_pts,dst_pts): ''' perspective transform args:source and destiantion points return M and Minv ''' M = cv2.getPerspectiveTransform(src_pts,dst_pts) Minv = cv2.getPerspectiveTransform(dst_pts,src_pts) return {'M':M,'Minv':Minv} # original image to bird view (transformation) src_pts = np.float32([[240,720],[575,470],[735,470],[1200,720]]) dst_pts = np.float32([[240,720],[240,0],[1200,0],[1200,720]]) transform_matrix = perspective_transform(src_pts,dst_pts) warped_image = birdView(cleaned1.0,transform_matrix['M']) display(cleaned,'undistorted',color=0) display(warped_image,'BirdViews',color=0)

從上述影象,我們可以看到圖片底部有一部分白色,這部分是前置攝像頭拍攝的汽車front-end

我們可以將底部的這部分白色擷取調

bottom_crop = -40

warped_image = warped_image[0:bottom_crop,:]車道線畫素檢測

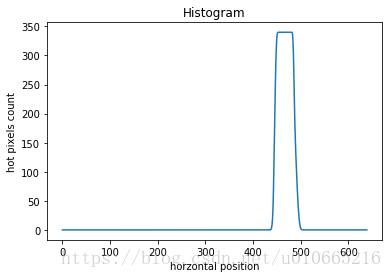

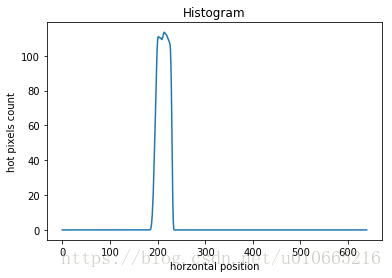

這裡車道線畫素檢測,我們主要採用Histogram法,一種基於畫素直方圖的演算法

這裡分別計算一張圖片底部的左半部分及右半部分的histogram

def find_centroid(image,peak_thresh,window,showMe): ''' find centroid in a window using histogram of hotpixels img:binary image window with specs {'x0','y0','width','height'} (x0,y0) coordinates of bottom-left corner of window return x-position of centroid ,peak intensity and hotpixels_cnt in window ''' #crop image to window dimension mask_window = image[round(window['y0']-window['height']):round(window['y0']), round(window['x0']):round(window['x0']+window['width'])] histogram = np.sum(mask_window,axis=0) centroid = np.argmax(histogram) hotpixels_cnt = np.sum(histogram) peak_intensity = histogram[centroid] if peak_intensity<=peak_thresh: centroid = int(round(window['x0']+window['width']/2)) peak_intensity = 0 else: centroid = int(round(centroid+window['x0'])) if showMe: plt.plot(histogram) plt.title('Histogram') plt.xlabel('horzontal position') plt.ylabel('hot pixels count') plt.show() return (centroid,peak_intensity,hotpixels_cnt) def find_starter_centroids(image,x0,peak_thresh,showMe): ''' find starter centroids using histogram peak_thresh:if peak intensity is below a threshold use histogram on the full height of the image returns x-position of centroid and peak intensity ''' window = {'x0':x0,'y0':image.shape[0],'width':image.shape[1]/2,'height':image.shape[0]/2} # get centroid centroid , peak_intensity,= findcentroid(image,peak_thresh,window,showMe) if peak_intensity<peak_thresh: window['height'] = image.shape[0] centroid,peak_intensity, = findcentroid(image,peak_thresh,window,showMe) return {'centroid':centroid,'intensity':peak_intensity} # if number of histogram pixels in window is below 10,condisder them as noise and does not attempt to get centroid peak_thresh = 10 showMe = 1 centroid_starter_right = find_starter_centroids(warped_image,x0=warped_image.shape[1]/2, peak_thresh=peak_thresh,showMe=showMe) centroid_starter_left = find_starter_centroids(warped_image,x0=0,peak_thresh=peak_thresh, showMe=showMe)

如上圖所示,warped_image右半邊的車道線處對應的histogram有個峰值

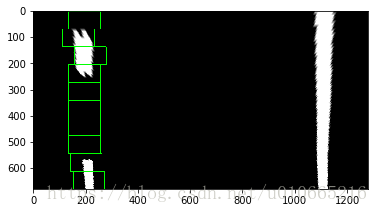

接下來,我們需要通過執行滑動視窗,並記錄hotpixels(≠≠0)的座標。

該視窗的width=120px,height=68px。該視窗從底部開始向上掃描,並記錄hotpixels的座標。

def run_sliding_window(image,centroid_starter,sliding_window_specs,showMe = showMe):

'''

Run sliding window from bottom to top of the image and return indexes of the hotpixels associated with lane

image:binary image

centroid_starter:centroid starting location sliding window

sliding_window_specs:['width','n_steps']

width of sliding window

number of steps of sliding window alog vertical axis

return {'x':[],'y':[]}

coordiantes of all hotpixels detected by sliding window

coordinates of alll centroids recorded but not used yet!

'''

#Initialize sliding window

window = {'x0':centroid_starter-int(sliding_window_specs['width']/2),

'y0':image.shape[0],'width':sliding_window_specs['width'],

'height':round(image.shape[0]/sliding_window_specs['n_steps'])}

hotpixels_log = {'x':[],'y':[]}

centroids_log = []

if showMe:

out_img = (np.dstack((image,image,image))255).astype('uint8')

for step in range(sliding_window_specs['n_steps']):

if window['x0']<0: window['x0'] = 0

if (window['x0']+sliding_window_specs['width'])>image.shape[1]:

window['x0'] = image.shape[1] - sliding_window_specs['width']

centroid,peak_intensity,hotpixels_cnt = find_centroid(image,peak_thresh,window,showMe=0)

if step==0:

starter_centroid = centroid

if hotpixels_cnt/(window['width']window['height'])>0.6:

window['width'] = window['width']2

window['x0'] = round(window['x0']-window['width']/2)

if (window['x0']<0):window['x0']=0

if (window['x0']+window['width'])>image.shape[1]:

window['x0'] = image.shape[1]-window['width']

centroid,peak_intensity,hotpixels_cnt = find_centroid(image,peak_thresh,window,showMe=0)

#if showMe:

#print('peak intensity{}'.format(peak_intensity))

#print('This is centroid:{}'.format(centroid))

mask_window = np.zeros_like(image)

mask_window[window['y0']-window['height']:window['y0'],

window['x0']:window['x0']+window['width']]\

= image[window['y0']-window['height']:window['y0'],

window['x0']:window['x0']+window['width']]

hotpixels = np.nonzero(mask_window)

#print(hotpixels_log['x'])

hotpixels_log['x'].extend(hotpixels[0].tolist())

hotpixels_log['y'].extend(hotpixels[1].tolist())

# update record of centroid

centroids_log.append(centroid)

if showMe:

cv2.rectangle(out_img,

(window['x0'],window['y0']-window['height']),

(window['x0']+window['width'],window['y0']),(0,255,0),2)

if int(window['y0'])==68:

plt.imshow(out_img)

plt.show()

'''

print(window['y0'])

plt.imshow(out_img)

'''

# set next position of window and use standard sliding window width

window['width'] = sliding_window_specs['width']

window['x0'] = round(centroid-window['width']/2)

window['y0'] = window['y0'] - window['height']

return hotpixels_log

sliding_window_specs = {'width':120,'n_steps':10}

log_lineLeft = run_sliding_window(warped_image,centroid_starter_left['centroid'],sliding_window_specs,showMe=showMe)

log_lineRight = run_sliding_window(warped_image,centroid_starter_right['centroid'],sliding_window_specs,showMe=showMe)接下來我們需要利用馬氏距離來去除一些二變數的異常值

DM(x)=(x−μ)TΣ−1(x−μ)−−−−−−−−−−−−−−−−√DM(x)=(x−μ)TΣ−1(x−μ)

def MahalanobisDist(x,y): ''' Mahalanobis Distance for bi-variate distribution ''' covariance_xy = np.cov(x,y,rowvar=0) inv_covariance_xy = np.linalg.inv(covariance_xy) xy_mean = np.mean(x),np.mean(y) x_diff = np.array([x_i-xy_mean[0] for x_i in x]) y_diff = np.array([y_i-xy_mean[1] for y_i in y]) diff_xy = np.transpose([x_diff,y_diff]) md = [] for i in range(len(diff_xy)): md.append(np.sqrt(np.dot(np.dot(np.transpose(diff_xy[i]),inv_covariance_xy),diff_xy[i]))) return md def MD_removeOutliers(x,y,MD_thresh): ''' remove pixels outliers using Mahalonobis distance ''' MD = MahalanobisDist(x,y) threshold = np.mean(MD)MD_thresh nx,ny,outliers = [],[],[] for i in range(len(MD)): if MD[i]<=threshold: nx.append(x[i]) ny.append(y[i]) else: outliers.append(i) return (nx,ny) MD_thresh = 1.8 log_lineLeft['x'],log_lineLeft['y'] = \ MD_removeOutliers(log_lineLeft['x'],log_lineLeft['y'],MD_thresh) log_lineRight['x'],log_lineRight['y'] = \ MD_removeOutliers(log_lineRight['x'],log_lineRight['y'],MD_thresh)

接下來我們用hotpixels來擬合二次多項式,這裡我們採用當前

幀的hotpixels來擬合曲線。如果想更好地擬合直線,我們可以利用過去連續5幀地影象

def update_tracker(tracker,new_value):

'''

update tracker(self.bestfit or self.bestfit_real or radO Curv or hotpixels) with new coeffs

new_coeffs is of the form {'a2':[val2,...],'a1':[va'1,...],'a0':[val0,...]}

tracker is of the form {'a2':[val2,...]}

update tracker of radius of curvature

update allx and ally with hotpixels coordinates from last sliding window

'''

if tracker =='bestfit':

bestfit['a0'].append(new_value['a0'])

bestfit['a1'].append(new_value['a1'])

bestfit['a2'].append(new_value['a2'])

elif tracker == 'bestfit_real':

bestfit_real['a0'].append(new_value['a0'])

bestfit_real['a1'].append(new_value['a1'])

bestfit_real['a2'].append(new_value['a2'])

elif tracker == 'radOfCurvature':

radOfCurv_tracker.append(new_value)

elif tracker == 'hotpixels':

allx.append(new_value['x'])

ally.append(new_value['y'])

buffer_sz = 5

allx = deque([],maxlen=buffer_sz)

ally = deque([],maxlen=buffer_sz)

bestfit = {'a0':deque([],maxlen=buffer_sz),

'a1':deque([],maxlen = buffer_sz),

'a2':deque([],maxlen=buffer_sz)}

bestfit_real = {'a0':deque([],maxlen=buffer_sz),

'a1':deque([],maxlen=buffer_sz),

'a2':deque([],maxlen=buffer_sz)}

radOfCurv_tracker = deque([],maxlen=buffer_sz)

update_tracker('hotpixels',log_lineRight)

update_tracker('hotpixels',log_lineLeft)

multiframe_r = {'x':[val for sublist in allx for val in sublist],

'y':[val for sublist in ally for val in sublist]}

multiframe_l = {'x':[val for sublist in allx for val in sublist],

'y':[val for sublist in ally for val in sublist]}

#fit to polynomial in pixel space

def polynomial_fit(data):

'''

多項式擬合

a0+a1 x+a2 x2

data:dictionary with x and y values{'x':[],'y':[]}

'''

a2,a1,a0 = np.polyfit(data['x'],data['y'],2)

return {'a0':a0,'a1':a1,'a2':a2}

#merters per pixel in y or x dimension

ym_per_pix = 12/450

xm_per_pix = 3.7/911

fit_lineLeft = polynomial_fit(multiframe_l)

fit_lineLeft_real = polynomial_fit({'x':[iym_per_pix for i in multiframe_l['x']],

'y':[ixm_per_pix for i in multiframe_l['y']]})

fit_lineRight = polynomial_fit(multiframe_r)

fit_lineRight_real = polynomial_fit({'x':[iym_per_pix for i in multiframe_r['x']],

'y':[ixm_per_pix for i in multiframe_r['y']]})

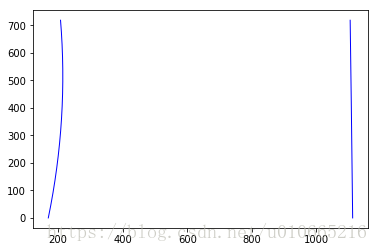

def predict_line(x0,xmax,coeffs): ''' predict road line using polyfit cofficient x vaues are in range (x0,xmax) polyfit coeffs:{'a2':,'a1':,'a2':} returns array of [x,y] predicted points ,x along image vertical / y along image horizontal direction ''' x_pts = np.linspace(x0,xmax-1,num=xmax) pred = coeffs['a2']*x_pts2+coeffs['a1']x_pts+coeffs['a0'] return np.column_stack((x_pts,pred)) fit_lineRight_singleframe = polynomial_fit(log_lineRight) fit_lineLeft_singleframe = polynomial_fit(log_lineLeft) var_pts = np.linspace(0,corr_img.shape[0]-1,num=corr_img.shape[0]) pred_lineLeft_singleframe = predict_line(0,corr_img.shape[0],fit_lineLeft_singleframe) pred_lineRight_sigleframe = predict_line(0,corr_img.shape[0],fit_lineRight_singleframe) plt.plot(pred_lineLeft_singleframe[:,1],pred_lineLeft_singleframe[:,0],'b-',label='singleframe',linewidth=1) plt.plot(pred_lineRight_sigleframe[:,1],pred_lineRight_sigleframe[:,0],'b-',label='singleframe',linewidth=1) plt.show()

曲率及車輛位置估計

這裡我們通過下面的公式來計算車道的曲率

R=(1+(2a2y+a1)2)3/2|2a2|R=(1+(2a2y+a1)2)3/2|2a2|

多項式滿足以下形式:

a2y2+a1y+a0a2y2+a1y+a0

def compute_radOfCurvature(coeffs,pt): return ((1+(2coeffs['a2']pt+coeffs['a1'])2)1.5)/np.absolute(2coeffs['a2']) pt_curvature = corr_img.shape[0] radOfCurv_r = compute_radOfCurvature(fit_lineRight_real,pt_curvatureym_per_pix) radOfCurv_l = compute_radOfCurvature(fit_lineLeft_real,pt_curvatureym_per_pix) average_radCurv = (radOfCurv_r+radOfCurv_l)/2 center_of_lane = (pred_lineLeft_singleframe[:,1][-1]+pred_lineRight_sigleframe[:,1][-1])/2 offset = (corr_img.shape[1]/2 - center_of_lane)xm_per_pix side_pos = 'right' if offset <0: side_pos = 'left' wrap_zero = np.zeros_like(gray_ex).astype(np.uint8) color_wrap = np.dstack((wrap_zero,wrap_zero,wrap_zero)) left_fitx = fit_lineLeft_singleframe['a2']var_pts2 + fit_lineLeft_singleframe['a1']var_pts + fit_lineLeft_singleframe['a0'] right_fitx = fit_lineRight_singleframe['a2']var_pts2 + fit_lineRight_singleframe['a1']*var_pts+fit_lineRight_singleframe['a0'] pts_left = np.array([np.transpose(np.vstack([left_fitx,var_pts]))]) pts_right = np.array([np.flipud(np.transpose(np.vstack([right_fitx,var_pts])))]) pts = np.hstack((pts_left,pts_right)) cv2.fillPoly(color_wrap,np.int([pts]),(0,255,0)) cv2.putText(color_wrap,'|',(int(corr_img.shape[1]/2),corr_img.shape[0]-10),cv2.FONT_HERSHEY_SIMPLEX,2,(0,0,255),8) cv2.putText(color_wrap,'|',(int(center_of_lane),corr_img.shape[0]-10),cv2.FONT_HERSHEY_SIMPLEX,1,(255,0,0),8) newwrap = cv2.warpPerspective(color_wrap,transform_matrix['Minv'],(corr_img.shape[1],corr_img.shape[0])) result = cv2.addWeighted(corr_img,1,newwrap,0.3,0) cv2.putText(result,'Vehicle is' + str(round(offset,3))+'m'+side_pos+'of center', (50,100),cv2.FONT_HERSHEY_SIMPLEX,1,(255,255,255),thickness=2) cv2.putText(result,'Radius of curvature:'+str(round(average_radCurv))+'m',(50,50),cv2.FONT_HERSHEY_SIMPLEX,1,(255,255,255),thickness=2) if showMe: plt.title('Final Result') plt.imshow(result) plt.axis('off') plt.show()

相關推薦

無人駕駛之高階車道線檢測-AdvanceLane_finding_release

本篇部落格整個專案原始碼:github 引言 前面我們介紹車道線檢測的處理方法:車道線檢測之lanelines-detection 在文章末尾,我們分析了該演算法的魯棒性,當時我們提出了一些解決方法,比如說: 角度濾波器:濾除極小銳角或極大鈍角的線段 選取黃色的色

優達學城無人駕駛工程師——P4車道線檢測功能

這次講的是優達學城的無人駕駛工程師的P4專案,利用車前方的攝像頭檢測車道線,下面開始我們的程式碼部分。import numpy as np import cv2 import glob import matplotlib.pyplot as plt import pickle

無人駕駛之車道線檢測簡易版

無人駕駛技術近些年發展迅速。無人車若想實現自動駕駛,從視覺的角度上講其要先學會觀察道路,具體來說,就是檢測車道線。包括識別車道線與車的位置關係,是實線還是虛線等。本文將簡單介紹車道線檢測的基本技術,包括Canny Edges、Hough Transform等

無人駕駛--車道線檢測實戰(附原始碼)

做完專案後寫了個技術小結,供自己回顧也為他人學習提供參考。 另外準備建一個無人駕駛方面的微信交流群,有興趣的朋友可以加我微信:wxl609278502 請註明: 姓名-單位/學校 專案描述: 使用opencv實時處理車載攝像機採集的道路影象

【智慧駕駛】車道線檢測中的新IPM(逆透視變換)演算法實驗效果

1、實驗內容 在車道保持LKA功能實現時,需要對車道線進行精準檢測:①、計算曲率半徑,②、保證測距精度。因此需要對相機的透檢視persImg進行IPM逆透視變換,得到俯檢視birdImg,在birdImg中進行車道線特徵檢測、擬合和測距。 基於以下思路,建立了新的IPM模型:對真實世界座

無人駕駛之車輛檢測與跟蹤

整個專案原始碼:GitHub 整個專案資料集:車輛資料集、無車輛資料集 引言 本次分享主要介紹,如何對道路上的汽車進行識別與跟蹤。這裡我們實現一個簡單的demo。後續我們還會對前面的程式碼及功能進行重構,從而進一步豐富我們的功能。 專案軟體框

車道線檢測之-sobel運算元邊緣檢測原理介紹

sobel運算元是canny邊緣檢測的核心,也是車道線檢測的中心,所以弄清其原理很重要。 要理解sobel邊緣檢測,首先弄清楚一點影象每一點的畫素值是一個和x,y都相關的值,即f(x,y), 任意給定的x和y都可以索引到一個畫素值。 下圖一代表了一個普通的影象,

基於Spatial CNN的車道線檢測和交通場景理解

SCNN車道線檢測--(SCNN)Spatial As Deep: Spatial CNN for Traffic Scene Understanding(論文解讀) Spatial As Deep: Spatial CNN for Traffic Scene Understanding 收

車道線檢測參考學習資料

一、GitHub: https://github.com/ChengZhongShen/Advanced_Lane_Lines https://github.com/MaybeShewill-CV/lanenet-lane-detection https://github.com/k

車道線檢測最全資料集錦

Summary:GitHub:車道線檢測最全資料集錦 Author:Amusi Date:2018-12-27 微信公眾號:CVer github:amusi/awesome-lane-detection 原文連結:GitHub:車道線檢測最全資料集錦 知乎:https://zh

基於python的車道線檢測

最近在開源社群下載了一份使用opencv在python環境中實現車道線檢測的程式碼,研究了一下,終於有點兒看懂了,尋思著寫下來,免得以後忘記了。 這個車道線檢測專案原本是優達學城裡無人駕駛課程中的第一個上手的專案,原始碼應該是一個外國人寫的吧,反正大家傳來傳去

卷積神經網路CNN(8)—— Pix2Pix Application -- Aerialmap Lane Line Detection (Pix2Pix應用:航拍圖車道線檢測)

前言 GAN(Generative Adversarial Networks–https://arxiv.org/abs/1406.2661)自問世而來熱度一直無法退減,生成網路G與判別網路D通過相互對抗(原作者比喻成欺騙)訓練網路,最終得到可以生成以假亂真圖

無人駕駛之測試資料集

目前,全球主流的自動駕駛測試資料集包括Cityscapes、Imagenet(ILSVRC)、COCO、PASCAL VOC、CIFAR、MNIST、KITTI、LFW等。 1.Cityscapes Cityscapes是由賓士於2015年推出的,提供無人駕駛環境下的影象

車道線檢測

專案描述:使用openCV設計演算法處理車輛前置攝像頭錄下的視訊,檢測車輛前方的車道,並計算車道曲率專案程式碼GitHub地址:https://github.com/yang1688899/CarND-Advanced-Lane-Lines這是在幾張測試圖片上的處理結果:實現

車道線檢測演算法

1.高斯模糊演算法 blur_gray = cv2.GaussianBlur(gray,(kernel_size,kernel_size),0) Mathematically, applying a Gaussian blur to an image i

車道線檢測方法總結

車道線檢測,我分別嘗試了傳統方案雙車道線直線檢測,以及SCNN,VPGNET,Lanenet等多車道線曲線檢測。今天就閱讀Lanenet寫讀後感,有理解誤差的地方望批評指正。傳統方案的好處在於速度快,缺點在於目前只適用於直線檢測 ,曲線檢測效果不好;SCNN基於框架torch

車道線檢測資源

pat ext .net tar .com projects mar class tex 數據集 CULane Dataset https://xingangpan.github.io/projects/CULane.html BDD100K https://bdd

車道線檢測LaneNet

# LaneNet - LanNet - Segmentation branch 完成語義分割,即判斷出畫素屬於車道or背景 - Embedding branch 完成畫素的向量表示,用於後續聚類,以完成例項分割 - H-Net ## Segmentation branch 解決樣本分佈不

Lane-Detection 近期車道線檢測論文閱讀總結

近期閱讀的幾篇關於車道線檢測的論文總結。 ## 1. 車道線檢測任務需求分析 ### 1.1 問題分析 針對車道線檢測任務,需要明確的問題包括: (1)如何對車道線建模,即用什麼方式來表示車道線。 從應用的角度來說,最終需要的是車道線在世界座標系下的方程。而神經網路更適合提取影象層面的特徵,直接回歸

手把手教用matlab做無人駕駛(九)--專案1:使用單目相機檢測車道線

現在介紹一個專案,這個專案跟優達學城的課程有點像,可以學習一下.。 不管是含有ADAS功能的車還是依賴於多種感測器設計的自動駕駛汽車,它們需要的感測器都包括超聲波、雷達、鐳射雷達和攝像頭。接下來的專案闡述的是用單目相機實現自動駕駛過程中一部分內容,實現的內容如下: 1