Phoenix in Three Posts, Part 3: Integrating Phoenix with Hive

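0. Preparation: a pseudo-distributed HBase setup (a brief walkthrough)

Pseudo-distributed HBase installation (three processes on one host stand in for a cluster)

The version used here is 1.2.6.

For which HBase versions work with which Hadoop versions, see the official documentation.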

tar -zxvf hbase-1.2.6-bin.tar.gz -C /opt/soft/
cd /opt/soft/hbase-1.2.6/
vim conf/hbase-env.sh
#Add export JAVA_HOME=/usr/local/jdk1.8 inside it (or run source /etc/profile)
#Configure HBase
mkdir /opt/soft/hbase-1.2.6/data
vim conf/hbase-site.xml
#####Entries added to hbase-site.xml#####
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://yyhhdfs/hbase</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/opt/soft/hbase-1.2.6/data/zookeeper</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
</configuration>
#Write localhost into the regionservers file
echo "localhost" > conf/regionservers
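The three hbase-site.xml entries above can also be generated and read back programmatically, which helps when the same settings have to be repeated on several hosts. The following is only a minimal sketch: the function names render_site_xml and parse_site_xml are made up for this example, and the property values are the ones shown above.

```python
import xml.etree.ElementTree as ET

# Property values copied from the hbase-site.xml entries shown above.
HBASE_SITE = {
    "hbase.rootdir": "hdfs://yyhhdfs/hbase",
    "hbase.zookeeper.property.dataDir": "/opt/soft/hbase-1.2.6/data/zookeeper",
    "hbase.cluster.distributed": "true",
}


def render_site_xml(props):
    """Return a Hadoop-style <configuration> document as a string."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


def parse_site_xml(text):
    """Read the name/value pairs back out of a site file."""
    root = ET.fromstring(text)
    return {p.findtext("name"): p.findtext("value")
            for p in root.findall("property")}
```

Calling print(render_site_xml(HBASE_SITE)) emits the document on one line; parse_site_xml can be used to check an existing file against the expected values.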

Start HBase

[root@yyh4 ~]# /opt/soft/hbase-1.2.6/bin/hbase-daemon.sh start zookeeper

starting zookeeper, logging to /opt/soft/hbase-1.2.6/logs/hbase-root-zookeeper-yyh4.out

[root@yyh4 ~]# /opt/soft/hbase-1.2.6/bin/hbase-daemon.sh start master

starting master, logging to /opt/soft/hbase-1.2.6/logs/hbase-root-master-yyh4.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Once the region server has been started the same way (hbase-daemon.sh start regionserver; its output is not captured here), three new processes are running: HQuorumPeer, HRegionServer and HMaster.

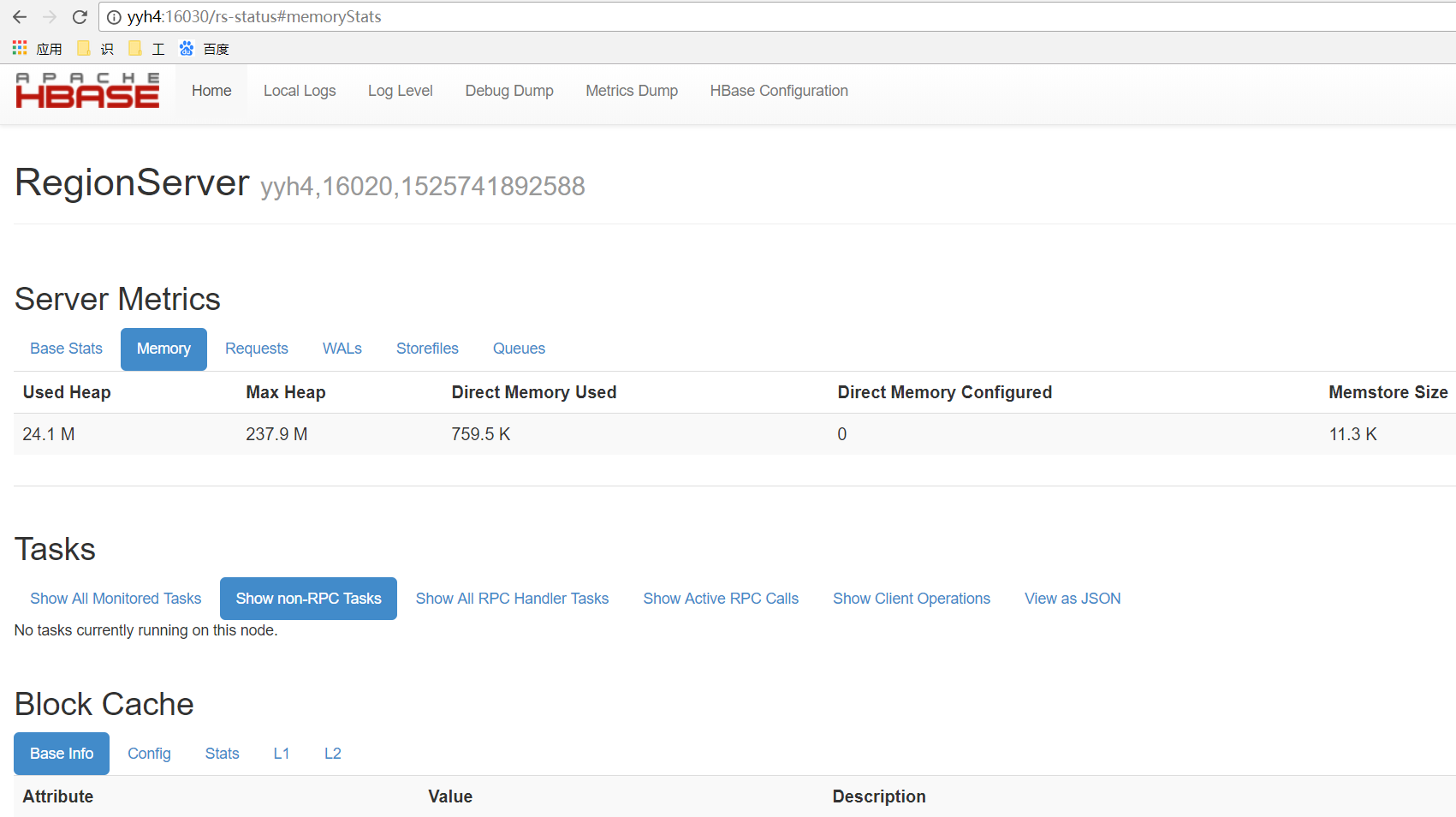

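The HBase web UI is available at http://yyh4:16030/ (16030 is the default RegionServer info port; the Master UI defaults to port 16010).

The pseudo-distributed HBase cluster is now set up.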
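Checking that all three processes are up is easy to script. The sketch below assumes the process list comes from the JDK's jps tool, which prints one "<pid> <main class>" pair per line; the function name missing_daemons and the PIDs in the sample are invented for illustration.

```python
# Process names the pseudo-distributed setup is expected to show.
EXPECTED = {"HQuorumPeer", "HMaster", "HRegionServer"}


def missing_daemons(jps_output, expected=EXPECTED):
    """Return the expected process names that do not appear in jps output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:  # "<pid> <main class>"
            running.add(parts[1])
    return sorted(set(expected) - running)


# Hypothetical jps output in which the region server was not started.
SAMPLE = """\
2101 HQuorumPeer
2188 HMaster
2333 Jps
"""
```

With the sample above, missing_daemons(SAMPLE) reports that HRegionServer is absent, which is the hint to run hbase-daemon.sh start regionserver.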

1. Installation and deployment

1.1 Installing the prebuilt Phoenix

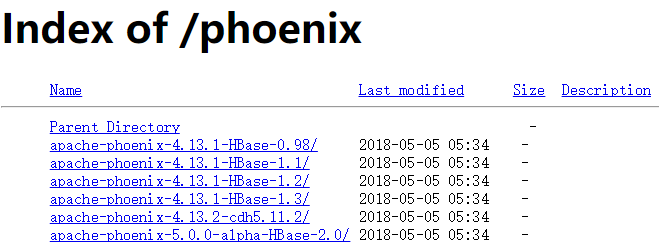

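1. Download and unpack the latest phoenix-[version]-bin.tar package.

Download location: http://apache.fayea.com/phoenix/apache-phoenix-4.13.1-HBase-1.2/bin/

Reason for this choice: HBase here is 1.2.6, so the Phoenix 4.13.1 build for HBase 1.2 is used.

2. Copy phoenix-[version]-server.jar into the HBase lib directory on every region server and on the master.

3. Restart HBase.

4. Add phoenix-[version]-client.jar to the classpath of every Phoenix client.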
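The version choice in step 1 follows a simple rule: the HBase-x.y suffix in the Phoenix archive name should equal the major.minor part of the installed HBase version. A small sketch of that check, with a hypothetical helper name (phoenix_build_matches) and relying only on the file-name convention visible in the download URL above:

```python
import re


def phoenix_build_matches(archive_name, hbase_version):
    """True if the HBase-<major>.<minor> suffix of a Phoenix archive name
    equals the major.minor of the installed HBase version."""
    m = re.search(r"HBase-(\d+)\.(\d+)", archive_name)
    if m is None:
        raise ValueError("no HBase-x.y suffix in %r" % archive_name)
    major_minor = hbase_version.split(".")[:2]
    return [m.group(1), m.group(2)] == major_minor
```

For example, phoenix_build_matches("apache-phoenix-4.13.1-HBase-1.2-bin.tar.gz", "1.2.6") is true, while the same archive checked against HBase 1.3.1 is not.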

#Copy phoenix-[version]-server.jar into HBase's lib directory and make phoenix-[version]-client.jar available on the classpath

[root@yyh4 /]# cd /opt/soft

[root@yyh4 soft]# tar -zxvf apache-phoenix-4.13.1-HBase-1.2-bin.tar.gz

[root@yyh4 soft]# cp apache-phoenix-4.13.1-HBase-1.2-bin/phoenix-4.13.1-HBase-1.2-server.jar hbase-1.2.6/lib/

[root@yyh4 soft]# cp apache-phoenix-4.13.1-HBase-1.2-bin/phoenix-4.13.1-HBase-1.2-client.jar hbase-1.2.6/lib/

2. Using Phoenix

To run interactive SQL statements from the command line:

Prerequisites: python, plus yum install python-argparse

From the phoenix-{version}/bin directory, start the command-line mode:

$ /opt/soft/apache-phoenix-4.13.1-HBase-1.2-bin/bin/sqlline.py localhost

To leave the command line, run !quit:

0: jdbc:phoenix:localhost> !quit

Other common commands are listed by !help:

0: jdbc:phoenix:localhost> !help

!all Execute the specified SQL against all the current connections

!autocommit Set autocommit mode on or off

!batch Start or execute a batch of statements

!brief Set verbose mode off

!call Execute a callable statement

!close Close the current connection to the database

!closeall Close all current open connections

!columns List all the columns for the specified table

!commit Commit the current transaction (if autocommit is off)

!connect Open a new connection to the database.

!dbinfo Give metadata information about the database

!describe Describe a table

!dropall Drop all tables in the current database

!exportedkeys List all the exported keys for the specified table

!go Select the current connection

!help Print a summary of command usage

!history Display the command history

!importedkeys List all the imported keys for the specified table

!indexes List all the indexes for the specified table

!isolation Set the transaction isolation for this connection

!list List the current connections

!manual Display the SQLLine manual

!metadata Obtain metadata information

!nativesql Show the native SQL for the specified statement

!outputformat Set the output format for displaying results

(table,vertical,csv,tsv,xmlattrs,xmlelements)

!primarykeys List all the primary keys for the specified table

!procedures List all the procedures

!properties Connect to the database specified in the properties file(s)

!quit Exits the program

!reconnect Reconnect to the database

!record Record all output to the specified file

!rehash Fetch table and column names for command completion

!rollback Roll back the current transaction (if autocommit is off)

!run Run a script from the specified file

!save Save the current variabes and aliases

!scan Scan for installed JDBC drivers

!script Start saving a script to a file

!set Set a sqlline variable

!sql Execute a SQL command

!tables List all the tables in the database

!typeinfo Display the type map for the current connection

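!verbose Set verbose mode on

To run a SQL script from the command line:

$ sqlline.py localhost ../examples/stock_symbol.sql

Testing Phoenix:

#Create a table

CREATE TABLE yyh ( pk VARCHAR PRIMARY KEY,val VARCHAR );

#Insert or update rows

upsert into yyh values ('1','Helldgfho');

#Query the table

select * from yyh;

#Drop a table (if it was created with CREATE TABLE, the Phoenix table and its HBase data are both deleted; if it was created as a VIEW, the HBase data is kept)

drop table test;

3. Hive and Phoenix integration: the Phoenix Storage Handler for Hive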

The Apache Phoenix Storage Handler is a plugin that lets Apache Hive access Phoenix tables from the Hive command line using HiveQL.

Hive setup

Make phoenix-{version}-hive.jar available to Hive:

#On the Phoenix node:

[root@yyh4 apache-phoenix-4.13.1-HBase-1.2-bin]# scp phoenix-4.13.1-HBase-1.2-hive.jar yyh3:/opt/soft/apache-hive-1.2.1-bin/lib

#Step 1: on the hive-server node, edit hive-env.sh (not needed here, because the jar is already in Hive's lib directory)

#HIVE_AUX_JARS_PATH=<path to jar>

#Step 2: add the property to hive-site.xml (also not needed here) so that Hive MapReduce jobs can use the jar:

#<property>

# <name>hive.aux.jars.path</name>

# <value>file://<path></value>

#</property>

Creating and dropping tables

The Phoenix Storage Handler supports both INTERNAL and EXTERNAL Hive tables.

Creating an INTERNAL table

For an INTERNAL table, Hive manages the lifecycle of both the table and its data. Creating the Hive table also creates the corresponding Phoenix table, and dropping the Hive table drops the Phoenix table as well.

create table phoenix_yyh (
  s1 string,
  i1 int,
  f1 float,
  d1 double
)
STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'
TBLPROPERTIES (
  "phoenix.table.name" = "phoenix_yyh",
  "phoenix.zookeeper.quorum" = "yyh4",
  "phoenix.zookeeper.znode.parent" = "/hbase",
  "phoenix.zookeeper.client.port" = "2181",
  "phoenix.rowkeys" = "s1, i1",
  "phoenix.column.mapping" = "s1:s1, i1:i1, f1:f1, d1:d1",
  "phoenix.table.options" = "SALT_BUCKETS=10, DATA_BLOCK_ENCODING='DIFF'"
);

Creating an EXTERNAL table

For an EXTERNAL table, Hive works with an existing Phoenix table and manages only the Hive metadata. Dropping an EXTERNAL table from Hive removes the Hive metadata but leaves the Phoenix table in place.

create external table ayyh (
  pk string,
  value string
)
STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'
TBLPROPERTIES (
  "phoenix.table.name" = "yyh",
  "phoenix.zookeeper.quorum" = "yyh4",
  "phoenix.zookeeper.znode.parent" = "/hbase",
  "phoenix.column.mapping" = "pk:PK,value:VAL",
  "phoenix.rowkeys" = "pk",
  "phoenix.table.options" = "SALT_BUCKETS=10, DATA_BLOCK_ENCODING='DIFF'"
);
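Because the DDL is mostly a fixed template around a column list and a property map, it can be rendered from data instead of being typed by hand each time. This is a rough sketch rather than anything Phoenix or Hive ships: render_external_table is a hypothetical helper, and only the storage handler class name and the property keys come from the statements above.

```python
def render_external_table(hive_table, columns, props):
    """Build a CREATE EXTERNAL TABLE statement for the Phoenix storage handler.

    columns: list of (name, hive_type) pairs
    props:   dict of TBLPROPERTIES entries, emitted in insertion order
    """
    cols = ",\n".join("  %s %s" % (name, hive_type) for name, hive_type in columns)
    tbl = ",\n".join('  "%s" = "%s"' % (key, value) for key, value in props.items())
    return (
        "create external table %s (\n%s\n)\n"
        "STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'\n"
        "TBLPROPERTIES (\n%s\n);" % (hive_table, cols, tbl)
    )


# Reproduces the shape of the ayyh example with two of its properties.
ddl = render_external_table(
    "ayyh",
    [("pk", "string"), ("value", "string")],
    {"phoenix.table.name": "yyh", "phoenix.rowkeys": "pk"},
)
```

The resulting string can be written to a .sql file and passed to beeline with -f.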

Properties

- phoenix.table.name
  - Specifies the Phoenix table name
  - Default: the same as the Hive table
- phoenix.zookeeper.quorum
  - Specifies the ZooKeeper quorum for HBase
  - Default: localhost
- phoenix.zookeeper.znode.parent
  - Specifies the ZooKeeper parent node for HBase
  - Default: /hbase
- phoenix.zookeeper.client.port
  - Specifies the ZooKeeper port
  - Default: 2181
- phoenix.rowkeys
  - The list of columns that form the primary key in the Phoenix table
  - Required
- phoenix.column.mapping
  - Mappings between column names for Hive and Phoenix. See Limitations for details.
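The defaults in the list above can be summarized as a small resolution step: start from the documented defaults, take the Hive table name as the Phoenix table name, then apply whatever the user set, and reject a definition without phoenix.rowkeys. The sketch below only mirrors that list; resolve_properties is an illustrative name, not part of the storage handler.

```python
# Defaults as documented in the property list above.
DEFAULTS = {
    "phoenix.zookeeper.quorum": "localhost",
    "phoenix.zookeeper.znode.parent": "/hbase",
    "phoenix.zookeeper.client.port": "2181",
}


def resolve_properties(hive_table, props):
    """Fill in the documented defaults and check the one required key."""
    if not props.get("phoenix.rowkeys"):
        raise ValueError("phoenix.rowkeys is required")
    resolved = dict(DEFAULTS)
    resolved["phoenix.table.name"] = hive_table  # default: same as the Hive table
    resolved.update(props)                       # explicit settings win
    return resolved
```

For the ayyh example, passing only phoenix.table.name, phoenix.zookeeper.quorum and phoenix.rowkeys would leave the port at 2181 and the parent znode at /hbase.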

Data ingestion, deletions, and updates

Data ingestion can be done in every way that Hive and Phoenix support:

Hive:

insert into table T values (....);
insert into table T select c1,c2,c3 from source_table;

Phoenix:

upsert into T values (.....);

Phoenix CSV BulkLoad tools

All delete and update operations should be performed on the Phoenix side. See Limitations for more details.

Additional configuration options

These options can be set in the Hive command-line interface (CLI) environment.

Performance tuning

| Parameter | Default Value | Description |
|---|---|---|
| phoenix.upsert.batch.size | 1000 | Batch size for upsert |
| [phoenix-table-name].disable.wal | false | Temporarily sets the table attribute DISABLE_WAL to true. Sometimes used to improve performance |
| [phoenix-table-name].auto.flush | false | When WAL is disabled and this value is true, the MemStore is flushed to an HFile |
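To make the meaning of a batch size concrete: with the default of 1000, 2500 rows would be sent as three groups of 1000, 1000 and 500. The generator below is a generic illustration of that chunking and makes no claim about how the storage handler implements it internally; the name batches is made up.

```python
def batches(rows, size=1000):
    """Yield consecutive slices of rows, each at most size long."""
    if size < 1:
        raise ValueError("size must be positive")
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


# Sizes of the groups produced for 2500 rows at the default batch size.
sizes = [len(chunk) for chunk in batches(list(range(2500)))]
```

A larger batch size means fewer round trips but more rows held in memory before each commit.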

Querying data

You can use HiveQL for querying data in a Phoenix table. A Hive query on a single table can be as fast as running the query in the Phoenix CLI with the following property settings: hive.fetch.task.conversion=more and hive.exec.parallel=true

| Parameter | Default Value | Description |
|---|---|---|
| hbase.scan.cache | 100 | Number of rows read per unit request |
| hbase.scan.cacheblock | false | Whether to cache blocks |
| split.by.stats | false | If true, mappers use table statistics. One mapper per guide post. |
| [hive-table-name].reducer.count | 1 | Number of reducers. In Tez mode, this affects only single-table queries. See Limitations. |
| [phoenix-table-name].query.hint | | Hint for Phoenix query (for example, NO_INDEX) |
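One way to apply such settings for a single run is to pass them to beeline with its --hiveconf option. The sketch below only assembles the argument list (it does not start beeline); beeline_args is a hypothetical helper, and the JDBC URL and query in the usage note are taken from other parts of this post.

```python
def beeline_args(jdbc_url, query, settings):
    """Assemble a beeline command line that applies Hive settings for one query."""
    args = ["beeline", "-u", jdbc_url]
    for key, value in settings.items():
        args += ["--hiveconf", "%s=%s" % (key, value)]
    args += ["-e", query]
    return args


# The two settings mentioned above for fast single-table queries.
FAST_SINGLE_TABLE = {
    "hive.fetch.task.conversion": "more",
    "hive.exec.parallel": "true",
}
```

For instance, beeline_args("jdbc:hive2://yyh3:10000", "select * from ayyh limit 3", FAST_SINGLE_TABLE) yields a list that can be handed to subprocess.run.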

Limitations

- Hive update and delete operations require transaction manager support on both the Hive and Phoenix sides. Related Hive and Phoenix JIRAs are listed in the Resources section.

- Column mapping does not work correctly with mapping row key columns.

- MapReduce and Tez jobs always have a single reducer.

Resources

- PHOENIX-2743: Implementation, accepted by the Apache Phoenix community. The original pull request contains modifications for Hive classes.

- PHOENIX-331: An outdated implementation with support for Hive 0.98.

4. The whole integration, step by step

#Note: working this way, especially with explicit handling of upper and lower case, behaves consistently across all the components

#####Test on the virtual-machine cluster#####

#hbase shell

#HBase node: 2 columns, 3 cells

create 'YINGGDD','info'
put 'YINGGDD', 'row021','info:name','phoenix'
put 'YINGGDD', 'row012','info:name','hbase'
put 'YINGGDD', 'row012','info:sname','shbase'

#Phoenix node

#/opt/soft/apache-phoenix-4.13.1-HBase-1.2-bin/bin/sqlline.py yyh4

create table "YINGGDD" ("id" varchar primary key, "info"."name" varchar, "info"."sname" varchar);

select * from "YINGGDD";
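The case handling matters because Phoenix upper-cases identifiers that are not double-quoted and keeps quoted ones exactly as written. That is why the unquoted columns pk and val of the yyh table appear as PK and VAL in the Hive column mapping, while the quoted "id", "name" and "sname" here stay lower case. A tiny sketch of the rule, with a made-up function name:

```python
def phoenix_identifier(name):
    """Return the name Phoenix stores for an identifier as written in SQL.

    Double-quoted identifiers keep their case; unquoted ones are upper-cased.
    """
    if len(name) >= 2 and name.startswith('"') and name.endswith('"'):
        return name[1:-1]
    return name.upper()
```

When writing phoenix.column.mapping, the Phoenix side of each pair should be the stored name, that is, the value this function returns for the identifier used in the Phoenix DDL.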

#Hive node

#beeline -u jdbc:hive2://yyh3:10000

create external table yingggy (
  id string,
  name string,
  sname string
)
STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'
TBLPROPERTIES (
  "phoenix.table.name" = "YINGGDD",
  "phoenix.zookeeper.quorum" = "yyh4",
  "phoenix.zookeeper.znode.parent" = "/hbase",
  "phoenix.column.mapping" = "id:id,name:name,sname:sname",
  "phoenix.rowkeys" = "id",
  "phoenix.table.options" = "SALT_BUCKETS=10, DATA_BLOCK_ENCODING='DIFF'"
);

select * from yingggy;

############Test on the company's internal cluster###############

#Hive on host .111, Phoenix on host .113

#Phoenix table

beeline -u jdbc:hive2://192.168.1.111:10000/dm -f /usr/local/apache-hive-2.3.2-bin/bin/yyh.sql

add jar /usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.9.0-cdh5.9.1-hive.jar;

add jar /usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.9.0-HBase-1.2-hive.jar;

add jar /usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.9.0-HBase-1.2-client.jar;

#Driver class: org.apache.phoenix.jdbc.PhoenixDriver (inside phoenix-4.9.0-HBase-1.2-hive.jar)

create external table ayyh (
  pk string,
  value string
)
STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'
TBLPROPERTIES (
  "phoenix.table.name" = "yyh",
  "phoenix.zookeeper.quorum" = "192.168.1.112",
  "phoenix.zookeeper.znode.parent" = "/hbase",
  "phoenix.column.mapping" = "pk:PK,value:VAL",
  "phoenix.rowkeys" = "pk",
  "phoenix.table.options" = "SALT_BUCKETS=10, DATA_BLOCK_ENCODING='DIFF'"
);

#Host .113 acts as the Hive client and creates the table

cd ~

beeline -u jdbc:hive2://192.168.1.111:10000/dm -n hive -f yyha.sql

#Log in to Hive from host .117 and check the table

beeline -u jdbc:hive2://192.168.1.111:10000/dm -e "select * from ayyh limit 3"

5. Pitfalls so far

5.1 Storage handler class not found

Error: Error while compiling statement: FAILED: SemanticException Cannot find class 'org.apache.phoenix.hive.PhoenixStorageHandler' (state=42000,code=40000)

##Analysis: the jar is missing

#Loading it with add jar resolves this error

5.2 No suitable driver found for jdbc:phoenix

#So, after entering beeline, use add jar (on the company cluster, frequent restarts are not allowed):

0: jdbc:hive2://yyh3:10000> add jar /opt/soft/apache-hive-1.2.1-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar;

INFO : Added [/opt/soft/apache-hive-1.2.1-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar] to class path

INFO : Added resources: [/opt/soft/apache-hive-1.2.1-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar]

A new error then appears:

Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:No suitable driver found for jdbc:phoenix:yyh4:2181:/hbase;) (state=08S01,code=1)

This problem actually showed up while testing on the company cluster. To keep the structure clear, that session is listed separately below.

$ beeline -u jdbc:hive2://192.168.1.111:10000/dm

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-2.3.2-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.9.0-cdh5.9.1-hive.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-5.9.0-1.cdh5.9.0.p0.23/jars/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://192.168.1.111:10000/dm

Connected to: Apache Hive (version 2.3.2)

Driver: Hive JDBC (version 2.3.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.3.2 by Apache Hive

0: jdbc:hive2://192.168.1.111:10000/dm> add jar /usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar

. . . . . . . . . . . . . . . . . . . > ;

Error: Error while processing statement: /usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar does not exist (state=,code=1)

0: jdbc:hive2://192.168.1.111:10000/dm> add jar /usr/local/apache-hive-2.3.2-bin/lib/phoenix-4.13.1-HBase-1.2-hive.jar;

No rows affected (0.051 seconds)

0: jdbc:hive2://192.168.1.111:10000/dm> create external table ayyh

. . . . . . . . . . . . . . . . . . . > (pk string,

. . . . . . . . . . . . . . . . . . . > value string)

. . . . . . . . . . . . . . . . . . . > STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'

. . . . . . . . . . . . . . . . . . . > TBLPROPERTIES (

. . . . . . . . . . . . . . . . . . . > "phoenix.table.name" = "yyh",

. . . . . . . . . . . . . . . . . . . > "phoenix.zookeeper.quorum" = "192.168.1.113:2181",

. . . . . . . . . . . . . . . . . . . > "phoenix.zookeeper.znode.parent" = "/hbase",

. . . . . . . . . . . . . . . . . . . > "phoenix.column.mapping" = "pk:PK,value:VAL",

. . . . . . . . . . . . . . . . . . . > "phoenix.rowkeys" = "pk",

. . . . . . . . . . . . . . . . . . . > "phoenix.table.options" = "SALT_BUCKETS=10, DATA_BLOCK_ENCODING='DIFF'"

. . . . . . . . . . . . . . . . . . . > );

Error: org.apache.hive.service.cli.HiveSQLException: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:No suitable driver found for jdbc:phoenix:192.168.1.113:2181:2181:/hbase;)

at org.apache.hive.service.cli.operation.Operation.toSQLException(Operation.java:380)

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:257)

at org.apache.hive.service.cli.operation.SQLOperation.access$800(SQLOperation.java:91)

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:348)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:362)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:No suitable driver found for jdbc:phoenix:192.168.1.113:2181:2181:/hbase;)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:862)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:867)

at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:4356)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:354)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:100)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2183)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1839)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1526)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1232)

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:255)

... 11 more

Caused by: MetaException(message:No suitable driver found for jdbc:phoenix:192.168.1.113:2181:2181:/hbase;)

at org.apache.phoenix.hive.PhoenixMetaHook.preCreateTable(PhoenixMetaHook.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:747)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:740)

at sun.reflect.GeneratedMethodAccessor74.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:173)

at com.sun.proxy.$Proxy34.createTable(Unknown Source)

at sun.reflect.GeneratedMethodAccessor74.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2330)

at com.sun.proxy.$Proxy34.createTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:852)

... 22 more (state=08S01,code=1)

0: jdbc:hive2://192.168.1.111:10000/dm> Closing: 0: jdbc:hive2://192.168.1.111:10000/dm

You have new mail in /var/spool/mail/import

The error above occurs because add jar does not load the JDBC driver.

The fix is to copy the jar into Hive's lib directory and restart Hive.
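A side observation from the session above: the quorum was given as 192.168.1.113:2181, and the URL in the error message shows the port twice (2181:2181). The sketch below reproduces that effect under the assumption that the handler joins quorum, port and parent znode with colons; this is inferred from the error text, not taken from the Phoenix source, and both function names are made up.

```python
def phoenix_jdbc_url(quorum, port="2181", znode="/hbase"):
    """Join quorum, port and znode the way the error message suggests."""
    return "jdbc:phoenix:%s:%s:%s" % (quorum, port, znode)


def strip_port(quorum):
    """Drop a trailing :port from every host in a comma-separated quorum."""
    return ",".join(host.split(":")[0] for host in quorum.split(","))


doubled = phoenix_jdbc_url("192.168.1.113:2181")
clean = phoenix_jdbc_url(strip_port("192.168.1.113:2181"))
```

The doubled port is a separate issue from the missing driver, since the same "No suitable driver" error also appears with the port-free quorum yyh4. Giving only host names in phoenix.zookeeper.quorum and leaving the port to phoenix.zookeeper.client.port avoids it.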

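5.3 java.lang.NoSuchMethodError: org.apache.hadoop.hbase.client.HBaseAdmin

After adding the jar and restarting Hive, a new error appeared. A version mismatch is suspected: the error occurs on the company cluster but not on the virtual machines, and it is still being investigated.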

0: jdbc:hive2://192.168.1.111:10000/dm> create external table ayyh

. . . . . . . . . . . . . . . . . . . > (pk string,

. . . . . . . . . . . . . . . . . . . > value string)

. . . . . . . . . . . . . . . . . . . > STORED BY 'org.apache.phoenix.hive.PhoenixStorageHandler'

. . . . . . . . . . . . . . . . . . . > TBLPROPERTIES (

. . . . . . . . . . . . . . . . . . . > "phoenix.table.name" = "yyh",

. . . . . . . . . . . . . . . . . . . > "phoenix.zookeeper.quorum" = "192.168.1.112",

. . . . . . . . . . . . . . . . . . . > "phoenix.zookeeper.znode.parent" = "/hbase",

. . . . . . . . . . . . . . . . . . . > "phoenix.column.mapping" = "pk:PK,value:VAL",

. . . . . . . . . . . . . . . . . . . > "phoenix.rowkeys" = "pk",

. . . . . . . . . . . . . . . . . . . > "phoenix.table.options" = "SALT_BUCKETS=10, DATA_BLOCK_ENCODING='DIFF'"

. . . . . . . . . . . . . . . . . . . > );

Error: org.apache.hive.service.cli.HiveSQLException: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. org.apache.hadoop.hbase.client.HBaseAdmin.<init>(Lorg/apache/hadoop/hbase/client/HConnection;)V

at org.apache.hive.service.cli.operation.Operation.toSQLException(Operation.java:380)

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:257)

at org.apache.hive.service.cli.operation.SQLOperation.access$800(SQLOperation.java:91)

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:348)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1714)

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:362)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.hbase.client.HBaseAdmin.<init>(Lorg/apache/hadoop/hbase/client/HConnection;)V

at org.apache.phoenix.query.ConnectionQueryServicesImpl.getAdmin(ConnectionQueryServicesImpl.java:3282)

at org.apache.phoenix.query.ConnectionQueryServicesImpl$13.call(ConnectionQueryServicesImpl.java:2377)

at org.apache.phoenix.query.ConnectionQueryServicesImpl$13.call(ConnectionQueryServicesImpl.java:2352)

at org.apache.phoenix.util.PhoenixContextExecutor.call(PhoenixContextExecutor.java:76)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.init(ConnectionQueryServicesImpl.java:2352)

at org.apache.phoenix.jdbc.PhoenixDriver.getConnectionQueryServices(PhoenixDriver.java:232)

at org.apache.phoenix.jdbc.PhoenixEmbeddedDriver.createConnection(PhoenixEmbeddedDriver.java:147)

at org.apache.phoenix.jdbc.PhoenixDriver.connect(PhoenixDriver.java:202)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:270)

at org.apache.phoenix.hive.util.PhoenixConnectionUtil.getConnection(PhoenixConnectionUtil.java:81)

at org.apache.phoenix.hive.PhoenixMetaHook.preCreateTable(PhoenixMetaHook.java:55)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:747)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:740)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:173)

at com.sun.proxy.$Proxy34.createTable(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2330)

at com.sun.proxy.$Proxy34.createTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:852)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:867)

at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:4356)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:354)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:100)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2183)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1839)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1526)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1232)

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:255)

... 11 more (state=08S01,code=1)
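One thing worth checking while investigating: the SLF4J output in section 5.2 lists two Phoenix hive jars of different versions in Hive's lib directory (4.13.1-HBase-1.2 and 4.9.0-cdh5.9.1). Mixed versions on one classpath are a common source of NoSuchMethodError, although this post does not confirm that as the cause here. A small sketch that groups Phoenix jar names by version; the regular expression and the function name are assumptions based only on the file names seen in this post.

```python
import re
from collections import defaultdict

# phoenix-<version>-<build>-<kind>.jar, e.g. phoenix-4.13.1-HBase-1.2-hive.jar
JAR = re.compile(r"^phoenix-(\d+(?:\.\d+)*)-(.+)-(hive|client|server)\.jar$")


def phoenix_versions(jar_names):
    """Group Phoenix jar file names by the version embedded in the name."""
    found = defaultdict(list)
    for name in jar_names:
        m = JAR.match(name)
        if m:
            found[m.group(1)].append(name)
    return dict(found)


# File names taken from the SLF4J messages in section 5.2.
LIB = [
    "log4j-slf4j-impl-2.6.2.jar",
    "phoenix-4.13.1-HBase-1.2-hive.jar",
    "phoenix-4.9.0-cdh5.9.1-hive.jar",
]
```

If phoenix_versions returns more than one key for a lib directory, keeping only the jar that matches the cluster's HBase build is a reasonable first step before restarting Hive.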

Official Phoenix website (English)

Home page: http://phoenix.apache.org/index.html

Hive integration: http://phoenix.apache.org/hive_storage_handler.html
