ROS turtlebot_follower: Making the Robot Follow Us

阿新 • Published: 2019-01-25

Learning ROS turtlebot_follower

First, download the source code into the catkin_ws/src directory. Repository: https://github.com/turtlebot/turtlebot_apps.git

The comments in the code explain how it works (there are a few parts I don't fully understand myself).

follower.cpp

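```cpp
#include <ros/ros.h>                        // roscpp core API
#include <pluginlib/class_list_macros.h>    // PLUGINLIB_EXPORT_CLASS, used to export the nodelet
#include <nodelet/nodelet.h>                // nodelet::Nodelet base class
#include <geometry_msgs/Twist.h>            // velocity commands sent to the base
#include <sensor_msgs/Image.h>              // image messages (the depth input)
#include <visualization_msgs/Marker.h>      // centroid and bounding-box markers shown in rviz
#include <turtlebot_msgs/SetFollowState.h>  // service type for switching following on/off
#include "dynamic_reconfigure/server.h"     // runtime reconfiguration of the follower parameters
```

Next, let's look at the launch file follower.launch.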

Before modifying it, I recommend commenting out the original lines instead of deleting them.

```xml
<!--
  The turtlebot people (or whatever) follower nodelet.
-->
<launch>
  <arg name="simulation" default="false"/>

  <group unless="$(arg simulation)"> <!-- Real robot -->
    <include file="$(find turtlebot_follower)/launch/includes/velocity_smoother.launch.xml">
      <arg name="nodelet_manager" value="/mobile_base_nodelet_manager"/>
      <arg name="navigation_topic" value="/cmd_vel_mux/input/navi"/>
    </include>

    <!-- modified 2016.11.07: start my robot and camera; replace these with your own robot's bringup launch file and camera launch file -->
    <include file="$(find handsfree_hw)/launch/handsfree_hw.launch">
    </include>
    <include file="$(find handsfree_bringup)/launch/xtion_fake_laser_openni2.launch">
    </include>

    <!-- Original include, commented out: <include file="$(find turtlebot_bringup)/launch/3dsensor.launch">
      <arg name="rgb_processing" value="true"/>
      <arg name="depth_processing" value="true"/>
      <arg name="depth_registered_processing" value="false"/>
      <arg name="depth_registration" value="false"/>
      <arg name="disparity_processing" value="false"/>
      <arg name="disparity_registered_processing" value="false"/>
      <arg name="scan_processing" value="false"/>
    </include>-->
    <!-- modify end -->
  </group>

  <group if="$(arg simulation)">
    <!-- Load nodelet manager for compatibility -->
    <node pkg="nodelet" type="nodelet" ns="camera" name="camera_nodelet_manager" args="manager"/>
    <include file="$(find turtlebot_follower)/launch/includes/velocity_smoother.launch.xml">
      <arg name="nodelet_manager" value="camera/camera_nodelet_manager"/>
      <arg name="navigation_topic" value="cmd_vel_mux/input/navi"/>
    </include>
  </group>

  <param name="camera/rgb/image_color/compressed/jpeg_quality" value="22"/>

  <!-- Make a slower camera feed available; only required if we use android client -->
  <node pkg="topic_tools" type="throttle" name="camera_throttle"
        args="messages camera/rgb/image_color/compressed 5"/>

  <include file="$(find turtlebot_follower)/launch/includes/safety_controller.launch.xml"/>

  <!-- Real robot: Load turtlebot follower into the 3d sensors nodelet manager to avoid pointcloud serializing -->
  <!-- Simulation: Load turtlebot follower into nodelet manager for compatibility -->
  <node pkg="nodelet" type="nodelet" name="turtlebot_follower"
        args="load turtlebot_follower/TurtlebotFollower camera/camera_nodelet_manager">
    <!-- Replace with your robot's velocity command topic; mine is /mobile_base/mobile_base_controller/cmd_vel -->
    <remap from="turtlebot_follower/cmd_vel" to="/mobile_base/mobile_base_controller/cmd_vel"/>
    <remap from="depth/points" to="camera/depth/points"/>
    <param name="enabled" value="true" />
    <!--<param name="x_scale" value="7.0" />-->
    <!--<param name="z_scale" value="2.0" />
    <param name="min_x" value="-0.35" />
    <param name="max_x" value="0.35" />
    <param name="min_y" value="0.1" />
    <param name="max_y" value="0.6" />
    <param name="max_z" value="1.2" />
    <param name="goal_z" value="0.6" />-->

    <!-- test: tune the parameters here -->
    <param name="x_scale" value="1.5"/>
    <param name="z_scale" value="1.0" />
    <param name="min_x" value="-0.35" />
    <param name="max_x" value="0.35" />
    <param name="min_y" value="0.1" />
    <param name="max_y" value="0.5" />
    <param name="max_z" value="1.5" />
    <param name="goal_z" value="0.6" />
  </node>

  <!-- Launch the script which will toggle turtlebot following on and off based on a joystick button. default: on -->
  <node name="switch" pkg="turtlebot_follower" type="switch.py"/>

  <!-- modified 2016.11.07: create a new follow.rviz file under turtlebot_follower and load it in rviz; at this point the rviz config is empty -->
  <node name="rviz" pkg="rviz" type="rviz" args="-d $(find turtlebot_follower)/follow.rviz"/>
  <!-- modify end -->
</launch>
```

Build the workspace and run follower.launch. It starts the robot, the camera, and rviz together, so this is the only launch file you need to run.
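Before trying new values on the robot, it helps to check that the follower `<param>` values tuned in the launch file are consistent with one another. The sketch below is a hypothetical helper: `FollowerParams` and `checkParams` are not part of turtlebot_follower. It assumes, as I read the upstream code, two things. First, forward speed is proportional to `z - goal_z`. Second, points farther than `max_z` are ignored.

```cpp
#include <string>
#include <vector>

// Hypothetical mirror of the follower <param> values set in the launch file above.
struct FollowerParams {
    double min_x = -0.35, max_x = 0.35;  // left/right edges of the box (m)
    double min_y = 0.1,  max_y = 0.5;    // top/bottom edges of the box (m)
    double max_z = 1.5;                  // far edge of the box (m)
    double goal_z = 0.6;                 // distance the robot tries to keep (m)
    double x_scale = 1.5;                // turning gain
    double z_scale = 1.0;                // forward/backward gain
};

// Collects human-readable problems with a parameter set; an empty result means it looks consistent.
std::vector<std::string> checkParams(const FollowerParams& p) {
    std::vector<std::string> problems;
    if (p.min_x >= p.max_x) problems.push_back("min_x must be smaller than max_x");
    if (p.min_y >= p.max_y) problems.push_back("min_y must be smaller than max_y");
    // Points beyond max_z are ignored, so if goal_z is outside (0, max_z) the measured
    // distance can never equal goal_z and the robot never settles.
    if (p.goal_z <= 0.0 || p.goal_z >= p.max_z) problems.push_back("goal_z must lie between 0 and max_z");
    if (p.x_scale <= 0.0 || p.z_scale <= 0.0) problems.push_back("x_scale and z_scale should be positive");
    return problems;
}
```

With the values used above, `checkParams` returns an empty list. Setting `goal_z` to 2.0 while `max_z` stays at 1.5 would be flagged, because the person could never be seen at the goal distance.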

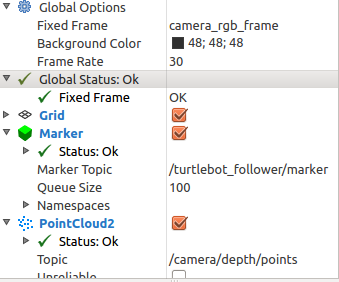

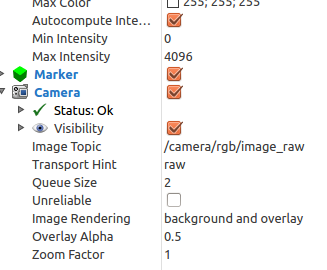

In rviz, add two Marker displays, a point cloud display, and a Camera display, as shown in the figures:

The topic and frame names must match the ones used in the code.

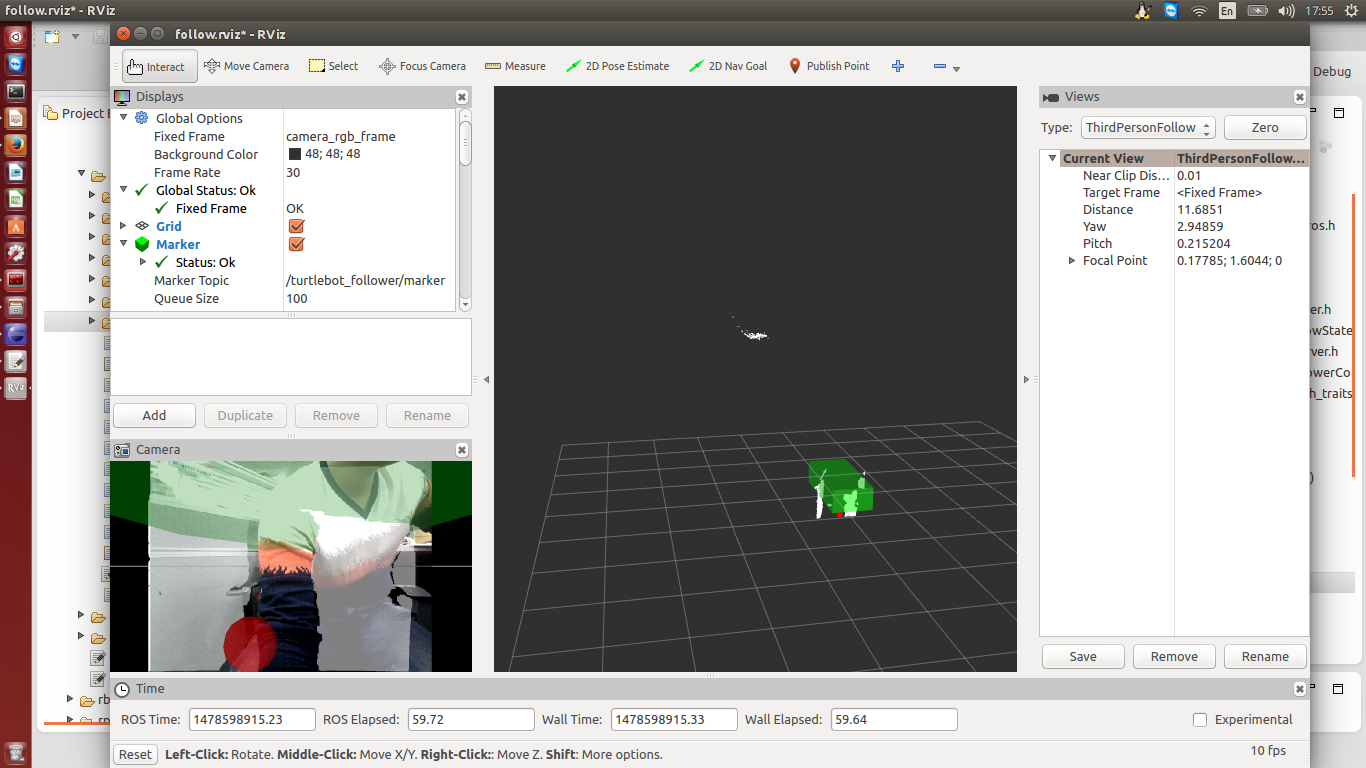

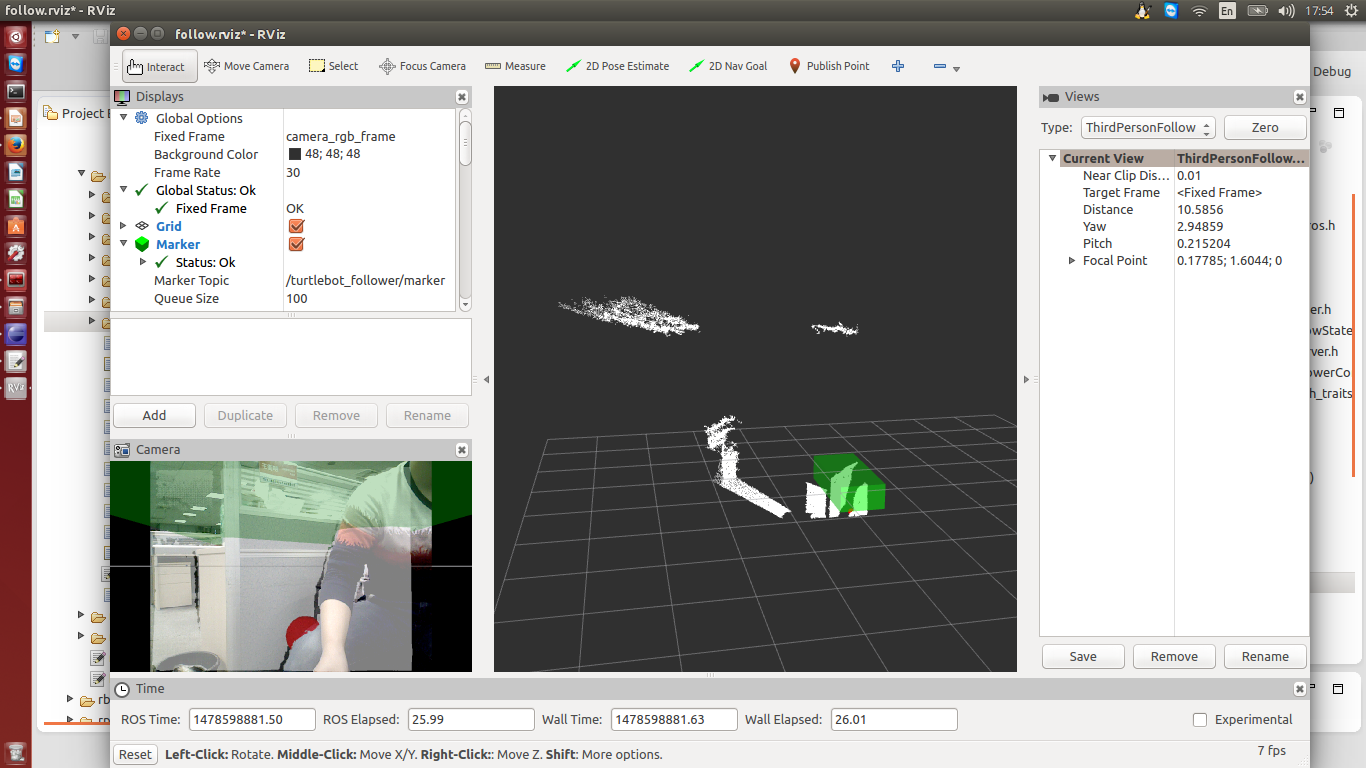

After adding them, rviz shows the following:

The red dot marks the centroid, and the green box marks the region of interest.
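The red dot is the average position of the depth points that fall inside the green box. The sketch below shows that averaging step. It assumes the points are already 3D coordinates in the camera's optical frame (x to the right, y downward, z forward), and it uses the box bounds from the launch file. `Point3`, `Box`, and `centroidInBox` are illustrative names, not the nodelet's actual code. The real node reads its points from the depth stream, whereas here they are simply passed in as a vector.

```cpp
#include <cstddef>
#include <vector>

// A 3D point in the depth camera's optical frame (metres): x right, y down, z forward.
struct Point3 {
    double x, y, z;
};

// Region of interest (the green box); defaults mirror the launch-file values.
struct Box {
    double min_x = -0.35, max_x = 0.35;
    double min_y = 0.1, max_y = 0.5;
    double max_z = 1.5;
};

// Averages every point inside the box and stores the mean in `centroid` (the red dot).
// Returns how many points were used; when it returns 0, `centroid` is left unchanged
// because there is nothing to follow.
std::size_t centroidInBox(const std::vector<Point3>& cloud, const Box& b, Point3& centroid) {
    double sx = 0.0, sy = 0.0, sz = 0.0;
    std::size_t n = 0;
    for (const Point3& p : cloud) {
        const bool inside = p.x > b.min_x && p.x < b.max_x &&
                            p.y > b.min_y && p.y < b.max_y &&
                            p.z > 0.0 && p.z < b.max_z;  // z > 0 skips invalid (zero) depth readings
        if (inside) {
            sx += p.x;
            sy += p.y;
            sz += p.z;
            ++n;
        }
    }
    if (n > 0) {
        centroid = Point3{sx / n, sy / n, sz / n};
    }
    return n;
}
```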

When the red dot is on us, the robot follows our movement. Note: walk slowly, and turn the robot's speed down as well.
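Turning the speed down works if, as I read the upstream follower, the command is proportional to the centroid position:

- forward speed is `(z - goal_z) * z_scale`
- turning rate is `-x * x_scale`

Here `x` and `z` are the centroid coordinates. That is why the launch file above lowers `x_scale` from 7.0 to 1.5 and `z_scale` from 2.0 to 1.0. The sketch below reproduces that control law under this assumption. The `max_linear`/`max_angular` clamp is a hypothetical extra limit for slow, safe testing and is not a turtlebot_follower parameter.

```cpp
#include <algorithm>

// Velocity command in the robot base frame.
struct Twist2D {
    double linear_x = 0.0;   // forward speed (m/s); negative = back up
    double angular_z = 0.0;  // turn rate (rad/s); positive = turn left
};

struct ControlParams {
    double goal_z = 0.6;       // from the launch file
    double z_scale = 1.0;      // from the launch file
    double x_scale = 1.5;      // from the launch file
    double max_linear = 0.3;   // hypothetical speed cap, not a turtlebot_follower param
    double max_angular = 1.0;  // hypothetical turn-rate cap, not a turtlebot_follower param
};

// Limits v to the range [-lim, lim].
double clampSymmetric(double v, double lim) {
    return std::max(-lim, std::min(v, lim));
}

// Proportional follow command from the centroid (cx, cz) in the camera optical frame.
// Target farther than goal_z -> drive forward; closer -> back up.
// Target to the right (cx > 0) -> negative angular_z, i.e. turn right toward it.
Twist2D followCommand(double cx, double cz, const ControlParams& p) {
    Twist2D cmd;
    cmd.linear_x = clampSymmetric((cz - p.goal_z) * p.z_scale, p.max_linear);
    cmd.angular_z = clampSymmetric(-cx * p.x_scale, p.max_angular);
    return cmd;
}
```

Because both outputs scale linearly with the gains, halving `z_scale` halves the approach speed for the same distance error. This is the knob to turn first when the robot moves too fast for a slow walk.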

When there is no red dot in the region of interest, the robot stops following until the red dot reappears.
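The stop behaviour can be sketched as a per-frame gate. In this sketch, the robot is sent a zero velocity and simply waits in two cases:

- following is disabled
- too few points land in the box to form a reliable centroid

`followerStep` and the `min_points` threshold are hypothetical. `enabled` corresponds to the launch-file parameter of the same name, and following can also be toggled at runtime by switch.py.

```cpp
#include <cstddef>

struct Velocity {
    double linear_x = 0.0;   // forward speed (m/s)
    double angular_z = 0.0;  // turn rate (rad/s)
};

// Hypothetical per-frame decision of the follower.
//   enabled       : mirrors the launch-file "enabled" parameter
//   points_in_box : number of depth points found inside the green box this frame
//   min_points    : illustrative threshold below which there is "no red dot"
Velocity followerStep(bool enabled, std::size_t points_in_box, std::size_t min_points,
                      double cx, double cz,
                      double goal_z = 0.6, double x_scale = 1.5, double z_scale = 1.0) {
    const Velocity stop{};  // zero velocity: stand still
    if (!enabled || points_in_box < min_points) {
        return stop;  // nothing to follow: wait until the target reappears
    }
    Velocity cmd;
    cmd.linear_x = (cz - goal_z) * z_scale;
    cmd.angular_z = -cx * x_scale;
    return cmd;
}
```

Requiring a minimum number of points, rather than just one, keeps a few stray depth pixels from producing a jittery red dot that the robot would chase.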