ros:kcf演算法+行人檢測 = 讓機器人自動識別並追蹤行人

實現目標:機器人檢測到有人走過來,迎上去並開始追蹤。

追蹤演算法使用kcf演算法,關於kcf追蹤的ros庫在github地址 https://github.com/TianyeAlex/tracker_kcf_ros,kcf演算法是目前追蹤演算法中比較好的,程式跑起來後效果也是不錯的。我能力有限,在這裡不作介紹。有興趣的可以去研究一下。這裡主要講一下在次基礎上新增行人檢測,做到自動追蹤。

訓練庫地址:http://download.csdn.net/detail/yiranhaiziqi/9711174,下載後放到src目錄下。

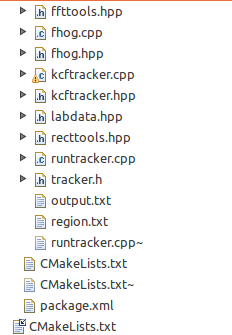

追蹤的程式碼結構

作者將kcf演算法封裝起來,在runtracker.cpp裡面呼叫。

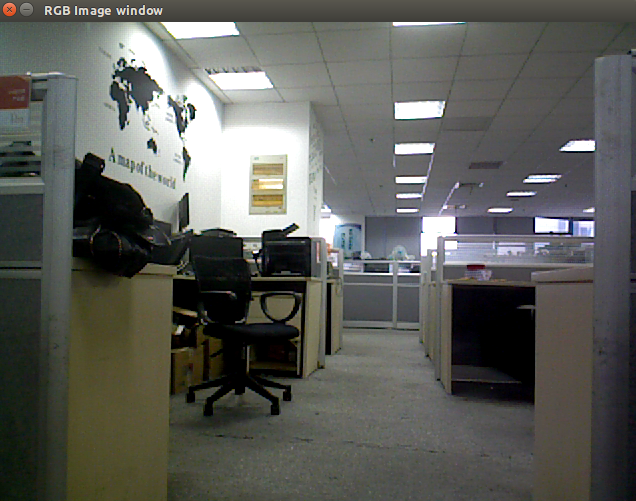

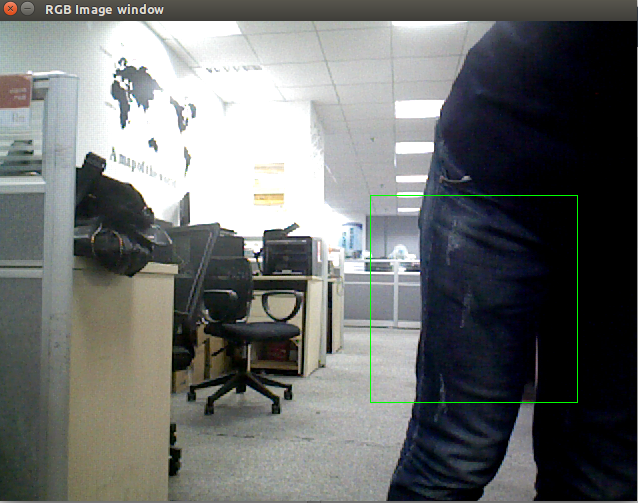

程式跑起來的效果

出現一個視窗,用滑鼠左鍵選中一個區域作為感興趣區域,之後機器人會跟蹤這個區域。例如,選中畫面中的椅子,移動椅子之後,機器人會跟隨移動。選中畫面中的人或者人的某個部位都可以實現人物跟蹤。我要想實現自動追蹤,就是把滑鼠選擇跟蹤物變成自動選擇跟蹤物,這裡的跟蹤物就是行人。

首先要先實現行人檢測,在opencv中,有行人檢測的demo,路徑在opencv-2.4.13/samples/cpp/peopledetect.cpp。接下來做的就是把程式碼結合起來。

簡單介紹一下runtracker.cpp。

ImageConverter類是核心

初始化我們要接受/傳送主題的Publisher 和Subscriber,設定相應的回掉函式。

image_sub_ = it_.subscribe("/camera/rgb/image_rect_color", 1,&ImageConverter::imageCb, this);

depth_sub_ = it_.subscribe("/camera/depth/image", 1,&ImageConverter::depthCb, this);

pub = nh_.advertise<geometry_msgs::Twist>("/mobile_base/mobile_base_controller/cmd_vel", 1000);image_sub_是接受rgb圖的subscribe,執行imageCb回掉函式,imageCb主要是將攝像頭的資料顯示在視窗中,選擇感興趣區域。

depth_sub_是接受深度圖的subscribe,執行depthCb回掉函式,depthCb作用就是計算距離和方向。

瞭解到這裡之後,要將手動選擇感興趣區域改為自動選擇感興趣區域,必然是在imageCb函式中修改。

imageCb中 cv::setMouseCallback(RGB_WINDOW, onMouse, 0);監聽滑鼠操作,如果滑鼠不動,程式不會往下執行。onMouse為滑鼠監聽回撥。要實現自動選擇肯定就不能用這個了,將其注掉。

再來看下onMouse函式做了什麼事

void onMouse(int event, int x, int y, int, void*)

{

if (select_flag)

{

selectRect.x = MIN(origin.x, x);

selectRect.y = MIN(origin.y, y);

selectRect.width = abs(x - origin.x);

selectRect.height = abs(y - origin.y);

selectRect &= cv::Rect(0, 0, rgbimage.cols, rgbimage.rows);

}

if (event == CV_EVENT_LBUTTONDOWN)

{

bBeginKCF = false;

select_flag = true;

origin = cv::Point(x, y);

selectRect = cv::Rect(x, y, 0, 0);

}

else if (event == CV_EVENT_LBUTTONUP)

{

select_flag = false;

bRenewROI = true;

}

}當按下滑鼠左鍵時,這個點就是起始點,按住滑鼠左鍵移動滑鼠,會選擇感興趣區域,鬆開滑鼠左鍵,bRenewROI = true;修改標誌,表示新的roi區域selectRect已經產生。在imageCb中程式繼續執行,初始化KCFTracker,開始追蹤。

到這裡基本的流程已經比較清晰了,接下來開始將行人檢測代替手動選擇roi區域。

preparePeopleDetect()函式是初始化檢測,

peopleDetect()函式是開始檢測。

void preparePeopleDetect()

{

has_dectect_people = false;

//hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());//使用預設的訓練資料,下面是使用自己的訓練資料。

MySVM svm;

string path = ros::package::getPath("track_pkg")+"/src/12000neg_2400pos.xml";

printf("path === %s",path.c_str());

//svm.load("/home/server/catkin_ws/src/tracker_kcf_ros/src/track_pkg/src/12000neg_2400pos.xml");

svm.load(path.c_str());

DescriptorDim = svm.get_var_count();//特徵向量的維數,即HOG描述子的維數

int supportVectorNum = svm.get_support_vector_count();//支援向量的個數

cout<<"支援向量個數:"<<supportVectorNum<<endl;

Mat alphaMat = Mat::zeros(1, supportVectorNum, CV_32FC1);//alpha向量,長度等於支援向量個數

Mat supportVectorMat = Mat::zeros(supportVectorNum, DescriptorDim, CV_32FC1);//支援向量矩陣

Mat resultMat = Mat::zeros(1, DescriptorDim, CV_32FC1);//alpha向量乘以支援向量矩陣的結果

//將支援向量的資料複製到supportVectorMat矩陣中

for(int i=0; i<supportVectorNum; i++)

{

const float * pSVData = svm.get_support_vector(i);//返回第i個支援向量的資料指標

for(int j=0; j<DescriptorDim; j++)

{

supportVectorMat.at<float>(i,j) = pSVData[j];

}

}

//將alpha向量的資料複製到alphaMat中

double * pAlphaData = svm.get_alpha_vector();//返回SVM的決策函式中的alpha向量

for(int i=0; i<supportVectorNum; i++)

{

alphaMat.at<float>(0,i) = pAlphaData[i];

}

//計算-(alphaMat * supportVectorMat),結果放到resultMat中

//gemm(alphaMat, supportVectorMat, -1, 0, 1, resultMat);//不知道為什麼加負號?

resultMat = -1 * alphaMat * supportVectorMat;

//得到最終的setSVMDetector(const vector<float>& detector)引數中可用的檢測子

//將resultMat中的資料複製到陣列myDetector中

for(int i=0; i<DescriptorDim; i++)

{

myDetector.push_back(resultMat.at<float>(0,i));

}

//最後新增偏移量rho,得到檢測子

myDetector.push_back(svm.get_rho());

cout<<"檢測子維數:"<<myDetector.size()<<endl;

hog.setSVMDetector(myDetector);

ofstream fout("HOGDetectorForOpenCV.txt");

for(int i=0; i<myDetector.size(); i++)

{

fout<<myDetector[i]<<endl;

}

printf("Start the tracking process\n");

}

//行人檢測

void peopleDetect()

{

if(has_dectect_people)

return;

vector<Rect> found, found_filtered;

double t = (double)getTickCount();

hog.detectMultiScale(rgbimage, found, 0, Size(8,8), Size(32,32), 1.05, 2);

t = (double)getTickCount() - t;

//printf("tdetection time = %gms\n", t*1000./cv::getTickFrequency());

size_t i, j;

printf("found.size==%d",found.size());

for( i = 0; i < found.size(); i++ )

{

Rect r = found[i];

for( j = 0; j < found.size(); j++ )

if( j != i && (r & found[j]) == r)

break;

if( j == found.size() )

found_filtered.push_back(r);

}

Rect r ;

for( i = 0; i < found_filtered.size(); i++ )

{

r = found_filtered[i];

// the HOG detector returns slightly larger rectangles than the real objects.

// so we slightly shrink the rectangles to get a nicer output.

r.x += cvRound(r.width*0.1);

r.width = cvRound(r.width*0.8);

r.y += cvRound(r.height*0.07);

r.height = cvRound(r.height*0.8);

//rectangle(rgbimage, r.tl(), r.br(), cv::Scalar(0,255,0), 3);

//printf("r.x==%d,y==%d,width==%d,height==%d\n",r.x,r.y,r.width,r.height);

}

//防止誤檢測

if(r.width>100&&r.height>350){

has_dectect_people=true;

selectRect.x = r.x+(r.width-roi_width)/2;

selectRect.y = r.y+(r.height-roi_height)/2;

selectRect.width = roi_width;

selectRect.height = roi_height;

printf("selectRect.x==%d,y==%d,width==%d,height==%d\n",selectRect.x,selectRect.y,selectRect.width,selectRect.height);

}//imshow(RGB_WINDOW, rgbimage);

}檢測到人後,人所在的區域是一個矩形,我這裡在矩形區域內取其中間100*100的矩形為感興趣區域。檢測到人後將has_dectect_people置為true,使其不會再次檢測。設定bRenewROI = true;select_flag = true;

select_flag:當追蹤目標未消失時,為true,消失時為false,與bRenewROI一起作為是否重新檢測行人追蹤的標記。

完整程式碼如下

#include <iostream>

#include <fstream>

#include <sstream>

#include <algorithm>

#include <dirent.h>

#include <math.h>

#include <ros/ros.h>

#include <ros/package.h>

#include <image_transport/image_transport.h>

#include <cv_bridge/cv_bridge.h>

#include <sensor_msgs/image_encodings.h>

#include "geometry_msgs/Twist.h"

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/objdetect/objdetect.hpp"

#include <stdio.h>

#include <string.h>

#include <ctype.h>

#include "kcftracker.hpp"

using namespace cv;

using namespace std;

static const std::string RGB_WINDOW = "RGB Image window";

//static const std::string DEPTH_WINDOW = "DEPTH Image window";

#define Max_linear_speed 1

#define Min_linear_speed 0.4

#define Min_distance 1.0

#define Max_distance 5.0

#define Max_rotation_speed 0.75

float linear_speed = 0;

float rotation_speed = 0;

float k_linear_speed = (Max_linear_speed - Min_linear_speed) / (Max_distance - Min_distance);

float h_linear_speed = Min_linear_speed - k_linear_speed * Min_distance;

float k_rotation_speed = 0.004;

float h_rotation_speed_left = 1.2;

float h_rotation_speed_right = 1.36;

float distance_scale = 1.0;

int ERROR_OFFSET_X_left1 = 100;

int ERROR_OFFSET_X_left2 = 300;

int ERROR_OFFSET_X_right1 = 340;

int ERROR_OFFSET_X_right2 = 600;

int roi_height = 100;

int roi_width = 100;

cv::Mat rgbimage;

cv::Mat depthimage;

cv::Rect selectRect;

cv::Point origin;

cv::Rect result;

bool select_flag = false;

bool bRenewROI = false; // the flag to enable the implementation of KCF algorithm for the new chosen ROI

bool bBeginKCF = false;

bool enable_get_depth = false;

bool HOG = true;

bool FIXEDWINDOW = false;

bool MULTISCALE = true;

bool SILENT = true;

bool LAB = false;

int DescriptorDim;

bool has_dectect_people ;

// Create KCFTracker object

KCFTracker tracker(HOG, FIXEDWINDOW, MULTISCALE, LAB);

vector<float> myDetector;

float dist_val[5] ;

/*void onMouse(int event, int x, int y, int, void*)

{

if (select_flag)

{

selectRect.x = MIN(origin.x, x);

selectRect.y = MIN(origin.y, y);

selectRect.width = abs(x - origin.x);

selectRect.height = abs(y - origin.y);

selectRect &= cv::Rect(0, 0, rgbimage.cols, rgbimage.rows);

}

if (event == CV_EVENT_LBUTTONDOWN)

{

bBeginKCF = false;

select_flag = true;

origin = cv::Point(x, y);

selectRect = cv::Rect(x, y, 0, 0);

}

else if (event == CV_EVENT_LBUTTONUP)

{

select_flag = false;

bRenewROI = true;

}

}*/

class MySVM : public CvSVM

{

public:

//獲得SVM的決策函式中的alpha陣列

double * get_alpha_vector()

{

return this->decision_func->alpha;

}

//獲得SVM的決策函式中的rho引數,即偏移量

float get_rho()

{

return this->decision_func->rho;

}

};

class ImageConverter

{

ros::NodeHandle nh_;

image_transport::ImageTransport it_;

image_transport::Subscriber image_sub_;

image_transport::Subscriber depth_sub_;

HOGDescriptor hog;

public:

ros::Publisher pub;

ImageConverter()

: it_(nh_)

{

// Subscrive to input video feed and publish output video feed

image_sub_ = it_.subscribe("/camera/rgb/image_rect_color", 1,&ImageConverter::imageCb, this);

depth_sub_ = it_.subscribe("/camera/depth/image", 1,&ImageConverter::depthCb, this);

pub = nh_.advertise<geometry_msgs::Twist>("/mobile_base/mobile_base_controller/cmd_vel", 1000);

//pub = nh_.advertise<geometry_msgs::Twist>("/cmd_vel", 1000);

preparePeopleDetect();

cv::namedWindow(RGB_WINDOW);

//cv::namedWindow(DEPTH_WINDOW);

}

~ImageConverter()

{

cv::destroyWindow(RGB_WINDOW);

//cv::destroyWindow(DEPTH_WINDOW);

}

void imageCb(const sensor_msgs::ImageConstPtr& msg)

{

cv_bridge::CvImagePtr cv_ptr;

try

{

cv_ptr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

cv_ptr->image.copyTo(rgbimage);

peopleDetect();

if(has_dectect_people&&!select_flag)

{

printf("has_dectect_people = true \n");

selectRect &= cv::Rect(0,0,rgbimage.cols,rgbimage.rows);

bRenewROI = true;

select_flag = true;

}

//cv::setMouseCallback(RGB_WINDOW, onMouse, 0);

if(bRenewROI)

{

// if (selectRect.width <= 0 || selectRect.height <= 0)

// {

// bRenewROI = false;

// //continue;

// }

tracker.init(selectRect, rgbimage);

bBeginKCF = true;

bRenewROI = false;

enable_get_depth = false;

}

if(bBeginKCF)

{

result = tracker.update(rgbimage);

cv::rectangle(rgbimage, result, cv::Scalar( 0, 255,0 ), 1, 8 );

enable_get_depth = true;

}

else

cv::rectangle(rgbimage, selectRect, cv::Scalar(0, 255, 0), 2, 8, 0);

cv::imshow(RGB_WINDOW, rgbimage);

cv::waitKey(1);

}

void preparePeopleDetect()

{

has_dectect_people = false;

//hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());

MySVM svm;

string path = ros::package::getPath("track_pkg")+"/src/12000neg_2400pos.xml";

printf("path === %s",path.c_str());

//svm.load("/home/server/catkin_ws/src/tracker_kcf_ros/src/track_pkg/src/12000neg_2400pos.xml");

svm.load(path.c_str());

DescriptorDim = svm.get_var_count();//特徵向量的維數,即HOG描述子的維數

int supportVectorNum = svm.get_support_vector_count();//支援向量的個數

cout<<"支援向量個數:"<<supportVectorNum<<endl;

Mat alphaMat = Mat::zeros(1, supportVectorNum, CV_32FC1);//alpha向量,長度等於支援向量個數

Mat supportVectorMat = Mat::zeros(supportVectorNum, DescriptorDim, CV_32FC1);//支援向量矩陣

Mat resultMat = Mat::zeros(1, DescriptorDim, CV_32FC1);//alpha向量乘以支援向量矩陣的結果

//將支援向量的資料複製到supportVectorMat矩陣中

for(int i=0; i<supportVectorNum; i++)

{

const float * pSVData = svm.get_support_vector(i);//返回第i個支援向量的資料指標

for(int j=0; j<DescriptorDim; j++)

{

supportVectorMat.at<float>(i,j) = pSVData[j];

}

}

//將alpha向量的資料複製到alphaMat中

double * pAlphaData = svm.get_alpha_vector();//返回SVM的決策函式中的alpha向量

for(int i=0; i<supportVectorNum; i++)

{

alphaMat.at<float>(0,i) = pAlphaData[i];

}

//計算-(alphaMat * supportVectorMat),結果放到resultMat中

//gemm(alphaMat, supportVectorMat, -1, 0, 1, resultMat);//不知道為什麼加負號?

resultMat = -1 * alphaMat * supportVectorMat;

//得到最終的setSVMDetector(const vector<float>& detector)引數中可用的檢測子

//將resultMat中的資料複製到陣列myDetector中

for(int i=0; i<DescriptorDim; i++)

{

myDetector.push_back(resultMat.at<float>(0,i));

}

//最後新增偏移量rho,得到檢測子

myDetector.push_back(svm.get_rho());

cout<<"檢測子維數:"<<myDetector.size()<<endl;

hog.setSVMDetector(myDetector);

ofstream fout("HOGDetectorForOpenCV.txt");

for(int i=0; i<myDetector.size(); i++)

{

fout<<myDetector[i]<<endl;

}

printf("Start the tracking process\n");

}

//行人檢測

void peopleDetect()

{

if(has_dectect_people)

return;

vector<Rect> found, found_filtered;

double t = (double)getTickCount();

hog.detectMultiScale(rgbimage, found, 0, Size(8,8), Size(32,32), 1.05, 2);

t = (double)getTickCount() - t;

//printf("tdetection time = %gms\n", t*1000./cv::getTickFrequency());

size_t i, j;

printf("found.size==%d",found.size());

for( i = 0; i < found.size(); i++ )

{

Rect r = found[i];

for( j = 0; j < found.size(); j++ )

if( j != i && (r & found[j]) == r)

break;

if( j == found.size() )

found_filtered.push_back(r);

}

Rect r ;

for( i = 0; i < found_filtered.size(); i++ )

{

r = found_filtered[i];

// the HOG detector returns slightly larger rectangles than the real objects.

// so we slightly shrink the rectangles to get a nicer output.

r.x += cvRound(r.width*0.1);

r.width = cvRound(r.width*0.8);

r.y += cvRound(r.height*0.07);

r.height = cvRound(r.height*0.8);

//rectangle(rgbimage, r.tl(), r.br(), cv::Scalar(0,255,0), 3);

//printf("r.x==%d,y==%d,width==%d,height==%d\n",r.x,r.y,r.width,r.height);

}

if(r.width>100&&r.height>350){

has_dectect_people=true;

selectRect.x = r.x+(r.width-roi_width)/2;

selectRect.y = r.y+(r.height-roi_height)/2;

selectRect.width = roi_width;

selectRect.height = roi_height;

printf("selectRect.x==%d,y==%d,width==%d,height==%d\n",selectRect.x,selectRect.y,selectRect.width,selectRect.height);

}//imshow(RGB_WINDOW, rgbimage);

}

void depthCb(const sensor_msgs::ImageConstPtr& msg)

{

cv_bridge::CvImagePtr cv_ptr;

try

{

cv_ptr = cv_bridge::toCvCopy(msg,sensor_msgs::image_encodings::TYPE_32FC1);

cv_ptr->image.copyTo(depthimage);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("Could not convert from '%s' to 'TYPE_32FC1'.", msg->encoding.c_str());

}

if(enable_get_depth)

{

dist_val[0] = depthimage.at<float>(result.y+result.height/3 , result.x+result.width/3) ;

dist_val[1] = depthimage.at<float>(result.y+result.height/3 , result.x+2*result.width/3) ;

dist_val[2] = depthimage.at<float>(result.y+2*result.height/3 , result.x+result.width/3) ;

dist_val[3] = depthimage.at<float>(result.y+2*result.height/3 , result.x+2*result.width/3) ;

dist_val[4] = depthimage.at<float>(result.y+result.height/2 , result.x+result.width/2) ;

float distance = 0;

int num_depth_points = 5;

for(int i = 0; i < 5; i++)

{

if(dist_val[i] > 0.4 && dist_val[i] < 10.0)

distance += dist_val[i];

else

num_depth_points--;

}

distance /= num_depth_points;

//calculate linear speed

if(distance > Min_distance)

linear_speed = distance * k_linear_speed + h_linear_speed;

else if (distance <= Min_distance-0.5){

//linear_speed = 0;

linear_speed =-1* ((Min_distance-0.5) * k_linear_speed + h_linear_speed);

}else{

linear_speed = 0;

}

if( fabs(linear_speed) > Max_linear_speed)

linear_speed = Max_linear_speed;

//calculate rotation speed

int center_x = result.x + result.width/2;

if(center_x < ERROR_OFFSET_X_left1){

printf("center_x <<<<<<<< ERROR_OFFSET_X_left1\n");

rotation_speed = Max_rotation_speed/5;

has_dectect_people = false;

enable_get_depth = false;

select_flag = false;

bBeginKCF = false;

}

else if(center_x > ERROR_OFFSET_X_left1 && center_x < ERROR_OFFSET_X_left2)

rotation_speed = -k_rotation_speed * center_x + h_rotation_speed_left;

else if(center_x > ERROR_OFFSET_X_right1 && center_x < ERROR_OFFSET_X_right2)

rotation_speed = -k_rotation_speed * center_x + h_rotation_speed_right;

else if(center_x > ERROR_OFFSET_X_right2){

printf("center_x >>>>>>>> ERROR_OFFSET_X_right2\n");

rotation_speed = -Max_rotation_speed/5;

has_dectect_people = false;

enable_get_depth = false;

select_flag = false;

bBeginKCF = false;

}

else

rotation_speed = 0;

//std::cout << "linear_speed = " << linear_speed << " rotation_speed = " << rotation_speed << std::endl;

// std::cout << dist_val[0] << " / " << dist_val[1] << " / " << dist_val[2] << " / " << dist_val[3] << " / " << dist_val[4] << std::endl;

// std::cout << "distance = " << distance << std::endl;

}

//cv::imshow(DEPTH_WINDOW, depthimage);

cv::waitKey(1);

}

};

int main(int argc, char** argv)

{

ros::init(argc, argv, "kcf_tracker");

ImageConverter ic;

while(ros::ok())

{

ros::spinOnce();

geometry_msgs::Twist twist;

twist.linear.x = linear_speed;

twist.linear.y = 0;

twist.linear.z = 0;

twist.angular.x = 0;

twist.angular.y = 0;

twist.angular.z = rotation_speed;

ic.pub.publish(twist);

if (cvWaitKey(33) == 'q')

break;

}

return 0;

}

程式執行結果。