Porting FFmpeg to Android and Using It

Reference blog post: http://blog.csdn.net/gobitan/article/details/22750719

Source code download: http://download.csdn.net/detail/h291850336/9166229

It took a lot of failed attempts before this finally worked.....

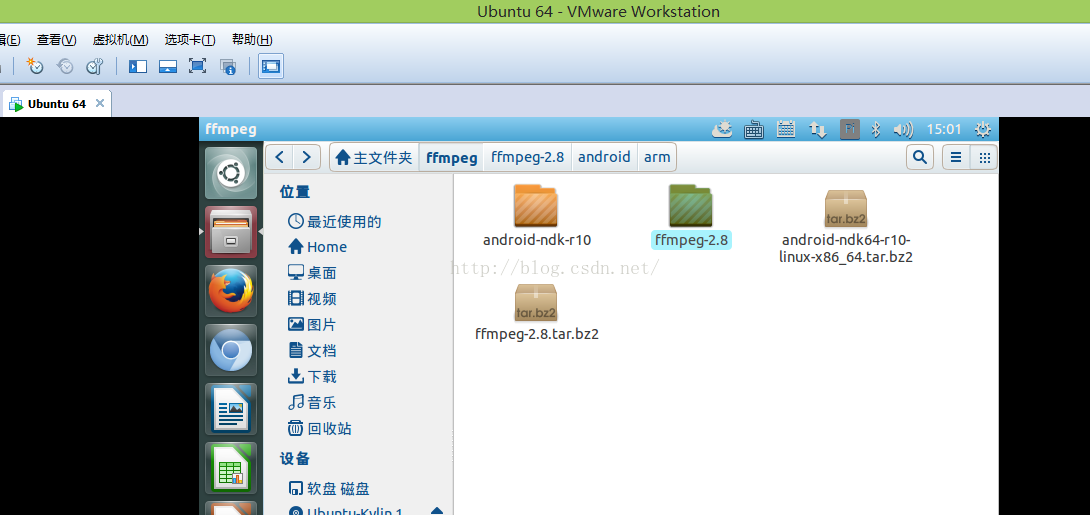

Environment setup:

I ran VMware Workstation on Windows 8, with:

- Ubuntu Kylin 14.04
- android-ndk64-r10-linux-x86_64.tar.bz2
- ffmpeg-2.8.tar.bz2

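After installing and setting up the environment, modify the configure file in the FFmpeg source tree (ffmpeg-2.8/configure here).

If you build with the unmodified configuration, the resulting .so files are named like libavcodec.so.55.39.101, with the version number after the .so suffix, and Android cannot load them: System.loadLibrary() only looks for files named lib<name>.so. So change configure as follows.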

Replace these four lines in the file:

```
SLIBNAME_WITH_MAJOR='$(SLIBNAME).$(LIBMAJOR)'
LIB_INSTALL_EXTRA_CMD='$$(RANLIB) "$(LIBDIR)/$(LIBNAME)"'
SLIB_INSTALL_NAME='$(SLIBNAME_WITH_VERSION)'
SLIB_INSTALL_LINKS='$(SLIBNAME_WITH_MAJOR) $(SLIBNAME)'
```

with:

```
SLIBNAME_WITH_MAJOR='$(SLIBPREF)$(FULLNAME)-$(LIBMAJOR)$(SLIBSUF)'
LIB_INSTALL_EXTRA_CMD='$$(RANLIB) "$(LIBDIR)/$(LIBNAME)"'
SLIB_INSTALL_NAME='$(SLIBNAME_WITH_MAJOR)'
SLIB_INSTALL_LINKS='$(SLIBNAME)'
```
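The two SLIBNAME_WITH_MAJOR definitions differ only in where the major version goes. The sketch below uses two hypothetical helpers to assemble both patterns from their parts, assuming SLIBPREF is `lib` and SLIBSUF is `.so` (their values for a Linux target) and that FULLNAME is the plain library name. It shows why the patched form still ends in `.so`.

```c
#include <stdio.h>

/* Hypothetical helpers. prefix/suffix stand in for $(SLIBPREF)/$(SLIBSUF),
 * name for $(FULLNAME) and major for $(LIBMAJOR). */

/* Original pattern: $(SLIBNAME).$(LIBMAJOR), where
 * SLIBNAME = $(SLIBPREF)$(FULLNAME)$(SLIBSUF). */
int slib_name_default(char *buf, size_t len, const char *prefix,
                      const char *name, int major, const char *suffix) {
    return snprintf(buf, len, "%s%s%s.%d", prefix, name, suffix, major);
}

/* Patched pattern: $(SLIBPREF)$(FULLNAME)-$(LIBMAJOR)$(SLIBSUF). */
int slib_name_android(char *buf, size_t len, const char *prefix,
                      const char *name, int major, const char *suffix) {
    return snprintf(buf, len, "%s%s-%d%s", prefix, name, major, suffix);
}
```

For avcodec with major version 56, the first pattern gives `libavcodec.so.56`, while the patched one gives `libavcodec-56.so`, which `System.loadLibrary("avcodec-56")` can find.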

Write the build_android.sh script:

```bash
#!/bin/bash
NDK=/home/sunmeng/ffmpeg/android-ndk-r10
SYSROOT=$NDK/platforms/android-L/arch-arm/
TOOLCHAIN=$NDK/toolchains/arm-linux-androideabi-4.9/prebuilt/linux-x86_64

function build_one
{
./configure \
    --prefix=$PREFIX \
    --enable-shared \
    --disable-static \
    --disable-doc \
    --disable-ffserver \
    --enable-cross-compile \
    --cross-prefix=$TOOLCHAIN/bin/arm-linux-androideabi- \
    --target-os=linux \
    --arch=arm \
    --sysroot=$SYSROOT \
    --extra-cflags="-Os -fpic $ADDI_CFLAGS" \
    --extra-ldflags="$ADDI_LDFLAGS" \
    $ADDITIONAL_CONFIGURE_FLAG
}

CPU=arm
PREFIX=$(pwd)/android/$CPU
ADDI_CFLAGS="-marm"
build_one
```

A few things to note about this script:

(1) Replace NDK, SYSROOT and TOOLCHAIN with the paths on your own machine.

(2) Make sure the path that --cross-prefix points to actually exists.
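configure treats --cross-prefix as a plain string prefix and prepends it to tool names such as gcc, ar and nm. Point (2) can therefore be checked by appending a tool name and testing whether the result is an executable file. A minimal POSIX C sketch, with hypothetical helper names:

```c
#include <stdio.h>
#include <unistd.h>

/* Hypothetical helper: joins a --cross-prefix value and a tool name by plain
 * concatenation. Returns 0 on success, -1 if buf is too small. */
int cross_tool_path(char *buf, size_t len, const char *cross_prefix,
                    const char *tool) {
    int n = snprintf(buf, len, "%s%s", cross_prefix, tool);
    return (n >= 0 && (size_t) n < len) ? 0 : -1;
}

/* Hypothetical helper: returns 1 if prefix+tool exists and is executable. */
int cross_tool_ok(const char *cross_prefix, const char *tool) {
    char path[4096];
    if (cross_tool_path(path, sizeof path, cross_prefix, tool) != 0)
        return 0;
    return access(path, X_OK) == 0;
}
```

With the script above, `cross_tool_ok("/home/sunmeng/ffmpeg/android-ndk-r10/toolchains/arm-linux-androideabi-4.9/prebuilt/linux-x86_64/bin/arm-linux-androideabi-", "gcc")` should return 1 if the toolchain path is correct.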

Make build_android.sh executable, run it, then build and install:

```bash
chmod +x build_android.sh
./build_android.sh
make
make install
```

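When this finishes, the install prefix (android/arm under the FFmpeg source directory) contains include, with the header files, and lib, with the .so files.

That completes the FFmpeg build.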
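The seven libraries depend on one another, and when they are loaded one at a time with System.loadLibrary, each should come after the libraries it links against, because older Android dynamic linkers do not look in the app's own lib directory for dependencies. The C sketch below derives such an order with a depth-first topological sort. The dependency table is an assumption for a default FFmpeg 2.8 build; check it against your own libraries, for example with `readelf -d libavformat-56.so | grep NEEDED`.

```c
#include <stddef.h>

#define NLIBS 8

/* Library names as passed to System.loadLibrary(). */
static const char *const kLibs[NLIBS] = {
    "avutil-54", "swresample-1", "swscale-3", "avcodec-56",
    "avformat-56", "avfilter-5", "avdevice-56", "ffmpegutil"
};

/* ASSUMED dependencies: kDeps[a][b] == 1 means kLibs[a] needs kLibs[b]. */
static const int kDeps[NLIBS][NLIBS] = {
    /* avutil     */ {0, 0, 0, 0, 0, 0, 0, 0},
    /* swresample */ {1, 0, 0, 0, 0, 0, 0, 0},
    /* swscale    */ {1, 0, 0, 0, 0, 0, 0, 0},
    /* avcodec    */ {1, 1, 0, 0, 0, 0, 0, 0},
    /* avformat   */ {1, 0, 0, 1, 0, 0, 0, 0},
    /* avfilter   */ {1, 1, 1, 1, 1, 0, 0, 0},
    /* avdevice   */ {1, 0, 0, 1, 1, 1, 0, 0},
    /* ffmpegutil */ {1, 1, 1, 1, 1, 1, 1, 0},
};

/* Depth-first post-order: emits every dependency of lib before lib itself.
 * The table is assumed to be acyclic. */
static void visit(int lib, int *done, int *order, int *count) {
    int d;
    if (done[lib])
        return;
    done[lib] = 1;
    for (d = 0; d < NLIBS; d++)
        if (kDeps[lib][d])
            visit(d, done, order, count);
    order[(*count)++] = lib;
}

/* Fills order[] with indices into kLibs in a dependency-first load order. */
void load_order(int order[NLIBS]) {
    int done[NLIBS] = {0};
    int count = 0, i;
    for (i = 0; i < NLIBS; i++)
        visit(i, done, order, &count);
}
```

With this table, printing `kLibs[order[i]]` in sequence gives avutil-54, swresample-1, swscale-3, avcodec-56, avformat-56, avfilter-5, avdevice-56, ffmpegutil. Any order that respects the table works equally well.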

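Create an ordinary Android project

- Create a new Android project named FFmpegTest.

- Create a jni folder in the project root.

- Under jni, create a prebuilt directory, then:

(1) copy the seven .so files built above (from lib) into it;

(2) copy all the header folders under include into it.

- Create the class that declares the native methods: first create FFmpegNative.java under src. It has two parts, a static block that loads the .so libraries and the native method declarations: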

```java
package com.lmy.ffmpegtest;

public class FFmpegNative {

    static {
        // Load each library after the ones it depends on; older Android
        // versions do not resolve dependencies from the app's lib directory.
        System.loadLibrary("avutil-54");
        System.loadLibrary("swresample-1");
        System.loadLibrary("avcodec-56");
        System.loadLibrary("avformat-56");
        System.loadLibrary("swscale-3");
        System.loadLibrary("avfilter-5");
        System.loadLibrary("avdevice-56");
        // JNI wrapper built by ndk-build (libffmpegutil.so), loaded last
        System.loadLibrary("ffmpegutil");
    }

    public native int H264DecoderInit(int width, int height);

    public native int H264DecoderRelease();

    public native int H264Decode(byte[] in, int insize, byte[] out);

    public native int GetFFmpegVersion();
}
```

Use javah to generate the header file:

Go to the bin/classes directory and run: `javah -jni com.lmy.ffmpegtest.FFmpegNative`

This generates com_lmy_ffmpegtest_FFmpegNative.h:

```c
/* DO NOT EDIT THIS FILE - it is machine generated */
#include <jni.h>
/* Header for class com_lmy_ffmpegtest_FFmpegNative */

#ifndef _Included_com_lmy_ffmpegtest_FFmpegNative
#define _Included_com_lmy_ffmpegtest_FFmpegNative
#ifdef __cplusplus
extern "C" {
#endif
/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    H264DecoderInit
 * Signature: (II)I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_H264DecoderInit
  (JNIEnv *, jobject, jint, jint);

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    H264DecoderRelease
 * Signature: ()I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_H264DecoderRelease
  (JNIEnv *, jobject);

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    H264Decode
 * Signature: ([BI[B)I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_H264Decode
  (JNIEnv *, jobject, jbyteArray, jint, jbyteArray);

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    GetFFmpegVersion
 * Signature: ()I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_GetFFmpegVersion
  (JNIEnv *, jobject);

#ifdef __cplusplus
}
#endif
#endif
```

Copy this header into the jni directory.
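javah derives each function name from the JNI naming rules: `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_` and the method name, where a literal `_` is escaped as `_1` and other non-alphanumeric characters as `_0xxxx`. The hypothetical helper below reproduces that rule for ASCII names, which can help when checking a hand-written function name after an `UnsatisfiedLinkError`. It does not produce the `__` plus argument-signature suffix used for overloaded native methods.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Appends src to dst (capacity cap) if it fits; returns 0 on success. */
static int append(char *dst, size_t cap, const char *src) {
    size_t used = strlen(dst), add = strlen(src);
    if (used + add + 1 > cap)
        return -1;
    memcpy(dst + used, src, add + 1);
    return 0;
}

/* Appends the JNI-escaped form of an ASCII name. In class names, '.' and
 * '/' separate packages and become '_'. */
static int append_escaped(char *dst, size_t cap, const char *s, int is_class) {
    char tmp[8];
    for (; *s != '\0'; s++) {
        unsigned char c = (unsigned char) *s;
        if (isalnum(c)) {
            tmp[0] = (char) c;
            tmp[1] = '\0';
        } else if (is_class && (c == '.' || c == '/')) {
            strcpy(tmp, "_");
        } else if (c == '_') {
            strcpy(tmp, "_1");
        } else {
            snprintf(tmp, sizeof tmp, "_0%04x", c); /* e.g. '$' -> "_00024" */
        }
        if (append(dst, cap, tmp) != 0)
            return -1;
    }
    return 0;
}

/* Builds the short JNI symbol name; returns -1 if buf is too small. */
int jni_symbol_name(char *buf, size_t cap, const char *class_name,
                    const char *method) {
    if (cap == 0)
        return -1;
    buf[0] = '\0';
    if (append(buf, cap, "Java_") != 0 ||
        append_escaped(buf, cap, class_name, 1) != 0 ||
        append(buf, cap, "_") != 0)
        return -1;
    return append_escaped(buf, cap, method, 0);
}
```

For `com.lmy.ffmpegtest.FFmpegNative` and `H264DecoderInit` this yields `Java_com_lmy_ffmpegtest_FFmpegNative_H264DecoderInit`, matching the generated header.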

Based on the generated header, write com_lmy_ffmpegtest_FFmpegNative.c:

```c
#include <math.h>
#include <libavutil/opt.h>
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h> /* av_register_all() */
#include <libavutil/channel_layout.h>
#include <libavutil/common.h>
#include <libavutil/imgutils.h>
#include <libavutil/mathematics.h>
#include <libavutil/samplefmt.h>
#include <android/log.h>
#include "com_lmy_ffmpegtest_FFmpegNative.h"

#define LOG_TAG "H264Android.c"
#define LOGD(...) __android_log_print(ANDROID_LOG_DEBUG, LOG_TAG, __VA_ARGS__)

#ifdef __cplusplus
extern "C" {
#endif

// Video
struct AVCodecContext *pAVCodecCtx = NULL;
struct AVCodec *pAVCodec;
struct AVPacket mAVPacket;
struct AVFrame *pAVFrame = NULL;

// Audio (declared but not used in this example)
struct AVCodecContext *pAUCodecCtx = NULL;
struct AVCodec *pAUCodec;
struct AVPacket mAUPacket;
struct AVFrame *pAUFrame = NULL;

int iWidth = 0;
int iHeight = 0;

// YUV -> RGB565 lookup tables
int *colortab = NULL;
int *u_b_tab = NULL;
int *u_g_tab = NULL;
int *v_g_tab = NULL;
int *v_r_tab = NULL;

//short *tmp_pic=NULL;
unsigned int *rgb_2_pix = NULL;
unsigned int *r_2_pix = NULL;
unsigned int *g_2_pix = NULL;
unsigned int *b_2_pix = NULL;

void DeleteYUVTab() {
    // av_free(tmp_pic);
    av_free(colortab); // av_free(NULL) is a no-op
    av_free(rgb_2_pix);
    colortab = NULL;
    rgb_2_pix = NULL;
}

void CreateYUVTab_16() {
    int i;
    int u, v;

    DeleteYUVTab(); // free the tables of a previous H264DecoderInit() call
    // tmp_pic = (short*)av_malloc(iWidth*iHeight*2); // buffer of iWidth * iHeight * 16 bits

    // chroma terms: 1.772(U-128), 0.34414(U-128), 0.71414(V-128), 1.402(V-128)
    colortab = (int *) av_malloc(4 * 256 * sizeof(int));
    u_b_tab = &colortab[0 * 256];
    u_g_tab = &colortab[1 * 256];
    v_g_tab = &colortab[2 * 256];
    v_r_tab = &colortab[3 * 256];
    for (i = 0; i < 256; i++) {
        u = v = (i - 128);
        u_b_tab[i] = (int) (1.772 * u);
        u_g_tab[i] = (int) (0.34414 * u);
        v_g_tab[i] = (int) (0.71414 * v);
        v_r_tab[i] = (int) (1.402 * v);
    }

    // r/g/b_2_pix map a channel value in [-256, 511] to its RGB565 bits,
    // clamping values below 0 to 0 and values above 255 to 255
    rgb_2_pix = (unsigned int *) av_malloc(3 * 768 * sizeof(unsigned int));
    r_2_pix = &rgb_2_pix[0 * 768];
    g_2_pix = &rgb_2_pix[1 * 768];
    b_2_pix = &rgb_2_pix[2 * 768];
    for (i = 0; i < 256; i++) {
        r_2_pix[i] = 0;
        g_2_pix[i] = 0;
        b_2_pix[i] = 0;
    }
    for (i = 0; i < 256; i++) {
        r_2_pix[i + 256] = (i & 0xF8) << 8;
        g_2_pix[i + 256] = (i & 0xFC) << 3;
        b_2_pix[i + 256] = (i) >> 3;
    }
    for (i = 0; i < 256; i++) {
        r_2_pix[i + 512] = 0xF8 << 8;
        g_2_pix[i + 512] = 0xFC << 3;
        b_2_pix[i + 512] = 0x1F;
    }
    // shift the pointers so that index 0 corresponds to channel value 0
    r_2_pix += 256;
    g_2_pix += 256;
    b_2_pix += 256;
}

// Converts a YUV420P frame to RGB565. Each 32-bit word of pdst1 holds two
// horizontally adjacent pixels (left pixel in the low 16 bits); dst_ystride
// is the output width in pixels. Each inner iteration handles a 2x2 block.
void DisplayYUV_16(unsigned int *pdst1, unsigned char *y, unsigned char *u,
        unsigned char *v, int width, int height, int src_ystride,
        int src_uvstride, int dst_ystride) {
    int i, j;
    int r, g, b, rgb;
    int yy, ub, ug, vg, vr;
    unsigned char *yoff;
    unsigned char *uoff;
    unsigned char *voff;
    unsigned int *pdst = pdst1;
    int width2 = width / 2;
    int height2 = height / 2;

    // a frame wider than the output is cropped around its center
    if (width2 > iWidth / 2) {
        width2 = iWidth / 2;
        y += (width - iWidth) / 4 * 2;
        u += (width - iWidth) / 4;
        v += (width - iWidth) / 4;
    }
    // height2 counts pairs of rows, so limit it to iHeight / 2
    if (height2 > iHeight / 2)
        height2 = iHeight / 2;

    for (j = 0; j < height2; j++) {
        yoff = y + j * 2 * src_ystride;
        uoff = u + j * src_uvstride;
        voff = v + j * src_uvstride;
        for (i = 0; i < width2; i++) {
            // chroma shared by the 2x2 block
            ub = u_b_tab[*(uoff + i)];
            ug = u_g_tab[*(uoff + i)];
            vg = v_g_tab[*(voff + i)];
            vr = v_r_tab[*(voff + i)];

            // top row, left and right pixel
            yy = *(yoff + (i << 1));
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            rgb = r_2_pix[r] + g_2_pix[g] + b_2_pix[b];
            yy = *(yoff + (i << 1) + 1);
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            pdst[(j * dst_ystride + i)] = (rgb)
                    + ((r_2_pix[r] + g_2_pix[g] + b_2_pix[b]) << 16);

            // bottom row, left and right pixel
            yy = *(yoff + (i << 1) + src_ystride);
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            rgb = r_2_pix[r] + g_2_pix[g] + b_2_pix[b];
            yy = *(yoff + (i << 1) + src_ystride + 1);
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            pdst[((2 * j + 1) * dst_ystride + i * 2) >> 1] = (rgb)
                    + ((r_2_pix[r] + g_2_pix[g] + b_2_pix[b]) << 16);
        }
    }
}

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    H264DecoderInit
 * Signature: (II)I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_H264DecoderInit(
        JNIEnv *env, jobject jobj, jint width, jint height) {
    int ret;

    iWidth = width;
    iHeight = height;

    // release a decoder left over from a previous init
    if (pAVCodecCtx != NULL)
        avcodec_free_context(&pAVCodecCtx); // closes, frees, sets to NULL
    if (pAVFrame != NULL)
        av_frame_free(&pAVFrame); // frees and sets to NULL

    // Register all formats and codecs
    av_register_all();
    LOGD("avcodec register success");

    pAVCodec = avcodec_find_decoder(AV_CODEC_ID_H264);
    if (pAVCodec == NULL)
        return -1;

    // init AVCodecContext
    pAVCodecCtx = avcodec_alloc_context3(pAVCodec);
    if (pAVCodecCtx == NULL)
        return -1;

    /* we do not send complete frames */
    if (pAVCodec->capabilities & CODEC_CAP_TRUNCATED)
        pAVCodecCtx->flags |= CODEC_FLAG_TRUNCATED;

    /* open it; on failure return FFmpeg's negative error code */
    ret = avcodec_open2(pAVCodecCtx, pAVCodec, NULL);
    if (ret < 0)
        return ret;

    av_init_packet(&mAVPacket);

    pAVFrame = av_frame_alloc();
    if (pAVFrame == NULL)
        return -1;

    //pImageConvertCtx = sws_getContext(pAVCodecCtx->width, pAVCodecCtx->height, PIX_FMT_YUV420P, pAVCodecCtx->width, pAVCodecCtx->height,PIX_FMT_RGB565LE, SWS_BICUBIC, NULL, NULL, NULL);
    //LOGD("sws_getContext return =%d",pImageConvertCtx);
    LOGD("avcodec context success");

    CreateYUVTab_16();
    LOGD("create yuv table success");
    return 1;
}

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    H264DecoderRelease
 * Signature: ()I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_H264DecoderRelease(
        JNIEnv *env, jobject jobj) {
    if (pAVCodecCtx != NULL)
        avcodec_free_context(&pAVCodecCtx);
    if (pAVFrame != NULL)
        av_frame_free(&pAVFrame);
    DeleteYUVTab();
    return 1;
}

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    H264Decode
 * Signature: ([BI[B)I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_H264Decode(JNIEnv *env,
        jobject thiz, jbyteArray in, jint inbuf_size, jbyteArray out) {
    int len = -1, got_picture = 0;
    jbyte *inbuf;
    jbyte *Picture;

    if (pAVCodecCtx == NULL || pAVFrame == NULL)
        return -1; // H264DecoderInit() has not succeeded

    inbuf = (*env)->GetByteArrayElements(env, in, NULL);
    if (inbuf == NULL)
        return -1;
    Picture = (*env)->GetByteArrayElements(env, out, NULL);
    if (Picture == NULL) {
        (*env)->ReleaseByteArrayElements(env, in, inbuf, JNI_ABORT);
        return -1;
    }

    av_frame_unref(pAVFrame);
    mAVPacket.data = (uint8_t *) inbuf;
    mAVPacket.size = inbuf_size;
    LOGD("mAVPacket.size:%d\n", mAVPacket.size);

    len = avcodec_decode_video2(pAVCodecCtx, pAVFrame, &got_picture, &mAVPacket);
    LOGD("len:%d\n", len);

    if (len < 0) {
        LOGD("decode error: %d", len);
    } else if (got_picture > 0) {
        LOGD("GOT PICTURE");
        /*pImageConvertCtx = sws_getContext (pAVCodecCtx->width,
        pAVCodecCtx->height, pAVCodecCtx->pix_fmt,
        pAVCodecCtx->width, pAVCodecCtx->height,
        PIX_FMT_RGB565LE, SWS_BICUBIC, NULL, NULL, NULL);
        sws_scale (pImageConvertCtx, pAVFrame->data, pAVFrame->linesize,0, pAVCodecCtx->height, pAVFrame->data, pAVFrame->linesize);
        */
        // out must hold at least iWidth * iHeight * 2 bytes (RGB565)
        DisplayYUV_16((unsigned int *) Picture, pAVFrame->data[0],
                pAVFrame->data[1], pAVFrame->data[2], pAVCodecCtx->width,
                pAVCodecCtx->height, pAVFrame->linesize[0],
                pAVFrame->linesize[1], iWidth);
    } else {
        LOGD("GOT PICTURE fail");
    }

    // release on every path; the input is unchanged, so skip its copy-back
    (*env)->ReleaseByteArrayElements(env, in, inbuf, JNI_ABORT);
    (*env)->ReleaseByteArrayElements(env, out, Picture, 0);
    return len;
}

/*
 * Class:     com_lmy_ffmpegtest_FFmpegNative
 * Method:    GetFFmpegVersion
 * Signature: ()I
 */
JNIEXPORT jint JNICALL Java_com_lmy_ffmpegtest_FFmpegNative_GetFFmpegVersion(
        JNIEnv *env, jobject jobj) {
    // packed as (major << 16) | (minor << 8) | micro
    return avcodec_version();
}

#ifdef __cplusplus
}
#endif
```
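The tables in CreateYUVTab_16 precompute R = Y + 1.402(V-128), G = Y - 0.34414(U-128) - 0.71414(V-128) and B = Y + 1.772(U-128), clamp each channel to 0..255 and keep its top 5/6/5 bits. DisplayYUV_16 then stores two horizontally adjacent pixels per 32-bit word, the left one in the low 16 bits, which lands first in memory on little-endian ARM. The table-free sketch below (plain C, hypothetical function names) computes the same values with the same truncation, so it can be used to spot-check the decoder output. These are full-range (JPEG-style) BT.601 coefficients; most H.264 streams use limited-range levels, so colors may look slightly washed out.

```c
#include <stddef.h>
#include <stdint.h>

static int clamp255(int x) { return x < 0 ? 0 : (x > 255 ? 255 : x); }

/* One YUV pixel -> RGB565, truncating each chroma term toward zero exactly
 * like the (int) casts in CreateYUVTab_16(). */
uint16_t yuv_to_rgb565(int y, int u, int v) {
    int ub = (int) (1.772 * (u - 128));
    int ug = (int) (0.34414 * (u - 128));
    int vg = (int) (0.71414 * (v - 128));
    int vr = (int) (1.402 * (v - 128));
    int r = clamp255(y + vr);
    int g = clamp255(y - ug - vg);
    int b = clamp255(y + ub);
    /* bit layout RRRRRGGGGGGBBBBB */
    return (uint16_t) (((r & 0xF8) << 8) | ((g & 0xFC) << 3) | (b >> 3));
}

/* Two adjacent pixels in one word, left pixel in the low 16 bits. */
uint32_t pack_pixel_pair(uint16_t left, uint16_t right) {
    return (uint32_t) left | ((uint32_t) right << 16);
}

/* Index of the 32-bit word holding pixel (x, y) in an RGB565 buffer that is
 * width pixels wide (width assumed even). */
size_t rgb565_word_index(int x, int y, int width) {
    return ((size_t) y * (size_t) width + (size_t) x) / 2;
}
```

Since every pixel takes 2 bytes, the `byte[] out` passed to H264Decode needs at least iWidth * iHeight * 2 bytes.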

Write Android.mk:

```makefile
LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)
LOCAL_MODULE := avutil-54-prebuilt
LOCAL_SRC_FILES := prebuilt/libavutil-54.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avswresample-1-prebuilt
LOCAL_SRC_FILES := prebuilt/libswresample-1.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := swscale-3-prebuilt
LOCAL_SRC_FILES := prebuilt/libswscale-3.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avcodec-56-prebuilt
LOCAL_SRC_FILES := prebuilt/libavcodec-56.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avdevice-56-prebuilt
LOCAL_SRC_FILES := prebuilt/libavdevice-56.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avformat-56-prebuilt
LOCAL_SRC_FILES := prebuilt/libavformat-56.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := avfilter-5-prebuilt
LOCAL_SRC_FILES := prebuilt/libavfilter-5.so
include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)
LOCAL_MODULE := libffmpegutil
LOCAL_SRC_FILES := com_lmy_ffmpegtest_FFmpegNative.c
# the FFmpeg header folders were copied into jni/prebuilt
LOCAL_C_INCLUDES := $(LOCAL_PATH)/prebuilt
LOCAL_LDLIBS := -llog -ljnigraphics -lz -landroid -lm -pthread
LOCAL_SHARED_LIBRARIES := avcodec-56-prebuilt avdevice-56-prebuilt avfilter-5-prebuilt avformat-56-prebuilt avutil-54-prebuilt avswresample-1-prebuilt swscale-3-prebuilt
include $(BUILD_SHARED_LIBRARY)
```

Write Application.mk:

```makefile
APP_PLATFORM := android-9
```

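Omitting this line causes errors like `dlopen failed: cannot locate symbol "atof" referenced by "libavformat-56.so"`. This error typically appears on devices older than Android 5.0: the FFmpeg libraries were compiled against the android-L sysroot, whose headers declare atof as an ordinary libc function, while older platform headers defined it as an inline wrapper around strtod, so older libc.so builds do not export it. If the error persists, pointing SYSROOT in build_android.sh at an older platform (for example $NDK/platforms/android-9/arch-arm/) and rebuilding FFmpeg may also be necessary.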

Open a command prompt, change to the project directory, and run: `ndk-build`

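Test code:

```java
package com.lmy.ffmpegtest;

import android.app.Activity;
import android.os.Bundle;
import android.widget.TextView;

public class MainActivity extends Activity {

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        FFmpegNative s = new FFmpegNative();
        // packed libavcodec version: (major << 16) | (minor << 8) | micro
        int i = s.GetFFmpegVersion();
        ((TextView) findViewById(R.id.text)).setText(i + "");
    }
}
```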
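GetFFmpegVersion() returns avcodec_version(), which packs the version as (major << 16) | (minor << 8) | micro, so the TextView shows a single large integer. The hypothetical helpers below unpack it in C; the same shifts and masks work in Java before calling setText.

```c
#include <stdio.h>

/* Splits a packed (major << 16) | (minor << 8) | micro value. */
void unpack_av_version(unsigned int v, unsigned int *major,
                       unsigned int *minor, unsigned int *micro) {
    *major = v >> 16;
    *minor = (v >> 8) & 0xFF;
    *micro = v & 0xFF;
}

/* Formats it as "major.minor.micro"; returns snprintf's result. */
int format_av_version(char *buf, size_t len, unsigned int v) {
    unsigned int major, minor, micro;
    unpack_av_version(v, &major, &minor, &micro);
    return snprintf(buf, len, "%u.%u.%u", major, minor, micro);
}
```

For example, a displayed value of 3685476 decodes to 56.60.100.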

Project structure:

Code download: http://download.csdn.net/detail/h291850336/9166229