Real-time face detection on RTSP video with VLC for Android and OpenCV

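A recent project required an Android client to pull video over RTSP and run real-time face detection on it. The work breaks down into the following steps: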

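1. Fetch the RTSP stream with VLC

2. Take snapshots of the video playing in VLC in real time and save them to the SD card

3. Run face detection on the saved snapshot files with OpenCV

4. Show the detected faces on the main screen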

I found a public RTSP address online and used it as the RTSP video source.

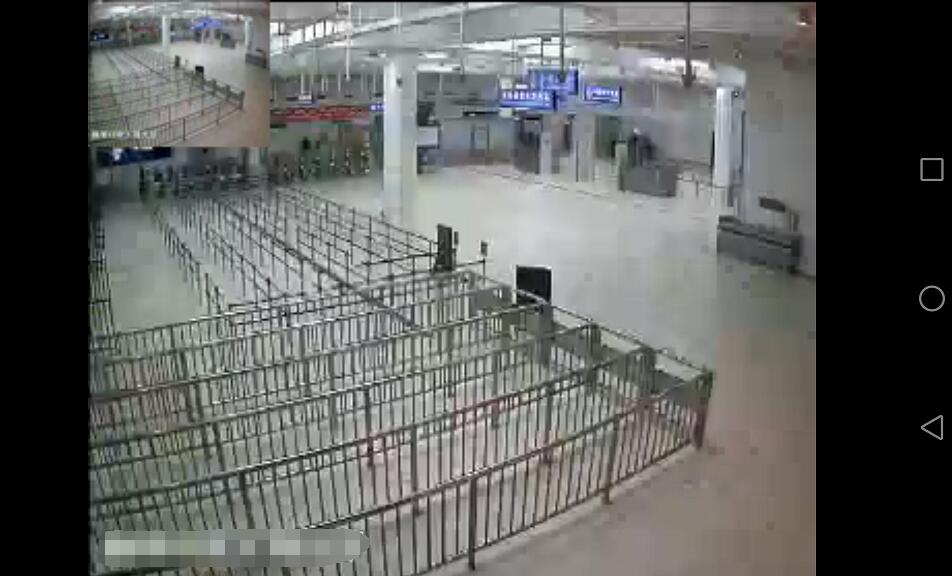

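First, here is how VLC plays the RTSP stream:

The thumbnail in the top-left corner is the snapshot that was just taken; it has already been saved to the SD card.

- Note: the public RTSP stream contains no faces, so a still image is used for testing instead.

Here is the face detection result:

The original test image is:

Note: to test with real snapshots, uncomment positions 1 and 2 in the timer code and comment out position 3; detection then runs directly on the captured snapshot. In the onResume listing in this post, positions 1 and 2 are the commented-out snapShot() and getFramePicture(picPath) calls, and position 3 is the BitmapFactory.decodeResource(...) line that loads the test image.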

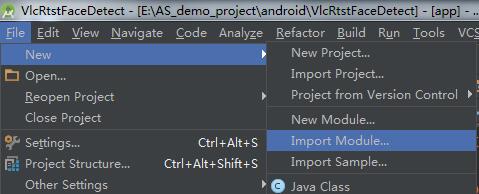

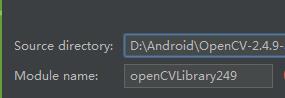

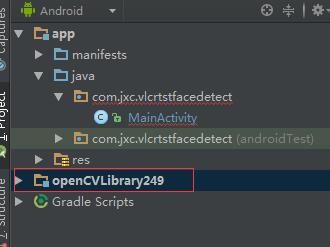

I. Setting up the environment

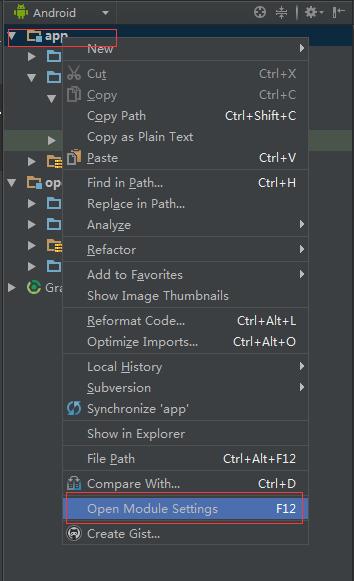

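1. Import OpenCV into the project as a module (I used version 2.4.9)

Note: the java folder shown here is also included in the archive I uploaded, so you can import the module the same way.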

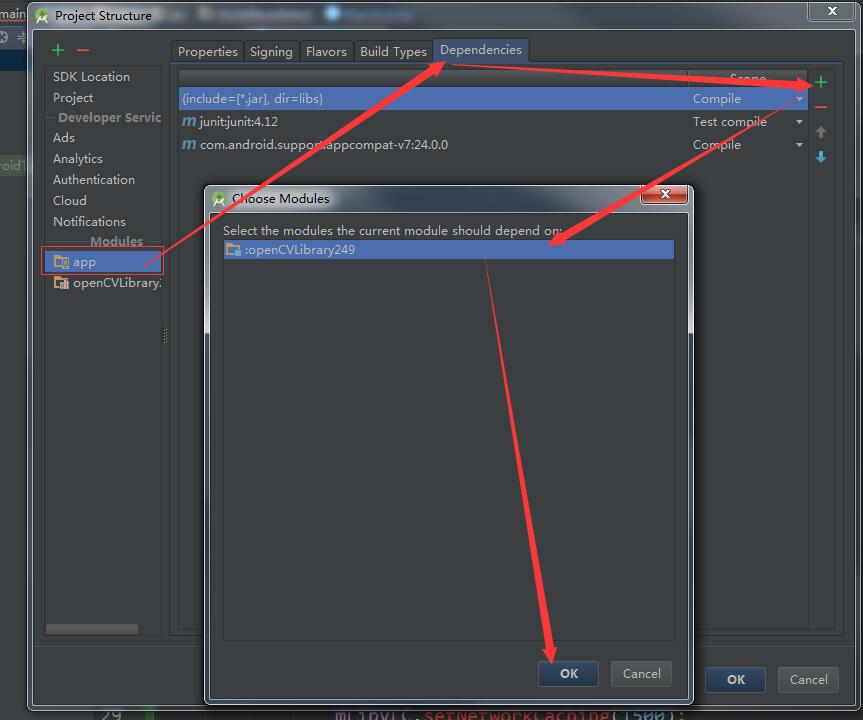

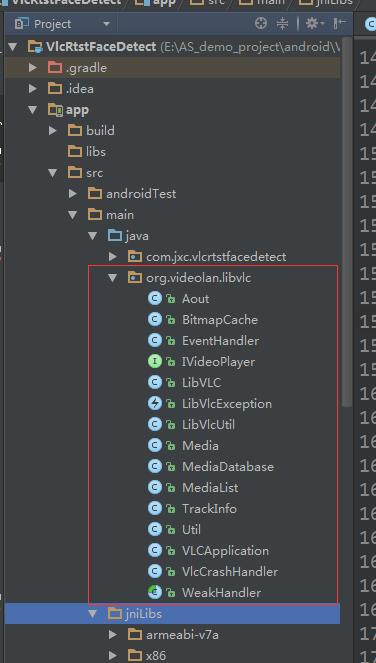

2. Copy the OpenCV and VLC .so libraries into the project. You can simply copy the jniLibs folder from my project into the main folder.

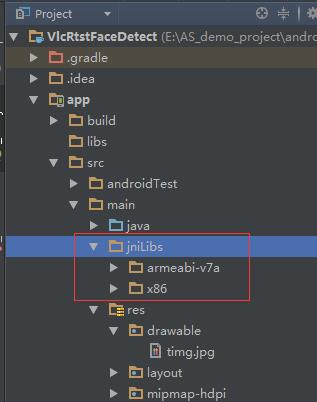

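3. Copy the VLC code you need into the project (copy it straight from my project, or download it from the official site)

4. Configure AndroidManifest.xml

<!-- Network access is required to fetch the RTSP video -->

<uses-permission android:name

That covers most of the configuration.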

II. Fetching the RTSP network video with VLC

public class MainActivity extends Activity {

private static final String TAG = "MainActivity";

private VideoPlayerFragment fragment;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

fragment = new VideoPlayerFragment();

//-------------

// other code

//-------------

try {

EventHandler em = EventHandler.getInstance();

em.addHandler(handler);

LibVLC mLibVLC = Util.getLibVlcInstance();

if (mLibVLC != null) {

mLibVLC.setSubtitlesEncoding("");

mLibVLC.setTimeStretching(false);

mLibVLC.setFrameSkip(true);

mLibVLC.setChroma("RV32");

mLibVLC.setVerboseMode(true);

mLibVLC.setAout(-1);

mLibVLC.setDeblocking(4);

mLibVLC.setNetworkCaching(1500);

// Test address

// String pathUri = "rtsp://218.204.223.237:554/live/1/66251FC11353191F/e7ooqwcfbqjoo80j.sdp";

String pathUri = "rtsp://218.204.223.237:554/live/1/67A7572844E51A64/f68g2mj7wjua3la7.sdp";

mLibVLC.playMyMRL(pathUri);

}

} catch (LibVlcException e) {

e.printStackTrace();

}

}

Handler handler = new Handler() {

public void handleMessage(Message msg) {

Log.d(TAG, "Event = " + msg.getData().getInt("event"));

switch (msg.getData().getInt("event")) {

case EventHandler.MediaPlayerPlaying:

case EventHandler.MediaPlayerPaused:

break;

case EventHandler.MediaPlayerStopped:

break;

case EventHandler.MediaPlayerEndReached:

break;

case EventHandler.MediaPlayerVout:

if (msg.getData().getInt("data") > 0) {

FragmentTransaction transaction = getFragmentManager().beginTransaction();

transaction.add(R.id.frame_layout, fragment);

transaction.commit();

}

break;

case EventHandler.MediaPlayerPositionChanged:

break;

case EventHandler.MediaPlayerEncounteredError:

AlertDialog dialog = new AlertDialog.Builder(MainActivity.this)

.setTitle("Notice")

.setMessage("Cannot connect to the network camera. Make sure the phone is connected to the same Wi-Fi hotspot as the camera.")

.setNegativeButton("OK", new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialogInterface, int i) {

finish();

}

}).create();

dialog.setCanceledOnTouchOutside(false);

dialog.show();

break;

default:

Log.d(TAG, "Event not handled ");

break;

}

}

};

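}

This code initializes VLC and then checks whether the RTSP video can be loaded. If it cannot, the Android phone and the camera producing the RTSP stream are most likely not on the same network. You have to sort that out yourself; connecting both to the same Wi-Fi is usually enough. The test address used here is public, so anyone should be able to fetch the video from it.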
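When the MediaPlayerEncounteredError dialog appears, it can help to check whether the camera's host and port are reachable at all before blaming VLC. The following is a minimal plain-Java sketch of that check, not part of the original project: the class name RtspReachability and its methods are hypothetical, and falling back to port 554 (the default RTSP port) when the URL names none is an assumption of this sketch. On Android the connection test would have to run off the main thread.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

/** Hypothetical helper for diagnosing RTSP connectivity; not from the original project. */
final class RtspReachability {

    /** Default RTSP port, used when the URL does not name one. */
    static final int DEFAULT_RTSP_PORT = 554;

    /** Extracts the host from an rtsp:// URL, rejecting anything else. */
    static String hostOf(String rtspUrl) {
        URI uri = URI.create(rtspUrl);
        if (!"rtsp".equalsIgnoreCase(uri.getScheme()) || uri.getHost() == null) {
            throw new IllegalArgumentException("Not an RTSP URL: " + rtspUrl);
        }
        return uri.getHost();
    }

    /** Extracts the port, falling back to the default when it is absent. */
    static int portOf(String rtspUrl) {
        int port = URI.create(rtspUrl).getPort(); // -1 when the URL has no port
        return port == -1 ? DEFAULT_RTSP_PORT : port;
    }

    /** Returns true if a TCP connection to host:port opens within timeoutMs. */
    static boolean canConnect(String rtspUrl, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(hostOf(rtspUrl), portOf(rtspUrl)), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // unreachable, refused, or timed out
        }
    }

    public static void main(String[] args) {
        String url = "rtsp://218.204.223.237:554/live/1/67A7572844E51A64/f68g2mj7wjua3la7.sdp";
        System.out.println(hostOf(url) + ":" + portOf(url));
        System.out.println("reachable: " + canConnect(url, 2000));
    }
}
```

A successful TCP connection only shows that the network path is open; the stream itself can still fail for other reasons, such as a stale URL.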

III. Taking and saving snapshots of the RTSP stream

public class VideoPlayerFragment extends Fragment implements IVideoPlayer {

public final static String TAG = "VideoPlayerFragment";

private SurfaceHolder surfaceHolder = null;

private LibVLC mLibVLC = null;

private int mVideoHeight;

private int mVideoWidth;

private int mSarDen;

private int mSarNum;

private int mUiVisibility = -1;

private static final int SURFACE_SIZE = 3;

private SurfaceView surfaceView = null;

private FaceRtspUtil mFaceUtil;

// Width of the snapshot image

private static final int PIC_WIDTH = 1280;

// Height of the snapshot image

private static final int PIC_HEIGHT = 720;

private String mPicCachePath;

private Timer mTimer;

@Override

public View onCreateView(LayoutInflater inflater, ViewGroup container, Bundle savedInstanceState) {

// Inflate the layout; init() also prepares the folder that stores the VLC snapshots

View view = inflater.inflate(R.layout.video_player, null);

init(view);

if (Util.isICSOrLater())

getActivity().getWindow().getDecorView().findViewById(android.R.id.content)

.setOnSystemUiVisibilityChangeListener(

new OnSystemUiVisibilityChangeListener() {

@Override

public void onSystemUiVisibilityChange(

int visibility) {

if (visibility == mUiVisibility)

return;

setSurfaceSize(mVideoWidth, mVideoHeight,

mSarNum, mSarDen);

if (visibility == View.SYSTEM_UI_FLAG_VISIBLE) {

Log.d(TAG, "onSystemUiVisibilityChange");

}

mUiVisibility = visibility;

}

});

try {

mLibVLC = LibVLC.getInstance();

if (mLibVLC != null) {

EventHandler em = EventHandler.getInstance();

em.addHandler(eventHandler);

}

} catch (LibVlcException e) {

e.printStackTrace();

Log.i(TAG, "onCreateView: " + e.getMessage());

}

return view;

}

@Override

public void onStart() {

super.onStart();

if (!mLibVLC.isPlaying()) {

mLibVLC.play();

}

}

@Override

public void onPause() {

super.onPause();

mLibVLC.stop();

mTimer.cancel();

}

private CascadeClassifier initializeOpenCVDependencies() {

CascadeClassifier classifier = null;

try {

InputStream is = getResources().openRawResource(R.raw.haarcascade_frontalface_alt);

File cascadeDir = getActivity().getDir("cascade", Context.MODE_PRIVATE);

File mCascadeFile = new File(cascadeDir, "haarcascade_frontalface_alt.xml");

FileOutputStream fos = new FileOutputStream(mCascadeFile);

byte[] bytes = new byte[4096];

int len;

while ((len = is.read(bytes)) != -1) {

fos.write(bytes, 0, len);

}

is.close();

fos.close();

classifier = new CascadeClassifier(mCascadeFile.getAbsolutePath());

} catch (Exception e) {

e.printStackTrace();

Log.e(TAG, "Error loading cascade", e);

}

return classifier;

}

@Override

public void onResume() {

super.onResume();

if (!OpenCVLoader.initDebug()) {

Log.e(TAG, "OpenCV init error");

}

CascadeClassifier classifier = initializeOpenCVDependencies();

mFaceUtil = new FaceRtspUtil(classifier, PIC_WIDTH, PIC_HEIGHT);

mTimer = new Timer();

// Start a timer that takes a snapshot and runs detection once per second

mTimer.schedule(new TimerTask() {

@Override

public void run() {

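// Position 1: grab a frame from VLC and save it to the SD card

// String picPath = snapShot();

//

// // Position 2: load the saved image as a Bitmap

// Bitmap oldBitmap = getFramePicture(picPath);

// Position 3: test image, used because the public stream has no faces

Bitmap oldBitmap = BitmapFactory.decodeResource(getResources(), R.drawable.timg);

// Run face detection on the image and collect every face found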

final List<Bitmap> faces = mFaceUtil.detectFrame(oldBitmap);

if (faces == null || faces.isEmpty()) {

return;

}

getActivity().runOnUiThread(new Runnable() {

@Override

public void run() {

// The callback is only set if the Activity registered one

if (callBack != null) {

callBack.pushData(faces);

}

}

});

}

}, 1000, 1000);

}

/**

* Initialize components

*/

private void init(View view) {

surfaceView = (SurfaceView) view.findViewById(R.id.main_surface);

surfaceHolder = surfaceView.getHolder();

surfaceHolder.setFormat(PixelFormat.RGBX_8888);

surfaceHolder.addCallback(mSurfaceCallback);

mPicCachePath = getSDPath() + "/FaceTest/";

File file = new File(mPicCachePath);

if (!file.exists()) {

file.mkdirs();

}

}

/**

* Take a snapshot

*/

private String snapShot() {

try {

String name = mPicCachePath + System.currentTimeMillis() + ".jpg";

// Call LibVLC's snapshot function with a target path and the image width and height

if (mLibVLC.takeSnapShot(name, PIC_WIDTH, PIC_HEIGHT)) {

Log.i(TAG, "snapShot: saved at " + System.currentTimeMillis());

return name;

}

Log.i(TAG, "snapShot: save failed");

} catch (Exception e) {

e.printStackTrace();

}

return null;

}

/**

* Load a Bitmap from a file path

*

* @param path file path

*/

private Bitmap getFramePicture(String path) {

if (TextUtils.isEmpty(path) || mFaceUtil == null) {

Log.i(TAG, "getFramePicture: path is empty or mFaceUtil == null");

return null;

}

File file = new File(path);

if (!file.exists()) {

return null;

}

return file2Bitmap(file);

}

private RtspCallBack callBack;

public void setRtspCallBack(RtspCallBack callBack) {

this.callBack = callBack;

}

public interface RtspCallBack {

void pushData(List<Bitmap> faces);

}

@Override

public void onConfigurationChanged(Configuration newConfig) {

setSurfaceSize(mVideoWidth, mVideoHeight, mSarNum, mSarDen);

super.onConfigurationChanged(newConfig);

}

/**

* attach the surface to the lib and detach it again

*/

private final Callback mSurfaceCallback = new Callback() {

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width,

int height) {

if (format == PixelFormat.RGBX_8888)

Log.d(TAG, "Pixel format is RGBX_8888");

else if (format == PixelFormat.RGB_565)

Log.d(TAG, "Pixel format is RGB_565");

else if (format == ImageFormat.YV12)

Log.d(TAG, "Pixel format is YV12");

else

Log.d(TAG, "Pixel format is other/unknown");

mLibVLC.attachSurface(holder.getSurface(),

VideoPlayerFragment.this);

}

@Override

public void surfaceCreated(SurfaceHolder holder) {

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

mLibVLC.detachSurface();

}

};

public final Handler mHandler = new VideoPlayerHandler(this);

private static class VideoPlayerHandler extends

WeakHandler<VideoPlayerFragment> {

public VideoPlayerHandler(VideoPlayerFragment owner) {

super(owner);

}

@Override

public void handleMessage(Message msg) {

VideoPlayerFragment activity = getOwner();

if (activity == null) // WeakReference could be GC'ed early

return;

switch (msg.what) {

case SURFACE_SIZE:

activity.changeSurfaceSize();

break;

}

}

}

private void changeSurfaceSize() {

// get screen size

int dw = getActivity().getWindow().getDecorView().getWidth();

int dh = getActivity().getWindow().getDecorView().getHeight();

// getWindow().getDecorView() doesn't always take orientation into

// account, we have to correct the values

boolean isPortrait = getResources().getConfiguration().orientation == Configuration.ORIENTATION_PORTRAIT;

if (dw > dh && isPortrait || dw < dh && !isPortrait) {

int d = dw;

dw = dh;

dh = d;

}

if (dw * dh == 0)

return;

// compute the aspect ratio

double ar, vw;

double density = (double) mSarNum / (double) mSarDen;

if (density == 1.0) {

/* No indication about the density, assuming 1:1 */

ar = (double) mVideoWidth / (double) mVideoHeight;

} else {

/* Use the specified aspect ratio */

vw = mVideoWidth * density;

ar = vw / mVideoHeight;

}

// compute the display aspect ratio

double dar = (double) dw / (double) dh;

if (dar < ar)

dh = (int) (dw / ar);

else

dw = (int) (dh * ar);

surfaceHolder.setFixedSize(mVideoWidth, mVideoHeight);

LayoutParams lp = surfaceView.getLayoutParams();

lp.width = dw;

lp.height = dh;

surfaceView.setLayoutParams(lp);

surfaceView.invalidate();

}

private final Handler eventHandler = new VideoPlayerEventHandler(this);

private static class VideoPlayerEventHandler extends

WeakHandler<VideoPlayerFragment> {

public VideoPlayerEventHandler(VideoPlayerFragment owner) {

super(owner);

}

@Override

public void handleMessage(Message msg) {

VideoPlayerFragment activity = getOwner();

if (activity == null)

return;

Log.d(TAG, "Event = " + msg.getData().getInt("event"));

switch (msg.getData().getInt("event")) {

case EventHandler.MediaPlayerPlaying:

Log.i(TAG, "MediaPlayerPlaying");

break;

case EventHandler.MediaPlayerPaused:

Log.i(TAG, "MediaPlayerPaused");

break;

case EventHandler.MediaPlayerStopped:

Log.i(TAG, "MediaPlayerStopped");

break;

case EventHandler.MediaPlayerEndReached:

Log.i(TAG, "MediaPlayerEndReached");

activity.getActivity().finish();

break;

case EventHandler.MediaPlayerVout:

activity.getActivity().finish();

break;

default:

Log.d(TAG, "Event not handled");

break;

}

}

}

@Override

public void onDestroy() {

if (mLibVLC != null) {

mLibVLC.stop();

}

EventHandler em = EventHandler.getInstance();

em.removeHandler(eventHandler);

super.onDestroy();

}

public void setSurfaceSize(int width, int height, int sar_num, int sar_den) {

if (width * height == 0)

return;

mVideoHeight = height;

mVideoWidth = width;

mSarNum = sar_num;

mSarDen = sar_den;

Message msg = mHandler.obtainMessage(SURFACE_SIZE);

mHandler.sendMessage(msg);

}

@Override

public void setSurfaceSize(int width, int height, int visible_width,

int visible_height, int sar_num, int sar_den) {

mVideoHeight = height;

mVideoWidth = width;

mSarNum = sar_num;

mSarDen = sar_den;

Message msg = mHandler.obtainMessage(SURFACE_SIZE);

mHandler.sendMessage(msg);

}

private String getSDPath() {

boolean hasSDCard = Environment.getExternalStorageState().equals(Environment.MEDIA_MOUNTED);

if (hasSDCard) {

return Environment.getExternalStorageDirectory().toString();

} else

return Environment.getDownloadCacheDirectory().toString();

}

private Bitmap file2Bitmap(File file) {

if (file == null) {

return null;

}

// decodeFile opens and closes the file itself, so no stream is left open;

// it returns null if the file cannot be decoded

return BitmapFactory.decodeFile(file.getAbsolutePath());

}

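}

The key part is the onResume method:

1. Initialize OpenCV: OpenCVLoader.initDebug()

2. Initialize the cascade classifier used for face detection:

CascadeClassifier classifier = initializeOpenCVDependencies();

3. Then create a timer that takes a snapshot and runs detection once every second:

mTimer = new Timer();

mTimer.schedule(….);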
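The timer step can be tried out in isolation. Below is a minimal plain-Java sketch of the same java.util.Timer pattern: schedule a task with an initial delay and a fixed period, then cancel the timer when it is no longer needed (the fragment does this in onPause). The class PeriodicTaskDemo, the method runPeriodically, and the counter standing in for "snapshot + detect" are hypothetical names for this illustration, not from the original project.

```java
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical demo of the schedule/cancel pattern used in onResume and onPause. */
final class PeriodicTaskDemo {

    /**
     * Runs a stand-in task every periodMs until it has run the requested
     * number of times, cancels the timer, and returns how often the task ran.
     */
    static int runPeriodically(long periodMs, int runs) {
        final AtomicInteger counter = new AtomicInteger();
        final CountDownLatch done = new CountDownLatch(runs);

        Timer timer = new Timer("snapshot-timer", true); // daemon thread
        timer.schedule(new TimerTask() {
            @Override
            public void run() {
                if (done.getCount() == 0) {
                    return; // enough runs; ignore ticks that arrive before cancel()
                }
                counter.incrementAndGet(); // stand-in for snapshot + face detection
                done.countDown();
            }
        }, periodMs, periodMs); // same shape as schedule(task, 1000, 1000)

        try {
            done.await(periodMs * (runs + 5) + 1000, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            timer.cancel(); // counterpart of mTimer.cancel() in onPause
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("task ran " + runPeriodically(100, 3) + " times");
    }
}
```

A Timer runs all of its tasks on one background thread, so a detection pass that takes longer than the period delays the next tick rather than overlapping with it.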

IV. Face detection

public class FaceRtspUtil {

private static final String TAG = "FaceUtil";

private Mat grayscaleImage;

private CascadeClassifier cascadeClassifier = null;

public FaceRtspUtil(CascadeClassifier cascadeClassifier, int width, int height) {

this.cascadeClassifier = cascadeClassifier;

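// A face should be at least 10% of the source image height

// (note: the detectMultiScale call below uses a fixed 60x60 minimum instead)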

grayscaleImage = new Mat(height, width, CvType.CV_8UC4);

}

/**

* Checks whether the given image contains any faces.

*

* @param oldBitmap the image to scan

* @return a List holding every detected face, or null if none was found

*/

public List<Bitmap> detectFrame(Bitmap oldBitmap) {

Mat aInputFrame = new Mat();

if (oldBitmap == null) {

return null;

}

Utils.bitmapToMat(oldBitmap, aInputFrame);

if (grayscaleImage == null) {

Log.i(TAG, "detectFrame: aInputFrame == null || grayscaleImage == null");

return null;

}

// Convert the RGBA frame to single-channel grayscale for the cascade classifier

Imgproc.cvtColor(aInputFrame, grayscaleImage, Imgproc.COLOR_RGBA2GRAY);

MatOfRect faces = new MatOfRect();

// Detect faces with the cascade classifier

if (cascadeClassifier != null) {

// Ignore faces smaller than 60x60

cascadeClassifier.detectMultiScale(grayscaleImage, faces, 1.1, 2, 2,

new Size(60, 60), new Size());

}

// facesArray holds the position and size of every detected face

Rect[] facesArray = faces.toArray();

if (facesArray == null || facesArray.length == 0) {

// No faces: return early

Log.i(TAG, "detectFrame: no face found in this image");

return null;

}

// Collect all faces found in this frame

List<Bitmap> bitmaps = new ArrayList<>();

Bitmap tmpBitmap = Bitmap.createBitmap(aInputFrame.width(), aInputFrame.height(), Bitmap.Config.RGB_565);

Utils.matToBitmap(aInputFrame, tmpBitmap);

for (Rect aFacesArray : facesArray) {

Bitmap bitmap = Bitmap.createBitmap(tmpBitmap, aFacesArray.x, aFacesArray.y,

aFacesArray.width, aFacesArray.height);

bitmaps.add(bitmap);

}

// Recycle the full-frame bitmap

tmpBitmap.recycle();

return bitmaps;

}

}

Pass in an image and get back a List of the faces found in it.
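Two pieces of arithmetic in this class are worth isolating. The constructor comment asks for a minimum face size of 10% of the frame height, while the detector call uses a fixed 60x60. Separately, Bitmap.createBitmap(source, x, y, width, height) throws an IllegalArgumentException if the requested region extends past the source bitmap. The following plain-Java sketch shows one way to compute the size and to clamp a rectangle defensively before cropping. FaceRectMath and both methods are hypothetical helpers, not part of the original project, and detector rectangles normally already lie inside the frame.

```java
/** Hypothetical helpers for the rectangle arithmetic around face cropping. */
final class FaceRectMath {

    /** Minimum face edge in pixels, as a fraction of the frame height. */
    static int minFaceSize(int frameHeight, double fraction) {
        return (int) Math.round(frameHeight * fraction);
    }

    /**
     * Clamps the rectangle (x, y, w, h) to an image of imgW x imgH pixels.
     * Returns {x, y, width, height} of the visible part, or null when the
     * rectangle lies completely outside the image.
     */
    static int[] clamp(int x, int y, int w, int h, int imgW, int imgH) {
        int left = Math.max(0, x);
        int top = Math.max(0, y);
        int right = Math.min(imgW, x + w);
        int bottom = Math.min(imgH, y + h);
        if (right <= left || bottom <= top) {
            return null; // nothing left to crop
        }
        return new int[] {left, top, right - left, bottom - top};
    }

    public static void main(String[] args) {
        // 10% of the 720-pixel snapshot height used in this post
        System.out.println("min face size: " + minFaceSize(720, 0.10));

        // A rectangle that sticks out past the bottom-right corner of a 1280x720 frame
        int[] r = clamp(1250, 700, 60, 60, 1280, 720);
        System.out.println(r[0] + "," + r[1] + " " + r[2] + "x" + r[3]);
    }
}
```

With the 1280x720 snapshot size used here, the 10% rule would give a minimum of 72 pixels, slightly stricter than the fixed 60 in the detector call.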

V. Displaying the detected faces

Here an interface callback passes the faces from the Fragment to the Activity.

When the Activity receives the faces, it creates an ImageView for each one to display it:

final LinearLayout ll_faces = (LinearLayout) findViewById(R.id.ll_faces);

fragment.setRtspCallBack(new VideoPlayerFragment.RtspCallBack() {

@Override

public void pushData(final List<Bitmap> faces) {

// Remove all child views

ll_faces.removeAllViews();

for (int i = 0; i < faces.size(); i++) {

ImageView image = new ImageView(MainActivity.this);

image.setImageBitmap(faces.get(i));

LinearLayout.LayoutParams params = new LinearLayout.LayoutParams(-2, -2);

ll_faces.addView(image, params);

}

}

});
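The interface-callback hand-off between Fragment and Activity can be reduced to a small plain-Java sketch. It uses strings in place of Bitmap objects so it runs without Android. CallbackDemo, Producer, and Consumer are hypothetical stand-ins for the fragment and the activity; only the pushData method name is taken from the post. The sketch also guards against a callback that was never registered, which is an addition of this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical plain-Java version of the Fragment-to-Activity callback. */
final class CallbackDemo {

    /** Same role as RtspCallBack, with String standing in for Bitmap. */
    interface FaceCallback {
        void pushData(List<String> faces);
    }

    /** Plays the role of the fragment: finds faces and hands them over. */
    static final class Producer {
        private FaceCallback callback;

        void setCallback(FaceCallback callback) {
            this.callback = callback;
        }

        void publish(List<String> faces) {
            FaceCallback cb = callback;
            if (cb == null || faces == null || faces.isEmpty()) {
                return; // nobody is listening, or there is nothing to show
            }
            cb.pushData(faces);
        }
    }

    /** Plays the role of the activity: replaces what is shown on each update. */
    static final class Consumer implements FaceCallback {
        final List<String> shown = new ArrayList<>();

        @Override
        public void pushData(List<String> faces) {
            shown.clear(); // like ll_faces.removeAllViews()
            shown.addAll(faces); // like adding one ImageView per face
        }
    }

    public static void main(String[] args) {
        Producer producer = new Producer();
        Consumer consumer = new Consumer();
        producer.setCallback(consumer);
        producer.publish(Arrays.asList("face-1", "face-2"));
        System.out.println(consumer.shown);
    }
}
```

Keeping the interface inside the producer's class, as the post does with VideoPlayerFragment.RtspCallBack, means the fragment never needs to know which Activity hosts it.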