深度學習:多層感知機MLP數字識別的程式碼實現

深度學習我看的是neural network and deep learning 這本書,這本書寫的真的非常好,是我的導師推薦的。這篇部落格裡的程式碼也是來自於這,我最近是在學習Pytorch,學習的過程我覺得還是有必要把程式碼自己敲一敲,就像當初學習機器學習一樣。也是希望通過這個程式碼能夠加深對反向傳播原路的認識。

在下面的程式碼中,比較複雜的部分就是mini_batch部分了,我們一定要有清晰的認識,我們在上一篇部落格中給出了反向傳播方法四公式,而且在文章的末尾又給出了使用這四個公式的方法。

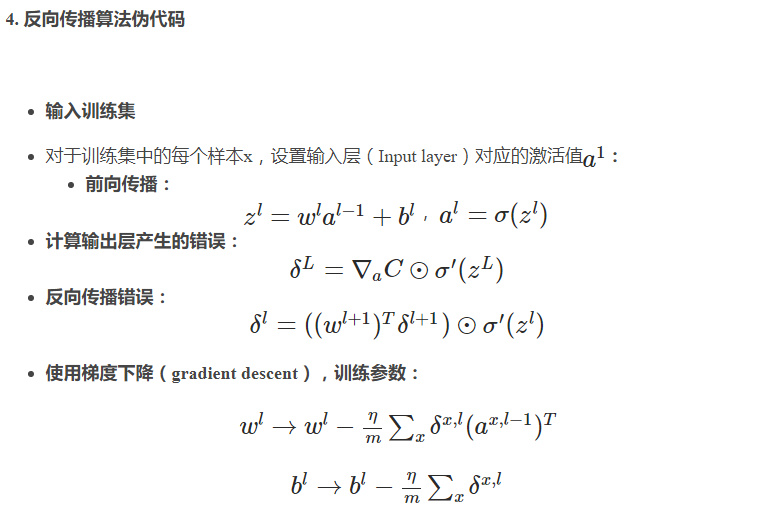

所以牢牢記住這個個流程,一共分為:

1.輸入訓練集

2.前向傳播

3.計算輸出層產生的錯誤

4.反向傳播的錯誤

5.使用梯度下降,訓練引數

我們將在下面的程式碼中用以上的幾個數字(1到5)來表示當前程式碼是哪個過程

import random

import mnist_loader

import numpy as np

class Network(object):

def __init__(self, sizes): """The list ``sizes`` contains the number of neurons in the respective layers of the network. For example, if the list was [2, 3, 1] then it would be a three-layer network, with the first layer containing 2 neurons, the second layer 3 neurons, and the third layer 1 neuron. The biases and weights for the network are initialized randomly, using a Gaussian distribution with mean 0, and variance 1. Note that the first layer is assumed to be an input layer, and by convention we won't set any biases for those neurons, since biases are only ever used in computing the outputs from later layers.""" self.num_layers = len(sizes) self.sizes = sizes self.biases = [np.random.randn(y, 1) for y in sizes[1:]] self.weights = [np.random.randn(y, x) for x, y in zip(sizes[:-1], sizes[1:])]

前向傳播方法

def feedforward(self, a):

“”“Return the output of the network ifais input.”""

for b, w in zip(self.biases, self.weights):

a = sigmoid(np.dot(w, a)+b)

return a

隨機梯度方法

def SGD(self, training_data, epochs, mini_batch_size, eta,

test_data=None):

“”“Train the neural network using mini-batch stochastic

gradient descent. Thetraining_datais a list of tuples

(x, y)representing the training inputs and the desired

outputs. The other non-optional parameters are

self-explanatory. Iftest_datais provided then the

network will be evaluated against the test data after each

epoch, and partial progress printed out. This is useful for

tracking progress, but slows things down substantially.”""

training_data = list(training_data)

n = len(training_data)

if test_data:

test_data = list(test_data)

n_test = len(test_data)

打亂資料,然後按照mini_batch大小取資料,在這裡有update_mini_batch方法,這裡面就是反向傳播的核心功能。還有evaluate方法,這裡包含feedforward方法。

for j in range(epochs):

random.shuffle(training_data)

mini_batches = [

training_data[k:k+mini_batch_size]

for k in range(0, n, mini_batch_size)]

for mini_batch in mini_batches:

self.update_mini_batch(mini_batch, eta)

if test_data:

print(“Epoch {} : {} / {}”.format(j,self.evaluate(test_data),n_test));

else:

print(“Epoch {} complete”.format(j))

在這個程式碼裡最核心的部分便是

def update_mini_batch(self, mini_batch, eta):

“”“Update the network’s weights and biases by applying

gradient descent using backpropagation to a single mini batch.

Themini_batchis a list of tuples(x, y), andeta

is the learning rate.”""

nabla_b = [np.zeros(b.shape) for b in self.biases]

nabla_w = [np.zeros(w.shape) for w in self.weights]

for x, y in mini_batch:

delta_nabla_b, delta_nabla_w = self.backprop(x, y)

nabla_b = [nb+dnb for nb, dnb in zip(nabla_b, delta_nabla_b)]

nabla_w = [nw+dnw for nw, dnw in zip(nabla_w, delta_nabla_w)]

下面這個程式碼是屬於過程5,使用梯度下降,訓練引數

self.weights = [w-(eta/len(mini_batch))*nw

for w, nw in zip(self.weights, nabla_w)]

self.biases = [b-(eta/len(mini_batch))*nb

for b, nb in zip(self.biases, nabla_b)]

def backprop(self, x, y):

“”“Return a tuple(nabla_b, nabla_w)representing the

gradient for the cost function C_x.nabla_band

nabla_ware layer-by-layer lists of numpy arrays, similar

toself.biasesandself.weights.”""

nabla_b = [np.zeros(b.shape) for b in self.biases]

nabla_w = [np.zeros(w.shape) for w in self.weights]

activation = x

activations = [x]

zs = []

for b, w in zip(self.biases, self.weights):

z = np.dot(w, activation)+b

zs.append(z)

activation = sigmoid(z)

activations.append(activation)

下面這個程式碼是計算輸出層誤差

delta = self.cost_derivative(activations[-1], y) * \

sigmoid_prime(zs[-1])

下面這個公式屬於反向傳播公式3

nabla_b[-1] = delta

下面這個公式屬於反向創博公式4

nabla_w[-1] = np.dot(delta, activations[-2].transpose())

for l in range(2, self.num_layers):

z = zs[-l]

sp = sigmoid_prime(z)

delta = np.dot(self.weights[-l+1].transpose(), delta) * sp

nabla_b[-l] = delta

nabla_w[-l] = np.dot(delta, activations[-l-1].transpose())

return (nabla_b, nabla_w)

def evaluate(self, test_data):

"""Return the number of test inputs for which the neural

network outputs the correct result. Note that the neural

network's output is assumed to be the index of whichever

neuron in the final layer has the highest activation."""

test_results = [(np.argmax(self.feedforward(x)), y)

for (x, y) in test_data]

return sum(int(x == y) for (x, y) in test_results)

def cost_derivative(self, output_activations, y):

"""Return the vector of partial derivatives \partial C_x /

\partial a for the output activations."""

return (output_activations-y)

def sigmoid(z):

“”“The sigmoid function.”""

return 1.0/(1.0+np.exp(-z))

def sigmoid_prime(z):

“”“Derivative of the sigmoid function.”""

return sigmoid(z)*(1-sigmoid(z))

if name == ‘main’:

training_data, validation_data, test_data = mnist_loader.load_data_wrapper()

net = Network([784, 30, 10])

net.SGD(training_data, 30, 10, 100.0, test_data=test_data)