opencv2 預測演算法之Kalman濾波器(KalmanFilter)

轉自:http://blog.csdn.net/gdfsg/article/details/50904811

本文將簡要回顧一下卡爾曼濾波理論,然後詳細介紹如何在OpenCV中使用卡爾曼濾波進行跟蹤,最後給兩個程式例項。

1. 卡爾曼濾波理論回顧

對於一個動態系統,我們首先定義一組狀態空間方程

狀態方程:

測量方程:

xk是狀態向量,zk是測量向量,Ak是狀態轉移矩陣,uk是控制向量,Bk是控制矩陣,wk是系統誤差(噪聲),Hk是測量矩陣,vk是測量誤差(噪聲)。wk和vk都是高斯噪聲,即

整個卡爾曼濾波的過程就是個遞推計算的過程,不斷的“預測——更新——預測——更新……”

預測

預測狀態值:

預測最小均方誤差:

更新

測量誤差:

測量協方差:

最優卡爾曼增益:

修正狀態值:

修正最小均方誤差:

2.OpenCV中的KalmanFilter詳解

OpenCV中有兩個版本的卡爾曼濾波方法KalmanFilter(C++)和CvKalman(C),用法差不太多,這裡只介紹KalmanFilter。

C++版本中將KalmanFilter封裝到一個類中,其結構如下所示:

[cpp] view plain copy print?- class CV_EXPORTS_W KalmanFilter

- {

- public:

- CV_WRAP KalmanFilter(); //構造預設KalmanFilter物件

- CV_WRAP KalmanFilter(int dynamParams, int measureParams, int controlParams=0, int type=CV_32F); //完整構造KalmanFilter物件方法

- void init(int dynamParams, int measureParams, int controlParams=0, int type=CV_32F); //初始化KalmanFilter物件,會替換原來的KF物件

- CV_WRAP const Mat& predict(const Mat& control=Mat()); //計算預測的狀態值

- CV_WRAP const Mat& correct(const Mat& measurement); //根據測量值更新狀態值

- Mat statePre; //預測值 (x'(k)): x(k)=A*x(k-1)+B*u(k)

- Mat statePost; //狀態值 (x(k)): x(k)=x'(k)+K(k)*(z(k)-H*x'(k))

- Mat transitionMatrix; //狀態轉移矩陣 (A)

- Mat controlMatrix; //控制矩陣 B

- Mat measurementMatrix; //測量矩陣 H

- Mat processNoiseCov; //系統誤差 Q

- Mat measurementNoiseCov; //測量誤差 R

- Mat errorCovPre; //最小均方誤差 (P'(k)): P'(k)=A*P(k-1)*At + Q)

- Mat gain; //卡爾曼增益 (K(k)): K(k)=P'(k)*Ht*inv(H*P'(k)*Ht+R)

- Mat errorCovPost; //修正的最小均方誤差 (P(k)): P(k)=(I-K(k)*H)*P'(k)

- // 臨時矩陣

- Mat temp1;

- Mat temp2;

- Mat temp3;

- Mat temp4;

- Mat temp5;

- };

- enum

- {

- OPTFLOW_USE_INITIAL_FLOW = CV_LKFLOW_INITIAL_GUESSES,

- OPTFLOW_LK_GET_MIN_EIGENVALS = CV_LKFLOW_GET_MIN_EIGENVALS,

- OPTFLOW_FARNEBACK_GAUSSIAN = 256

- };

class CV_EXPORTS_W KalmanFilter

{

public:

CV_WRAP KalmanFilter(); //構造預設KalmanFilter物件

CV_WRAP KalmanFilter(int dynamParams, int measureParams, int controlParams=0, int type=CV_32F); //完整構造KalmanFilter物件方法

void init(int dynamParams, int measureParams, int controlParams=0, int type=CV_32F); //初始化KalmanFilter物件,會替換原來的KF物件

CV_WRAP const Mat& predict(const Mat& control=Mat()); //計算預測的狀態值

CV_WRAP const Mat& correct(const Mat& measurement); //根據測量值更新狀態值

Mat statePre; //預測值 (x'(k)): x(k)=A*x(k-1)+B*u(k)

Mat statePost; //狀態值 (x(k)): x(k)=x'(k)+K(k)*(z(k)-H*x'(k))

Mat transitionMatrix; //狀態轉移矩陣 (A)

Mat controlMatrix; //控制矩陣 B

Mat measurementMatrix; //測量矩陣 H

Mat processNoiseCov; //系統誤差 Q

Mat measurementNoiseCov; //測量誤差 R

Mat errorCovPre; //最小均方誤差 (P'(k)): P'(k)=A*P(k-1)*At + Q)

Mat gain; //卡爾曼增益 (K(k)): K(k)=P'(k)*Ht*inv(H*P'(k)*Ht+R)

Mat errorCovPost; //修正的最小均方誤差 (P(k)): P(k)=(I-K(k)*H)*P'(k)

// 臨時矩陣

Mat temp1;

Mat temp2;

Mat temp3;

Mat temp4;

Mat temp5;

};

enum

{

OPTFLOW_USE_INITIAL_FLOW = CV_LKFLOW_INITIAL_GUESSES,

OPTFLOW_LK_GET_MIN_EIGENVALS = CV_LKFLOW_GET_MIN_EIGENVALS,

OPTFLOW_FARNEBACK_GAUSSIAN = 256

};函式原型見:…..\OpenCV2\sources\modules\ocl\src\kalman.cpp

只有四個方法: 構造KF物件KalmanFilter(DP,MP,CP)、初始化KF物件init(DP,MP,CP)、預測predict( )、更新correct( )。除非你要重新構造KF物件,否則用不到init( )。

KalmanFilter(DP,MP,CP)和init( )就是賦值,沒什麼好說的。

注意:KalmanFilter結構體中並沒有測量值,測量值需要自己定義,而且一定要定義,因為後面要用。

程式設計步驟step1:定義KalmanFilter類並初始化

//構造KF物件

KalmanFilter KF(DP, MP, 0);

//初始化相關引數

KF.transitionMatrix 轉移矩陣 A

KF.measurementMatrix 測量矩陣 H

KF.processNoiseCov 過程噪聲 Q

KF.measurementNoiseCov 測量噪聲 R

KF.errorCovPost 最小均方誤差 P

KF.statePost 系統初始狀態 x(0)

Mat measurement 定義初始測量值 z(0)

step2:預測

KF.predict( ) //返回的是下一時刻的狀態值KF.statePost (k+1)

step3:更新

更新measurement; //注意measurement不能通過觀測方程進行計算得到,要自己定義!

更新KF KF.correct(measurement)

最終的結果應該是更新後的statePost.

相關引數的確定

對於系統狀態方程,簡記為Y=AX+B,X和Y是表示系統狀態的列向量,A是轉移矩陣,B是其他項。

狀態值(向量)只要能表示系統的狀態即可,狀態值的維數決定了轉移矩陣A的維數,比如X和Y是N×1的,則A是N×N的。

A的確定跟X有關,只要保證方程中不相干項的係數為0即可,看下面例子

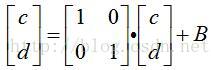

X和Y是二維的,

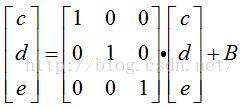

X和Y是三維的,

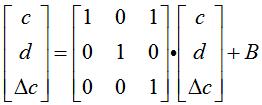

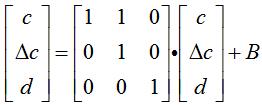

X和Y是三維的,但c和△ c是相關項

上面的1也可以是其他值。

下面對predict( ) 和correct( )函式介紹下,可以不用看,不影響程式設計。

[cpp] view plain copy print?- CV_EXPORTS const oclMat& KalmanFilter::predict(const oclMat& control)

- {

- gemm(transitionMatrix, statePost, 1, oclMat(), 0, statePre);

- oclMat temp;

- if(control.data)

- gemm(controlMatrix, control, 1, statePre, 1, statePre);

- gemm(transitionMatrix, errorCovPost, 1, oclMat(), 0, temp1);

- gemm(temp1, transitionMatrix, 1, processNoiseCov, 1, errorCovPre, GEMM_2_T);

- statePre.copyTo(statePost);

- return statePre;

- }

CV_EXPORTS const oclMat& KalmanFilter::predict(const oclMat& control)

{

gemm(transitionMatrix, statePost, 1, oclMat(), 0, statePre);

oclMat temp;

if(control.data)

gemm(controlMatrix, control, 1, statePre, 1, statePre);

gemm(transitionMatrix, errorCovPost, 1, oclMat(), 0, temp1);

gemm(temp1, transitionMatrix, 1, processNoiseCov, 1, errorCovPre, GEMM_2_T);

statePre.copyTo(statePost);

return statePre;

}gemm( )是矩陣的廣義乘法

void gemm(const GpuMat& src1, constGpuMat& src2, double alpha, const GpuMat& src3, double beta,GpuMat& dst, int flags=0, Stream& stream=Stream::Null())

dst = alpha · src1 · src2 +beta· src3

上面,oclMat()其實是uk,只不過預設為0,所以沒賦值。整個過程就計算了x'和P’。(用x'代表x的預測值,用P'代表P的預測值)。GEMM_2_T表示對第2個引數轉置。

可見,和第一部分的理論介紹完全一致。 [cpp] view plain copy print?x’(k)=1·A·x(k-1)

如果B非空, x'(k) = 1·B·u + 1·x'(k-1)

temp1 = 1·A·P(k-1) + 0·u(k)

P’(k) = 1· temp1·AT + 1· Qk= A·P(k-1)·AT + 1· Qk

- CV_EXPORTS const oclMat& KalmanFilter::correct(const oclMat& measurement)

- {

- CV_Assert(measurement.empty() == false);

- gemm(measurementMatrix, errorCovPre, 1, oclMat(), 0, temp2);

- gemm(temp2, measurementMatrix, 1, measurementNoiseCov, 1, temp3, GEMM_2_T);

- Mat temp;

- solve(Mat(temp3), Mat(temp2), temp, DECOMP_SVD);

- temp4.upload(temp);

- gain = temp4.t();

- gemm(measurementMatrix, statePre, -1, measurement, 1, temp5);

- gemm(gain, temp5, 1, statePre, 1, statePost);

- gemm(gain, temp2, -1, errorCovPre, 1, errorCovPost);

- return statePost;

- }

CV_EXPORTS const oclMat& KalmanFilter::correct(const oclMat& measurement)

{

CV_Assert(measurement.empty() == false);

gemm(measurementMatrix, errorCovPre, 1, oclMat(), 0, temp2);

gemm(temp2, measurementMatrix, 1, measurementNoiseCov, 1, temp3, GEMM_2_T);

Mat temp;

solve(Mat(temp3), Mat(temp2), temp, DECOMP_SVD);

temp4.upload(temp);

gain = temp4.t();

gemm(measurementMatrix, statePre, -1, measurement, 1, temp5);

gemm(gain, temp5, 1, statePre, 1, statePost);

gemm(gain, temp2, -1, errorCovPre, 1, errorCovPost);

return statePost;

}求解線型最小二乘估計

temp2 = 1· H·P’ + 0·u(k)

temp3 = 1· temp2·HT + 1·R = H·P’·HT+ 1· R 也就是上面的Sk

temp = argmin||tem2- temp3||

K=temp

temp5 = -1· H·x’ + 1·zk 就是上面的y’。

x = 1·K·temp5 + 1·x’ = KT·y’ +x’

P =-1·K·temp2 + 1·P’ = -K·H·P’+P’ = (I- K·H) P’也和第一部分的理論完全一致。

通過深入函式內部,學到了兩個實用的函式哦。矩陣廣義乘法gemm( )、最小二乘估計solve( )

補充:

1)以例2為例,為什麼狀態值一般都設定成(x,y,△x,△y)?我們不妨設定成(x,y,△x),對應的轉移矩陣也改成3×3的。可以看到仍能跟上,不過在x方向跟蹤速度快,在y方向跟蹤速度慢。進一步設定成(x,y)和2×2的轉移矩陣,程式的跟蹤速度簡直是龜速。所以,簡單理解,△x和△y嚴重影響對應方向上的跟蹤速度。

3.例項

例1 OpenCV自帶的示例程式

[cpp] view plain copy print?- #include "opencv2/video/tracking.hpp"

- #include "opencv2/highgui/highgui.hpp"

- #include <iostream>

- #include <stdio.h>

- usingnamespace std;

- usingnamespace cv;

- //計算相對視窗的座標值,因為座標原點在左上角,所以sin前有個負號

- staticinline Point calcPoint(Point2f center, double R, double angle)

- {

- return center + Point2f((float)cos(angle), (float)-sin(angle))*(float)R;

- }

- staticvoid help()

- {

- printf( "\nExamle of c calls to OpenCV's Kalman filter.\n"

- " Tracking of rotating point.\n"

- " Rotation speed is constant.\n"

- " Both state and measurements vectors are 1D (a point angle),\n"

- " Measurement is the real point angle + gaussian noise.\n"

- " The real and the estimated points are connected with yellow line segment,\n"

- " the real and the measured points are connected with red line segment.\n"

- " (if Kalman filter works correctly,\n"

- " the yellow segment should be shorter than the red one).\n"

- "\n"

- " Pressing any key (except ESC) will reset the tracking with a different speed.\n"

- " Pressing ESC will stop the program.\n"

- );

- }

- int main(int, char**)

- {

- help();

- Mat img(500, 500, CV_8UC3);

- KalmanFilter KF(2, 1, 0); //建立卡爾曼濾波器物件KF

- Mat state(2, 1, CV_32F); //state(角度,△角度)

- Mat processNoise(2, 1, CV_32F);

- Mat measurement = Mat::zeros(1, 1, CV_32F); //定義測量值

- char code = (char)-1;

- for(;;)

- {

- //1.初始化

- randn( state, Scalar::all(0), Scalar::all(0.1) ); //

- KF.transitionMatrix = *(Mat_<float>(2, 2) << 1, 1, 0, 1); //轉移矩陣A[1,1;0,1]

- //將下面幾個矩陣設定為對角陣

- setIdentity(KF.measurementMatrix); //測量矩陣H

- setIdentity(KF.processNoiseCov, Scalar::all(1e-5)); //系統噪聲方差矩陣Q

- setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1)); //測量噪聲方差矩陣R

- setIdentity(KF.errorCovPost, Scalar::all(1)); //後驗錯誤估計協方差矩陣P

- randn(KF.statePost, Scalar::all(0), Scalar::all(0.1)); //x(0)初始化

- for(;;)

- {

- Point2f center(img.cols*0.5f, img.rows*0.5f); //center影象中心點

- float R = img.cols/3.f; //半徑

- double stateAngle = state.at<float>(0); //跟蹤點角度

- Point statePt = calcPoint(center, R, stateAngle); //跟蹤點座標statePt

- //2. 預測

- Mat prediction = KF.predict(); //計算預測值,返回x'

- double predictAngle = prediction.at<float>(0); //預測點的角度

- Point predictPt = calcPoint(center, R, predictAngle); //預測點座標predictPt

- //3.更新

- //measurement是測量值

- randn( measurement, Scalar::all(0), Scalar::all(KF.measurementNoiseCov.at<float>(0))); //給measurement賦值N(0,R)的隨機值

- // generate measurement

- measurement += KF.measurementMatrix*state; //z = z + H*x;

- double measAngle = measurement.at<float>(0);

- Point measPt = calcPoint(center, R, measAngle);

- // plot points

- //定義了畫十字的方法,值得學習下

- #define drawCross( center, color, d ) \

- line( img, Point( center.x - d, center.y - d ), \

- Point( center.x + d, center.y + d ), color, 1, CV_AA, 0); \

- line( img, Point( center.x + d, center.y - d ), \

- Point( center.x - d, center.y + d ), color, 1, CV_AA, 0 )

- img = Scalar::all(0);

- drawCross( statePt, Scalar(255,255,255), 3 );

- drawCross( measPt, Scalar(0,0,255), 3 );

- drawCross( predictPt, Scalar(0,255,0), 3 );

- line( img, statePt, measPt, Scalar(0,0,255), 3, CV_AA, 0 );

- line( img, statePt, predictPt, Scalar(0,255,255), 3, CV_AA, 0 );

- //呼叫kalman這個類的correct方法得到加入觀察值校正後的狀態變數值矩陣

- if(theRNG().uniform(0,4) != 0)

- KF.correct(measurement);

- //不加噪聲的話就是勻速圓周運動,加了點噪聲類似勻速圓周運動,因為噪聲的原因,運動方向可能會改變

- randn( processNoise, Scalar(0), Scalar::all(sqrt(KF.processNoiseCov.at<float>(0, 0)))); //vk

- state = KF.transitionMatrix*state + processNoise;

- imshow( "Kalman", img );

- code = (char)waitKey(100);

- if( code > 0 )

- break;

- }

- if( code == 27 || code == 'q' || code == 'Q' )

- break;

- }

- return 0;

- }

#include "opencv2/video/tracking.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <iostream>

#include <stdio.h>

using namespace std;

using namespace cv;

//計算相對視窗的座標值,因為座標原點在左上角,所以sin前有個負號

static inline Point calcPoint(Point2f center, double R, double angle)

{

return center + Point2f((float)cos(angle), (float)-sin(angle))*(float)R;

}

static void help()

{

printf( "\nExamle of c calls to OpenCV's Kalman filter.\n"

" Tracking of rotating point.\n"

" Rotation speed is constant.\n"

" Both state and measurements vectors are 1D (a point angle),\n"

" Measurement is the real point angle + gaussian noise.\n"

" The real and the estimated points are connected with yellow line segment,\n"

" the real and the measured points are connected with red line segment.\n"

" (if Kalman filter works correctly,\n"

" the yellow segment should be shorter than the red one).\n"

"\n"

" Pressing any key (except ESC) will reset the tracking with a different speed.\n"

" Pressing ESC will stop the program.\n"

);

}

int main(int, char**)

{

help();

Mat img(500, 500, CV_8UC3);

KalmanFilter KF(2, 1, 0); //建立卡爾曼濾波器物件KF

Mat state(2, 1, CV_32F); //state(角度,△角度)

Mat processNoise(2, 1, CV_32F);

Mat measurement = Mat::zeros(1, 1, CV_32F); //定義測量值

char code = (char)-1;

for(;;)

{

//1.初始化

randn( state, Scalar::all(0), Scalar::all(0.1) ); //

KF.transitionMatrix = *(Mat_<float>(2, 2) << 1, 1, 0, 1); //轉移矩陣A[1,1;0,1]

//將下面幾個矩陣設定為對角陣

setIdentity(KF.measurementMatrix); //測量矩陣H

setIdentity(KF.processNoiseCov, Scalar::all(1e-5)); //系統噪聲方差矩陣Q

setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1)); //測量噪聲方差矩陣R

setIdentity(KF.errorCovPost, Scalar::all(1)); //後驗錯誤估計協方差矩陣P

randn(KF.statePost, Scalar::all(0), Scalar::all(0.1)); //x(0)初始化

for(;;)

{

Point2f center(img.cols*0.5f, img.rows*0.5f); //center影象中心點

float R = img.cols/3.f; //半徑

double stateAngle = state.at<float>(0); //跟蹤點角度

Point statePt = calcPoint(center, R, stateAngle); //跟蹤點座標statePt

//2. 預測

Mat prediction = KF.predict(); //計算預測值,返回x'

double predictAngle = prediction.at<float>(0); //預測點的角度

Point predictPt = calcPoint(center, R, predictAngle); //預測點座標predictPt

//3.更新

//measurement是測量值

randn( measurement, Scalar::all(0), Scalar::all(KF.measurementNoiseCov.at<float>(0))); //給measurement賦值N(0,R)的隨機值

// generate measurement

measurement += KF.measurementMatrix*state; //z = z + H*x;

double measAngle = measurement.at<float>(0);

Point measPt = calcPoint(center, R, measAngle);

// plot points

//定義了畫十字的方法,值得學習下

#define drawCross( center, color, d ) \

line( img, Point( center.x - d, center.y - d ), \

Point( center.x + d, center.y + d ), color, 1, CV_AA, 0); \

line( img, Point( center.x + d, center.y - d ), \

Point( center.x - d, center.y + d ), color, 1, CV_AA, 0 )

img = Scalar::all(0);

drawCross( statePt, Scalar(255,255,255), 3 );

drawCross( measPt, Scalar(0,0,255), 3 );

drawCross( predictPt, Scalar(0,255,0), 3 );

line( img, statePt, measPt, Scalar(0,0,255), 3, CV_AA, 0 );

line( img, statePt, predictPt, Scalar(0,255,255), 3, CV_AA, 0 );

//呼叫kalman這個類的correct方法得到加入觀察值校正後的狀態變數值矩陣

if(theRNG().uniform(0,4) != 0)

KF.correct(measurement);

//不加噪聲的話就是勻速圓周運動,加了點噪聲類似勻速圓周運動,因為噪聲的原因,運動方向可能會改變

randn( processNoise, Scalar(0), Scalar::all(sqrt(KF.processNoiseCov.at<float>(0, 0)))); //vk

state = KF.transitionMatrix*state + processNoise;

imshow( "Kalman", img );

code = (char)waitKey(100);

if( code > 0 )

break;

}

if( code == 27 || code == 'q' || code == 'Q' )

break;

}

return 0;

}

例2 跟蹤滑鼠位置

[cpp] view plain copy print?- #include "opencv2/video/tracking.hpp"

- #include "opencv2/highgui/highgui.hpp"

- #include <stdio.h>

- usingnamespace cv;

- usingnamespace std;

- constint winHeight=600;

- constint winWidth=800;

- Point mousePosition= Point(winWidth>>1,winHeight>>1);

- //mouse event callback

- void mouseEvent(int event, int x, int y, int flags, void *param )

- {

- if (event==CV_EVENT_MOUSEMOVE) {

- mousePosition = Point(x,y);

- }

- }

- int main (void)

- {

- RNG rng;

- //1.kalman filter setup

- constint stateNum=4; //狀態值4×1向量(x,y,△x,△y)

- constint measureNum=2; //測量值2×1向量(x,y)

- KalmanFilter KF(stateNum, measureNum, 0);

- KF.transitionMatrix = *(Mat_<float>(4, 4) <<1,0,1,0,0,1,0,1,0,0,1,0,0,0,0,1); //轉移矩陣A

- setIdentity(KF.measurementMatrix); //測量矩陣H

- setIdentity(KF.processNoiseCov, Scalar::all(1e-5)); //系統噪聲方差矩陣Q

- setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1)); //測量噪聲方差矩陣R

- setIdentity(KF.errorCovPost, Scalar::all(1)); //後驗錯誤估計協方差矩陣P

- rng.fill(KF.statePost,RNG::UNIFORM,0,winHeight>winWidth?winWidth:winHeight); //初始狀態值x(0)

- Mat measurement = Mat::zeros(measureNum, 1, CV_32F); //初始測量值x'(0),因為後面要更新這個值,所以必須先定義

- namedWindow("kalman");

- setMouseCallback("kalman",mouseEvent);

- Mat image(winHeight,winWidth,CV_8UC3,Scalar(0));

- while (1)

- {

- //2.kalman prediction

- Mat prediction = KF.predict();

- Point predict_pt = Point(prediction.at<float>(0),prediction.at<float>(1) ); //預測值(x',y')

- //3.update measurement

- measurement.at<float>(0) = (float)mousePosition.x;

- measurement.at<float>(1) = (float)mousePosition.y;

- //4.update

- KF.correct(measurement);

- //draw

- image.setTo(Scalar(255,255,255,0));

- circle(image,predict_pt,5,Scalar(0,255,0),3); //predicted point with green

- circle(image,mousePosition,5,Scalar(255,0,0),3); //current position with red

- char buf[256];

- sprintf_s(buf,256,"predicted position:(%3d,%3d)",predict_pt.x,predict_pt.y);

- putText(image,buf,Point(10,30),CV_FONT_HERSHEY_SCRIPT_COMPLEX,1,Scalar(0,0,0),1,8);

- sprintf_s(buf,256,"current position :(%3d,%3d)",mousePosition.x,mousePosition.y);

- putText(image,buf,cvPoint(10,60),CV_FONT_HERSHEY_SCRIPT_COMPLEX,1,Scalar(0,0,0),1,8);

- imshow("kalman", image);

- int key=waitKey(3);

- if (key==27){//esc

- break;

- }

- }

- }

#include "opencv2/video/tracking.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <stdio.h>

using namespace cv;

using namespace std;

const int winHeight=600;

const int winWidth=800;

Point mousePosition= Point(winWidth>>1,winHeight>>1);

//mouse event callback

void mouseEvent(int event, int x, int y, int flags, void *param )

{

if (event==CV_EVENT_MOUSEMOVE) {

mousePosition = Point(x,y);

}

}

int main (void)

{

RNG rng;

//1.kalman filter setup

const int stateNum=4; //狀態值4×1向量(x,y,△x,△y)

const int measureNum=2; //測量值2×1向量(x,y)

KalmanFilter KF(stateNum, measureNum, 0);

KF.transitionMatrix = *(Mat_<float>(4, 4) <<1,0,1,0,0,1,0,1,0,0,1,0,0,0,0,1); //轉移矩陣A

setIdentity(KF.measurementMatrix); //測量矩陣H

setIdentity(KF.processNoiseCov, Scalar::all(1e-5)); //系統噪聲方差矩陣Q

setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1)); //測量噪聲方差矩陣R

setIdentity(KF.errorCovPost, Scalar::all(1)); //後驗錯誤估計協方差矩陣P

rng.fill(KF.statePost,RNG::UNIFORM,0,winHeight>winWidth?winWidth:winHeight); //初始狀態值x(0)

Mat measurement = Mat::zeros(measureNum, 1, CV_32F); //初始測量值x'(0),因為後面要更新這個值,所以必須先定義

namedWindow("kalman");

setMouseCallback("kalman",mouseEvent);

Mat image(winHeight,winWidth,CV_8UC3,Scalar(0));

while (1)

{

//2.kalman prediction

Mat prediction = KF.predict();

Point predict_pt = Point(prediction.at<float>(0),prediction.at<float>(1) ); //預測值(x',y')

//3.update measurement

measurement.at<float>(0) = (float)mousePosition.x;

measurement.at<float>(1) = (float)mousePosition.y;

//4.update

KF.correct(measurement);

//draw

image.setTo(Scalar(255,255,255,0));

circle(image,predict_pt,5,Scalar(0,255,0),3); //predicted point with green

circle(image,mousePosition,5,Scalar(255,0,0),3); //current position with red

char buf[256];

sprintf_s(buf,256,"predicted position:(%3d,%3d)",predict_pt.x,predict_pt.y);

putText(image,buf,Point(10,30),CV_FONT_HERSHEY_SCRIPT_COMPLEX,1,Scalar(0,0,0),1,8);

sprintf_s(buf,256,"current position :(%3d,%3d)",mousePosition.x,mousePosition.y);

putText(image,buf,cvPoint(10,60),CV_FONT_HERSHEY_SCRIPT_COMPLEX,1,Scalar(0,0,0),1,8);

imshow("kalman", image);

int key=waitKey(3);

if (key==27){//esc

break;

}

}

}結果

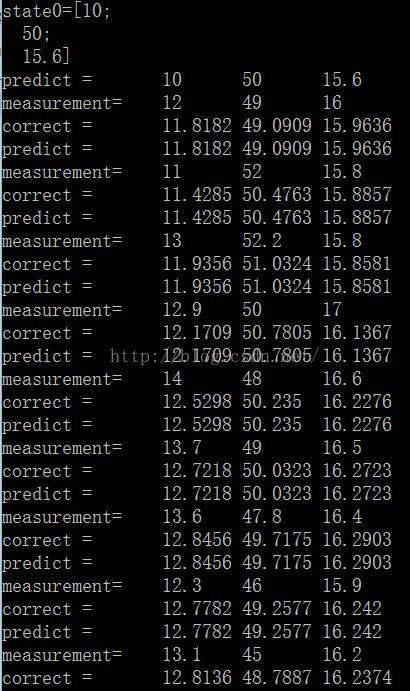

例3

[cpp] view plain copy print?- #include "opencv2/video/tracking.hpp"

- #include <opencv2/legacy/legacy.hpp> //#include "cvAux.h"

- #include <opencv2/highgui/highgui.hpp>

- #include <opencv2/core/core.hpp>

- #include <stdio.h>

- usingnamespace cv;

- usingnamespace std;

- int main( )

- {

- float A[10][3] =

- {

- 10, 50, 15.6,

- 12, 49, 16,

- 11, 52, 15.8,

- 13, 52.2, 15.8,

- 12.9, 50, 17,

- 14, 48, 16.6,

- 13.7, 49, 16.5,

- 13.6, 47.8, 16.4,

- 12.3, 46, 15.9,

- 13.1, 45, 16.2

- };

- constint stateNum=3;

- constint measureNum=3;

- KalmanFilter KF(stateNum, measureNum, 0);

- KF.transitionMatrix = *(Mat_<float>(3, 3) <<1,0,0,0,1,0,0,0,1); //轉移矩陣A

- setIdentity(KF.measurementMatrix); //測量矩陣H

- setIdentity(KF.processNoiseCov, Scalar::all(1e-5)); //系統噪聲方差矩陣Q

- setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1)); //測量噪聲方差矩陣R

- setIdentity(KF.errorCovPost, Scalar::all(1));

- Mat measurement = Mat::zeros(measureNum, 1, CV_32F);

- //初始狀態值

- KF.statePost = *(Mat_<float>(3, 1) <<A[0][0],A[0][1],A[0][2]);

- cout<<"state0="<<KF.statePost<<endl;

- for(int i=1;i<=9;i++)

- {

- //預測

- Mat prediction = KF.predict();

- //計算測量值

- measurement.at<float>(0) = (float)A[i][0];

- measurement.at<float>(1) = (float)A[i][1];

- measurement.at<float>(2) = (float)A[i][2];

- //更新

- KF.correct(measurement);

- //輸出結果

- cout<<"predict ="<<"\t"<<prediction.at<float>(0)<<"\t"<<prediction.at<float>(1)<<"\t"<<prediction.at<float>(2)<<endl;

- cout<<"measurement="<<"\t"<<measurement.at<float>(0)<<"\t"<<measurement.at<float>(1)<<"\t"<<measurement.at<float>(2)<<endl;

- cout<<"correct ="<<"\t"<<KF.statePost.at<float>(0)<<"\t"<<KF.statePost.at<float>(1)<<"\t"<<KF.statePost.at<float>(2)<<endl;

- }

- system("pause");

- }

#include "opencv2/video/tracking.hpp"

#include <opencv2/legacy/legacy.hpp> //#include "cvAux.h"

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/core/core.hpp>

#include <stdio.h>

using namespace cv;

using namespace std;

int main( )

{

float A[10][3] =

{

10, 50, 15.6,

12, 49, 16,

11, 52, 15.8,

13, 52.2, 15.8,

12.9, 50, 17,

14, 48, 16.6,

13.7, 49, 16.5,

13.6, 47.8, 16.4,

12.3, 46, 15.9,

13.1, 45, 16.2

};

const int stateNum=3;

const int measureNum=3;

KalmanFilter KF(stateNum, measureNum, 0);

KF.transitionMatrix = *(Mat_<float>(3, 3) <<1,0,0,0,1,0,0,0,1); //轉移矩陣A

setIdentity(KF.measurementMatrix); //測量矩陣H

setIdentity(KF.processNoiseCov, Scalar::all(1e-5)); //系統噪聲方差矩陣Q

setIdentity(KF.measurementNoiseCov, Scalar::all(1e-1)); //測量噪聲方差矩陣R

setIdentity(KF.errorCovPost, Scalar::all(1));

Mat measurement = Mat::zeros(measureNum, 1, CV_32F);

//初始狀態值

KF.statePost = *(Mat_<float>(3, 1) <<A[0][0],A[0][1],A[0][2]);

cout<<"state0="<<KF.statePost<<endl;

for(int i=1;i<=9;i++)

{

//預測

Mat prediction = KF.predict();

//計算測量值

measurement.at<float>(0) = (float)A[i][0];

measurement.at<float>(1) = (float)A[i][1];

measurement.at<float>(2) = (float)A[i][2];

//更新

KF.correct(measurement);

//輸出結果

cout<<"predict ="<<"\t"<<prediction.at<float>(0)<<"\t"<<prediction.at<float>(1)<<"\t"<<prediction.at<float>(2)<<endl;

cout<<"measurement="<<"\t"<<measurement.at<float>(0)<<"\t"<<measurement.at<float>(1)<<"\t"<<measurement.at<float>(2)<<endl;

cout<<"correct ="<<"\t"<<KF.statePost.at<float>(0)<<"\t"<<KF.statePost.at<float>(1)<<"\t"<<KF.statePost.at<float>(2)<<endl;

}

system("pause");

}

這裡預測值和上一個狀態值一樣,原因是轉移矩陣A是單位陣,如果改成非單位陣,結果就不一樣了。