Analyzing a severe performance drop in a Go HTTP framework

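I have recently been developing a web framework. While using it, the business team told us that QPS would not go up under load testing. I was puzzled: httprouter on top of `net/http`'s `http.Server` should not perform this badly. Benchmarks published online all report more than 100,000 QPS, so could a handful of middleware really drag performance down that far? After some digging, I found a few interesting things.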

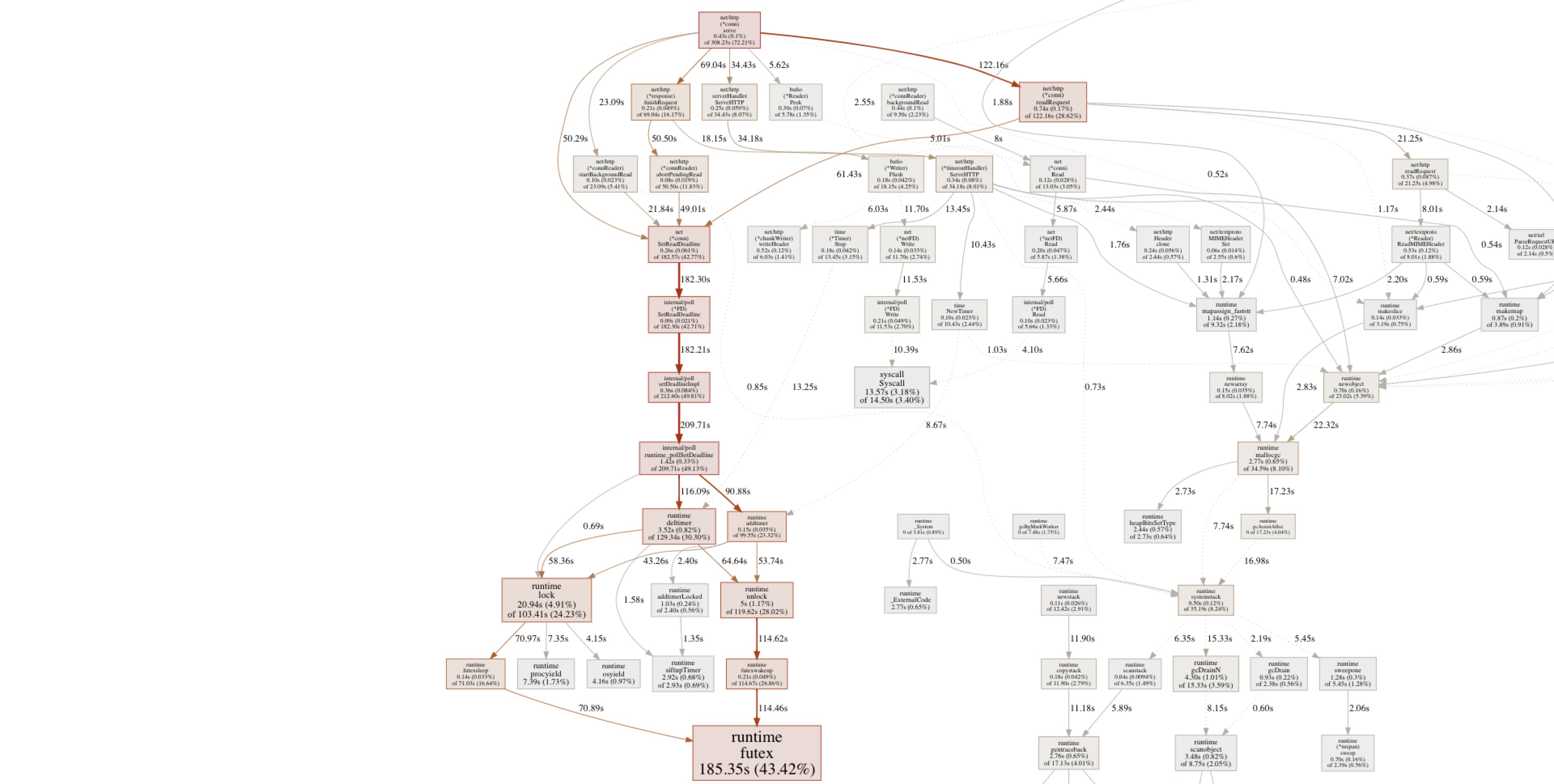

First, I load-tested a plain hello world, without writing any log line per request, and then turned on pprof. It showed the following:

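Why is futex so high here? Seeing operations such as `addtimer` and `deltimer` in the profile reminded me of a timer I had once implemented myself, so I guessed that timeouts were the cause. I checked the version: Go 1.9. By default the framework set four timeouts on every connection (`ReadTimeout`, `WriteTimeout`, `IdleTimeout` and `ReadHeaderTimeout`), which puts heavy pressure on the lock that is taken whenever a timer callback is added or removed.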
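For readers who want to collect a similar profile, the sketch below shows one common way to expose pprof in a Go service. It is only an assumption about the setup (the post does not say how pprof was enabled); `pprofIndexStatus` is a hypothetical helper, and the address in the comments is a placeholder.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	_ "net/http/pprof" // side effect: registers /debug/pprof/* on http.DefaultServeMux
)

// pprofIndexStatus issues an in-process GET against the pprof index page and
// returns the HTTP status, confirming that the handlers are registered.
func pprofIndexStatus() int {
	req := httptest.NewRequest(http.MethodGet, "/debug/pprof/", nil)
	rec := httptest.NewRecorder()
	http.DefaultServeMux.ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println("pprof index status:", pprofIndexStatus())
	// In a real service the default mux would be served on a private address, e.g.
	//   go http.ListenAndServe("127.0.0.1:6060", nil)
	// and a CPU profile collected during the load test with
	//   go tool pprof http://127.0.0.1:6060/debug/pprof/profile?seconds=30
}
```

Serving the profiling endpoints on a separate, local-only listener keeps them away from application traffic while the load test runs.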

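Let's look at what actually happens when timeouts are added in the usual way. Below is the simplest possible example; for safety, timeouts are set for every connection.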

    package main

    import (
    	"fmt"
    	"log"
    	"net/http"
    	"time"

    	"github.com/julienschmidt/httprouter"
    )

    func Index(w http.ResponseWriter, r *http.Request, _ httprouter.Params) {
    	fmt.Fprint(w, "Welcome!\n")
    }

    func Hello(w http.ResponseWriter, r *http.Request, ps httprouter.Params) {
    	fmt.Fprintf(w, "hello, %s!\n", ps.ByName("name"))
    }

    func main() {
    	router := httprouter.New()
    	router.GET("/", Index)
    	router.GET("/hello/:name", Hello)

    	srv := &http.Server{
    		ReadTimeout:       5 * time.Second,
    		WriteTimeout:      10 * time.Second,
    		ReadHeaderTimeout: 10 * time.Second,
    		IdleTimeout:       10 * time.Second,
    		Addr:              "0.0.0.0:8998",
    		Handler:           router,
    	}
    	log.Fatal(srv.ListenAndServe())
    }

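`ListenAndServe()` accepts connections in a loop and, for each accepted connection, calls `conn.serve()`. Depending on which timeouts are configured, this in turn calls functions such as `conn.SetReadDeadline`. The corresponding code is in net/http/server.go: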

    // Serve a new connection.
    func (c *conn) serve(ctx context.Context) {
    	...
    	if tlsConn, ok := c.rwc.(*tls.Conn); ok {
    		if d := c.server.ReadTimeout; d != 0 {
    			c.rwc.SetReadDeadline(time.Now().Add(d)) // set the read deadline
    		}
    		if d := c.server.WriteTimeout; d != 0 {
    			c.rwc.SetWriteDeadline(time.Now().Add(d)) // set the write deadline
    		}
    		if err := tlsConn.Handshake(); err != nil {
    			c.server.logf("http: TLS handshake error from %s: %v", c.rwc.RemoteAddr(), err)
    			return
    		}
    		c.tlsState = new(tls.ConnectionState)
    		*c.tlsState = tlsConn.ConnectionState()
    		if proto := c.tlsState.NegotiatedProtocol; validNPN(proto) {
    			if fn := c.server.TLSNextProto[proto]; fn != nil {
    				h := initNPNRequest{tlsConn, serverHandler{c.server}}
    				fn(c.server, tlsConn, h)
    			}
    			return
    		}
    	}
    	...

Next, `conn.SetReadDeadline` goes through `FD.SetReadDeadline` in internal/poll/fd_poll_runtime.go and finally reaches `poll_runtime_pollSetDeadline` in runtime/netpoll.go, which is linked in as `internal/poll.runtime_pollSetDeadline`. This function is the key one:

    //go:linkname poll_runtime_pollSetDeadline internal/poll.runtime_pollSetDeadline
    func poll_runtime_pollSetDeadline(pd *pollDesc, d int64, mode int) {
    	lock(&pd.lock)
    	if pd.closing {
    		unlock(&pd.lock)
    		return
    	}
    	pd.seq++ // invalidate current timers
    	// Reset current timers.
    	if pd.rt.f != nil {
    		deltimer(&pd.rt)
    		pd.rt.f = nil
    	}
    	if pd.wt.f != nil {
    		deltimer(&pd.wt)
    		pd.wt.f = nil
    	}
    	// Setup new timers.
    	if d != 0 && d <= nanotime() {
    		d = -1
    	}
    	if mode == 'r' || mode == 'r'+'w' {
    		pd.rd = d
    	}
    	if mode == 'w' || mode == 'r'+'w' {
    		pd.wd = d
    	}
    	if pd.rd > 0 && pd.rd == pd.wd {
    		pd.rt.f = netpollDeadline
    		pd.rt.when = pd.rd
    		// Copy current seq into the timer arg.
    		// Timer func will check the seq against current descriptor seq,
    		// if they differ the descriptor was reused or timers were reset.
    		pd.rt.arg = pd
    		pd.rt.seq = pd.seq
    		addtimer(&pd.rt)
    	} else {
    		if pd.rd > 0 {
    			pd.rt.f = netpollReadDeadline // set the read-deadline callback
    			pd.rt.when = pd.rd
    			pd.rt.arg = pd
    			pd.rt.seq = pd.seq
    			addtimer(&pd.rt) // add to the runtime's timers
    		}
    		if pd.wd > 0 {
    			pd.wt.f = netpollWriteDeadline // set the write-deadline callback
    			pd.wt.when = pd.wd
    			pd.wt.arg = pd
    			pd.wt.seq = pd.seq
    			addtimer(&pd.wt) // add to the runtime's timers
    		}
    	}
    	// If we set the new deadline in the past, unblock currently pending IO if any.
    	var rg, wg *g
    	atomicstorep(unsafe.Pointer(&wg), nil) // full memory barrier between stores to rd/wd and load of rg/wg in netpollunblock
    	if pd.rd < 0 {
    		rg = netpollunblock(pd, 'r', false)
    	}
    	if pd.wd < 0 {
    		wg = netpollunblock(pd, 'w', false)
    	}
    	unlock(&pd.lock)
    	if rg != nil {
    		netpollgoready(rg, 3)
    	}
    	if wg != nil {
    		netpollgoready(wg, 3)
    	}
    }

The main work here is to remove the descriptor's existing timers and then add new ones, with the callback set to `netpollReadDeadline` or `netpollWriteDeadline` (or `netpollDeadline` when the read and write deadlines are equal). As the code shows, timers are added and removed with `addtimer(&pd.rt)` and `deltimer(&pd.rt)`.
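The deadline path above can be exercised directly from user code, without an HTTP server in the way. The following is a rough sketch, not a rigorous benchmark: `resetDeadlines` is a hypothetical helper, and the connection and iteration counts are arbitrary. On the Go versions discussed here, each `SetReadDeadline` call on a TCP connection ends up in `poll_runtime_pollSetDeadline`, so building and running the same program with different Go toolchains gives a feel for the cost of the timer operations.

```go
package main

import (
	"fmt"
	"net"
	"sync"
	"time"
)

// resetDeadlines opens `conns` loopback TCP connections and, from one goroutine
// per connection, calls SetReadDeadline n times. It returns the elapsed time.
func resetDeadlines(conns, n int) (time.Duration, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return 0, err
	}
	defer ln.Close()

	// Accept and hold the server side of every connection until the listener closes.
	go func() {
		var held []net.Conn
		defer func() {
			for _, c := range held {
				c.Close()
			}
		}()
		for {
			c, err := ln.Accept()
			if err != nil {
				return // listener closed
			}
			held = append(held, c)
		}
	}()

	clients := make([]net.Conn, 0, conns)
	defer func() {
		for _, c := range clients {
			c.Close()
		}
	}()
	for i := 0; i < conns; i++ {
		c, err := net.Dial("tcp", ln.Addr().String())
		if err != nil {
			return 0, err
		}
		clients = append(clients, c)
	}

	start := time.Now()
	var wg sync.WaitGroup
	for _, c := range clients {
		wg.Add(1)
		go func(c net.Conn) {
			defer wg.Done()
			for i := 0; i < n; i++ {
				// Replaces the connection's read timer: one delete plus one add.
				c.SetReadDeadline(time.Now().Add(5 * time.Second))
			}
		}(c)
	}
	wg.Wait()
	return time.Since(start), nil
}

func main() {
	const conns, n = 8, 100000
	d, err := resetDeadlines(conns, n)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%d SetReadDeadline calls took %v\n", conns*n, d)
}
```

Absolute numbers depend heavily on the machine and on GOMAXPROCS, so only the relative difference between toolchains on the same host is meaningful.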

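Now for the core question: why is it so slow once timeouts are added? Look at the implementation of `addtimer`. Timers are kept in a 4-ary min-heap, and every time a timeout is added, the lock on the single global `timers` structure has to be taken. When QPS is high and each request takes that lock several times, how could performance be good?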

    type timer struct {
    	i int // heap index

    	// Timer wakes up at when, and then at when+period, ... (period > 0 only)
    	// each time calling f(arg, now) in the timer goroutine, so f must be
    	// a well-behaved function and not block.
    	when   int64
    	period int64
    	f      func(interface{}, uintptr)
    	arg    interface{}
    	seq    uintptr
    }

    var timers struct {
    	lock         mutex
    	gp           *g
    	created      bool
    	sleeping     bool
    	rescheduling bool
    	sleepUntil   int64
    	waitnote     note
    	t            []*timer
    }

    // addtimer adds a timer.
    func addtimer(t *timer) {
    	lock(&timers.lock)
    	addtimerLocked(t)
    	unlock(&timers.lock)
    }

How can the lock contention be resolved? Lock striping is a common approach. Starting with Go 1.10, the single `timers` structure became an array of 64, so timers are spread across 64 locks and contention drops accordingly. The definition in runtime/time.go of Go 1.10 is shown below. Each P queues timers into its own bucket, and one bucket may be shared by several Ps when GOMAXPROCS exceeds 64:

    // Package time knows the layout of this structure.
    // If this struct changes, adjust ../time/sleep.go:/runtimeTimer.
    // For GOOS=nacl, package syscall knows the layout of this structure.
    // If this struct changes, adjust ../syscall/net_nacl.go:/runtimeTimer.
    type timer struct {
    	tb *timersBucket // the bucket the timer lives in
    	i  int           // heap index

    	// Timer wakes up at when, and then at when+period, ... (period > 0 only)
    	// each time calling f(arg, now) in the timer goroutine, so f must be
    	// a well-behaved function and not block.
    	when   int64
    	period int64
    	f      func(interface{}, uintptr)
    	arg    interface{}
    	seq    uintptr
    }

    // timersLen is the length of timers array.
    //
    // Ideally, this would be set to GOMAXPROCS, but that would require
    // dynamic reallocation
    //
    // The current value is a compromise between memory usage and performance
    // that should cover the majority of GOMAXPROCS values used in the wild.
    const timersLen = 64 // 64 buckets

    // timers contains "per-P" timer heaps.
    //
    // Timers are queued into timersBucket associated with the current P,
    // so each P may work with its own timers independently of other P instances.
    //
    // Each timersBucket may be associated with multiple P
    // if GOMAXPROCS > timersLen.
    var timers [timersLen]struct {
    	timersBucket

    	// The padding should eliminate false sharing
    	// between timersBucket values.
    	pad [sys.CacheLineSize - unsafe.Sizeof(timersBucket{})%sys.CacheLineSize]byte
    }

Below is the timer data structure after Go 1.10 (image taken from the web):

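To sum up: many HTTP server frameworks show very high QPS in benchmarks published online, but their demos do not set any timeouts, so real-world numbers can be much lower. If you need timeouts in production, pay attention to the Go version; under very high QPS it is best to use Go 1.10 or later. In the end, without any other change, simply upgrading Go to 1.10 improved our QPS by nearly 2x.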
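The lock-striping idea behind the Go 1.10 change can also be sketched in ordinary user-level Go. This is only an illustration under simplifying assumptions, not the runtime's implementation: `shardedTimers`, `bucket`, `add` and `size` are hypothetical names, each bucket holds a plain slice instead of a heap, and the bucket is picked from a caller-supplied id rather than from the current P.

```go
package main

import (
	"fmt"
	"sync"
)

const numBuckets = 64 // mirrors timersLen in the Go 1.10 runtime

// bucket is one independently locked shard; a real timer bucket would hold a heap.
type bucket struct {
	mu    sync.Mutex
	whens []int64
}

// shardedTimers spreads entries over numBuckets locks instead of one global lock.
type shardedTimers struct {
	buckets [numBuckets]bucket
}

// add stores a deadline in the bucket chosen by id, locking only that bucket.
// The runtime picks the bucket from the current P; id%numBuckets is a stand-in.
func (s *shardedTimers) add(id int, when int64) {
	b := &s.buckets[id%numBuckets]
	b.mu.Lock()
	b.whens = append(b.whens, when)
	b.mu.Unlock()
}

// size returns the total number of stored deadlines across all buckets.
func (s *shardedTimers) size() int {
	total := 0
	for i := range s.buckets {
		b := &s.buckets[i]
		b.mu.Lock()
		total += len(b.whens)
		b.mu.Unlock()
	}
	return total
}

func main() {
	var timers shardedTimers
	var wg sync.WaitGroup
	for g := 0; g < 128; g++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			for i := 0; i < 1000; i++ {
				timers.add(id, int64(i))
			}
		}(g)
	}
	wg.Wait()
	fmt.Println(timers.size()) // prints 128000
}
```

Goroutines whose ids map to different buckets never touch the same mutex, which is the effect the 64 timer buckets have on concurrent `addtimer` and `deltimer` calls.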