Installing ES for Second-Level Queries over PB-Scale Data

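What is ES? Elasticsearch is a search server built on Lucene. It provides a distributed, multi-tenant full-text search engine with a RESTful web interface. Elasticsearch is written in Java and released as open source under the Apache License, and it is a popular enterprise search engine. It is widely used in cloud environments, where it offers near-real-time search and is stable, reliable, fast, and easy to install and use.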

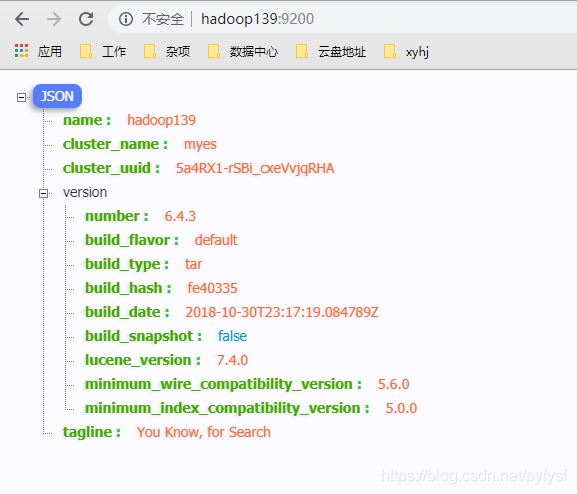

This tutorial uses version 6.4.3 of the software.

Download

Elasticsearch:

https://www.elastic.co/cn/downloads/elasticsearch

Kibana:

https://www.elastic.co/cn/downloads/kibana

Upload to the Linux server

(Omitted.)

Extract and edit the configuration file

# Note: ES must be run as a regular user; it cannot be run as root.

# As root, add a user.

# Command to add the user:

[root@hadoop137 ~]# useradd shaofei

[root@hadoop137 ~]# passwd shaofei

Changing password for user shaofei.

New password:

BAD PASSWORD: it is too simplistic/systematic

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

# Switch to the shaofei user:

[root@hadoop137 ~]# su shaofei

[shaofei@hadoop137 root]$ cd

[shaofei@hadoop137 ~]$

# Extract elasticsearch

[shaofei@hadoop137 softwear]$ tar -zxvf elasticsearch-6.4.3.tar.gz -C ../module/

[shaofei@hadoop137 elasticsearch-6.4.3]$ pwd

/opt/module/elasticsearch-6.4.3

# Edit the configuration file

[shaofei@hadoop137 elasticsearch-6.4.3]$ vim config/elasticsearch.yml

# Note: when editing the yml file, put a space after the colon that follows each setting name
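Because ES will not run as root, a launch script can check the user before starting it. The following is a minimal sketch only; `require_non_root` is a hypothetical helper name, not something shipped with Elasticsearch.

```shell
#!/bin/sh
# Hypothetical helper (not part of Elasticsearch): succeed only for a non-root UID.
# $1 = UID to check; defaults to the UID of the current user.
require_non_root() {
  uid="${1:-$(id -u)}"
  if [ "$uid" -eq 0 ]; then
    echo "refusing to run Elasticsearch as root; switch to a regular user first" >&2
    return 1
  fi
  return 0
}

# Usage: launch only when the check passes.
# require_non_root && ./bin/elasticsearch
```

Run as shaofei the check passes silently; run as root it prints the message and the launch is skipped.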

elasticsearch.yml

# ======================== Elasticsearch Configuration =========================

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

# ---------------------------------- Cluster -----------------------------------

# Use a descriptive name for your cluster:

# Set the cluster name

cluster.name: myes

# ------------------------------------ Node ------------------------------------

# Use a descriptive name for the node:

# Set this node's name; change it on each host after distributing the directory in the next step

node.name: hadoop137

# Add custom attributes to the node:

#node.attr.rack: r1

# ----------------------------------- Paths ------------------------------------

# Path to directory where to store the data (separate multiple locations by comma):

#path.data: /path/to/data

# Path to log files:

#path.logs: /path/to/logs

# ----------------------------------- Memory -----------------------------------

# Lock the memory on startup:

#bootstrap.memory_lock: true

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

# Elasticsearch performs poorly when the system is swapping the memory.

# ---------------------------------- Network -----------------------------------

# Set the bind address to a specific IP (IPv4 or IPv6):

# Address of this node. The hosts file is configured here, so the host name is used; after distributing, change it to the address of each host

network.host: hadoop137

# Set a custom port for HTTP:

# Port on which ES serves HTTP requests

http.port: 9200

# For more information, consult the network module documentation.

# --------------------------------- Discovery ----------------------------------

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#discovery.zen.minimum_master_nodes:

# For more information, consult the zen discovery module documentation.

# ---------------------------------- Gateway -----------------------------------

# Block initial recovery after a full cluster restart until N nodes are started:

#gateway.recover_after_nodes: 3

# For more information, consult the gateway module documentation.

# ---------------------------------- Various -----------------------------------

# Require explicit names when deleting indices:

#action.destructive_requires_name: true

# Add the IP addresses of the cluster nodes

discovery.zen.ping.unicast.hosts: ["192.168.23.137", "192.168.23.138", "192.168.23.139"]
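The template's own comment gives the split-brain rule as (total number of master-eligible nodes / 2 + 1). As a quick check of that arithmetic for the three hosts listed in discovery.zen.ping.unicast.hosts, here is a small sketch; it assumes all three nodes are master-eligible, and `minimum_master_nodes` is just an illustrative function name.

```shell
# Quorum of master-eligible nodes: integer division by 2, plus 1.
# $1 = number of master-eligible nodes (assumed here to be every node in the cluster).
minimum_master_nodes() {
  echo $(( $1 / 2 + 1 ))
}

# The three hosts in the unicast list:
minimum_master_nodes 3   # prints 2
```

With that result the commented-out setting would read `discovery.zen.minimum_master_nodes: 2`. The configuration in this tutorial leaves it unset.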

Distribute to the other hosts in the cluster

[shaofei@hadoop137 module]$ rsync -rvl elasticsearch-6.4.3 shaofei@hadoop138:`pwd`

[shaofei@hadoop137 module]$ rsync -rvl elasticsearch-6.4.3 shaofei@hadoop139:`pwd`

Start ES on each node in the cluster
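The copies on hadoop138 and hadoop139 still carry hadoop137's node.name and network.host, which the comments in elasticsearch.yml say must be changed per host. One way to script that edit before the first start is sketched below; `set_node_identity` is a hypothetical helper, and it assumes the host name is wanted for both settings, as on hadoop137.

```shell
# Hypothetical helper: rewrite node.name and network.host in a copied elasticsearch.yml.
# $1 = path to elasticsearch.yml, $2 = host name (or IP) of the node that owns the copy.
# Only uncommented lines are touched; everything else is left as it is.
set_node_identity() {
  file="$1"
  host="$2"
  tmp="$file.tmp.$$"
  sed -e "s/^node\.name:.*/node.name: $host/" \
      -e "s/^network\.host:.*/network.host: $host/" \
      "$file" > "$tmp" && mv "$tmp" "$file"
}

# Usage, run on hadoop138 inside /opt/module/elasticsearch-6.4.3:
# set_node_identity config/elasticsearch.yml hadoop138
```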

[shaofei@hadoop137 elasticsearch-6.4.3]$ ./bin/elasticsearch

[shaofei@hadoop138 elasticsearch-6.4.3]$ ./bin/elasticsearch

[shaofei@hadoop139 elasticsearch-6.4.3]$ ./bin/elasticsearch

Problems encountered during startup:

ERROR: [4] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: max number of threads [1024] for user [shaofei] is too low, increase to at least [4096]

[3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
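Each of these messages compares a current limit with a required minimum, so the same comparison can be run by hand before and after applying the fixes. This is a sketch under that assumption; `check_min` is a hypothetical helper, and the thresholds in the usage lines are the ones quoted in the errors above.

```shell
# Hypothetical helper: compare a current value with the minimum from the error message.
# $1 = label, $2 = current value (a number or "unlimited"), $3 = required minimum.
check_min() {
  if [ "$2" = "unlimited" ] || [ "$2" -ge "$3" ]; then
    echo "OK      $1: $2 (needs >= $3)"
  else
    echo "TOO LOW $1: $2 (needs >= $3)"
    return 1
  fi
}

# Usage in bash, as the user that runs Elasticsearch:
# check_min "max file descriptors" "$(ulimit -n)" 65536
# check_min "max user processes"   "$(ulimit -u)" 4096
# check_min "vm.max_map_count"     "$(cat /proc/sys/vm/max_map_count)" 262144
```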

For problem [1]:

[root@hadoop139 shaofei]# vi /etc/security/limits.conf

Append at the end:

# End of file

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

For problem [2]:

[root@hadoop139 shaofei]# vim /etc/security/limits.d/90-nproc.conf

Change it to:

[root@hadoop139 shaofei]# cat /etc/security/limits.d/90-nproc.conf

# Default limit for number of user's processes to prevent

# accidental fork bombs.

# See rhbz #432903 for reasoning.

* soft nproc 10240

root soft nproc unlimited

# Log out and log back in as the user for the new limit to take effect

For problem [3]:

vi /etc/sysctl.conf

Add the following setting:

vm.max_map_count=655360

Then run:

sysctl -p
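When the same change has to be made on all three hosts, the manual edit can be scripted so that repeated runs do not leave duplicate entries in the file. The sketch below is one possible approach; `ensure_sysctl_line` is a hypothetical helper and must be run as root when pointed at /etc/sysctl.conf.

```shell
# Hypothetical helper: set key=value in a sysctl-style file, replacing any existing
# uncommented entry for the key so that repeated runs do not add duplicates.
# $1 = file, $2 = key, $3 = value.
ensure_sysctl_line() {
  file="$1"; key="$2"; value="$3"
  tmp="$file.tmp.$$"
  # Keep every line whose setting name (text before "=", spaces removed) differs from the key.
  awk -F= -v k="$key" '{ name = $1; gsub(/[ \t]/, "", name); if (name != k) print }' "$file" > "$tmp"
  echo "$key=$value" >> "$tmp"
  # Write back in place so the original file keeps its owner and permissions.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage as root, followed by the reload step:
# ensure_sysctl_line /etc/sysctl.conf vm.max_map_count 655360 && sysctl -p
```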

The startup log also showed this exception:

java.lang.UnsupportedOperationException: seccomp unavailable: CONFIG_SECCOMP not compiled into kernel, CONFIG_SECCOMP and CONFIG_SECCOMP_FILTER are needed

# Edit elasticsearch.yml and add the following:

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

Once these problems are fixed, restart ES.
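The seccomp exception names two kernel options. To confirm that the running kernel really lacks them before turning the system call filter off, the kernel's build configuration can be inspected. This is a sketch only: `kernel_has_seccomp` is a hypothetical helper, and the path in the usage line is an assumption about where the distribution stores the kernel config.

```shell
# Hypothetical helper: succeed only if a kernel config file enables both options
# named in the exception. $1 = path to the kernel config file.
kernel_has_seccomp() {
  grep -q '^CONFIG_SECCOMP=y' "$1" && grep -q '^CONFIG_SECCOMP_FILTER=y' "$1"
}

# Usage (the config path is an assumption; many distributions keep it under /boot):
# kernel_has_seccomp "/boot/config-$(uname -r)" && echo "seccomp available"
```

If the check fails, the two settings added to elasticsearch.yml are the workaround this tutorial uses.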

- Startup succeeded

Visit http://ip:9200, replacing ip with the node's address (for example http://hadoop137:9200).
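Besides opening the root URL in a browser, the cluster health endpoint (`_cluster/health`) reports whether the nodes have joined one cluster. A minimal sketch for pulling the status colour out of that response from the command line follows; `health_status` is a hypothetical helper, and the host name in the usage line is the one used in this tutorial.

```shell
# Hypothetical helper: extract the "status" field (green, yellow or red)
# from _cluster/health JSON read on standard input.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage against a running node:
# curl -s "http://hadoop137:9200/_cluster/health" | health_status
```

The same response also carries a `number_of_nodes` field, which should match the number of hosts that were started.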

Install Kibana

- Download the package

- Extract it

- Edit the configuration file

kibana.yml

# Kibana is served by a back end server. This setting specifies the port to use.

# Configure the port

server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.

# The default is 'localhost', which usually means remote machines will not be able to connect.

# To allow connections from remote users, set this parameter to a non-loopback address.

# Configure the host address

server.host: "hadoop137"

# Enables you to specify a path to mount Kibana at if you are running behind a proxy.

# Use the `server.rewriteBasePath` setting to tell Kibana if it should remove the basePath

# from requests it receives, and to prevent a deprecation warning at startup.

# This setting cannot end in a slash.

#server.basePath: ""

# Specifies whether Kibana should rewrite requests that are prefixed with

# `server.basePath` or require that they are rewritten by your reverse proxy.

# This setting was effectively always `false` before Kibana 6.3 and will

# default to `true` starting in Kibana 7.0.

#server.rewriteBasePath: false

# The maximum payload size in bytes for incoming server requests.

#server.maxPayloadBytes: 1048576

# The Kibana server's name. This is used for display purposes.

#server.name: "your-hostname"

# The URL of the Elasticsearch instance to use for all your queries.

# Configure the Elasticsearch URL

elasticsearch.url: "http://hadoop137:9200"

# When this setting's value is true Kibana uses the hostname specified in the server.host

# setting. When the value of this setting is false, Kibana uses the hostname of the host

# that connects to this Kibana instance.

#elasticsearch.preserveHost: true

# Kibana uses an index in Elasticsearch to store saved searches, visualizations and

# dashboards. Kibana creates a new index if the index doesn't already exist.

#kibana.index: ".kibana"

# The default application to load.

#kibana.defaultAppId: "home"

# If your Elasticsearch is protected with basic authentication, these settings provide

# the username and password that the Kibana server uses to perform maintenance on the Kibana

# index at startup. Your Kibana users still need to authenticate with Elasticsearch, which

# is proxied through the Kibana server.

#elasticsearch.username: "user"

#elasticsearch.password: "pass"

# Enables SSL and paths to the PEM-format SSL certificate and SSL key files, respectively.

# These settings enable SSL for outgoing requests from the Kibana server to the browser.

#server.ssl.enabled: false

#server.ssl.certificate: /path/to/your/server.crt

#server.ssl.key: /path/to/your/server.key

# Optional settings that provide the paths to the PEM-format SSL certificate and key files.

# These files validate that your Elasticsearch backend uses the same key files.

#elasticsearch.ssl.certificate: /path/to/your/client.crt

#elasticsearch.ssl.key: /path/to/your/client.key

# Optional setting that enables you to specify a path to the PEM file for the certificate

# authority for your Elasticsearch instance.

#elasticsearch.ssl.certificateAuthorities: [ "/path/to/your/CA.pem" ]

# To disregard the validity of SSL certificates, change this setting's value to 'none'.

#elasticsearch.ssl.verificationMode: full

# Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of

# the elasticsearch.requestTimeout setting.

#elasticsearch.pingTimeout: 1500

# Time in milliseconds to wait for responses from the back end or Elasticsearch. This value

# must be a positive integer.

#elasticsearch.requestTimeout: 30000

# List of Kibana client-side headers to send to Elasticsearch. To send *no* client-side

# headers, set this value to [] (an empty list).

#elasticsearch.requestHeadersWhitelist: [ authorization ]

# Header names and values that are sent to Elasticsearch. Any custom headers cannot be overwritten

# by client-side headers, regardless of the elasticsearch.requestHeadersWhitelist configuration.

#elasticsearch.customHeaders: {}

# Time in milliseconds for Elasticsearch to wait for responses from shards. Set to 0 to disable.

#elasticsearch.shardTimeout: 30000

# Time in milliseconds to wait for Elasticsearch at Kibana startup before retrying.

#elasticsearch.startupTimeout: 5000

# Logs queries sent to Elasticsearch. Requires logging.verbose set to true.

#elasticsearch.logQueries: false

# Specifies the path where Kibana creates the process ID file.

#pid.file: /var/run/kibana.pid

# Enables you specify a file where Kibana stores log output.

#logging.dest: stdout

# Set the value of this setting to true to suppress all logging output.

#logging.silent: false

# Set the value of this setting to true to suppress all logging output other than error messages.

#logging.quiet: false

# Set the value of this setting to true to log all events, including system usage information

# and all requests.

#logging.verbose: false

# Set the interval in milliseconds to sample system and process performance

# metrics. Minimum is 100ms. Defaults to 5000.

#ops.interval: 5000

# The default locale. This locale can be used in certain circumstances to substitute any missing

# translations.

#i18n.defaultLocale: "en"
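Almost everything in kibana.yml is commented out, so it helps to list only the lines that are actually in effect. A small sketch for doing that is shown here; `active_settings` is a hypothetical helper name.

```shell
# Hypothetical helper: print only the active settings of a YAML config file,
# skipping blank lines and lines whose first non-space character is "#".
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Usage from the Kibana installation directory:
# active_settings config/kibana.yml
```

For the file shown above this should leave three lines: server.port, server.host and elasticsearch.url.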

Open http://hadoop137:5601/ in a browser to verify that Kibana is running.
