Windows 10 + YOLOv3 Object Detection on Your Own Dataset (1): Building Your Own Dataset

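This article explains how to build your own dataset in three parts, followed by a list of references:

- Data annotation

- Data augmentation

- Converting the data to COCO JSON format

- References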

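1. Data Annotation

In deep-learning object detection, the model is first trained on a training set, and the quality of that dataset sets the upper bound on what the model can achieve. Two commonly used image annotation tools for object detection are introduced below: Labelme and LabelImg.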

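(1) Labelme

Labelme is suited to building datasets for both image segmentation and object detection. It comes from this project: https://github.com/wkentaro/labelme .

After installing it by following the project's instructions, the application interface looks like this:

It supports polygon, rectangle, circle, line, and point annotations, and saves the results as JSON files.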

(2) LabelImg

LabelImg is suited to building datasets for object detection. It comes from this project: https://github.com/tzutalin/labelImg

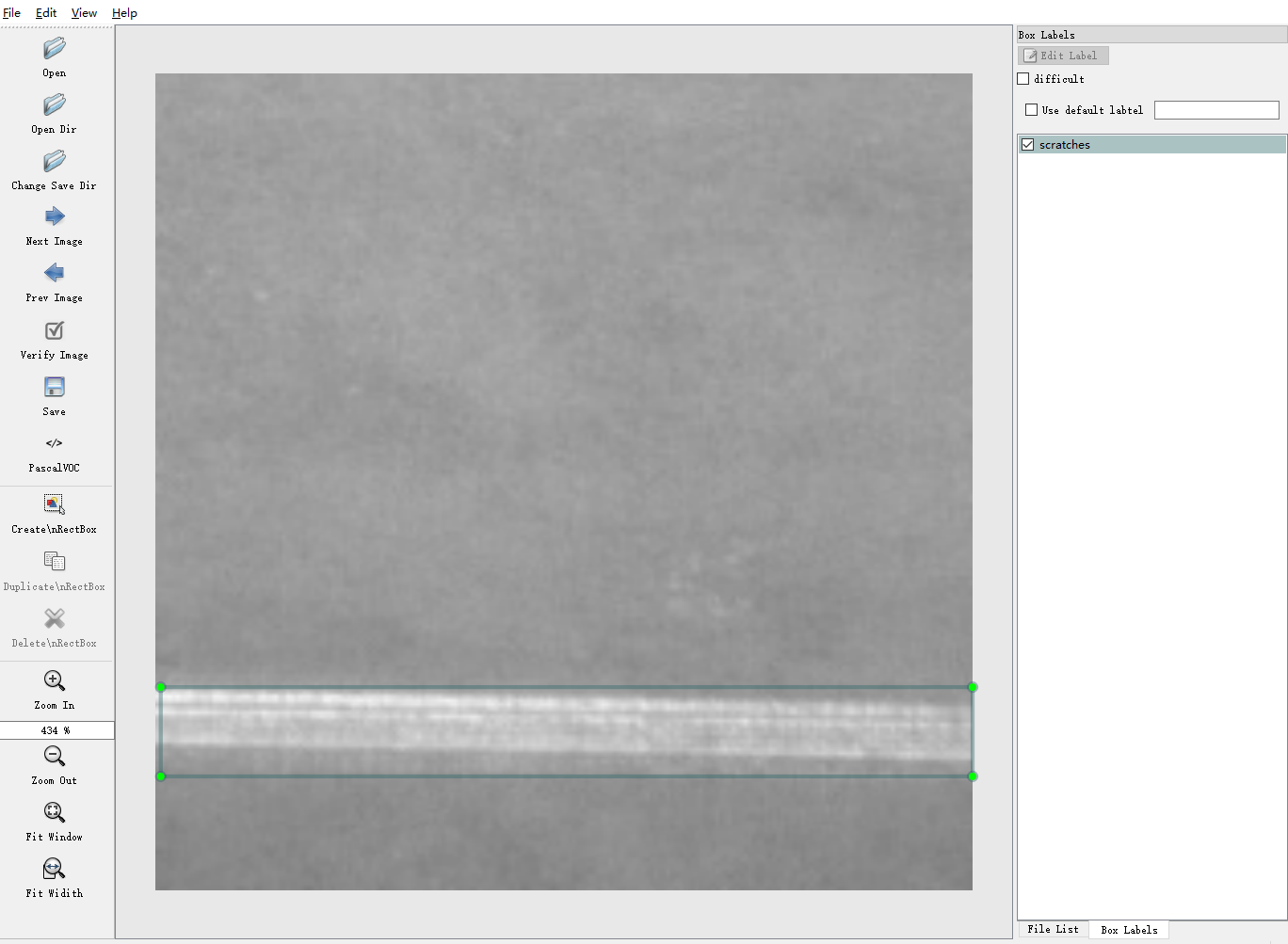

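The application interface is shown in the figure.

It supports rectangular annotations and saves the results in txt (YOLO) or xml (PascalVOC) format. To change the available label classes, edit the predefined_classes.txt file in the data folder under the project's root directory.

This is the tool I used. The annotations were saved in xml format, so the annotation format still has to be converted later.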
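The two output formats describe the same rectangle differently: PascalVOC xml stores pixel corners (xmin, ymin, xmax, ymax), while each line of a YOLO txt file stores a class index followed by the box center and size, normalized by the image width and height. A minimal sketch of that relationship follows; the function name `voc_to_yolo` and the sample numbers are made up for illustration and are not part of LabelImg.

```python
def voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert a PascalVOC pixel box to YOLO's normalized (cx, cy, w, h)."""
    cx = (xmin + xmax) / 2.0 / img_w   # box center x, as a fraction of image width
    cy = (ymin + ymax) / 2.0 / img_h   # box center y, as a fraction of image height
    w = (xmax - xmin) / img_w          # box width, as a fraction of image width
    h = (ymax - ymin) / img_h          # box height, as a fraction of image height
    return cx, cy, w, h


# Hypothetical box covering the central quarter of a 1920x1080 image
print(voc_to_yolo(480, 270, 1440, 810, 1920, 1080))  # (0.5, 0.5, 0.5, 0.5)
```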

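Keyboard shortcuts:

- Ctrl + u: load all images in a directory (same as clicking Open Dir)
- Ctrl + r: change the default annotation target directory (where the xml files are saved)
- Ctrl + s: save
- Ctrl + d: copy the current label and rectangle
- Space: mark the current image as verified
- w: create a rectangle
- d: next image
- a: previous image
- Del: delete the selected rectangle
- Ctrl++: zoom in
- Ctrl--: zoom out
- ↑→↓←: move the selected rectangle with the arrow keys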

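2. Data Augmentation

In some object detection scenarios the number of samples is small, which leads to poor detection results; in that case data augmentation is needed. Six common kinds of augmentation are cropping, translation, brightness change, noise injection, rotation, and mirroring.

To keep this article short, that part is covered separately. For details, see this blog post: https://www.cnblogs.com/lky-learning/p/11653861.html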
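For detection data, geometric augmentations have to transform the bounding boxes along with the pixels. The sketch below illustrates two of the six operations, mirroring and brightness change, using NumPy only. It is an assumption-laden illustration rather than the code from the linked post: the helper names `hflip` and `adjust_brightness` are hypothetical, and boxes are assumed to be [xmin, ymin, xmax, ymax] in pixels.

```python
import numpy as np


def hflip(image, boxes):
    """Mirror an H x W x C image left-right and remap [xmin, ymin, xmax, ymax] boxes."""
    width = image.shape[1]
    flipped = image[:, ::-1].copy()
    boxes = np.asarray(boxes, dtype=np.int64)
    new_boxes = boxes.copy()
    new_boxes[:, 0] = width - boxes[:, 2]  # new xmin comes from the old xmax
    new_boxes[:, 2] = width - boxes[:, 0]  # new xmax comes from the old xmin
    return flipped, new_boxes


def adjust_brightness(image, factor):
    """Scale pixel intensities by `factor`, clipping to the valid uint8 range."""
    return np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)


# Synthetic 100 x 200 image with one hypothetical box
img = np.zeros((100, 200, 3), dtype=np.uint8)
img[:, :50] = 100
flipped, boxes = hflip(img, [[10, 20, 60, 80]])
print(boxes.tolist())  # [[140, 20, 190, 80]]
```

Brightness and noise changes leave the boxes untouched, whereas cropping, translation, and rotation need their own box remapping in the same spirit as `hflip`.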

3. Converting the Data to COCO JSON Format

First, let's be clear about the formats involved (summarized from an external reference):

3.1 csv

Directory layout:

    csv/
        labels.csv
        images/
            image1.jpg
            image2.jpg
            ...

Each row of labels.csv has the form:

    /path/to/image,xmin,ymin,xmax,ymax,label

For example:

    /mfs/dataset/face/image1.jpg,450,154,754,341,face
    /mfs/dataset/face/image2.jpg,143,154,344,341,face
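Because an image can contain several objects, the same path may appear on several rows, so a consumer of this file usually groups rows by image. A small sketch of that step, using only the standard library and the two example rows from this section (the variable names are illustrative):

```python
import csv
import io
from collections import defaultdict

# The two example rows from this section, held in memory instead of a file
sample = io.StringIO(
    "/mfs/dataset/face/image1.jpg,450,154,754,341,face\n"
    "/mfs/dataset/face/image2.jpg,143,154,344,341,face\n"
)

# Map each image path to the list of boxes annotated on it
boxes_by_image = defaultdict(list)
for path, xmin, ymin, xmax, ymax, label in csv.reader(sample):
    boxes_by_image[path].append((int(xmin), int(ymin), int(xmax), int(ymax), label))

for path, boxes in boxes_by_image.items():
    print(path, boxes)
```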

3.2 voc

The standard VOC data layout is as follows:

    VOC2007/
        Annotations/
            0d4c5e4f-fc3c-4d5a-906c-105.xml
            0ddfc5aea-fcdac-421-92dad-144.xml
            ...
        ImageSets/
            Main/
                train.txt
                test.txt
                val.txt
                trainval.txt
        JPEGImages/
            0d4c5e4f-fc3c-4d5a-906c-105.jpg
            0ddfc5aea-fcdac-421-92dad-144.jpg
            ...

3.3 COCO

    coco/
        annotations/
            instances_train2017.json
            instances_val2017.json
        images/
            train2017/
                0d4c5e4f-fc3c-4d5a-906c-105.jpg
                ...
            val2017/
                0ddfc5aea-fcdac-421-92dad-144.jpg
                ...
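Each instances_*.json file in this layout holds three main lists for detection: `images`, `annotations`, and `categories`, linked by ids. The sketch below builds a minimal example as a Python dict. All values are illustrative; only the key names and the [x, y, width, height] bbox convention follow the COCO detection format.

```python
import json

# Minimal COCO-style detection annotation; every value here is illustrative
coco = {
    "images": [
        {"id": 0, "file_name": "0d4c5e4f-fc3c-4d5a-906c-105.jpg",
         "width": 1920, "height": 1080}
    ],
    "annotations": [
        {
            "id": 0,
            "image_id": 0,                 # refers to images[].id
            "category_id": 1,              # refers to categories[].id
            "bbox": [131, 269, 257, 188],  # [x, y, width, height] in pixels
            "area": 257 * 188,
            "iscrowd": 0,
        }
    ],
    "categories": [{"id": 1, "name": "helmet"}],
}

print(json.dumps(coco, indent=2))
```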

JSON file format of a Labelme annotation (the imageData field is too long to show, so it is left empty here):

    {
      "version": "3.6.16",
      "flags": {},
      "shapes": [
        {
          "label": "helmet",
          "line_color": null,
          "fill_color": null,
          "points": [[131, 269], [388, 457]],
          "shape_type": "rectangle"
        }
      ],
      "lineColor": [0, 255, 0, 128],
      "fillColor": [255, 0, 0, 128],
      "imagePath": "004ffe6f-c3e2-3602-84a1-ecd5f437b113.jpg",
      "imageData": "",
      "imageHeight": 1080,
      "imageWidth": 1920
    }
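In the JSON above, a rectangle is stored as two corner points under `shapes[].points`. As a rough sketch, such a shape can be turned into the (xmin, ymin, xmax, ymax, label) tuple used by the csv format like this; the helper name `rectangle_to_box` is hypothetical, and the sample values are copied from the JSON above.

```python
def rectangle_to_box(shape):
    """Turn a rectangle shape with two corner points into (xmin, ymin, xmax, ymax, label)."""
    (x1, y1), (x2, y2) = shape["points"]
    # The two corners may have been drawn in any order, so sort them explicitly
    return min(x1, x2), min(y1, y2), max(x1, x2), max(y1, y2), shape["label"]


shape = {
    "label": "helmet",
    "points": [[131, 269], [388, 457]],
    "shape_type": "rectangle",
}
print(rectangle_to_box(shape))  # (131, 269, 388, 457, 'helmet')
```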

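As mentioned in Section 1, the annotations were saved in xml format, so the first step is to consolidate those xml annotation files into a single csv file.

The consolidation code is as follows: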

    import glob
    import os
    import xml.etree.ElementTree as ET

    import pandas as pd

    # Directory containing the xml files (the csv is written here as well)
    os.chdir('./data/annotations/scratches')
    path = 'C:/Users/Admin/Desktop/data/annotations/scratches'  # absolute path
    img_path = 'C:/Users/Admin/Desktop/data/images'


    def xml_to_csv(path):
        xml_list = []
        # glob.glob returns a list of all matching file paths
        for xml_file in glob.glob(path + '/*.xml'):
            tree = ET.parse(xml_file)
            root = tree.getroot()
            for member in root.findall('object'):
                # Look up tags by name instead of by position (member[4][0], ...)
                bndbox = member.find('bndbox')
                value = (img_path + '/' + root.find('filename').text,
                         int(bndbox.find('xmin').text),
                         int(bndbox.find('ymin').text),
                         int(bndbox.find('xmax').text),
                         int(bndbox.find('ymax').text),
                         member.find('name').text)
                xml_list.append(value)
        # To also export the image size, read root.find('size') and add
        # 'width' and 'height' columns here
        column_name = ['filename', 'xmin', 'ymin', 'xmax', 'ymax', 'class']
        return pd.DataFrame(xml_list, columns=column_name)


    if __name__ == '__main__':
        xml_df = xml_to_csv(path)
        # Change the output file name as needed. No header row is written,
        # matching the csv format in 3.1 and the header=None read later on.
        xml_df.to_csv('scratches.csv', index=False, header=False)
        print('Successfully converted xml to csv.')

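Once "Successfully converted xml to csv." is printed, the consolidated annotation file is ready.

For some models, the image data plus a csv annotation file is enough to start training. The YOLOv3 workflow in this series, however, expects annotations in COCO JSON format, so the csv still has to be converted to JSON.

Conversion code: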

    import json
    import os
    import shutil

    import cv2
    import numpy as np
    import pandas as pd
    from sklearn.model_selection import train_test_split

    np.random.seed(41)

    # Category ids start at 1; 0 is the background
    classname_to_id = {"scratches": 1, "inclusion": 2}


    class Csv2CoCo:

        def __init__(self, image_dir, total_annos):
            self.images = []
            self.annotations = []
            self.categories = []
            self.img_id = 0
            self.ann_id = 0
            self.image_dir = image_dir
            self.total_annos = total_annos

        def save_coco_json(self, instance, save_path):
            # indent=2 makes the output easier to read
            with open(save_path, 'w', encoding='utf-8') as f:
                json.dump(instance, f, ensure_ascii=False, indent=2)

        # Build the COCO structure from the csv annotations
        def to_coco(self, keys):
            self._init_categories()
            for key in keys:
                self.images.append(self._image(key))
                shapes = self.total_annos[key]
                for shape in shapes:
                    bboxi = [int(cor) for cor in shape[:-1]]
                    label = shape[-1]
                    annotation = self._annotation(bboxi, label)
                    self.annotations.append(annotation)
                    self.ann_id += 1
                self.img_id += 1
            instance = {}
            instance['info'] = 'spytensor created'
            instance['license'] = ['license']
            instance['images'] = self.images
            instance['annotations'] = self.annotations
            instance['categories'] = self.categories
            return instance

        # Build the categories
        def _init_categories(self):
            for k, v in classname_to_id.items():
                category = {}
                category['id'] = v
                category['name'] = k
                self.categories.append(category)

        # Build a COCO image entry
        def _image(self, path):
            image = {}
            img = cv2.imread(self.image_dir + path)
            if img is None:  # cv2.imread returns None when the file cannot be read
                raise FileNotFoundError(self.image_dir + path)
            image['height'] = img.shape[0]
            image['width'] = img.shape[1]
            image['id'] = self.img_id
            image['file_name'] = path
            return image

        # Build a COCO annotation entry
        def _annotation(self, shape, label):
            points = shape[:4]
            box = self._get_box(points)
            annotation = {}
            annotation['id'] = self.ann_id
            annotation['image_id'] = self.img_id
            annotation['category_id'] = int(classname_to_id[label])
            annotation['segmentation'] = self._get_seg(points)
            annotation['bbox'] = box
            annotation['iscrowd'] = 0
            # Box area in pixels (width * height) instead of a constant placeholder
            annotation['area'] = float(box[2] * box[3])
            return annotation

        # COCO bbox format: [x1, y1, w, h]
        def _get_box(self, points):
            min_x, min_y, max_x, max_y = points
            return [min_x, min_y, max_x - min_x, max_y - min_y]

        # Segmentation: an 8-point polygon along the edges of the box
        def _get_seg(self, points):
            min_x, min_y, max_x, max_y = points
            h = max_y - min_y
            w = max_x - min_x
            return [[min_x, min_y, min_x, min_y + 0.5 * h, min_x, max_y,
                     min_x + 0.5 * w, max_y, max_x, max_y, max_x, max_y - 0.5 * h,
                     max_x, min_y, max_x - 0.5 * w, min_y]]


    if __name__ == '__main__':
        # Adjust these paths to your own directories
        csv_file = "data/annotations/scratches/scratches.csv"
        image_dir = "data/images/"
        saved_coco_path = "./"

        # Group the csv annotations by image file name
        total_csv_annotations = {}
        annotations = pd.read_csv(csv_file, header=None).values
        for annotation in annotations:
            if str(annotation[1]) == 'xmin':
                continue  # skip a header row if the csv was written with one
            # basename also handles the forward-slash paths in the csv on Windows,
            # where splitting on os.sep ('\\') would leave the full path
            key = os.path.basename(annotation[0])
            value = np.array([annotation[1:]])
            if key in total_csv_annotations:
                total_csv_annotations[key] = np.concatenate(
                    (total_csv_annotations[key], value), axis=0)
            else:
                total_csv_annotations[key] = value

        # Split the data by key (image file name)
        total_keys = list(total_csv_annotations.keys())
        train_keys, val_keys = train_test_split(total_keys, test_size=0.2)
        print("train_n:", len(train_keys), 'val_n:', len(val_keys))

        # Create the required folders
        for sub_dir in ('annotations', 'images/train', 'images/val'):
            os.makedirs('%ssteel/%s/' % (saved_coco_path, sub_dir), exist_ok=True)

        # Convert the training set to COCO JSON format
        l2c_train = Csv2CoCo(image_dir=image_dir, total_annos=total_csv_annotations)
        train_instance = l2c_train.to_coco(train_keys)
        l2c_train.save_coco_json(
            train_instance, '%ssteel/annotations/instances_train.json' % saved_coco_path)

        # Copy the images into the train and val folders
        for file in train_keys:
            shutil.copy(image_dir + file, "%ssteel/images/train/" % saved_coco_path)
        for file in val_keys:
            shutil.copy(image_dir + file, "%ssteel/images/val/" % saved_coco_path)

        # Convert the validation set to COCO JSON format
        l2c_val = Csv2CoCo(image_dir=image_dir, total_annos=total_csv_annotations)
        val_instance = l2c_val.to_coco(val_keys)
        l2c_val.save_coco_json(
            val_instance, '%ssteel/annotations/instances_val.json' % saved_coco_path)

With that, the data preprocessing is complete.
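Before training, it can be worth sanity-checking the generated JSON. The sketch below is one possible check, not part of the original workflow: the function `check_coco` and the demo data are hypothetical. It verifies that every annotation points at an existing image and category and that its box lies inside the image.

```python
def check_coco(instance):
    """Return a list of problems found in a COCO-style dict (empty if none)."""
    problems = []
    images = {img["id"]: img for img in instance["images"]}
    category_ids = {cat["id"] for cat in instance["categories"]}
    for ann in instance["annotations"]:
        img = images.get(ann["image_id"])
        if img is None:
            problems.append(f"annotation {ann['id']}: unknown image_id")
            continue
        if ann["category_id"] not in category_ids:
            problems.append(f"annotation {ann['id']}: unknown category_id")
        x, y, w, h = ann["bbox"]
        if (w <= 0 or h <= 0 or x < 0 or y < 0
                or x + w > img["width"] or y + h > img["height"]):
            problems.append(f"annotation {ann['id']}: bbox outside image or empty")
    return problems


# Tiny in-memory example; in practice the dict would come from json.load on
# a file such as steel/annotations/instances_train.json
demo = {
    "images": [{"id": 0, "file_name": "a.jpg", "width": 200, "height": 100}],
    "categories": [{"id": 1, "name": "scratches"}],
    "annotations": [
        {"id": 0, "image_id": 0, "category_id": 1, "bbox": [10, 20, 50, 60]},
        {"id": 1, "image_id": 0, "category_id": 1, "bbox": [180, 10, 40, 20]},
    ],
}
print(check_coco(demo))  # ['annotation 1: bbox outside image or empty']
```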

4. References

- https://blog.csdn.net/sty945/article/details/79387054

- https://blog.csdn.net/saltriver/article/details/79680189

- https://www.ctolib.com/topics-44419.html

- https://www.zhihu.com/question/20666664

- https://github.com/spytensor/prepare_detection_dataset#22-voc

- https://blog.csdn.net/chaipp0607/article/details/79036312