Netty: configuring a server for one million concurrent connections

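Preface

Every language behaves a little differently under extreme conditions. So how does Java, the language I use most, hold up at one million concurrent connections? I was curious, and rather hopeful.

For this test I used Netty, the NIO framework I reach for most often (netty-3.6.5.Final). It is well encapsulated and has a comprehensive API and, as its current domain name netty.io suggests, it focuses on network I/O.

There is nothing technically deep in the whole process, and the shallow analysis makes it drier still. Brace yourself and bear with me.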

Test server configuration

The server runs in VMware Workstation 9 on 64-bit CentOS 6.2, with roughly 14.9 GB of memory and 4 cores allocated.

Java 7 is installed:

    java version "1.7.0_21"
    Java(TM) SE Runtime Environment (build 1.7.0_21-b11)
    Java HotSpot(TM) 64-Bit Server VM (build 23.21-b01, mixed mode)

Add the following to /etc/sysctl.conf:

    fs.file-max = 1048576
    net.ipv4.ip_local_port_range = 1024 65535
    net.ipv4.tcp_mem = 786432 2097152 3145728
    net.ipv4.tcp_rmem = 4096 4096 16777216
    net.ipv4.tcp_wmem = 4096 4096 16777216
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_tw_recycle = 1

Add the following to /etc/security/limits.conf:

    * soft nofile 1048576
    * hard nofile 1048576
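One detail in the sysctl settings is easy to misread: net.ipv4.tcp_mem is expressed in memory pages, while tcp_rmem and tcp_wmem are in bytes. The following is a minimal sketch of the conversion, assuming a 4 KiB page size (typical for x86-64 Linux, but worth confirming with `getconf PAGESIZE`). The class name TcpMemPages is made up for illustration.

```java
/** Converts the tcp_mem thresholds above from pages to bytes. */
class TcpMemPages {
    // Assumption: 4 KiB pages, the usual size on x86-64 Linux.
    static final long PAGE_SIZE_BYTES = 4096L;

    static long pagesToBytes(long pages) {
        return pages * PAGE_SIZE_BYTES;
    }

    static double pagesToGiB(long pages) {
        return pagesToBytes(pages) / (1024.0 * 1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        String[] names = {"low", "pressure", "high"};
        long[] tcpMemPages = {786432L, 2097152L, 3145728L};
        for (int i = 0; i < names.length; i++) {
            System.out.printf("%-8s %d pages = %.1f GiB%n",
                    names[i], tcpMemPages[i], pagesToGiB(tcpMemPages[i]));
        }
    }
}
```

Under that assumption the three thresholds work out to 3, 8, and 12 GiB, so the upper limit stays below the roughly 14.9 GB given to the virtual machine.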

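Test client

The test client's configuration and program are the same as before; the source of client5.c and the related configuration can be found in the previous few blog posts.

Server program

This one is simple too: there is no business logic. The client sends an HTTP request and the server responds with chunked-encoded content.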
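For readers unfamiliar with chunked transfer encoding, here is a small standalone sketch of how a single chunk is framed on the wire: the payload size in hexadecimal, CRLF, the payload, CRLF. It uses only the JDK and is not how Netty implements it internally (HttpResponseEncoder does the framing in the real server); the class name ChunkFraming is hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

/** Frames payloads the way HTTP/1.1 chunked transfer encoding does. */
class ChunkFraming {
    private static final byte[] CRLF = {'\r', '\n'};

    /** Returns size-in-hex, CRLF, data, CRLF for one chunk. */
    static byte[] encodeChunk(byte[] data) {
        byte[] size = Integer.toHexString(data.length).getBytes(StandardCharsets.US_ASCII);
        ByteArrayOutputStream out = new ByteArrayOutputStream(size.length + data.length + 4);
        out.write(size, 0, size.length);
        out.write(CRLF, 0, CRLF.length);
        out.write(data, 0, data.length);
        out.write(CRLF, 0, CRLF.length);
        return out.toByteArray();
    }

    /** The zero-length chunk that ends a chunked body (no trailers). */
    static byte[] lastChunk() {
        return "0\r\n\r\n".getBytes(StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        byte[] padding = new byte[256];
        java.util.Arrays.fill(padding, (byte) ' ');
        byte[] framed = encodeChunk(padding);
        // 256 is 0x100, so the frame is "100" CRLF, 256 payload bytes, CRLF.
        System.out.println("framed length = " + framed.length);
    }
}
```

The test server writes the response headers and one chunk and then keeps the connection open without ever sending the terminating chunk, which is what turns each request into a long-lived connection.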

Entry point, HttpChunkedServer.java:

    package com.test.server;

    import static org.jboss.netty.channel.Channels.pipeline;

    import java.net.InetSocketAddress;
    import java.util.concurrent.Executors;

    import org.jboss.netty.bootstrap.ServerBootstrap;
    import org.jboss.netty.channel.ChannelPipeline;
    import org.jboss.netty.channel.ChannelPipelineFactory;
    import org.jboss.netty.channel.socket.nio.NioServerSocketChannelFactory;
    import org.jboss.netty.handler.codec.http.HttpChunkAggregator;
    import org.jboss.netty.handler.codec.http.HttpRequestDecoder;
    import org.jboss.netty.handler.codec.http.HttpResponseEncoder;
    import org.jboss.netty.handler.stream.ChunkedWriteHandler;

    public class HttpChunkedServer {
        private final int port;

        public HttpChunkedServer(int port) {
            this.port = port;
        }

        public void run() {
            // Configure the server: one thread pool for accepting (boss),
            // one for I/O (workers).
            ServerBootstrap bootstrap = new ServerBootstrap(
                    new NioServerSocketChannelFactory(
                            Executors.newCachedThreadPool(),
                            Executors.newCachedThreadPool()));

            // Set up the event pipeline factory.
            bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
                public ChannelPipeline getPipeline() throws Exception {
                    ChannelPipeline pipeline = pipeline();
                    pipeline.addLast("decoder", new HttpRequestDecoder());
                    pipeline.addLast("aggregator", new HttpChunkAggregator(65536));
                    pipeline.addLast("encoder", new HttpResponseEncoder());
                    pipeline.addLast("chunkedWriter", new ChunkedWriteHandler());
                    pipeline.addLast("handler", new HttpChunkedServerHandler());
                    return pipeline;
                }
            });

            // Options prefixed with "child." apply to accepted connections.
            bootstrap.setOption("child.reuseAddress", true);
            bootstrap.setOption("child.tcpNoDelay", true);
            bootstrap.setOption("child.keepAlive", true);

            // Bind and start to accept incoming connections.
            bootstrap.bind(new InetSocketAddress(port));
        }

        public static void main(String[] args) {
            int port;
            if (args.length > 0) {
                port = Integer.parseInt(args[0]);
            } else {
                port = 8080;
            }

            System.out.format("server start with port %d \n", port);
            new HttpChunkedServer(port).run();
        }
    }

The only custom handler, HttpChunkedServerHandler.java:

    package com.test.server;

    import static org.jboss.netty.handler.codec.http.HttpHeaders.Names.CONTENT_TYPE;
    import static org.jboss.netty.handler.codec.http.HttpMethod.GET;
    import static org.jboss.netty.handler.codec.http.HttpResponseStatus.BAD_REQUEST;
    import static org.jboss.netty.handler.codec.http.HttpResponseStatus.METHOD_NOT_ALLOWED;
    import static org.jboss.netty.handler.codec.http.HttpResponseStatus.OK;
    import static org.jboss.netty.handler.codec.http.HttpVersion.HTTP_1_1;

    import java.util.concurrent.atomic.AtomicInteger;

    import org.jboss.netty.buffer.ChannelBuffer;
    import org.jboss.netty.buffer.ChannelBuffers;
    import org.jboss.netty.channel.Channel;
    import org.jboss.netty.channel.ChannelFutureListener;
    import org.jboss.netty.channel.ChannelHandlerContext;
    import org.jboss.netty.channel.ChannelStateEvent;
    import org.jboss.netty.channel.ExceptionEvent;
    import org.jboss.netty.channel.MessageEvent;
    import org.jboss.netty.channel.SimpleChannelUpstreamHandler;
    import org.jboss.netty.handler.codec.frame.TooLongFrameException;
    import org.jboss.netty.handler.codec.http.DefaultHttpChunk;
    import org.jboss.netty.handler.codec.http.DefaultHttpResponse;
    import org.jboss.netty.handler.codec.http.HttpChunk;
    import org.jboss.netty.handler.codec.http.HttpHeaders;
    import org.jboss.netty.handler.codec.http.HttpRequest;
    import org.jboss.netty.handler.codec.http.HttpResponse;
    import org.jboss.netty.handler.codec.http.HttpResponseStatus;
    import org.jboss.netty.util.CharsetUtil;

    public class HttpChunkedServerHandler extends SimpleChannelUpstreamHandler {
        // Number of clients currently holding an open chunked response.
        private static final AtomicInteger count = new AtomicInteger(0);

        private void increment() {
            System.out.format("online user %d\n", count.incrementAndGet());
        }

        private void decrement() {
            // Never report a negative count: a channel can disconnect
            // without having sent a request that was counted.
            if (count.get() <= 0) {
                System.out.format("~online user %d\n", 0);
            } else {
                System.out.format("~online user %d\n", count.decrementAndGet());
            }
        }

        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e)
                throws Exception {
            HttpRequest request = (HttpRequest) e.getMessage();
            if (request.getMethod() != GET) {
                sendError(ctx, METHOD_NOT_ALLOWED);
                return;
            }

            sendPrepare(ctx);
            increment();
        }

        @Override
        public void channelDisconnected(ChannelHandlerContext ctx,
                ChannelStateEvent e) throws Exception {
            decrement();
            super.channelDisconnected(ctx, e);
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, ExceptionEvent e)
                throws Exception {
            Throwable cause = e.getCause();
            if (cause instanceof TooLongFrameException) {
                sendError(ctx, BAD_REQUEST);
                return;
            }
            // All other exceptions are deliberately ignored in this test.
        }

        private static void sendError(ChannelHandlerContext ctx,
                HttpResponseStatus status) {
            HttpResponse response = new DefaultHttpResponse(HTTP_1_1, status);
            response.setHeader(CONTENT_TYPE, "text/plain; charset=UTF-8");
            response.setContent(ChannelBuffers.copiedBuffer(
                    "Failure:" + status.toString() + "\r\n", CharsetUtil.UTF_8));

            // Close the connection as soon as the error message is sent.
            ctx.getChannel().write(response)
                    .addListener(ChannelFutureListener.CLOSE);
        }

        private void sendPrepare(ChannelHandlerContext ctx) {
            HttpResponse response = new DefaultHttpResponse(HTTP_1_1, OK);
            response.setChunked(true);
            response.setHeader(HttpHeaders.Names.CONTENT_TYPE,
                    "text/html; charset=UTF-8");
            response.addHeader(HttpHeaders.Names.CONNECTION,
                    HttpHeaders.Values.KEEP_ALIVE);
            response.setHeader(HttpHeaders.Names.TRANSFER_ENCODING,
                    HttpHeaders.Values.CHUNKED);

            Channel chan = ctx.getChannel();
            chan.write(response);

            // The buffer must be padded to 256 bytes before the browser
            // will receive it properly ...
            StringBuilder builder = new StringBuilder();
            // Initial content (empty in this listing).
            builder.append("");
            int leftChars = 256 - builder.length();
            for (int i = 0; i < leftChars; i++) {
                // Pad with spaces (assumption: the padding character is
                // missing from this listing).
                builder.append(" ");
            }

            writeStringChunk(chan, builder.toString());
        }

        private void writeStringChunk(Channel channel, String data) {
            ChannelBuffer chunkContent = ChannelBuffers.dynamicBuffer(channel
                    .getConfig().getBufferFactory());
            // Encode explicitly as UTF-8 to match the Content-Type header.
            chunkContent.writeBytes(data.getBytes(CharsetUtil.UTF_8));
            HttpChunk chunk = new DefaultHttpChunk(chunkContent);

            channel.write(chunk);
        }
    }

Startup script start.sh

    export CLASSPATH=.

    nohup java -server -Xmx6G -Xms6G -Xmn600M \
        -XX:PermSize=50M -XX:MaxPermSize=50M -Xss256K \
        -XX:+DisableExplicitGC -XX:SurvivorRatio=1 \
        -XX:+UseConcMarkSweepGC -XX:+UseParNewGC \
        -XX:+CMSParallelRemarkEnabled \
        -XX:+UseCMSCompactAtFullCollection -XX:CMSFullGCsBeforeCompaction=0 \
        -XX:+CMSClassUnloadingEnabled -XX:LargePageSizeInBytes=128M \
        -XX:+UseFastAccessorMethods \
        -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=80 \
        -XX:SoftRefLRUPolicyMSPerMB=0 \
        -XX:+PrintClassHistogram -XX:+PrintGCDetails -XX:+PrintGCTimeStamps \
        -XX:+PrintHeapAtGC -Xloggc:gc.log \
        -Djava.ext.dirs=lib com.test.server.HttpChunkedServer 8000 >server.out 2>&1 &

Some data at one million concurrent connections

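Each time the server reached one million concurrent persistent connections, I shut down the test clients to drop every connection, waited until the server log showed 0 online users, and then repeated the process. Memory and other figures were observed across these repeated cycles. Taking one such moment, right after all test clients had disconnected, the system memory usage was as follows (call it list_free_1):

                 total       used       free     shared    buffers     cached
    Mem:         15189       7736       7453          0         18        120
    -/+ buffers/cache:       7597       7592
    Swap:         4095        948       3147

Process information as seen in top: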

      PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
     4925 root      20   0 8206m 4.3g 2776 S  0.3 28.8  50:18.66 java

The heap size was set to 6 GB in the startup script start.sh.

Output of ps aux|grep java:

    root 4925 38.0 28.8 8403444 4484764 ? Sl 15:26 50:18 java -server...HttpChunkedServer 8000

The RSS is 4484764 KB / 1024 ≈ 4379 MB.

I then started the test clients again. When the server logged online user 1023749, ps aux|grep java showed:

    root 4925 43.6 28.4 8403444 4422824 ? Sl 15:26 62:53 java -server...

Current network statistics:

    ss -s
    Total: 1024050 (kernel 1024084)
    TCP:   1023769 (estab 1023754, closed 2, orphaned 0, synrecv 0, timewait 0/0), ports 12

    Transport Total     IP        IPv6
    *         1024084   -         -
    RAW       0         0         0
    UDP       7         6         1
    TCP       1023767   12        1023755
    INET      1023774   18        1023756
    FRAG      0         0         0

A look at top:

    top -p 4925
    top - 17:51:30 up  3:02,  4 users,  load average: 1.03, 1.80, 1.19
    Tasks:   1 total,   0 running,   1 sleeping,   0 stopped,   0 zombie
    Cpu0  :  0.9%us,  2.6%sy,  0.0%ni, 52.9%id,  1.0%wa, 13.6%hi, 29.0%si,  0.0%st
    Cpu1  :  1.4%us,  4.5%sy,  0.0%ni, 80.1%id,  1.9%wa,  0.0%hi, 12.0%si,  0.0%st
    Cpu2  :  1.5%us,  4.4%sy,  0.0%ni, 80.5%id,  4.3%wa,  0.0%hi,  9.3%si,  0.0%st
    Cpu3  :  1.9%us,  4.4%sy,  0.0%ni, 84.4%id,  3.2%wa,  0.0%hi,  6.2%si,  0.0%st
    Mem:  15554336k total, 15268728k used,   285608k free,     3904k buffers
    Swap:  4194296k total,  1082592k used,  3111704k free,    37968k cached

      PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
     4925 root      20   0 8206m 4.2g 2220 S  3.3 28.4  62:53.66 java

All four cores are in use, but the load is not evenly spread: Cpu0 carries most of the interrupt handling (13.6% hi and 29.0% si). These results come from a virtual machine; a physical server would probably do better. Since this is not a CPU-intensive application, the CPU is not the bottleneck and needs no further attention.
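To put a number on the imbalance between cores, the per-CPU lines of the top output can be parsed and the hardware and software interrupt shares (hi and si) added up. This is a rough sketch using only java.util.regex; the class and method names are invented for illustration, and it assumes the procps field layout shown in the output above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Parses per-CPU lines from top, e.g. "Cpu0 : 0.9%us, 2.6%sy, ...". */
class TopCpuLine {
    // Matches a percentage followed by its field name, such as "29.0%si".
    private static final Pattern FIELD = Pattern.compile("(\\d+(?:\\.\\d+)?)%(\\w+)");

    static Map<String, Double> parse(String line) {
        Map<String, Double> fields = new LinkedHashMap<String, Double>();
        Matcher m = FIELD.matcher(line);
        while (m.find()) {
            fields.put(m.group(2), Double.parseDouble(m.group(1)));
        }
        return fields;
    }

    /** Share of time spent in hardware plus software interrupts. */
    static double interruptShare(String line) {
        Map<String, Double> f = parse(line);
        return f.get("hi") + f.get("si");
    }

    public static void main(String[] args) {
        String[] lines = {
            "Cpu0 : 0.9%us, 2.6%sy, 0.0%ni, 52.9%id, 1.0%wa, 13.6%hi, 29.0%si, 0.0%st",
            "Cpu1 : 1.4%us, 4.5%sy, 0.0%ni, 80.1%id, 1.9%wa, 0.0%hi, 12.0%si, 0.0%st",
            "Cpu2 : 1.5%us, 4.4%sy, 0.0%ni, 80.5%id, 4.3%wa, 0.0%hi, 9.3%si, 0.0%st",
            "Cpu3 : 1.9%us, 4.4%sy, 0.0%ni, 84.4%id, 3.2%wa, 0.0%hi, 6.2%si, 0.0%st"
        };
        for (int i = 0; i < lines.length; i++) {
            System.out.printf("Cpu%d interrupts: %.1f%%%n", i, interruptShare(lines[i]));
        }
    }
}
```

For the snapshot above, Cpu0 spends about 42.6% of its time on interrupts, against 6.2% to 12.0% on the other three cores.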

System memory status

    free -m
                 total       used       free     shared    buffers     cached
    Mem:         15189      14926        263          0          5         56
    -/+ buffers/cache:      14864        324
    Swap:         4095       1057       3038

Physical memory is no longer sufficient: 1057 MB of swap is in use.
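A rough per-connection cost can be estimated from the two free -m snapshots: take the "used" figure on the -/+ buffers/cache line under load (14864 MB), subtract the same figure after all clients disconnected (7597 MB in list_free_1), and divide by the connection count. The sketch below does that arithmetic; it treats the logged 1023749 online users as the connection count and ignores the extra swap in use, so it is an approximation only. The class name is hypothetical.

```java
/** Back-of-the-envelope memory cost per connection from two free -m readings. */
class PerConnectionMemory {
    static double bytesPerConnection(long usedIdleMb, long usedLoadedMb, long connections) {
        long deltaBytes = (usedLoadedMb - usedIdleMb) * 1024L * 1024L;
        return (double) deltaBytes / connections;
    }

    public static void main(String[] args) {
        // 7597 MB used when idle, 14864 MB used at about 1,023,749 connections.
        double bytes = bytesPerConnection(7597L, 14864L, 1023749L);
        System.out.printf("about %.0f bytes (%.1f KiB) per connection%n", bytes, bytes / 1024.0);
    }
}
```

This comes to roughly 7.3 KiB of system memory per connection, covering both kernel socket buffers and the JVM's share.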

Heap status

    jmap -heap 4925
    Attaching to process ID 4925, please wait...
    Debugger attached successfully.
    Server compiler detected.
    JVM version is 23.21-b01

    using parallel threads in the new generation.
    using thread-local object allocation.
    Concurrent Mark-Sweep GC

    Heap Configuration:
       MinHeapFreeRatio = 40
       MaxHeapFreeRatio = 70
       MaxHeapSize      = 6442450944 (6144.0MB)
       NewSize          = 629145600 (600.0MB)
       MaxNewSize       = 629145600 (600.0MB)
       OldSize          = 5439488 (5.1875MB)
       NewRatio         = 2
       SurvivorRatio    = 1
       PermSize         = 52428800 (50.0MB)
       MaxPermSize      = 52428800 (50.0MB)
       G1HeapRegionSize = 0 (0.0MB)

    Heap Usage:
    New Generation (Eden + 1 Survivor Space):
       capacity = 419430400 (400.0MB)
       used     = 308798864 (294.49354553222656MB)
       free     = 110631536 (105.50645446777344MB)
       73.62338638305664% used
    Eden Space:
       capacity = 209715200 (200.0MB)
       used     = 103375232 (98.5863037109375MB)
       free     = 106339968 (101.4136962890625MB)
       49.29315185546875% used
    From Space:
       capacity = 209715200 (200.0MB)
       used     = 205423632 (195.90724182128906MB)
       free     = 4291568 (4.0927581787109375MB)
       97.95362091064453% used
    To Space:
       capacity = 209715200 (200.0MB)
       used     = 0 (0.0MB)
       free     = 209715200 (200.0MB)
       0.0% used
    concurrent mark-sweep generation:
       capacity = 5813305344 (5544.0MB)
       used     = 4213515472 (4018.321487426758MB)
       free     = 1599789872 (1525.6785125732422MB)
       72.48054631000646% used
    Perm Generation:
       capacity = 52428800 (50.0MB)
       used     = 5505696 (5.250640869140625MB)
       free     = 46923104 (44.749359130859375MB)
       10.50128173828125% used

    1439 interned Strings occupying 110936 bytes.

The old generation is 72% full, which is reasonable given that the system is handling one million connections.
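The generation capacities reported by jmap follow directly from the startup flags -Xmx6G, -Xmn600M and -XX:SurvivorRatio=1. SurvivorRatio is the ratio of Eden to one survivor space, and the young generation holds Eden plus two survivor spaces. A small sketch of that arithmetic (the class name is made up, and the JVM's own alignment of sizes is ignored here):

```java
/** Derives generation sizes in MB from -Xmx, -Xmn and -XX:SurvivorRatio. */
class GenerationSizes {
    /** Young gen = Eden + 2 survivors, and Eden = ratio * survivor. */
    static long survivorMb(long youngMb, int survivorRatio) {
        return youngMb / (survivorRatio + 2);
    }

    static long edenMb(long youngMb, int survivorRatio) {
        return youngMb - 2 * survivorMb(youngMb, survivorRatio);
    }

    /** Everything in the heap that is not young generation. */
    static long oldMb(long heapMb, long youngMb) {
        return heapMb - youngMb;
    }

    public static void main(String[] args) {
        long heapMb = 6144L;   // -Xmx6G
        long youngMb = 600L;   // -Xmn600M
        int ratio = 1;         // -XX:SurvivorRatio=1
        System.out.println("eden     = " + edenMb(youngMb, ratio) + " MB");
        System.out.println("survivor = " + survivorMb(youngMb, ratio) + " MB each");
        System.out.println("old      = " + oldMb(heapMb, youngMb) + " MB");
    }
}
```

The results (200 MB Eden, 200 MB per survivor space, 5544 MB old generation) match the jmap output. They also show that only 400 MB of the 600 MB young generation is usable at any moment, since one survivor space is always kept empty.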

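After disconnecting all test clients once more, system memory (free -m) looks like this:

                 total       used       free     shared    buffers     cached
    Mem:         15189       7723       7466          0         13        120
    -/+ buffers/cache:       7589       7599
    Swap:         4095        950       3145

Call this list_free_2.

Comparing list_free_1 and list_free_2, both taken after every connection had been released: memory in use, excluding buffers and cache, is now 7589 MB, versus 7597 MB before, so it is essentially unchanged.

In short, as far as memory goes, this Java test program needs at least 7589 + 950 = 8539 MB, or about 8.3 GB.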

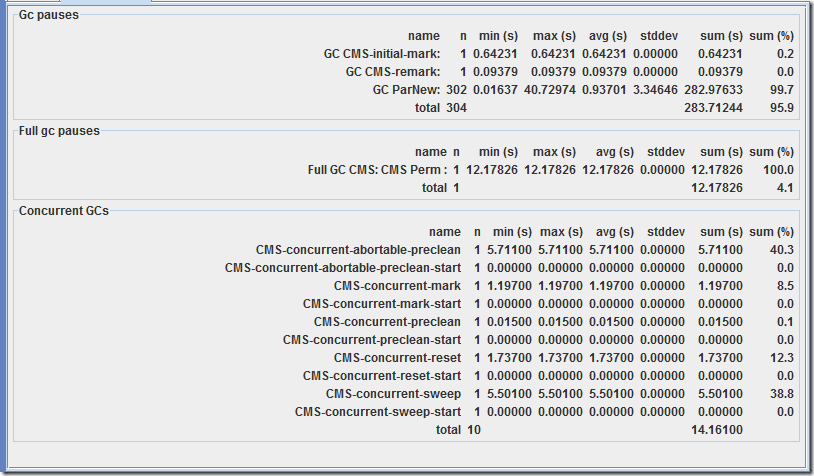

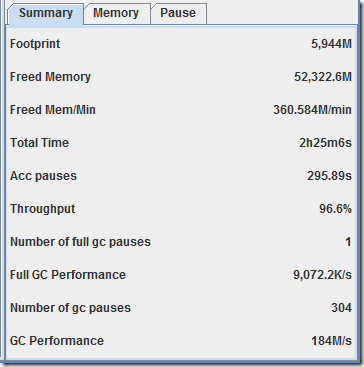

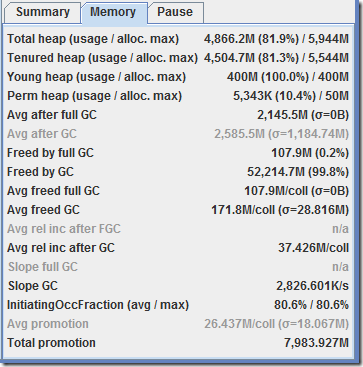

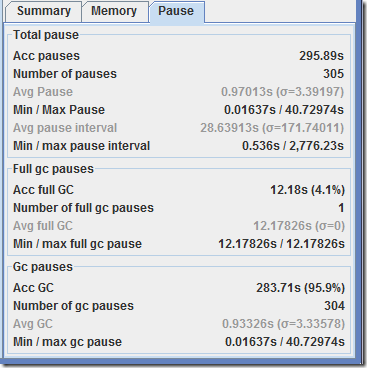

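GC log

Whether the long string of parameters set in the startup script actually achieved its goal has to be judged from the GC log. GCViewer is recommended for this.

In summary:

- Only one Full GC occurred, but it was too costly: it paused for 12 seconds.

- ParNew was the biggest source of pauses, stalling the whole system for a total of 41 seconds, which is unacceptable.

- The current JVM tuning is a mixed bag; more work is needed.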
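Totals like the ones above can also be cross-checked without a GUI by summing the real= times in gc.log. The sketch below is one way to do it, under the assumption that each collection is logged on a single line ending in a [Times: ... real=N secs] section, as -XX:+PrintGCDetails normally produces on HotSpot 7. The sample lines are invented to illustrate the layout and are not taken from the author's log; the class name is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sums wall-clock pause times for GC log lines containing a marker. */
class GcPauseSum {
    private static final Pattern REAL_TIME = Pattern.compile("real=(\\d+\\.\\d+) secs");

    static double totalRealSeconds(List<String> logLines, String marker) {
        double total = 0.0;
        for (String line : logLines) {
            if (!line.contains(marker)) {
                continue; // not the collector we are interested in
            }
            Matcher m = REAL_TIME.matcher(line);
            if (m.find()) {
                total += Double.parseDouble(m.group(1));
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Made-up sample lines, for illustration only.
        List<String> sample = Arrays.asList(
            "10.100: [GC 10.100: [ParNew: 409600K->204800K(409600K), 0.3501000 secs] "
                + "900000K->700000K(6086656K), 0.3502000 secs] "
                + "[Times: user=1.20 sys=0.05, real=0.35 secs]",
            "20.200: [GC 20.200: [ParNew: 409600K->204800K(409600K), 0.4001000 secs] "
                + "1100000K->900000K(6086656K), 0.4002000 secs] "
                + "[Times: user=1.40 sys=0.06, real=0.40 secs]",
            "95.000: [Full GC 95.000: [CMS: 4100000K->3900000K(5677056K), 12.1000000 secs] "
                + "4400000K->3900000K(6086656K), [CMS Perm : 5376K->5376K(51200K)], "
                + "12.1001000 secs] [Times: user=11.90 sys=0.10, real=12.10 secs]");
        System.out.printf("ParNew total:  %.2f s%n", totalRealSeconds(sample, "ParNew"));
        System.out.printf("Full GC total: %.2f s%n", totalRealSeconds(sample, "Full GC"));
    }
}
```

In practice the lines would be read from gc.log rather than hard-coded, and the extra output from -XX:+PrintHeapAtGC is skipped automatically because it contains neither marker.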

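Summary

Compared with Erlang and C, Java is more troublesome in that you have to decide up front how much heap the program will need. In other words, the JVM startup parameters must set the heap size and a suitable garbage collection strategy; if the program later needs more memory, you have to stop it, edit the startup parameters, and start it again. In a word, it is a hassle. JVM tuning alone requires continual small adjustments based on monitoring, metrics, and logs.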

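- The JVM requires the heap size to be specified in advance, which can be a nuisance compared with Erlang or C.

- GC (garbage collection) is comparatively troublesome: JVM parameters need continual fine-tuning based on logs, JVM heap information, and runtime behavior.

- Set a target for the maximum number of connections, test up to that peak several times, release all connections each time, and watch memory usage across runs to arrive at a suitable memory figure for the system.

- Eclipse Memory Analyzer, combined with a heap dump exported by jmap, makes analyzing memory leaks quite convenient.

- Modifying code at runtime, that is, hot loading, is not possible by default.

- Results on a real machine should be better.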
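Since tuning depends on watching heap usage as the connection count rises and falls, one option is to have the server itself report heap figures next to the online-user count, as a complement to running jmap by hand. The following is only a sketch using the standard java.lang.management API; HeapReport and describe are invented names and are not part of the test program.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

/** Formats the current heap usage alongside a connection count. */
class HeapReport {
    static String describe(int onlineUsers) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        long usedMb = heap.getUsed() >> 20;
        // getMax() returns -1 when no maximum is defined, which stays -1 here.
        long maxMb = heap.getMax() >> 20;
        return String.format("online user %d, heap used %d MB of %d MB",
                onlineUsers, usedMb, maxMb);
    }

    public static void main(String[] args) {
        System.out.println(describe(0));
    }
}
```

Logging such a line every few thousand connections, rather than on every connect, would keep the output manageable at this scale.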

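A gripe:

Compared with Erlang, Java OSGi requires a change of mindset. It is not a native part of the platform, so it always feels a little awkward. The patching done by the community and commercial vendors amounts to no more than bolting on, as an enterprise feature, the hot loading that object-oriented languages lack.

Test source code: download just_test.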
