Classification with an RNN that uses two stacked LSTM hidden layers

Overall approach

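To keep things easy to run, this post uses MNIST as the example: recognizing handwritten digit images, each 28*28 pixels. A network with two stacked LSTM hidden layers classifies each image and outputs a probability for each of the 10 digit classes. The overall network structure is as follows: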

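(1) Input layer [each time step is a vector of length 28 (one image row), and each sample feeds in 28 consecutive time steps, so one input sample is 28*28]

(2) First LSTM layer [64 hidden units; at every time step each unit sees both the 28-value input vector and the 64 values this layer produced at the previous time step; the layer emits 64 outputs per time step]

(3) First Dropout layer [during training, randomly drops half of the first LSTM layer's outputs (keep_prob = 0.5); the drop is applied to the outputs handed to the next layer, not to the recurrent state]

(4) Second LSTM layer [64 hidden units; reads the output of the layer above and produces 64 outputs]

(5) Second Dropout layer [randomly drops half of the second LSTM layer's outputs. Since a fully connected layer comes next, it is tempting to read this as dropping some of the 64*10 connections at random; what DropoutWrapper actually does is zero individual output values, so all 10 connections leaving a dropped unit go silent together]

(6) Fully connected layer [reads the last time step's output from the layer above, computes w*x+b to produce this layer's 10 outputs, and applies softmax to get the probability of each of the 10 classes]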
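As a rough sanity check on the sizes in the list above, the sketch below reshapes a flat 784-pixel image into 28 time steps and counts trainable parameters per layer. It assumes the standard LSTM layout of four gates that each read the concatenated input and previous hidden state plus a bias, which is the layout BasicLSTMCell uses. The helper names are made up for illustration and are not part of the original code.

```python
def to_time_steps(flat_pixels, n_steps=28, n_inputs=28):
    """Split a flat image into n_steps rows of n_inputs pixels each."""
    assert len(flat_pixels) == n_steps * n_inputs
    return [flat_pixels[t * n_inputs:(t + 1) * n_inputs] for t in range(n_steps)]


def lstm_param_count(input_size, hidden_size):
    """Parameters of one LSTM layer: 4 gates, each with weights over
    [input, previous hidden] and one bias per hidden unit."""
    return 4 * ((input_size + hidden_size) * hidden_size + hidden_size)


def dense_param_count(input_size, output_size):
    """Parameters of a fully connected layer: weight matrix plus bias."""
    return input_size * output_size + output_size


n_steps, n_inputs, n_hiddens, n_classes = 28, 28, 64, 10

steps = to_time_steps(list(range(n_steps * n_inputs)))
layer1 = lstm_param_count(n_inputs, n_hiddens)   # first LSTM layer
layer2 = lstm_param_count(n_hiddens, n_hiddens)  # second LSTM layer
dense = dense_param_count(n_hiddens, n_classes)  # output layer

print(len(steps), len(steps[0]))
print(layer1, layer2, dense, layer1 + layer2 + dense)
```

Every one of the 64 units in the first layer is wired to all 28 inputs and all 64 previous outputs, which is why the first factor is (28 + 64) rather than a split between the two.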

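With the network structure defined this way, feeding in data yields a probability for each class. A cross-entropy loss then gives the mean loss over the batch, and an optimizer trains the network on it.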
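To make the loss concrete, here is a minimal pure-Python sketch of softmax followed by the batch-averaged cross entropy, under the assumption that labels are one-hot vectors as in this post. The function names and the small `eps` guard against log(0) are illustrative choices, not taken from the original code.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, one_hot, eps=1e-10):
    """-sum(t * log(p)) for a single sample; eps keeps log away from zero."""
    return -sum(t * math.log(max(p, eps)) for p, t in zip(probs, one_hot))


def mean_batch_loss(batch_logits, batch_labels):
    """Average the per-sample cross entropy over the batch."""
    losses = [cross_entropy(softmax(l), t)
              for l, t in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)


# An untrained network that scores all 10 digits equally.
uniform_logits = [0.0] * 10
label_for_digit_3 = [1.0 if k == 3 else 0.0 for k in range(10)]
loss = mean_batch_loss([uniform_logits], [label_for_digit_3])
print(loss)
```

With all 10 classes equally likely, the loss equals ln(10), which is a handy reference point for the first few training steps.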

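# TensorFlow 1.x API (tf.contrib and tensorflow.examples.tutorials were removed in 2.x)
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

Data loading

tf.reset_default_graph()

# Hyper Parameters
learning_rate = 0.01  # learning rate
n_steps = 28          # number of LSTM unrolled steps (sequence length)
n_inputs = 28         # number of input nodes
n_hiddens = 64        # number of hidden nodes
n_layers = 2          # number of LSTM layers
n_classes = 10        # number of output nodes (number of classes)

# data
mnist = input_data.read_data_sets("MNIST_data", one_hot=True)
test_x = mnist.test.images
test_y = mnist.test.labels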

Defining the network parameters

# tensor placeholder
with tf.name_scope('inputs'):
    x = tf.placeholder(tf.float32, [None, n_steps * n_inputs], name='x_input')  # input
    y = tf.placeholder(tf.float32, [None, n_classes], name='y_input')           # output
    keep_prob = tf.placeholder(tf.float32, name='keep_prob_input')              # fraction kept by dropout
    batch_size = tf.placeholder(tf.int32, [], name='batch_size_input')          # batch size

# weights and biases
with tf.name_scope('weights'):
    Weights = tf.Variable(tf.truncated_normal([n_hiddens, n_classes], stddev=0.1), dtype=tf.float32, name='W')
    tf.summary.histogram('output_layer_weights', Weights)
with tf.name_scope('biases'):
    biases = tf.Variable(tf.random_normal([n_classes]), name='b')
    tf.summary.histogram('output_layer_biases', biases)

Defining the network structure

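1. An RNN containing two LSTM blocks is defined. Each block has two parts: a hidden layer of 64 units defined by BasicLSTMCell, and a Dropout layer that randomly drops half of that layer's outputs (not its parameters).

2. MultiRNNCell builds the multi-hidden-layer structure: it stacks the two LSTM blocks collected in enc_cells, which amounts to an RNN with several LSTM hidden layers.

3. dynamic_rnn builds the dynamic RNN: it feeds the input x through the multi-layer network and returns the last layer's output at every time step. "Dynamic" is in contrast to a static RNN: the time loop runs as a while loop at execution time, so the number of input steps can vary instead of being fixed when the graph is built.

To sum up, from the whole down to the parts: dynamic_rnn (the network as seen from the data: takes the input and returns outputs of size 64) -> MultiRNNCell (defines the overall network by connecting the LSTM blocks) -> attn_cell (the self-defined LSTM block: one BasicLSTMCell plus one Dropout operation)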
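The three levels above can be sketched in plain Python. This is only an illustration of the control flow, not TensorFlow's implementation: `make_toy_cell` is a hypothetical stand-in for an LSTM cell (it has no gates or learned weights), `dropout` mimics output-only inverted dropout, and `run_stacked` plays the role of the run-time while loop over time steps.

```python
import math
import random


def make_toy_cell(hidden_size):
    """Hypothetical stand-in for an LSTM cell: h_new = tanh(mean(x) + h)."""
    def cell(x, h):
        drive = sum(x) / len(x)
        h_new = [math.tanh(drive + v) for v in h]
        return h_new, h_new  # (output, new state)
    return cell


def dropout(values, keep_prob, rng):
    """Zero each value with probability 1 - keep_prob and rescale the rest."""
    if keep_prob >= 1.0:
        return list(values)
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


def run_stacked(sequence, cells, hidden_size, keep_prob=1.0, seed=0):
    """Push a sequence of any length through a stack of cells."""
    rng = random.Random(seed)
    states = [[0.0] * hidden_size for _ in cells]  # zero initial state per layer
    outputs = []
    t = 0
    while t < len(sequence):  # sequence length is only known at run time
        layer_input = sequence[t]
        for k, cell in enumerate(cells):
            out, states[k] = cell(layer_input, states[k])  # state is kept undropped
            layer_input = dropout(out, keep_prob, rng)     # only the output is dropped
        outputs.append(layer_input)
        t += 1
    return outputs, states


cells = [make_toy_cell(64) for _ in range(2)]   # two stacked blocks
image_rows = [[1.0] * 28 for _ in range(28)]    # 28 steps of 28 inputs
outputs, final_states = run_stacked(image_rows, cells, 64, keep_prob=0.5)
last_output = outputs[-1]                       # what the dense layer would read
print(len(outputs), len(last_output))
```

The same `run_stacked` call works unchanged for a shorter or longer sequence, which is the property the word "dynamic" refers to.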

# RNN structure
def RNN_LSTM(x, Weights, biases):
    # reshape the flat input into [batch, time steps, inputs per step]
    x = tf.reshape(x, [-1, n_steps, n_inputs])

    # define one LSTM cell with dropout on its output
    def attn_cell():
        lstm_cell = tf.contrib.rnn.BasicLSTMCell(n_hiddens)
        with tf.name_scope('lstm_dropout'):
            return tf.contrib.rnn.DropoutWrapper(lstm_cell, output_keep_prob=keep_prob)

    # stack the cells into a multi-layer LSTM
    enc_cells = [attn_cell() for _ in range(n_layers)]
    with tf.name_scope('lstm_cells_layers'):
        mlstm_cell = tf.contrib.rnn.MultiRNNCell(enc_cells, state_is_tuple=True)

    # initialize the state with zeros
    _init_state = mlstm_cell.zero_state(batch_size, dtype=tf.float32)

    # run the network with dynamic_rnn
    outputs, states = tf.nn.dynamic_rnn(mlstm_cell, x, initial_state=_init_state, dtype=tf.float32, time_major=False)

    # output: class probabilities from the last time step
    # return tf.matmul(outputs[:, -1, :], Weights) + biases
    return tf.nn.softmax(tf.matmul(outputs[:, -1, :], Weights) + biases)

with tf.name_scope('output_layer'):
    pred = RNN_LSTM(x, Weights, biases)
    tf.summary.histogram('outputs', pred)

Defining the loss function and optimizer

# cost
with tf.name_scope('loss'):
    # pred is already a softmax output, so the cross entropy is written out by hand.
    # tf.nn.softmax_cross_entropy_with_logits would need the raw logits instead.
    # Clipping keeps tf.log away from log(0), which would produce NaN.
    cost = tf.reduce_mean(-tf.reduce_sum(y * tf.log(tf.clip_by_value(pred, 1e-10, 1.0)), reduction_indices=[1]))
    tf.summary.scalar('loss', cost)

# optimizer
with tf.name_scope('train'):
    train_op = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

with tf.name_scope('accuracy'):
    # Accuracy of the batch that is fed in. tf.metrics.accuracy keeps a running
    # total across every sess.run call, which would blend the training batches
    # and the test set into a single number.
    correct_pred = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))
    tf.summary.scalar('accuracy', accuracy)

Merging summaries and initialization

merged = tf.summary.merge_all()
init = tf.group(tf.global_variables_initializer(), tf.local_variables_initializer())

Starting training

with tf.Session() as sess:

sess.run(init)

train_writer = tf.summary.FileWriter("D://logs//train",sess.graph)

test_writer = tf.summary.FileWriter("D://logs//test",sess.graph)

# training

step = 1

for i in range(2000):

_batch_size = 128

batch_x, batch_y = mnist.train.next_batch(_batch_size)

sess.run(train_op, feed_dict={x:batch_x, y:batch_y, keep_prob:0.5, batch_size:_batch_size})

if (i + 1) % 100 == 0:

#loss = sess.run(cost, feed_dict={x:batch_x, y:batch_y, keep_prob:1.0, batch_size:_batch_size})

#acc = sess.run(accuracy, feed_dict={x:batch_x, y:batch_y, keep_prob:1.0, batch_size:_batch_size})

#print('Iter: %d' % ((i+1) * _batch_size), '| train loss: %.6f' % loss, '| train accuracy: %.6f' % acc)

train_result = sess.run(merged, feed_dict={x:batch_x, y:batch_y, keep_prob:1.0, batch_size:_batch_size})

test_result = sess.run(merged, feed_dict={x:test_x, y:test_y, keep_prob:1.0, batch_size:test_x.shape[0]})

train_writer.add_summary(train_result,i+1)

test_writer.add_summary(test_result,i+1)

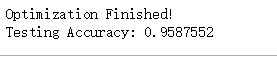

print("Optimization Finished!")

# prediction

print("Testing Accuracy:", sess.run(accuracy, feed_dict={x:test_x, y:test_y, keep_prob:1.0, batch_size:test_x.shape[0]}))

Results

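I'm short on time and this post is only meant as a discussion starter, so the TensorBoard plots are not shown here. See the previous blog post to display the computation graph and the training curves yourself.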