Implementing ResNet with PyTorch

阿新 • Published: 2018-12-09

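Reposted from: https://blog.csdn.net/kongshuchen/article/details/72285709

The problem ResNet solves

Network depth has a large effect on final classification and recognition performance, so the natural idea is to make the network as deep as possible. In practice this does not work: when layers are simply stacked (a plain network), performance gets worse once the network becomes very deep. One cause is that vanishing gradients become more pronounced as depth increases, so the network trains poorly. At the same time, shallower networks can no longer meaningfully improve recognition accuracy. The problem, then, is how to keep deepening the network without running into vanishing gradients.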
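Backpropagation multiplies one local derivative per layer, which is why depth can starve the early layers of gradient. The sketch below is a toy illustration only, assuming a chain of scalar sigmoid units with weight 1 (not the network used in this article); the function names are hypothetical. Because the sigmoid derivative never exceeds 0.25, the plain chain's input gradient shrinks roughly geometrically with depth. For contrast, adding an identity shortcut, h + sigmoid(h), makes every local derivative 1 + sigmoid'(h), which is always above 1.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def plain_input_gradient(depth: int, x: float = 0.5) -> float:
    """d(output)/d(input) for the toy plain chain h_{k+1} = sigmoid(h_k)."""
    grad, h = 1.0, x
    for _ in range(depth):
        s = sigmoid(h)
        grad *= s * (1.0 - s)  # sigmoid'(h) is at most 0.25
        h = s
    return grad


def residual_input_gradient(depth: int, x: float = 0.5) -> float:
    """Same chain with an identity shortcut: h_{k+1} = h_k + sigmoid(h_k)."""
    grad, h = 1.0, x
    for _ in range(depth):
        s = sigmoid(h)
        grad *= 1.0 + s * (1.0 - s)  # the shortcut contributes the constant 1
        h = h + s
    return grad


for depth in (1, 5, 10, 20):
    print(depth, plain_input_gradient(depth), residual_input_gradient(depth))
```

Running it shows the plain gradient collapsing toward zero as depth grows, while the residual version never drops below 1.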

ResNet's solution

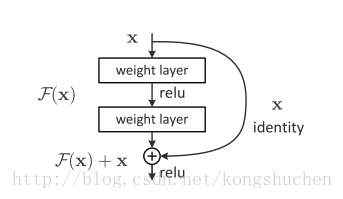

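ResNet introduces a residual network structure. With residual connections the network can be made very deep (reportedly more than 1,000 layers by now) while still classifying very well. The basic structure of a residual block is shown in the figure below.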
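Written as equations, and assuming a block with two weight layers and a ReLU $\sigma$ between them (biases omitted), a residual block with input $\mathbf{x}$ learns only the residual $\mathcal{F}$ and adds the input back:

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}, \qquad \mathcal{F}(\mathbf{x}, \{W_i\}) = W_2\,\sigma(W_1 \mathbf{x})
$$

Differentiating gives

$$
\frac{\partial \mathbf{y}}{\partial \mathbf{x}} = \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I ,
$$

so the gradient flowing back through the block always contains an unattenuated copy of the incoming gradient via the identity term $I$, which is the usual intuition for why shortcuts make very deep networks trainable.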

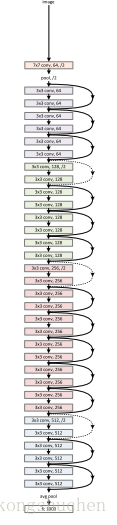

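Rather than simply stacking layers, ResNet adds a shortcut connection between a block's input and its output. This eases the vanishing gradient problem that appears in very deep networks, so the network can be made much deeper. One of ResNet's network structures is shown in the figure below.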
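As a hedged illustration of such a structure, here is a minimal sketch of a small CIFAR-style ResNet in PyTorch. It is not the author's code: the class names `ResidualBlock` and `ResNet`, the three stages of 16/32/64 channels, the use of BatchNorm, and the 1×1 convolution used as a projection shortcut when the shape changes are all assumptions chosen for illustration.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Two 3x3 convs plus a shortcut: out = relu(F(x) + shortcut(x))."""

    def __init__(self, in_channels: int, out_channels: int, stride: int = 1):
        super().__init__()
        self.body = nn.Sequential(  # the residual mapping F(x)
            nn.Conv2d(in_channels, out_channels, 3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels, 3, padding=1, bias=False),
            nn.BatchNorm2d(out_channels),
        )
        # Identity shortcut when shapes match; otherwise a 1x1 conv projection (assumed choice).
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )
        else:
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.relu(self.body(x) + self.shortcut(x))


class ResNet(nn.Module):
    """Stem conv, three stages of residual blocks, global average pool, linear classifier."""

    def __init__(self, blocks_per_stage: int = 2, num_classes: int = 10):
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv2d(3, 16, 3, padding=1, bias=False),
            nn.BatchNorm2d(16),
            nn.ReLU(inplace=True),
        )
        layers, in_ch = [], 16
        for out_ch, stride in ((16, 1), (32, 2), (64, 2)):
            for i in range(blocks_per_stage):
                # Only the first block of each stage changes resolution/channels.
                layers.append(ResidualBlock(in_ch, out_ch, stride if i == 0 else 1))
                in_ch = out_ch
        self.stages = nn.Sequential(*layers)
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(64, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.stages(self.stem(x))
        return self.fc(torch.flatten(self.pool(x), 1))


if __name__ == "__main__":
    model = ResNet()
    logits = model(torch.randn(4, 3, 32, 32))
    print(logits.shape)  # torch.Size([4, 10])
```

The spatial size goes 32 → 16 → 8 across the three stages before global pooling, so the classifier only ever sees a 64-dimensional vector regardless of depth; raising `blocks_per_stage` deepens the network without changing any other code.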

```python
# -*- coding:utf-8 -*-
import torch
import torch.nn as nn
import torchvision.datasets as dsets
import torchvision.transforms as transforms
from torch.autograd import Variable  # deprecated no-op wrapper since PyTorch 0.4; plain tensors work directly

# Image Preprocessing
```


The output is as follows:

```text
Files already downloaded and verified
Epoch [1/80], Iter [100/500] Loss: 1.6537
Epoch [1/80], Iter [200/500] Loss: 1.5279
Epoch [1/80], Iter [300/500] Loss: 1.3174
Epoch [1/80], Iter [400/500] Loss: 1.1979
Epoch [1/80], Iter [500/500] Loss: 1.1882
Epoch [2/80], Iter [100/500] Loss: 1.1613
Epoch [2/80], Iter [200/500] Loss: 1.0430
Epoch [2/80], Iter [300/500] Loss: 0.9967
Epoch [2/80], Iter [400/500] Loss: 1.0057
...
Accuracy of the model on the test images: 85 %
```
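The log reports the current loss every 100 iterations and a final test accuracy. Below is a minimal sketch of a loop that prints lines in the same format, assuming cross-entropy loss and the Adam optimizer (the original hyperparameters are not recoverable here). To stay self-contained and fast it trains a tiny stand-in model on random synthetic data, so its numbers mean nothing; `train` and `evaluate` are hypothetical helper names.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train(model, loader, num_epochs, lr=1e-3, log_every=100):
    """Standard supervised loop; prints 'Epoch [e/E], Iter [i/N] Loss: x' every log_every steps."""
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)  # assumed optimizer
    total_iters = len(loader)
    for epoch in range(num_epochs):
        model.train()
        for i, (images, labels) in enumerate(loader):
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            if (i + 1) % log_every == 0:
                print(f"Epoch [{epoch + 1}/{num_epochs}], Iter [{i + 1}/{total_iters}] "
                      f"Loss: {loss.item():.4f}")


@torch.no_grad()
def evaluate(model, loader):
    """Return accuracy in percent; eval() makes BatchNorm use its running statistics."""
    model.eval()
    correct = total = 0
    for images, labels in loader:
        predicted = model(images).argmax(dim=1)
        correct += (predicted == labels).sum().item()
        total += labels.size(0)
    return 100.0 * correct / total


if __name__ == "__main__":
    torch.manual_seed(0)
    # Synthetic stand-in for a 32x32 RGB, 10-class dataset (NOT real data).
    images = torch.randn(200, 3, 32, 32)
    labels = torch.randint(0, 10, (200,))
    loader = DataLoader(TensorDataset(images, labels), batch_size=20, shuffle=True)
    # Tiny stand-in model; replace it with an actual ResNet.
    model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
    train(model, loader, num_epochs=2, log_every=5)
    acc = evaluate(model, loader)  # same synthetic data, only to exercise the code
    print(f"Accuracy of the model on the test images: {acc:.0f} %")
```

In real use, `evaluate` would receive a separate test-set `DataLoader`; calling `model.eval()` there matters for any network with BatchNorm layers.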
