Pareto Front Feature Selection Based on Artificial Bee Colony Optimization

#Citation

##BibTeX

@article{HANCER2018462,
  title = "Pareto front feature selection based on artificial bee colony optimization",
  journal = "Information Sciences",
  volume = "422",
  pages = "462 - 479",
  year = "2018",
  issn = "0020-0255",
  doi = "https://doi.org/10.1016/j.ins.2017.09.028",
  url = "http://www.sciencedirect.com/science/article/pii/S0020025516312609",
  author = "Emrah Hancer and Bing Xue and Mengjie Zhang and Dervis Karaboga and Bahriye Akay"
}

##Normal

Emrah Hancer, Bing Xue, Mengjie Zhang, Dervis Karaboga, Bahriye Akay, Pareto front feature selection based on artificial bee colony optimization, Information Sciences, Volume 422, 2018, Pages 462-479, ISSN 0020-0255.

#Abstract

two objectives: to maximize the classification performance and to minimize the number of selected features

the curse of dimensionality

a multi-objective problem

multi-objective artificial bee colony algorithm integrated with non-dominated sorting procedure and genetic operators

ABC with binary representation and ABC with continuous representation

12 benchmark datasets

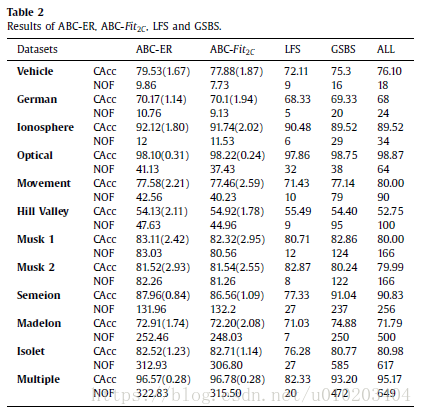

linear forward selection, greedy stepwise backward selection, two single objective ABC algorithms and three well-known multi-objective evolutionary computation algorithms

#Main content

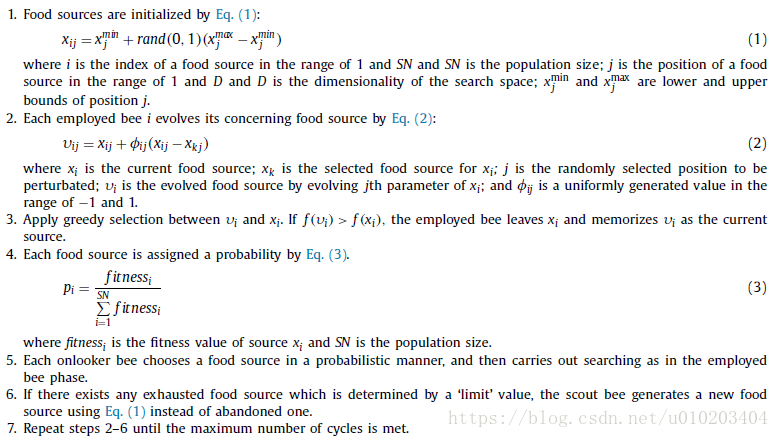

ABC

##Non-EC approaches

wrapper:

- sequential forward selection (SFS)

- sequential backward selection (SBS)

- sequential forward floating selection (SFFS)

- sequential backward floating selection (SBFS)

filter:

- FOCUS — exhaustive search

- Relief

- information-theoretic approaches: MIFS, mRMR and MIFS-U

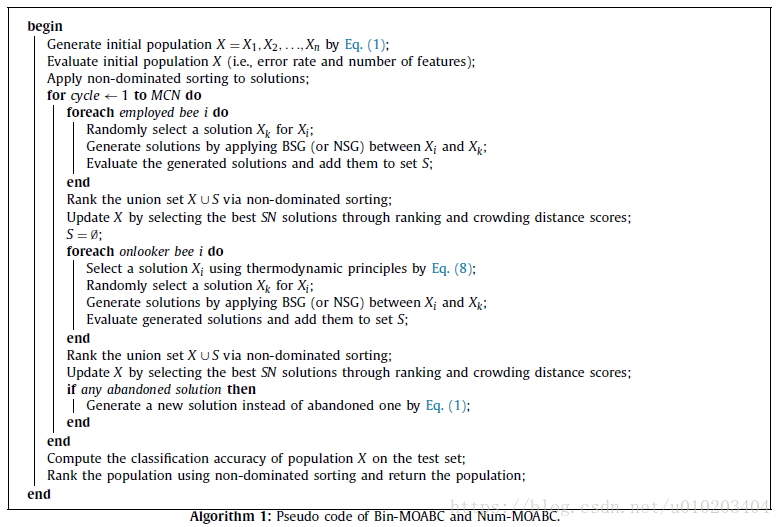

##Proposed algorithm

objectives:

- (1) minimizing the feature subset size

- (2) maximizing the classification accuracy
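With two conflicting objectives, solutions are compared by Pareto dominance rather than by a single score. The sketch below is only an illustration: the function name `dominates` and the tuple layout `(subset size, error rate)` are assumptions of this note, and maximizing accuracy is rewritten as minimizing the error rate so that both objectives are minimized.

```python
from typing import Tuple

# (number of selected features, classification error rate); both are minimized.
Objectives = Tuple[int, float]


def dominates(a: Objectives, b: Objectives) -> bool:
    """Return True if solution a Pareto-dominates solution b."""
    # a must be no worse than b on every objective ...
    no_worse = a[0] <= b[0] and a[1] <= b[1]
    # ... and strictly better on at least one of them.
    strictly_better = a[0] < b[0] or a[1] < b[1]
    return no_worse and strictly_better
```

Two solutions where neither dominates the other (for example, fewer features but a higher error rate) are both kept as trade-offs on the Pareto front.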

multi-objective ABC based wrapper approach

Bin-MOABC and Num-MOABC

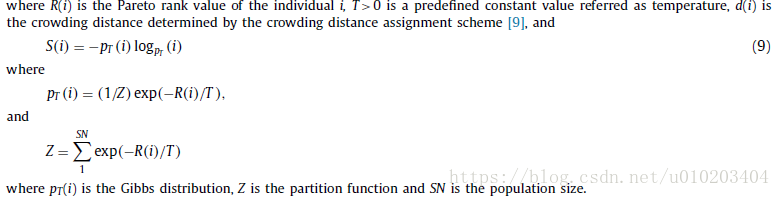

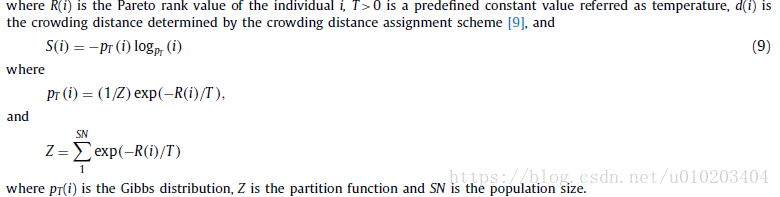

###A calculate probabilities for onlookers:

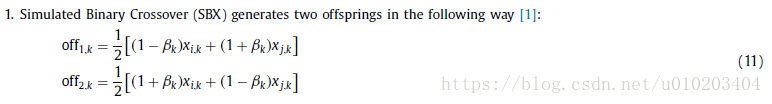
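The exact formula from the paper is in the image above and is not reproduced here. As a rough point of reference, the canonical single-objective ABC assigns each food source a selection probability proportional to its fitness; the multi-objective variant in the paper may well differ. The function name `onlooker_probabilities` is hypothetical.

```python
from typing import List


def onlooker_probabilities(fitness: List[float]) -> List[float]:
    """Fitness-proportional probabilities, as in canonical ABC (assumed form)."""
    if not fitness:
        return []
    total = sum(fitness)
    if total <= 0:
        # Degenerate case: fall back to a uniform distribution.
        return [1.0 / len(fitness)] * len(fitness)
    return [f / total for f in fitness]
```

Onlooker bees would then pick food sources by roulette-wheel sampling over these probabilities, for example with `random.choices(range(len(p)), weights=p)`.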

###B update individuals:

greedy selection

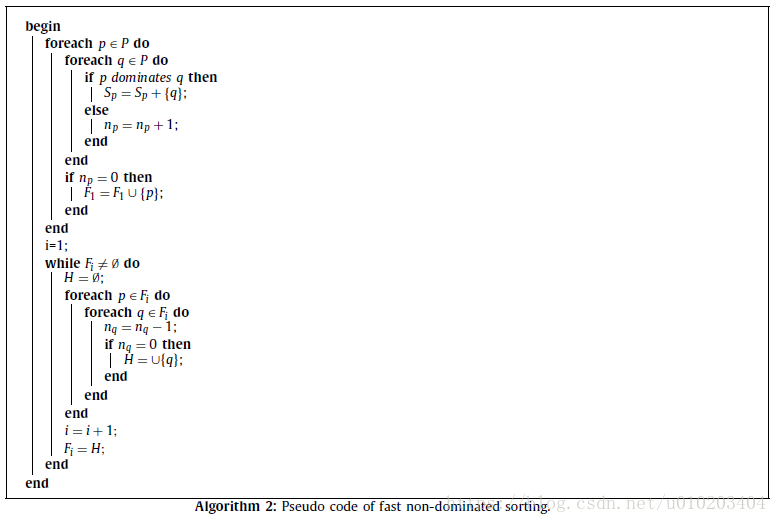

a fast non-dominated sorting scheme

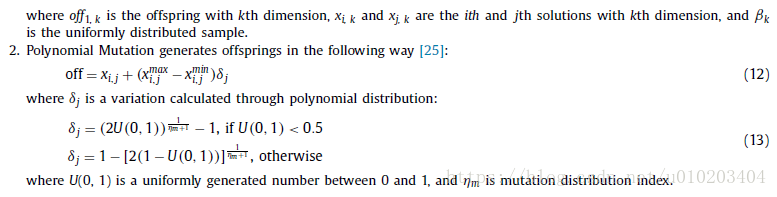
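For reference, a fast non-dominated sorting scheme in the style used by NSGA-II can be sketched as follows. This is a generic version under the assumption that every objective is minimized; it is not taken from the paper, and the names `dominates` and `fast_non_dominated_sort` are chosen for this note.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def fast_non_dominated_sort(points: Sequence[Sequence[float]]) -> List[List[int]]:
    """Group solution indices into fronts; front 0 is the non-dominated set."""
    n = len(points)
    dominated = [[] for _ in range(n)]  # dominated[i]: indices that i dominates
    counts = [0] * n                    # counts[i]: how many solutions dominate i
    fronts: List[List[int]] = [[]]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(points[i], points[j]):
                dominated[i].append(j)
            elif dominates(points[j], points[i]):
                counts[i] += 1
        if counts[i] == 0:
            fronts[0].append(i)
    current = 0
    while fronts[current]:
        next_front = []
        for i in fronts[current]:
            for j in dominated[i]:
                # Removing front member i leaves j with one fewer dominator.
                counts[j] -= 1
                if counts[j] == 0:
                    next_front.append(j)
        fronts.append(next_front)
        current += 1
    return fronts[:-1]  # drop the trailing empty front
```

With points given as `(subset size, error rate)`, the first returned front is the current approximation of the Pareto front.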

###C generate new individuals:

binary solution generator (BSG):

numeric solution generator (NSG):

###D Representation and fitness function:

activation codes in [0, 1]

continuous or discrete values, depending on the representation

a user-specified threshold value: 0.5
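A minimal sketch of how activation codes could be decoded into a feature subset, assuming one code per feature and that a feature is selected when its code is strictly greater than the threshold (whether the comparison is strict is an assumption here). The function name `decode` is hypothetical.

```python
from typing import List, Sequence


def decode(activation_codes: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Return the indices of the features whose activation code exceeds the threshold."""
    return [i for i, code in enumerate(activation_codes) if code > threshold]
```

The same function covers the binary case, since codes of 0 and 1 fall on either side of a 0.5 threshold.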

The classification error rate of a feature subset:

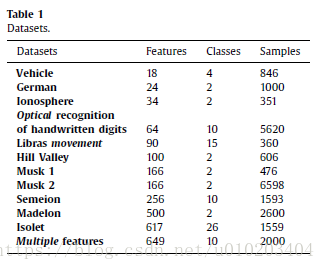
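The formula itself is in the image above. In the usual sense, the error rate is the fraction of misclassified instances, which for a binary problem equals (FP + FN) / (TP + TN + FP + FN). The helper below is a sketch under that assumption; `error_rate` is a name chosen for this note.

```python
from typing import Sequence


def error_rate(y_true: Sequence, y_pred: Sequence) -> float:
    """Fraction of instances whose predicted label differs from the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one instance is required")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)
```

In a wrapper approach, `y_pred` would come from a classifier trained only on the selected features.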

##Experiments

UCI machine learning repository

Weighted sum

ER — the error rate calculated through all available features in the dataset
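These notes do not record the exact weighted-sum formula, so the following is only a guess at a common form from the feature selection literature: the subset-size ratio and the error rate normalized by ER are combined with a weight `alpha`. The function name, the parameter names, and the default `alpha = 0.2` are all assumptions of this sketch.

```python
def weighted_sum_fitness(error: float, n_selected: int, n_total: int,
                         er_all: float, alpha: float = 0.2) -> float:
    """Single-objective fitness to minimize (assumed form, not from the paper).

    error      : error rate of the candidate feature subset
    n_selected : number of features in the subset
    n_total    : number of all available features
    er_all     : ER, the error rate obtained with all available features
    alpha      : weight on the subset-size term (hypothetical default)
    """
    if n_total <= 0 or er_all <= 0:
        raise ValueError("n_total and er_all must be positive")
    size_term = n_selected / n_total
    error_term = error / er_all
    return alpha * size_term + (1 - alpha) * error_term
```

For example, a subset of 5 out of 20 features with error 0.1, against ER = 0.2, scores 0.2 * 0.25 + 0.8 * 0.5 = 0.45 under these assumptions.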

NSGAII, NSSABC and multi-objective PSO (MOPSO)