Identifying Spam SMS Messages with a Recurrent Neural Network

阿新 • Published: 2018-12-24

1. Preparing the test data

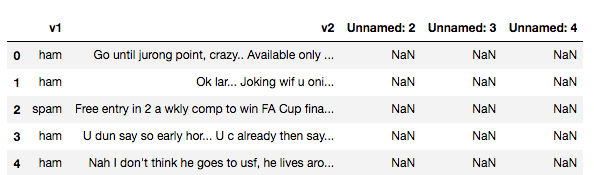

First, load the locally prepared spam file.

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder
from keras.models import Model
from keras.layers import LSTM, Activation, Dense, Dropout, Input, Embedding
from keras.optimizers import RMSprop
from keras.preprocessing.text import Tokenizer
from keras.preprocessing import sequence
from keras.utils import to_categorical
from keras.callbacks import EarlyStopping
%matplotlib inline
df = pd.read_csv('spam.csv', delimiter=',', encoding='latin-1')
df.head()

In the printed result, the columns Unnamed: 2 through Unnamed: 4 consist mostly of empty values, so we need to drop these columns.

df.drop(['Unnamed: 2', 'Unnamed: 3', 'Unnamed: 4'], axis=1, inplace=True)

Print the DataFrame again to confirm that the columns are gone.

We can use seaborn to look at how the labels in the dataset are distributed.

sns.countplot(x=df.v1)

plt.xlabel('Label')

plt.title('Number of ham and spam messages')

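Next we store the v2 values in X and the v1 values in Y. Because every v1 value is either ham or spam, we use LabelEncoder to convert them into an integer array of 0s and 1s.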

X = df.v2

Y = df.v1

le = LabelEncoder()

Y = le.fit_transform(Y)

Y = Y.reshape(-1,1)
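To make the encoding step concrete, here is a minimal pure-Python sketch of the mapping that LabelEncoder produces: the distinct classes are sorted, and each label is replaced by its position in that order, so ham becomes 0 and spam becomes 1. The helper name `encode_labels` and the sample labels are made up for illustration and are not part of the original code.

```python
def encode_labels(labels):
    """Map each distinct label to its index in sorted order (hypothetical helper)."""
    classes = sorted(set(labels))
    index = {label: i for i, label in enumerate(classes)}
    return classes, [index[label] for label in labels]

# Sample labels invented for this illustration.
classes, encoded = encode_labels(["ham", "spam", "ham", "ham", "spam"])
print(classes)   # ['ham', 'spam']
print(encoded)   # [0, 1, 0, 0, 1]

# Rough equivalent of Y.reshape(-1, 1): one label per row.
column = [[y] for y in encoded]
print(column[:2])  # [[0], [1]]
```

The column shape matters because the model ends in a single sigmoid unit, so each target is one value per message.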

Split the dataset into training data and test data.

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.15)
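Note that the split is random, so the numbers reported later will vary slightly from run to run unless a seed is fixed (in scikit-learn, via the `random_state` argument of `train_test_split`). The sketch below shows the idea in plain Python: shuffle the row indices with a seeded generator, then cut off the test share. The helper `split_indices` and the sample size of 100 are assumptions for illustration only.

```python
import math
import random

def split_indices(n_samples, test_size=0.15, seed=0):
    """Shuffle row indices and split them into train/test parts (illustrative helper)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)       # seeded, so the split is repeatable
    n_test = math.ceil(test_size * n_samples)  # round the test share up
    return indices[n_test:], indices[:n_test]

train_idx, test_idx = split_indices(100, test_size=0.15, seed=42)
print(len(train_idx), len(test_idx))  # 85 15
```

Calling the helper twice with the same seed returns the same split, which is what makes results comparable between runs.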

2. Building the test model

First we fix the maximum vocabulary size (1000) and the maximum length of each message (150), then use Tokenizer to split the messages into words.

max_words = 1000
max_len = 150
tok = Tokenizer(num_words=max_words)
tok.fit_on_texts(X_train)
sequences = tok.texts_to_sequences(X_train)
sequences_matrix = sequence.pad_sequences(sequences, maxlen=max_len)
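What these two steps do to a message can be sketched without Keras. The code below is a simplified stand-in, not the Keras implementation: it ranks words by frequency (the most frequent word gets index 1), keeps only words whose index is below `num_words`, and left-pads each sequence with zeros, which is the default behaviour of `pad_sequences`. The helper names, the regex used for splitting, and the three sample messages are all assumptions made for illustration.

```python
import re
from collections import Counter

WORD = re.compile(r"[a-z0-9']+")  # simplified word splitter, not Keras' filter list

def fit_word_index(texts):
    """Rank words by frequency; the most frequent word gets index 1."""
    counts = Counter(w for t in texts for w in WORD.findall(t.lower()))
    return {word: i + 1 for i, (word, _) in enumerate(counts.most_common())}

def to_sequence(text, word_index, num_words):
    """Replace words by their index, dropping unknown or too-rare words."""
    words = WORD.findall(text.lower())
    return [word_index[w] for w in words
            if w in word_index and word_index[w] < num_words]

def pad(seq, maxlen):
    """Left-pad with zeros, or keep only the last maxlen entries."""
    seq = seq[-maxlen:]
    return [0] * (maxlen - len(seq)) + seq

texts = ["free prize call now", "call me now", "see you now"]  # invented messages
word_index = fit_word_index(texts)
seq = to_sequence("call me now please", word_index, num_words=1000)
print(seq)          # [2, 5, 1]
print(pad(seq, 6))  # [0, 0, 0, 2, 5, 1]
```

The word "please" never appeared in the fitted texts, so it is silently dropped. The same thing happens to unseen words in the test messages later on.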

Define the network model

def RNN():
    inputs = Input(shape=[max_len])
    layer = Embedding(max_words, 50, input_length=max_len)(inputs)
    layer = LSTM(64)(layer)
    layer = Dense(256)(layer)
    layer = Activation('relu')(layer)
    layer = Dropout(0.5)(layer)
    layer = Dense(1)(layer)
    layer = Activation('sigmoid')(layer)
    model = Model(inputs=inputs, outputs=layer)
    return model

Build the network

model = RNN()

model.summary()

We can take a look at the network structure

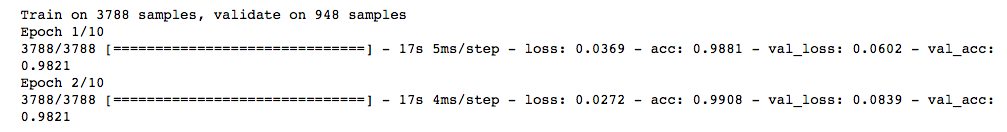
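As a cross-check on the summary, the number of trainable parameters per layer can be worked out by hand from the sizes used in RNN(). The sketch below uses the standard formulas for Embedding, LSTM, and Dense layers. The variable names are chosen for illustration, and the results are meant to be compared against the model.summary() output rather than taken as a transcription of it.

```python
max_words, embed_dim, lstm_units, dense_units = 1000, 50, 64, 256

# Embedding: one 50-dimensional vector per vocabulary entry.
embedding = max_words * embed_dim
# LSTM: 4 gates, each with input weights, recurrent weights, and a bias.
lstm = 4 * ((embed_dim + lstm_units) * lstm_units + lstm_units)
# Dense layers: weights plus one bias per output unit.
dense_hidden = lstm_units * dense_units + dense_units
dense_out = dense_units * 1 + 1

print(embedding, lstm, dense_hidden, dense_out)     # 50000 29440 16640 257
print(embedding + lstm + dense_hidden + dense_out)  # 96337
```

Activation and Dropout layers add no parameters, so they do not appear in the sum.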

3. Training the model

model.compile(loss='binary_crossentropy', optimizer=RMSprop(), metrics=['accuracy'])

model.fit(sequences_matrix, Y_train, batch_size=128, epochs=10, validation_split=0.2, callbacks=[EarlyStopping(monitor='val_loss', min_delta=0.0001)])

As the log shows, because we configured EarlyStopping, training stopped once val_loss began to rise, which happened after 2 epochs.
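The stopping rule can be sketched in a few lines. This is a simplified illustration of the idea behind EarlyStopping with its default patience of 0, not the Keras source: an epoch counts as an improvement only if val_loss drops by more than min_delta, and the first epoch that fails to improve ends training. The function name and the loss values are invented for the example.

```python
def early_stop_epoch(val_losses, min_delta=0.0001, patience=0):
    """Return the 1-based epoch at which training would stop (simplified sketch)."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:   # improved by more than min_delta
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses)            # never triggered: all epochs ran

# Made-up validation losses, not taken from the run above.
print(early_stop_epoch([0.080, 0.055, 0.061, 0.050]))  # 3
```

In this made-up run the fourth epoch would have been better than any earlier one, which is why a larger patience value is sometimes preferred when validation loss is noisy.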

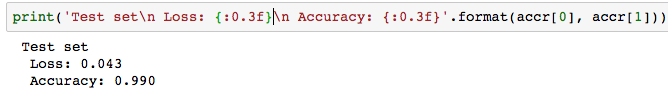

We can check the model's accuracy on the test set.

test_sequences = tok.texts_to_sequences(X_test)

test_sequences = sequence.pad_sequences(test_sequences, maxlen=max_len)

accr = model.evaluate(test_sequences, Y_test)

print('Test set\n Loss: {:0.3f}\n Accuracy: {:0.3f}'.format(accr[0], accr[1]))

On the test data the loss is 0.043 and the accuracy reaches 99.0%.

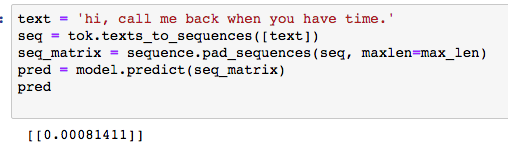
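For readers who want to see what the two numbers returned by model.evaluate mean, here is a minimal pure-Python sketch of binary cross-entropy and of accuracy at a 0.5 threshold. The function names, the clipping constant, and the four sample predictions are assumptions for illustration. They are not the values from the run above.

```python
import math

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def accuracy(y_true, y_prob, threshold=0.5):
    """Share of predictions that fall on the correct side of the threshold."""
    hits = sum((p > threshold) == bool(y) for y, p in zip(y_true, y_prob))
    return hits / len(y_true)

# Invented labels and sigmoid outputs for illustration only.
y_true = [0, 0, 1, 1]
y_prob = [0.02, 0.10, 0.95, 0.40]
print(round(binary_crossentropy(y_true, y_prob), 3))
print(accuracy(y_true, y_prob))  # 0.75
```

The last sample is a spam message scored at 0.40: it counts as a miss for accuracy and also contributes most of the loss, which shows how the two metrics react to the same mistake.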

Finally, we can write a message of our own to test: hi, call me back when you have time.

text = 'hi, call me back when you have time.'

seq = tok.texts_to_sequences([text])

seq_matrix = sequence.pad_sequences(seq, maxlen=max_len)

pred = model.predict(seq_matrix)

As the result shows, the probability that this message is spam is only 0.08%.
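The code above stores the prediction in pred without turning it into a label. One way to do that is sketched below, assuming a 0.5 decision threshold and the 0 = ham, 1 = spam encoding from earlier. The helper `decode_prediction` is hypothetical, and the threshold is a common default rather than something the original code specifies.

```python
def decode_prediction(prob_spam, threshold=0.5):
    """Turn a sigmoid output into a label and a readable percentage."""
    label = "spam" if prob_spam > threshold else "ham"
    return label, f"{prob_spam:.2%}"

# 0.0008 corresponds to the 0.08% reported above. model.predict returns a
# 2-D array, so in the real code the value would be read as pred[0][0].
print(decode_prediction(0.0008))  # ('ham', '0.08%')
```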

At this point, the spam SMS detection is fully implemented.

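All code and test data have been uploaded to git, where they can be viewed directly. If you have any questions, please leave a comment below the blog post or open an Issue. Thanks!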