LightGBM parameter analysis and hyperparameter search for regression

Reference: the LightGBM parameter documentation on GitHub:

https://github.com/Microsoft/LightGBM/blob/master/docs/Parameters.rst

The code comes from another post of mine:

https://blog.csdn.net/ssswill/article/details/85217702

Searching for hyperparameters with grid search:

import numpy as np
import pandas as pd
import lightgbm as lgb
from sklearn.model_selection import (cross_val_score, train_test_split,
                                     GridSearchCV, RandomizedSearchCV, KFold)
from sklearn.metrics import mean_squared_error

# train_X, train_y and test_X are assumed to be pandas objects prepared
# beforehand (see the post linked above).
# Note: subsample only takes effect when subsample_freq (bagging_freq) > 0.
lgb_params = {"objective": "regression", "metric": "rmse",
              "max_depth": 7, "min_child_samples": 20,
              "reg_alpha": 1, "reg_lambda": 1,
              "num_leaves": 64, "learning_rate": 0.01,
              "subsample": 0.8, "colsample_bytree": 0.8,
              "verbosity": -1}

FOLDs = KFold(n_splits=5, shuffle=True, random_state=42)
oof_lgb = np.zeros(len(train_X))          # out-of-fold predictions
predictions_lgb = np.zeros(len(test_X))   # test predictions averaged over folds
features_lgb = list(train_X.columns)
feature_importance_df_lgb = pd.DataFrame()

for fold_, (trn_idx, val_idx) in enumerate(FOLDs.split(train_X)):
    trn_data = lgb.Dataset(train_X.iloc[trn_idx], label=train_y.iloc[trn_idx])
    val_data = lgb.Dataset(train_X.iloc[val_idx], label=train_y.iloc[val_idx],
                           reference=trn_data)

    print("-" * 20 + "LGB Fold:" + str(fold_) + "-" * 20)
    num_round = 10000
    # LightGBM 4.0 removed the verbose_eval and early_stopping_rounds arguments
    # of lgb.train; the equivalent callbacks are used instead.
    clf = lgb.train(lgb_params, trn_data, num_round,
                    valid_sets=[trn_data, val_data],
                    callbacks=[lgb.early_stopping(50), lgb.log_evaluation(1000)])
    oof_lgb[val_idx] = clf.predict(train_X.iloc[val_idx], num_iteration=clf.best_iteration)

    # Collect the feature importances of this fold.
    fold_importance_df_lgb = pd.DataFrame()
    fold_importance_df_lgb["feature"] = features_lgb
    fold_importance_df_lgb["importance"] = clf.feature_importance()
    fold_importance_df_lgb["fold"] = fold_ + 1
    feature_importance_df_lgb = pd.concat([feature_importance_df_lgb, fold_importance_df_lgb], axis=0)

    predictions_lgb += clf.predict(test_X, num_iteration=clf.best_iteration) / FOLDs.n_splits

# RMSE of the out-of-fold predictions over the whole training set.
print("Best RMSE: ", np.sqrt(mean_squared_error(train_y, oof_lgb)))

Output:

Let's get started by taking the code apart piece by piece.

First, the hyperparameter dictionary:

hyper_space = {'n_estimators': [1000, 1500, 2000, 2500],
               'max_depth': [4, 5, 8, -1],
               'num_leaves': [15, 31, 63, 127],
               'subsample': [0.6, 0.7, 0.8, 1.0],
               'colsample_bytree': [0.6, 0.7, 0.8, 1.0],
               'learning_rate': [0.01, 0.02, 0.03]}
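Before running a grid like this, it helps to know how much work it implies. The sketch below simply counts the combinations in the dictionary above and multiplies by the number of folds; the value cv_folds = 4 is taken from the GridSearchCV call used in this post, and the variable names are my own.

```python
from math import prod

# Grid copied from the post.
hyper_space = {'n_estimators': [1000, 1500, 2000, 2500],
               'max_depth': [4, 5, 8, -1],
               'num_leaves': [15, 31, 63, 127],
               'subsample': [0.6, 0.7, 0.8, 1.0],
               'colsample_bytree': [0.6, 0.7, 0.8, 1.0],
               'learning_rate': [0.01, 0.02, 0.03]}

# A grid search tries every combination, so the sizes multiply.
n_candidates = prod(len(values) for values in hyper_space.values())

cv_folds = 4  # cv=4 in the GridSearchCV call used in this post
n_fits = n_candidates * cv_folds

print(n_candidates)  # 3072
print(n_fits)        # 12288
```

With up to 2500 trees per fit, more than twelve thousand fits is a long run, which is one reason to consider a smaller grid or RandomizedSearchCV.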

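“n_estimators”:

According to the documentation, n_estimators is an alias of num_iterations and defaults to 100. It is the number of boosting iterations, in other words the number of trees.

The documentation adds a note: for multi-class classification, LightGBM internally builds num_class * num_iterations trees.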

“max_depth”: the maximum depth of a tree.

The default, -1, means no limit (as does any value <= 0).

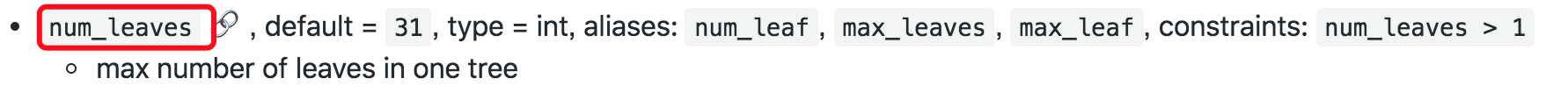

“num_leaves”: the maximum number of leaves in one tree; the default is 31.

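“subsample”:

subsample is an alias of bagging_fraction. The documentation lists its benefits (faster training, less overfitting) and says it is similar to feature_fraction, except that it samples rows instead of features. Note that it only takes effect when bagging_freq (subsample_freq in the scikit-learn API) is set to a nonzero value. So what is feature_fraction?

It turns out to be very similar to the feature subsampling used in random forests (RF): each tree is built on a randomly selected subset of the features.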

Figure from: https://www.cnblogs.com/harvey888/p/6512312.html

Next, ‘colsample_bytree’:

This is simply an alias of feature_fraction, the parameter described above.

Moving on: ‘learning_rate’

This one needs little explanation: it is the learning rate (shrinkage rate), which defaults to 0.1.

Now look at these two lines of code:

est = lgb.LGBMRegressor(n_jobs=-1, random_state=2018)

gs = GridSearchCV(est, hyper_space, scoring='r2', cv=4, verbose=1)

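n_jobs: the number of parallel jobs (the code above passes n_jobs=-1). Parallelism matters a great deal for ensemble methods, especially bagging, where the trees are independent and can be trained at the same time. In boosting each iteration depends on the previous one, so the trees themselves are built in sequence; LightGBM instead uses its threads to parallelize the work inside each tree. 1 means no parallelism, n means n parallel jobs, and -1 means using all available CPU cores.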

“random_state”: the random seed. Fixing it makes the row and feature subsampling reproducible.

“verbose”:

This controls how verbose the output is. It matters little; the default is fine.

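Log output along these lines:

What the message means:

Because LightGBM grows trees leaf-wise, the complexity of a tree is tuned through num_leaves rather than max_depth.

As a rough conversion, a tree of depth max_depth can hold at most 2^(max_depth) leaves. num_leaves should be set below 2^(max_depth); otherwise the model may overfit.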
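The rule of thumb above can be applied to the search grid before any training happens. This is a small sketch with helper names of my own choosing (leaf_cap, is_sensible); it keeps only the (max_depth, num_leaves) pairs from the post's grid where num_leaves stays below 2^(max_depth), treating -1 as unlimited depth.

```python
# Values taken from the hyperparameter dictionary in the post.
max_depths = [4, 5, 8, -1]
num_leaves_grid = [15, 31, 63, 127]

def leaf_cap(max_depth):
    """Most leaves a tree of this depth can hold; None means no depth limit."""
    return None if max_depth <= 0 else 2 ** max_depth

def is_sensible(max_depth, num_leaves):
    """True if num_leaves stays below 2^max_depth (or depth is unlimited)."""
    cap = leaf_cap(max_depth)
    return cap is None or num_leaves < cap

sensible = [(d, n) for d in max_depths for n in num_leaves_grid
            if is_sensible(d, n)]
rejected = [(d, n) for d in max_depths for n in num_leaves_grid
            if not is_sensible(d, n)]

print(len(sensible), len(rejected))  # 11 5
print(rejected)  # [(4, 31), (4, 63), (4, 127), (5, 63), (5, 127)]
```

GridSearchCV also accepts a list of dictionaries as its grid, so one way to use this is to build a separate sub-grid per max_depth containing only the num_leaves values that pass the check.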

What follows is a similar explanation, although it is not about LightGBM's parameters.

For the second piece of code (training, validation and plotting), see the next post; this one is already long enough.