Training alternative Dlib Shape Predictor models using Python

1. Understanding the Training Options

The training process of a model is governed by a set of parameters. These parameters affect the size, accuracy and speed of the generated model.

The most important parameters are:

- Tree Depth: specifies the depth of the trees used in each cascade. This parameter represents the “capacity” of the model. A value of 4 tends to give the best accuracy, while a value of 3 is a good tradeoff between accuracy and model size.

- Nu: the regularization parameter. It determines how well the model generalizes, that is, whether it learns patterns or memorizes the training data. Values close to 1 make the model fit the training data more closely, raising the chance of overfitting. A nu value of 0.1 instead pushes the model toward learning patterns, which reduces the risk of overfitting. The number of training samples can be a problem here: with lower nu values the model needs a lot (thousands) of training samples in order to perform well.

- Cascade Depth: the number of cascades used to train the model. This parameter affects both the size and the accuracy of the model. A good value is about 10-12, while a value of 15 offers a good balance between maximum accuracy and a reasonable model size.

- Feature Pool Size: the number of pixels used to generate the features for the random trees at each cascade. A larger number of pixels makes the algorithm more robust and accurate, but slower to execute. A value of 400 achieves high accuracy with a good runtime speed. If speed is not a concern, setting the value to 800 (or even 1000) will lead to higher precision. Interestingly, with a value between 100 and 150 it is still possible to obtain quite good accuracy with an impressive runtime speed. This last range is particularly suitable for mobile and embedded applications.

- Num Test Splits: the number of split features sampled at each node. This parameter is responsible for selecting the best features at each cascade during training, and it affects both the training speed and the model accuracy. The default value is 20. This parameter is very useful when, for example, we want to train a model with good accuracy while keeping its size small: increasing num test splits to 100 or even 300 improves the model accuracy without increasing its size, at the cost of a longer training time.

- Oversampling Amount: the number of random deformations applied to each training sample. Applying random deformations to the training images is a simple technique that effectively increases the size of the training dataset. Increasing the value to 20 or even 40 is only required for small datasets, and it will increase the training time considerably (so be careful). Recent releases of the Dlib library add a new training parameter, the oversampling translation jitter (oversampling_translation_jitter in the Python API), which applies a small translation to the given bounding boxes in order to make the model more robust against misplaced face regions.
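
As a rough sketch, the parameters above map onto attributes of dlib.shape_predictor_training_options in the Python API. The helper build_options() and the dictionary TRAINING_VALUES are hypothetical names introduced here for illustration, and the values are examples rather than recommendations for any particular dataset.

```python
# Illustrative values only; tune them for your own dataset and size budget.
TRAINING_VALUES = {
    "tree_depth": 4,            # model capacity
    "nu": 0.1,                  # regularization
    "cascade_depth": 15,        # number of cascades
    "feature_pool_size": 400,   # pixels sampled per cascade
    "num_test_splits": 100,     # candidate splits sampled per node
    "oversampling_amount": 5,   # assumed value for a large dataset
    "oversampling_translation_jitter": 0.1,  # only in recent dlib releases
}


def build_options(values):
    """Return dlib training options with the given values applied.

    build_options is a hypothetical helper, not part of dlib.
    """
    import dlib  # lazy import: requires the dlib Python bindings

    options = dlib.shape_predictor_training_options()
    for name, value in values.items():
        # Fail loudly on misspelled or unsupported option names.
        if not hasattr(options, name):
            raise AttributeError(f"unknown training option: {name}")
        setattr(options, name, value)
    return options
```

Calling build_options(TRAINING_VALUES) should return an options object that can be passed to the trainer. On an older Dlib release that lacks one of the attributes, the helper raises an error instead of silently ignoring the setting.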

2. Getting the Data

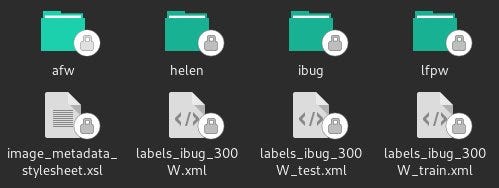

In order to replicate the Dlib results, we have to utilize the images and annotations inside the iBug 300W dataset (available here). The dataset consists of the combination of four major datasets: afw, helen, ibug, and lfpw.

The annotation files that we need to train and test the models are labels_ibug_300W_train.xml and labels_ibug_300W_test.xml.

Before getting into the code, remember to put the scripts in the same directory as the dataset!

3. Training the Models

Imagine we are interested in training a model that is able to localize only the landmarks of the left and right eye. To do this, we have to edit the iBug training annotations so that they contain only the relevant points.

This can be done by calling the slice_xml() function, which creates a new XML file with only the selected landmarks.
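
The body of slice_xml() is not shown here, so the following is only one possible way to write it. It assumes a signature of (in_path, out_path, parts), and it assumes that the part names in the annotation file are the 0-based landmark indices of the 68-point scheme, where the two eyes occupy indices 36 to 47.

```python
import xml.etree.ElementTree as ET

# 0-based indices of the eye landmarks in the 68-point annotation scheme.
EYE_LANDMARKS = set(range(36, 48))


def slice_xml(in_path, out_path, parts):
    """Write a copy of the annotation file that keeps only the given parts.

    Sketch with an assumed signature; part names are parsed as integers.
    """
    keep = set(parts)
    tree = ET.parse(in_path)
    for box in list(tree.getroot().iter("box")):
        # Copy the child list first, since we remove parts while looping.
        for part in list(box.findall("part")):
            if int(part.get("name")) not in keep:
                box.remove(part)
    tree.write(out_path, encoding="utf-8", xml_declaration=True)
```

For example, slice_xml("labels_ibug_300W_train.xml", "eyes_train.xml", EYE_LANDMARKS) would produce an eyes-only training file; the output file name is just a placeholder.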

After setting the training parameters, we can finally train our eye-model.
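
A minimal sketch of the training step, assuming the sliced annotations are already on disk. dlib.train_shape_predictor() is the library call; the wrapper train_model() and the file names in the usage note are hypothetical.

```python
import multiprocessing


def train_model(xml_path, model_path, options):
    """Train a shape predictor from xml_path and save it to model_path.

    options is expected to be a dlib.shape_predictor_training_options
    instance configured with the parameters from section 1.
    """
    import dlib  # lazy import: only needed when training actually runs

    options.num_threads = multiprocessing.cpu_count()  # use every core
    options.be_verbose = True  # print progress while training
    dlib.train_shape_predictor(xml_path, model_path, options)
    return model_path
```

A call such as train_model("eyes_train.xml", "eye_predictor.dat", options) would then write the trained model to eye_predictor.dat. Training on the full dataset can take a long time, depending on the chosen parameters.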

When the training is done, we can easily evaluate the model accuracy by invoking the measure_model_error() function.
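
The evaluation helper could look like the sketch below. It wraps dlib.test_shape_predictor(), which returns the mean average error of a saved model on an annotation file; the argument order of measure_model_error() is an assumption, since its definition is not shown here.

```python
def measure_model_error(model_path, xml_path):
    """Print and return the mean average error of a model on xml_path.

    Sketch with an assumed signature; lower values mean better accuracy.
    """
    import dlib  # lazy import: requires the dlib Python bindings

    # dlib expects the annotation file first, then the model file.
    error = dlib.test_shape_predictor(xml_path, model_path)
    print(f"Error of the model {model_path} on {xml_path}: {error}")
    return error
```

Running it on both the training and the testing annotations is a quick check for overfitting: a training error far below the testing error suggests the model has memorized the training data.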