Why building your own Deep Learning computer is 10x cheaper than AWS

If you’ve used, or are considering, AWS/Azure/GCloud for Machine Learning, you know how crazy expensive GPU time is. And turning machines on and off is a major disruption to your workflow. There’s a better way. Just build your own Deep Learning Computer. It’s 10x cheaper and also easier to use. Let’s take a closer look below.

This is part 1 of 3 in the Deep Learning Computer Series. Part 2 is ‘How to build one’ and Part 3 is ‘How to benchmark performance’. Follow me to get the new articles. Leave questions and thoughts in comments below!

Building an expandable Deep Learning Computer w/ 1 top-end GPU only costs $3k

The machine I built costs $3k and has the parts shown below. There’s one 1080 Ti GPU to start (you can just as easily use the new 2080 Ti for Machine Learning at $500 more; just be careful to get one with a blower fan design), a 12-core CPU, 64GB RAM, and a 1TB M.2 SSD. You can easily add three more GPUs, for a total of four.

Building is 10x cheaper than renting on AWS / EC2 and is just as performant

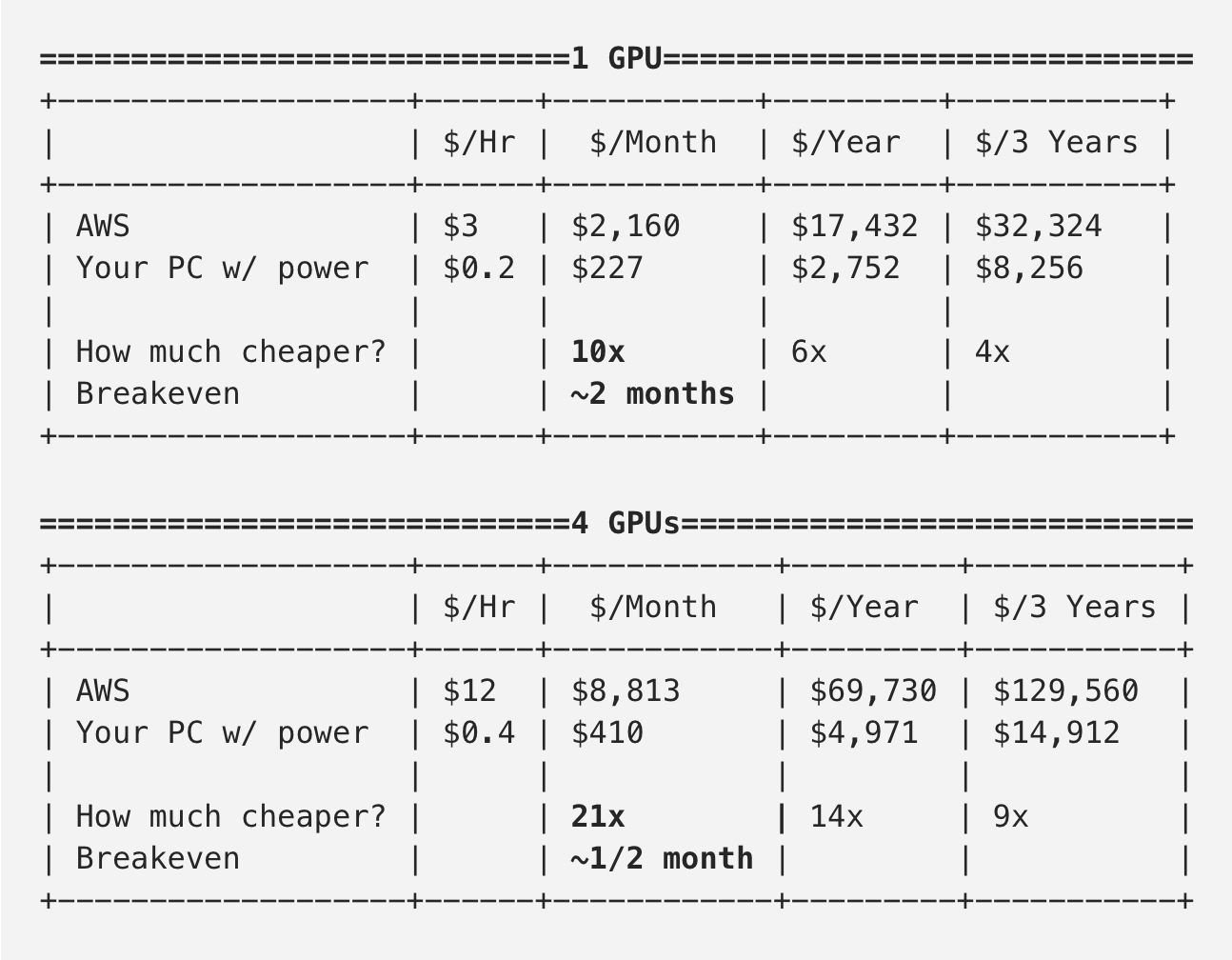

Assuming your 1 GPU machine depreciates to $0 in 3 years (very conservative), the chart below shows that over 1 year of use it is 10x cheaper than renting, including the cost of electricity. Amazon discounts its pricing for multi-year contracts, which narrows the advantage to 4–6x. Still, if you are shelling out tens of thousands of dollars for a multi-year contract, you should seriously consider building for 4–6x less money. The math is even more favorable for the 4 GPU version: 21x cheaper within 1 year!
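The 10x figure can be roughly reproduced with a few lines of Python. This is a sketch under stated assumptions, not the exact inputs behind the chart: the machine is busy 24/7 for a year, cloud GPU time costs $3/hour, the $3k build depreciates evenly to $0 over 3 years, and the machine draws 1 kW at $0.20/kWh (the power figures assumed in the break-even section of this post).

```python
HOURS_PER_YEAR = 365 * 24  # assumption: the machine is busy 24/7


def one_year_costs(cloud_rate=3.00, build_cost=3000.0, lifetime_years=3,
                   power_kw=1.0, price_per_kwh=0.20, hours=HOURS_PER_YEAR):
    """Return (cloud_cost, own_cost) in dollars for one year of use."""
    cloud = cloud_rate * hours                      # on-demand GPU rental
    depreciation = build_cost / lifetime_years      # straight-line to $0
    electricity = power_kw * hours * price_per_kwh  # kW * hours = kWh
    return cloud, depreciation + electricity


cloud, own = one_year_costs()
print(f"cloud ${cloud:,.0f} vs. own ${own:,.0f} -> {cloud / own:.1f}x cheaper")
# -> cloud $26,280 vs. own $2,752 -> 9.5x cheaper
```

Passing `build_cost=3500` for the 2080 Ti version (the extra $500) gives about 9.0x under the same assumptions.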

There are some drawbacks: downloads to your machine are slower because it’s not on the backbone, you need a static IP to access it away from your house, and you may want to refresh the GPUs in a couple of years. But the cost savings are so ridiculous that it’s still worth it.

If you’re thinking of using the 2080 Ti for your Deep Learning Computer, it’s $500 more and still 4-9x cheaper for a 1 GPU machine.

Cloud GPU machines are expensive at $3 / hour and you have to pay even when you’re not using the machine.

The reason for this dramatic cost discrepancy is that Amazon Web Services EC2 (or Google Cloud or Microsoft Azure) is expensive for GPUs at $3 / hour or about $2100 / month. At Stanford, I used it for my Semantic Segmentation project and my bill was $1,000. I’ve also tried Google Cloud for a project and my bill was $1,800. This is with me carefully monitoring usage and turning off machines when not in use — major pain in the butt!

Even when you shut your machine down, you still have to pay for its storage at $0.10 per GB per month, so I got charged a couple hundred dollars / month just to keep my data around.
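At $0.10 per GB per month, "a couple hundred dollars" corresponds to roughly 2 TB of stored data. A minimal sketch; the 2,000 GB figure is a hypothetical dataset size, not the author’s actual usage:

```python
STORAGE_RATE = 0.10  # dollars per GB per month, as quoted above


def storage_cost(gb, months=1):
    """Dollars paid to keep `gb` of disk around for a stopped instance."""
    return gb * STORAGE_RATE * months


print(f"${storage_cost(2000):,.0f} per month")     # -> $200 per month
print(f"${storage_cost(2000, 12):,.0f} per year")  # -> $2,400 per year
```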

You’ll break even in just a few months

For the 1 GPU $3k machine you build (power draw: 1 kW), you will break even in just 2 months if you use it regularly. And you still own your computer, which hasn’t depreciated much in 2 months, so building should be a no-brainer. Again, the math is even more favorable for the 4 GPU version (power draw: 2 kW): you’ll break even in less than 1 month. (This assumes electricity costs $0.20 / kWh.)
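The break-even point is the build cost divided by what you save each month: the cloud rent you no longer pay, minus your electricity bill. A sketch assuming 24/7 use and treating the 1 kW and 2 kW figures as average power draw. The $4.5k price for the 4 GPU build comes from the FAQ below; the $12/hour rate for a 4 GPU cloud instance is a hypothetical figure (4x the single-GPU rate), not a quoted price.

```python
HOURS_PER_MONTH = 365 * 24 / 12  # 730 hours, assumes 24/7 use
PRICE_PER_KWH = 0.20             # dollars, as assumed above


def break_even_months(build_cost, cloud_rate, power_kw):
    """Months until the avoided cloud rent pays for the hardware."""
    # Each hour you skip the cloud rate but pay for your own electricity.
    saved_per_hour = cloud_rate - power_kw * PRICE_PER_KWH
    return build_cost / (saved_per_hour * HOURS_PER_MONTH)


print(f"1 GPU: {break_even_months(3000, 3.00, 1.0):.1f} months")   # -> 1 GPU: 1.5 months
print(f"4 GPUs: {break_even_months(4500, 12.00, 2.0):.1f} months")  # -> 4 GPUs: 0.5 months
```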

Your GPU Performance is on par with AWS

Your $700 Nvidia 1080 Ti performs at 90% of the speed of the cloud Nvidia V100 GPU (which uses next-gen Volta tech). This is because cloud GPUs suffer from slow IO between the instance and the GPU, so even though the V100 may be 1.5–2x faster in theory, IO slows it down in practice. Since you’re using an M.2 SSD, IO is blazing fast on your own computer.

You get more memory with the V100, 16GB vs. 11GB, but if you just make your batch sizes a little smaller and your models more efficient, you’ll do fine with 11GB.
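One rough way to see why smaller batches are enough: the model weights, gradients, and optimizer state take a roughly fixed amount of GPU memory, while activations grow about linearly with batch size. The sketch below uses that simplified linear model with made-up numbers (2 GiB fixed, 200 MiB per sample); real usage depends on your framework and architecture, so measure your own model.

```python
def max_batch_size(gpu_mib, fixed_mib, per_sample_mib):
    """Largest batch that fits under a simple linear memory model.

    Hypothetical model: total = fixed (weights, gradients, optimizer
    state) + per_sample * batch_size (activations).
    """
    return (gpu_mib - fixed_mib) // per_sample_mib


FIXED_MIB = 2 * 1024   # hypothetical: model + optimizer state
PER_SAMPLE_MIB = 200   # hypothetical: activations per example

print(max_batch_size(16 * 1024, FIXED_MIB, PER_SAMPLE_MIB))  # V100 16GB -> 71
print(max_batch_size(11 * 1024, FIXED_MIB, PER_SAMPLE_MIB))  # 1080 Ti 11GB -> 46
```

Under these made-up numbers, the 11GB card fits a batch roughly 35% smaller.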

Compared with renting a last-generation Nvidia K80 online (cheaper at $1 / hour), your 1080 Ti blows it out of the water, training about 4x faster. I validated that it’s 3–4x faster in my own benchmark (I will show you how to benchmark in a subsequent post). The K80 has 12GB per GPU, a slight advantage over your 11GB 1080 Ti.

AWS is expensive because Amazon is forced to use a much more expensive GPU

There’s a reason why datacenters are expensive: they are not using the GeForce 1080 Ti. Nvidia contractually prohibits the use of GeForce and Titan cards in datacenters, so Amazon and other providers have to use the $8,500 datacenter version of the GPUs, and they have to charge a lot to rent them out. This is customer segmentation at its finest, folks!

Building is better than buying

You also need to decide whether to buy a computer or build your own. Though it’s utterly unimaginable to me that an enthusiast would choose to buy instead of build, you’ll be happy to know that building is also 40–50% cheaper. Pre-built machines cost at least $5k; here are some buying options: this one, and that one.

It’s not necessary to buy one. You see, the hard part about building is finding the right parts for machine learning and making sure they all work together, which I’ve done for you! Physically building the computer is not hard: a first-timer can do it in less than 6 hours, a pro in less than 1 hour.

Building lets you take advantage of crazy price drops

When new-gen hardware comes out every year, there’s a stepwise price drop on last-gen hardware. For example, when AMD came out with the Threadripper 2 CPUs, the price of the 1920X processor was slashed from $800 to $400. You can take immediate advantage of these drops and keep $$$ in your pocket.

Building lets you pick parts so your computer can expand to 4 GPUs and be optimized in other ways

I looked at some of the off-the-shelf builds, and some cannot expand to 4 GPUs or are not optimized for performance. Some example issues: the CPU does not have 36+ PCIe lanes, the motherboard cannot physically fit 4 GPUs, the power supply is less than 1400W, or the CPU has fewer than 8 cores. I will discuss the nuances of part picking in the next post.
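The 36-lane and 1400W thresholds can be sanity-checked with simple arithmetic. This is one plausible way to arrive at them, not necessarily the author’s reasoning: it assumes each GPU runs at PCIe x8 and the M.2 SSD uses x4, and it sizes the power supply from the rated power of the 1080 Ti (250 W) and of the 12-core Threadripper 1920X mentioned in this post (180 W), plus a hypothetical 20% margin for drives, fans, and load spikes.

```python
GPU_COUNT = 4

# PCIe lanes (assumption: x8 per GPU, x4 for the M.2 NVMe SSD)
LANES_PER_GPU = 8
LANES_PER_NVME = 4
lanes_needed = GPU_COUNT * LANES_PER_GPU + LANES_PER_NVME

# Power supply (rated power draw; the 20% headroom is an assumption, not a rule)
GPU_TDP_W = 250   # GeForce GTX 1080 Ti
CPU_TDP_W = 180   # Threadripper 1920X
HEADROOM = 1.2
psu_watts = (GPU_COUNT * GPU_TDP_W + CPU_TDP_W) * HEADROOM

print(f"PCIe lanes needed: {lanes_needed}")  # -> PCIe lanes needed: 36
print(f"PSU size: ~{psu_watts:.0f} W")       # -> PSU size: ~1416 W
```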

You can also make sure the design aesthetic is awesome (I personally find some of the common computer cases hideously ugly), the noise profile is low (some gold-rated power supplies are very loud), and the parts make sense for Machine Learning (a SATA3 SSD reads at 600MB/sec, while an M.2 PCIe SSD is a whopping 5x+ faster at 3.4GB/sec).
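To see what the SSD difference means for training, here is the time for one full pass over a hypothetical 100 GB dataset at each drive’s quoted speed. It assumes purely sequential reads at the rated throughput and ignores OS caching and data decoding, so real numbers will differ.

```python
SATA3_MB_S = 600   # SATA3 SSD throughput quoted above
NVME_MB_S = 3400   # M.2 PCIe SSD throughput quoted above
DATASET_GB = 100   # hypothetical dataset size


def read_seconds(gb, mb_per_s):
    """Time for one sequential pass over the data (1 GB = 1000 MB)."""
    return gb * 1000 / mb_per_s


sata = read_seconds(DATASET_GB, SATA3_MB_S)
nvme = read_seconds(DATASET_GB, NVME_MB_S)
print(f"SATA3: {sata:.0f} s, M.2: {nvme:.0f} s, speedup {sata / nvme:.1f}x")
# -> SATA3: 167 s, M.2: 29 s, speedup 5.7x
```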

How to start your build

In the next post I will discuss how to pick components, avoid common pitfalls, and build your machine. If you want to get a head start, you can use my public parts list with pricing and get going.

FAQ

Why is expandability important in a Deep Learning Computer?
If you don’t know how much GPU power you’ll need, the best idea is to build a computer with 1 GPU and add more GPUs as you go along.

Why 4 GPUs?
You want to add as many as you can to amortize the cost of the rest of your system. I was only able to find motherboard/CPU combos that support 4 GPUs at a reasonable cost.
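Here is what amortizing looks like with this post’s numbers. As a hypothetical simplification, everything in the $3k build except the $700 1080 Ti counts as the shared platform (CPU, motherboard, RAM, SSD, power supply, case), and that $2,300 is split across however many GPUs you install.

```python
BUILD_1GPU = 3000   # 1 GPU build cost from this post
GPU_PRICE = 700     # 1080 Ti price from this post
PLATFORM = BUILD_1GPU - GPU_PRICE  # hypothetical: all non-GPU parts, $2,300


def platform_cost_per_gpu(num_gpus):
    """Share of the non-GPU parts carried by each installed GPU."""
    return PLATFORM / num_gpus


for n in (1, 2, 4):
    print(n, f"${platform_cost_per_gpu(n):,.0f}")
# prints "1 $2,300", then "2 $1,150", then "4 $575"
```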

Will you help me build one?
Happy to help with questions via comments / email. I also run www.HomebrewAIClub.com, and some of our members may be interested in helping.

How can I make my computer even cheaper?
Buy parts off eBay (you can get a 1080 Ti Founders Edition for $600 and a 1920X CPU for $400 as of 09/2018).

How does my computer compare to Nvidia’s $49,000 Personal AI Supercomputer?
Nvidia’s Personal AI Supercomputer uses 4 GPUs (Tesla V100), a 20-core CPU, and 128GB RAM. I don’t have one, so I don’t know for sure, but the latest benchmarks show a 25–80% speed improvement. Nvidia’s own benchmark quotes 4x faster, but you can bet their benchmark uses all of the V100’s unique advantages, such as half precision, which won’t materialize in practice. Remember, your machine only costs $4.5k with 4 GPUs, so laugh your way to the bank.
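To put "laugh your way to the bank" in numbers, compare performance per dollar using the prices in this answer. This takes the quoted 25–80% speed improvement at face value; it is not a measured result.

```python
NVIDIA_MACHINE = 49_000   # Nvidia's Personal AI Supercomputer price
HOME_BUILD = 4_500        # 4 GPU home build price

cost_ratio = NVIDIA_MACHINE / HOME_BUILD  # about 10.9x the price
for speedup in (1.25, 1.80):              # the 25–80% range quoted above
    # Performance per dollar of the home build relative to Nvidia's machine.
    print(f"{speedup:.2f}x faster -> home build {cost_ratio / speedup:.1f}x better per dollar")
# -> 1.25x faster -> home build 8.7x better per dollar
# -> 1.80x faster -> home build 6.0x better per dollar
```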