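Deploying a High-Performance Hadoop 2.0 Cluster

Let's skip the preamble and go straight to the hands-on deployment of a high-performance Hadoop cluster: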

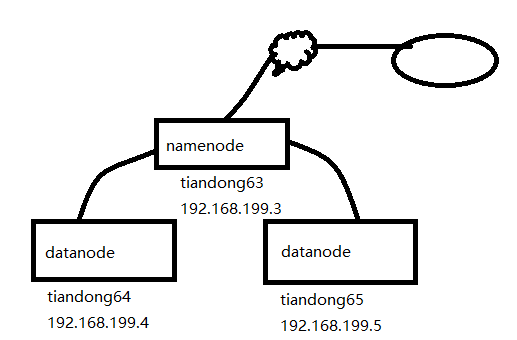

Topology diagram:

I. Preparing the lab environment:

1. Configure the hosts file on all three hosts (copy it to the other two hosts):

[root@tiandong63 ~]# more /etc/hosts

192.168.199.3 tiandong63

192.168.199.4 tiandong64

192.168.199.5 tiandong65
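Before moving on, it can help to confirm that the hosts file really carries all three entries on each machine. The following is only a sketch: `check_hosts` is a hypothetical helper name, and it simply looks for each hostname in the name columns of the file.

```shell
# Sketch: report which expected hostnames are missing from a hosts file.
# check_hosts is a hypothetical helper, not part of Hadoop.
check_hosts() {
    local hosts_file="$1"; shift
    local missing=0 host
    for host in "$@"; do
        # Skip comment lines, then look for the hostname after the IP column.
        if awk -v h="$host" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
            END { exit (found ? 0 : 1) }' "$hosts_file"; then
            echo "ok: $host"
        else
            echo "missing: $host"
            missing=1
        fi
    done
    return "$missing"
}
```

It could be called as `check_hosts /etc/hosts tiandong63 tiandong64 tiandong65`; a non-zero exit status means at least one entry is missing.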

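2. Create the hadoop account (it must also be created on the other two hosts):

[root@tiandong63 ~]# useradd -u 8000 hadoop

3. Grant the hadoop user sudo privileges by adding the line below (this must also be done on the other two hosts):

[root@tiandong63 ~]# vim /etc/sudoers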

hadoop ALL=(ALL) ALL

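4. Set up SSH trust between the hosts (on tiandong63):

This allows passwordless SSH logins to tiandong63, tiandong64, and tiandong65, which makes it easier to copy files and start services later. It is needed because the start scripts run on the namenode connect to the datanodes over SSH to start the corresponding services.

[root@tiandong63 ~]# su - hadoop

[hadoop@tiandong63 ~]$ ssh-keygen

[hadoop@tiandong63 ~]$ ssh-copy-id hadoop@tiandong64

[hadoop@tiandong63 ~]$ ssh-copy-id hadoop@tiandong65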
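The key distribution can also be wrapped in a loop that tests the passwordless login afterwards. This is a minimal sketch, not the author's procedure: `push_keys` and the `DRY_RUN` variable are hypothetical names, while `ssh -o BatchMode=yes` is the standard OpenSSH option that makes the login fail instead of prompting for a password.

```shell
# Sketch: distribute the current user's public key and verify passwordless login.
# push_keys and DRY_RUN are hypothetical names used only for this illustration.
push_keys() {
    local user="$1"; shift
    local host
    for host in "$@"; do
        if [ -n "${DRY_RUN:-}" ]; then
            # Print the commands instead of running them.
            echo "ssh-copy-id ${user}@${host}"
            echo "ssh -o BatchMode=yes ${user}@${host} true"
        else
            ssh-copy-id "${user}@${host}" || return 1
            # BatchMode makes ssh fail rather than ask for a password.
            ssh -o BatchMode=yes "${user}@${host}" true || return 1
        fi
    done
}
```

Running `DRY_RUN=1 push_keys hadoop tiandong63 tiandong64 tiandong65` first shows what would be executed; dropping `DRY_RUN` performs the copy and the login test.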

II. Configuring the Hadoop environment

1. Set up the JDK (required on all three hosts):

[root@tiandong63 ~]# ll jdk-8u191-linux-x64.tar.gz

-rw-r--r-- 1 root root 191753373 Dec 30 00:58 jdk-8u191-linux-x64.tar.gz

[root@tiandong63 ~]# tar -zxf jdk-8u191-linux-x64.tar.gz -C /usr/local/src/

[root@tiandong63 ~]# vim /etc/profile

export JAVA_HOME=/usr/local/src/jdk1.8.0_191

export JAVA_BIN=/usr/local/src/jdk1.8.0_191/bin

export PATH=${JAVA_HOME}/bin:$PATH

export CLASSPATH=.:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

export hadoop_root_logger=DEBUG,console

[root@tiandong63 ~]# source /etc/profile
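After sourcing the profile, a quick check that JAVA_HOME points at a usable JDK can save debugging time later, since the Hadoop scripts depend on it. A minimal sketch, assuming a hypothetical helper named `check_java_home`:

```shell
# Sketch: confirm that a JAVA_HOME candidate actually contains a java binary.
# check_java_home is a hypothetical helper name.
check_java_home() {
    # Use the first argument if given, otherwise the JAVA_HOME variable.
    local java_home="${1:-${JAVA_HOME:-}}"
    if [ -z "$java_home" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$java_home/bin/java" ]; then
        echo "no executable java under $java_home/bin" >&2
        return 1
    fi
    # Print the path of the binary that would be used.
    echo "$java_home/bin/java"
}
```

Calling `check_java_home` with no argument tests the exported JAVA_HOME; on each of the three hosts it should print `/usr/local/src/jdk1.8.0_191/bin/java` if the steps above were followed.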

2. Stop the firewall (required on all three hosts):

[root@tiandong63 ~]# /etc/init.d/iptables stop

3. Install Hadoop on tiandong63 and configure it as the namenode (master node):

[root@tiandong63 ~]# cd /home/hadoop/

[root@tiandong63 hadoop]# ll hadoop-2.7.7.tar.gz

-rw-r--r-- 1 hadoop hadoop 218720521 Dec 29 21:11 hadoop-2.7.7.tar.gz

[root@tiandong63 ~]# su - hadoop

[hadoop@tiandong63 ~]$ tar -zxvf hadoop-2.7.7.tar.gz

Create the working directories Hadoop needs:

[hadoop@tiandong63 ~]$ mkdir -p /home/hadoop/dfs/name/ /home/hadoop/dfs/data/ /home/hadoop/tmp/

4. Configure Hadoop (seven configuration files are modified)

File names: hadoop-env.sh, yarn-env.sh, slaves, core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml

[hadoop@tiandong63 ~]$ cd /home/hadoop/hadoop-2.7.7/etc/hadoop/

[hadoop@tiandong63 hadoop]$ vim hadoop-env.sh (sets the Java runtime for Hadoop)

export JAVA_HOME=/usr/local/src/jdk1.8.0_191

[hadoop@tiandong63 hadoop]$ vim yarn-env.sh (sets the Java runtime for the YARN framework)

JAVA_HOME=/usr/local/src/jdk1.8.0_191

[hadoop@tiandong63 hadoop]$ vim slaves (lists the datanode storage servers)

tiandong64

tiandong65

[hadoop@tiandong63 hadoop]$ vim core-site.xml (sets fs.defaultFS, the HDFS URI that clients use to reach the namenode)

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://tiandong63:9000</value>
  </property>

  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>

  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/home/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
</configuration>
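To double-check what a site file actually sets, a property can be read back from the shell. The sketch below uses a hypothetical helper named `get_prop` and assumes each `<name>` and `<value>` element sits on a single line, as in the listing above; on a running installation, `hdfs getconf -confKey fs.defaultFS` reports the effective value instead.

```shell
# Sketch: print the value of one property from a Hadoop *-site.xml file.
# get_prop is a hypothetical helper; it is a line-based reader, not an XML parser.
get_prop() {
    local file="$1" key="$2"
    awk -v key="$key" '
        /<name>/ {
            # Extract the text between <name> and </name>.
            name = $0
            sub(/.*<name>/, "", name); sub(/<\/name>.*/, "", name)
            want = (name == key)
        }
        want && /<value>/ {
            # Extract the text between <value> and </value>, then stop.
            val = $0
            sub(/.*<value>/, "", val); sub(/<\/value>.*/, "", val)
            print val
            exit
        }' "$file"
}
```

For example, `get_prop core-site.xml fs.defaultFS` run in the configuration directory should print the namenode URI configured above.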

[hadoop@tiandong63 hadoop]$ mkdir -p /home/hadoop/tmp

[hadoop@tiandong63 hadoop]$ vim hdfs-site.xml

This is the HDFS configuration file. dfs.namenode.secondary.http-address sets the HTTP address of the secondary namenode, and dfs.replication sets the number of replicas kept for each file block, which normally should not exceed the number of slave nodes (datanodes).

<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>tiandong63:9001</value>
  </property>

  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/home/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/home/hadoop/dfs/data</value>
  </property>

  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>

  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
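The rule of thumb that dfs.replication should not exceed the number of datanodes can be checked against the slaves file. A minimal sketch, with `check_replication` as a hypothetical helper name; it counts every non-empty, non-comment line of the slaves file as one datanode.

```shell
# Sketch: compare a replication factor with the number of hosts in the slaves file.
# check_replication is a hypothetical helper name.
check_replication() {
    local slaves_file="$1" replication="$2"
    local nodes
    # Count lines that are neither empty nor comments; grep -c exits 1 on zero matches.
    nodes=$(grep -cvE '^[[:space:]]*(#|$)' "$slaves_file" || true)
    if [ "$replication" -gt "$nodes" ]; then
        echo "replication $replication exceeds $nodes datanodes"
        return 1
    fi
    echo "replication $replication fits $nodes datanodes"
}
```

With the two datanodes used in this setup, `check_replication slaves 2` passes, while a value of 3 would be flagged because blocks could never reach their target replica count.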

[hadoop@tiandong63 hadoop]$ vim mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>

  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>tiandong63:10020</value>
  </property>

  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>tiandong63:19888</value>
  </property>
</configuration>

[hadoop@tiandong63 hadoop]$ vim yarn-site.xml (configures the YARN framework, mainly the addresses on which the ResourceManager services listen)

<configuration>

  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>

  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>

  <property>
    <name>yarn.resourcemanager.address</name>
    <value>tiandong63:8032</value>
  </property>

  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>tiandong63:8030</value>
  </property>

  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>tiandong63:8031</value>
  </property>

  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>tiandong63:8033</value>
  </property>

  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>tiandong63:8088</value>
  </property>
</configuration>

Copy the configured installation to the datanodes (192.168.199.4 and 192.168.199.5):

[hadoop@tiandong63 ~]$ scp -r /home/hadoop/hadoop-2.7.7 hadoop@tiandong64:/home/hadoop/

[hadoop@tiandong63 ~]$ scp -r /home/hadoop/hadoop-2.7.7 hadoop@tiandong65:/home/hadoop/

III. Starting Hadoop:

Start it on tiandong63 (as the hadoop user).

1. Format the namenode:

[hadoop@tiandong63 ~]$ cd /home/hadoop/hadoop-2.7.7/bin/

[hadoop@tiandong63 bin]$ ./hdfs namenode -format

2. Start HDFS:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/sbin/start-dfs.sh

Check the processes (the namenode, on tiandong63):

[hadoop@tiandong63 ~]$ ps aux | grep namenode --color

hadoop 42851 0.4 17.6 2762196 177412 ? Sl 18:13 1:26 /usr/local/src/jdk1.8.0_191/bin/java -Dproc_namenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,console -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=hadoop-hadoop-namenode-tiandong63.log -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.NameNode

hadoop 43046 0.2 13.3 2733336 134344 ? Sl 18:13 0:35 /usr/local/src/jdk1.8.0_191/bin/java -Dproc_secondarynamenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,console -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=hadoop-hadoop-secondarynamenode-tiandong63.log -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode

Check the processes on tiandong64 and tiandong65 (datanode):

[root@tiandong64 ~]# ps aux | grep datanode

hadoop 3938 0.3 12.2 2757576 122592 ? Sl 18:13 1:04 /usr/local/src/jdk1.8.0_191/bin/java -Dproc_datanode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,console -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=hadoop-hadoop-datanode-tiandong64.log -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -server -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.datanode.DataNode

3. Start YARN (on tiandong63; this starts the distributed computing layer):

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/sbin/start-yarn.sh

Check the processes on tiandong63 (the resourcemanager process):

[hadoop@tiandong63 ~]$ ps -ef | grep resourcemanager --color

hadoop 43196 1 0 18:14 pts/1 00:02:55 /usr/local/src/jdk1.8.0_191/bin/java -Dproc_resourcemanager -Xmx1000m -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dyarn.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=yarn-hadoop-resourcemanager-tiandong63.log -Dyarn.log.file=yarn-hadoop-resourcemanager-tiandong63.log -Dyarn.home.dir= -Dyarn.id.str=hadoop -Dhadoop.root.logger=INFO,RFA -Dyarn.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dyarn.policy.file=hadoop-policy.xml -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dyarn.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=yarn-hadoop-resourcemanager-tiandong63.log -Dyarn.log.file=yarn-hadoop-resourcemanager-tiandong63.log -Dyarn.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.root.logger=INFO,RFA -Dyarn.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -classpath /home/hadoop/hadoop-2.7.7/etc/hadoop:/home/hadoop/hadoop-2.7.7/etc/hadoop:/home/hadoop/hadoop-2.7.7/etc/hadoop:/home/hadoop/hadoop-2.7.7/share/hadoop/common/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/common/*:/home/hadoop/hadoop-2.7.7/share/hadoop/hdfs:/home/hadoop/hadoop-2.7.7/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/hdfs/*:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/*:/home/hadoop/hadoop-2.7.7/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/*:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/lib/*:/home/hadoop/hadoop-2.7.7/etc/hadoop/rm-config/log4j.properties org.apache.hadoop.yarn.server.resourcemanager.ResourceManager

Check the processes on tiandong64 and tiandong65 (nodemanager):

[root@tiandong64 ~]# ps aux | grep nodemanager --color

hadoop 4048 0.5 15.7 2802552 158380 ? Sl 18:14 1:42 /usr/local/src/jdk1.8.0_191/bin/java -Dproc_nodemanager -Xmx1000m -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dyarn.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=yarn-hadoop-nodemanager-tiandong64.log -Dyarn.log.file=yarn-hadoop-nodemanager-tiandong64.log -Dyarn.home.dir= -Dyarn.id.str=hadoop -Dhadoop.root.logger=INFO,RFA -Dyarn.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -Dyarn.policy.file=hadoop-policy.xml -server -Dhadoop.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dyarn.log.dir=/home/hadoop/hadoop-2.7.7/logs -Dhadoop.log.file=yarn-hadoop-nodemanager-tiandong64.log -Dyarn.log.file=yarn-hadoop-nodemanager-tiandong64.log -Dyarn.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.home.dir=/home/hadoop/hadoop-2.7.7 -Dhadoop.root.logger=INFO,RFA -Dyarn.root.logger=INFO,RFA -Djava.library.path=/home/hadoop/hadoop-2.7.7/lib/native -classpath /home/hadoop/hadoop-2.7.7/etc/hadoop:/home/hadoop/hadoop-2.7.7/etc/hadoop:/home/hadoop/hadoop-2.7.7/etc/hadoop:/home/hadoop/hadoop-2.7.7/share/hadoop/common/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/common/*:/home/hadoop/hadoop-2.7.7/share/hadoop/hdfs:/home/hadoop/hadoop-2.7.7/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/hdfs/*:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/*:/home/hadoop/hadoop-2.7.7/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop-2.7.7/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/*:/home/hadoop/hadoop-2.7.7/share/hadoop/yarn/lib/*:/home/hadoop/hadoop-2.7.7/etc/hadoop/nm-config/log4j.properties org.apache.hadoop.yarn.server.nodemanager.NodeManager

Note: the two scripts start-dfs.sh and start-yarn.sh can be replaced by start-all.sh.
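As a shorter alternative to reading the long `ps` lines, the JDK's `jps` tool lists running Java processes as "PID ClassName". The sketch below is only an illustration: `check_daemons` is a hypothetical helper that reads such a listing on standard input and reports whether each expected daemon name is present.

```shell
# Sketch: check that every expected daemon name appears in jps-style output.
# check_daemons is a hypothetical helper; it reads "PID ClassName" lines on stdin.
check_daemons() {
    local listing missing=0 name
    listing=$(cat)
    for name in "$@"; do
        # Match the class-name column exactly, so NameNode does not match SecondaryNameNode.
        if printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { ok = 1 } END { exit (ok ? 0 : 1) }'; then
            echo "running: $name"
        else
            echo "not running: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

On tiandong63 this could be used as `jps | check_daemons NameNode SecondaryNameNode ResourceManager`, and on the datanodes as `jps | check_daemons DataNode NodeManager`.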

4. Check the status of the HDFS distributed file system:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hdfs dfsadmin -report
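The report is fairly long, so it may be convenient to pull out just the number of live datanodes. This sketch assumes the report contains a header line of the form `Live datanodes (N):`, which is how Hadoop 2.x releases print it as far as I know; verify that against the actual output before relying on it. `live_datanodes` is a hypothetical helper name.

```shell
# Sketch: extract the live datanode count from dfsadmin -report output on stdin.
# Assumes a header line of the form "Live datanodes (N):"; verify on your version.
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}
```

For this cluster, `/home/hadoop/hadoop-2.7.7/bin/hdfs dfsadmin -report | live_datanodes` would be expected to print the number of datanodes that have registered with the namenode; an empty result means the header was not found.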

IV. Basic Hadoop usage

Run a Hadoop compute job: the word count example.

Create a directory on HDFS:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hadoop fs -mkdir -p /test/input

List the directory:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hadoop fs -ls /test/

drwxr-xr-x - hadoop supergroup 0 2018-12-30 21:40 /test/input

Upload a file:

Create the file to run the job on:

[hadoop@tiandong63 ~]$ more file1.txt

welcome to beijing

my name is thunder

what is your name

Upload it to the /test/input directory on HDFS:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hadoop fs -put /home/hadoop/file1.txt /test/input

Check that the upload succeeded:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hadoop fs -ls /test/input

Found 1 items

-rw-r--r-- 2 hadoop supergroup 56 2018-12-30 21:40 /test/input/file1.txt

Run the job:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hadoop jar /home/hadoop/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar wordcount /test/input /test/output
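A MapReduce job refuses to start if its output directory already exists, so running the example a second time requires removing /test/output first. The sketch below uses the standard `hadoop fs -test -d` and `hadoop fs -rm -r` commands; `clean_output` and the `HADOOP_BIN` override are hypothetical names introduced here.

```shell
# Sketch: remove a job output directory on HDFS if it is already there.
# clean_output and HADOOP_BIN are hypothetical; the default path matches this install.
clean_output() {
    local hadoop_bin="${HADOOP_BIN:-/home/hadoop/hadoop-2.7.7/bin/hadoop}"
    local out_dir="$1"
    # "fs -test -d" exits 0 only when the path exists and is a directory.
    if "$hadoop_bin" fs -test -d "$out_dir"; then
        "$hadoop_bin" fs -rm -r "$out_dir"
    fi
}
```

Calling `clean_output /test/output` before the `hadoop jar` command makes the job rerunnable; note that it deletes the previous results.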

View the results:

[hadoop@tiandong63 ~]$ /home/hadoop/hadoop-2.7.7/bin/hadoop fs -cat /test/output/part-r-00000

beijing 1

is 2

my 1

name 2

thunder 1

to 1

welcome 1

what 1

your 1
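The job output can be cross-checked locally with ordinary shell tools, since the example splits the input on whitespace and counts each word. This is a sketch of the same computation on a single machine, not how the cluster does it; `local_wordcount` is a hypothetical helper name.

```shell
# Sketch: count words in a local file and print "word<TAB>count", sorted by word.
# local_wordcount is a hypothetical helper name.
local_wordcount() {
    # Put one word per line, drop empty lines, then count identical words.
    tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c |
        awk '{ print $2 "\t" $1 }'
}
```

Running `local_wordcount /home/hadoop/file1.txt` should give the same nine words and counts as the part-r-00000 listing above.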