k8s (Part 1): Notes on an Offline Local Deployment of a Highly Available Kubernetes 1.9.0 Cluster

I. Deployment Overview

1. Nodes

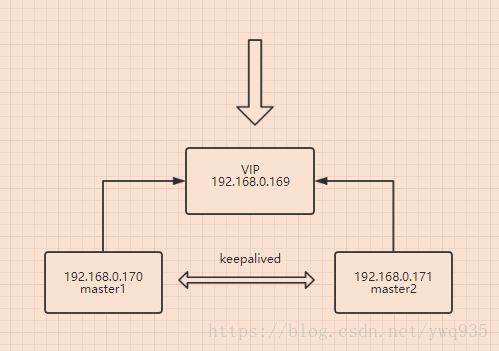

master1: IP: 192.168.0.170/24, hostname: 170

master2: IP: 192.168.0.171/24, hostname: 171

VIP:192.168.0.169/24

2. Tool versions:

etcd-v3.1.10-linux-amd64.tar.gz

kubeadm-1.9.0-0.x86_64.rpm

kubectl-1.9.0-0.x86_64.rpm

kubelet-1.9.9-9.x86_64.rpm

kubernetes-cni-0.6.0-0.x86_64.rpm

socat-1.7.3.2-2.el7.x86_64.rpm

Offline Docker images:

etcd-amd64_v3.1.10.tar

k8s-dns-dnsmasq-nanny-amd64_v1.14.7.tar

k8s-dns-kube-dns-amd64_1.14.7.tar

k8s-dns-sidecar-amd64_1.14.7.tar

kube-apiserver-amd64_v1.9.0.tar

kube-controller-manager-amd64_v1.9.0.tar

kube-proxy-amd64_v1.9.0.tar

kubernetes-dashboard_v1.8.1.tar

kube-scheduler-amd64_v1.9.0.tar

pause-amd64_3.0.tar

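Cloud-drive links for the packages above will be posted later. If you can reach the upstream repositories directly (for example through a proxy), install online and skip the offline packages.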

=================================================

Two core components must be made highly available in k8s: the apiserver (master) and etcd.

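apiserver (master): (must be highly available) the core of the cluster. It serves the cluster API, acts as the hub through which all cluster components communicate, and enforces cluster security.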

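etcd: (must be highly available) the cluster's data store, holding its configuration and state. It is critical: if its data is lost, the cluster cannot be recovered. An HA deployment therefore starts by making etcd itself a highly available cluster.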

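kube-scheduler: the scheduler (with internal leader election), the cluster's Pod scheduling center. In a default kubeadm install --leader-elect is already set to true, so only one kube-scheduler across the masters is active at any time.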

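kube-controller-manager: the controller manager (with internal leader election), the cluster's state manager. When the actual state differs from the desired state, kcm works to restore it; for example, when a pod dies, kcm creates a new pod to bring the corresponding ReplicaSet back to its desired state. In a default kubeadm install --leader-elect is already set to true, so only one kube-controller-manager across the masters is active at any time.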

kubelet: the agent on each node; it registers the node with the apiserver and runs the pods scheduled onto it.

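kube-proxy: one per node; forwards traffic from a Service VIP to the endpoint pods. Older versions did this mainly with iptables rules; Kubernetes 1.9 also offers an IPVS-based proxy mode (beta), although iptables remains the default.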
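Since etcd stays available only while a majority (quorum) of its members is reachable, the member count decides how many failures it survives. The minimal bash sketch below just does the Raft majority arithmetic, floor(N/2) + 1; the function names are illustrative and not part of any tool. It suggests that the two-member etcd cluster used in this guide tolerates no member failure, which is why 3 or 5 members are the usual choice for HA:

```bash
#!/usr/bin/env bash
# Raft majority quorum for an etcd cluster of N members: floor(N/2) + 1.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that may fail while a quorum is still reachable: N - quorum.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Print the numbers for a few common cluster sizes.
for n in 1 2 3 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

For 2 members it prints quorum=2 tolerated_failures=0; for 3 members, quorum=2 tolerated_failures=1.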

II. Preparation

1. Disable the firewall, SELinux, and swap

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

swapoff -a

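echo 'swapoff -a' >> /etc/rc.d/rc.local

chmod +x /etc/rc.d/rc.local # on CentOS 7, rc.local only runs at boot if it is executable

2. Enable bridge netfilter support in the kernel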

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF
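Before applying the file, a quick preflight check can confirm that both bridge keys are set and that swap is really off. A minimal sketch, assuming the paths used in this guide; sysctl_file_sets_one and swap_is_off are hypothetical helper names, and both take a file path so they can be pointed at test fixtures:

```bash
#!/usr/bin/env bash
# Succeeds if KEY is set to 1 in the sysctl-style FILE ("key = value" lines).
sysctl_file_sets_one() {
  local file=$1 key=$2
  [ -r "$file" ] || return 1
  awk -F'=' -v k="$key" '
    {
      name = $1; val = $2
      gsub(/[ \t]/, "", name); gsub(/[ \t]/, "", val)
      if (name == k && val == "1") found = 1
    }
    END { exit (found ? 0 : 1) }' "$file"
}

# Succeeds if no swap is active. /proc/swaps always has one header line;
# every further non-empty line is an active swap device or file.
swap_is_off() {
  local swaps=${1:-/proc/swaps}
  [ -r "$swaps" ] || return 1
  awk 'NR > 1 && NF > 0 { active = 1 } END { exit (active ? 1 : 0) }' "$swaps"
}
```

For example: sysctl_file_sets_one /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables && swap_is_off && echo ok. The swap check only reflects the current state; whether swap stays off after a reboot depends on the rc.local line above.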

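sysctl -p /etc/sysctl.d/k8s.conf

If sysctl -p reports an error (for example, that the net.bridge keys do not exist), load the br_netfilter module and check that the keys are present: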

modprobe br_netfilter

ls /proc/sys/net/bridge

III. Configure keepalived

yum -y install keepalived

cat >/etc/keepalived/keepalived.conf <<EOL

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.0.169:6443"

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 61

# The primary node gets the highest priority; lower it on each subsequent node

priority 120

advert_int 1

# Change to this node's own IP

mcast_src_ip 192.168.0.170

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

# Comment out this node's own IP

#192.168.0.170

192.168.0.171

}

virtual_ipaddress {

192.168.0.169/24

}

track_script {

CheckK8sMaster

}

}

EOL

systemctl enable keepalived && systemctl restart keepalived

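On master2, change the corresponding IPs (mcast_src_ip and unicast_peer) and the priority in this config, then start keepalived the same way.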
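Rather than editing master2's copy by hand, its config can be derived from master1's file. A sketch, assuming the exact layout above: it swaps the two node IPs (so mcast_src_ip becomes 192.168.0.171 and the unicast_peer entry becomes 192.168.0.170), sets state BACKUP, and lowers the priority. The helper names and the priority 110 in the usage line are illustrative choices. Switching to BACKUP also matters because keepalived only honors nopreempt when the initial state is BACKUP:

```bash
#!/usr/bin/env bash
# Escape dots so an IP address is matched literally by sed.
sed_escape_ip() {
  printf '%s' "$1" | sed 's/\./\\./g'
}

# Usage: derive_peer_keepalived_conf SRC_CONF THIS_IP OTHER_IP PRIORITY
#   SRC_CONF - keepalived.conf written for THIS_IP (master1)
#   OTHER_IP - IP of the node being configured (master2)
#   PRIORITY - lower priority for that node (illustrative value, e.g. 110)
# Writes the derived config to stdout.
derive_peer_keepalived_conf() {
  local src=$1 this_ip=$2 other_ip=$3 priority=$4
  local this_re other_re
  this_re=$(sed_escape_ip "$this_ip")
  other_re=$(sed_escape_ip "$other_ip")
  # Swap the two IPs via a placeholder, then switch to BACKUP and set the priority.
  sed -e "s/${this_re}/__THIS_IP__/g" \
      -e "s/${other_re}/${this_ip}/g" \
      -e "s/__THIS_IP__/${other_ip}/g" \
      -e 's/^\([[:space:]]*\)state MASTER/\1state BACKUP/' \
      -e 's/^\([[:space:]]*\)priority [0-9][0-9]*/\1priority '"$priority"'/' \
      "$src"
}
```

On master1: derive_peer_keepalived_conf /etc/keepalived/keepalived.conf 192.168.0.170 192.168.0.171 110 > keepalived-master2.conf, then copy the result to /etc/keepalived/keepalived.conf on master2. The VIP (192.168.0.169) in the check script and virtual_ipaddress is left untouched.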

Check that the VIP is bound on master1:

[root@170 pkgs]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:1c:5d:71 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.170/24 brd 192.168.0.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.0.169/24 scope global secondary ens33

valid_lft forever preferred_lft forever
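To check the VIP from a script instead of reading ip a output by eye, a small sketch that looks for an inet entry matching the VIP. vip_is_local is a hypothetical helper; it accepts the address listing as an optional second argument so it can be tested offline, and otherwise calls ip -4 addr show:

```bash
#!/usr/bin/env bash
# Succeeds if VIP (default: this guide's 192.168.0.169) appears as an
# "inet VIP/prefix" entry in the address listing.
vip_is_local() {
  local vip=${1:-192.168.0.169}
  local addr_output=${2:-$(ip -4 addr show 2>/dev/null)}
  printf '%s\n' "$addr_output" | awk -v vip="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
    END { exit (found ? 0 : 1) }'
}
```

Run vip_is_local 192.168.0.169 && echo "VIP is here" on both masters; only the node currently acting as keepalived MASTER should report it.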

IV. Configure etcd

1. Set environment variables:

export NODE_NAME=170 # name of the machine being deployed (any name works, as long as it distinguishes the machines)

export NODE_IP=192.168.0.170 # IP of the machine being deployed

export NODE_IPS="192.168.0.170 192.168.0.171" # IPs of all machines in the etcd cluster

# Peer IPs and ports used for communication between etcd members

export ETCD_NODES="170=https://192.168.0.170:2380,171=https://192.168.0.171:2380"

2. Create the CA, certificates, and keys

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

Generate the TLS keys and certificates for etcd

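To secure communication, traffic between clients (such as etcdctl) and the etcd cluster, and among the etcd members themselves, must be TLS-encrypted. This section creates the certificates and private keys etcd needs for TLS.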

Create the CA config file:

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

==ca-config.json==: can define multiple profiles, each with its own expiry, usages, and other parameters; a specific profile is selected later when signing a certificate.

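==signing==: the certificate may be used for digital signatures. The CA=TRUE flag in the generated ca.pem comes from cfssl gencert -initca rather than from this profile (signing other certificates corresponds to the separate "cert sign" usage).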

==server auth==: a client can use this CA to verify the certificate presented by a server.

==client auth==: a server can use this CA to verify the certificate presented by a client.

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

“CN”: Common Name. kube-apiserver extracts this field from the certificate as the request's user name (User Name); browsers use it to check whether a website is legitimate.

“O”: Organization. kube-apiserver extracts this field as the group (Group) the requesting user belongs to.
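Once certificates exist, these two fields can be read back with openssl to see what kube-apiserver would take as user and group. A sketch (cert_subject_field is a hypothetical helper) that prints one subject field; it asks openssl for RFC 2253 formatting so the subject comes out as CN=...,O=... across OpenSSL versions:

```bash
#!/usr/bin/env bash
# Print the value of one subject field (e.g. CN or O) of a PEM certificate.
cert_subject_field() {
  local cert=$1 field=$2
  openssl x509 -in "$cert" -noout -subject -nameopt RFC2253 \
    | sed 's/^subject= *//' \
    | tr ',' '\n' \
    | sed -n "s/^${field}=//p"
}
```

For the CA created from ca-csr.json below, cert_subject_field ca.pem CN should print kubernetes and cert_subject_field ca.pem O should print k8s.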

==Generate the CA certificate and private key==:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ls ca*

==Create the etcd certificate signing request:==

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.0.170",

"192.168.0.171"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

The hosts field lists the etcd node IPs authorized to use this certificate.

Every node IP must be included; alternatively, issue each machine its own certificate for its own IP.
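Because every member IP must appear in hosts, generating the CSR from the NODE_IPS variable exported earlier avoids forgetting one. A sketch; render_etcd_csr is a hypothetical helper that produces the same content as the etcd-csr.json above, just with the hosts list built from its argument:

```bash
#!/usr/bin/env bash
# Render etcd-csr.json to stdout; $1 is a space-separated list of member IPs.
# 127.0.0.1 is always included so local clients can use the certificate.
render_etcd_csr() {
  local ips=$1 hosts='"127.0.0.1"' ip
  for ip in $ips; do   # unquoted on purpose: split the list on whitespace
    hosts="${hosts}, \"${ip}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF
}
```

Usage: render_etcd_csr "$NODE_IPS" > etcd-csr.json, then sign it with cfssl as shown next.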

Generate the etcd certificate and private key:

cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

ls etcd*
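The kubernetes profile in ca-config.json sets "expiry": "8760h", and 8760 / 24 = 365, so certificates signed with it are valid for one year and must be renewed before then. A sketch for checking this with openssl; the function names are hypothetical, while -checkend and -enddate are standard openssl x509 options:

```bash
#!/usr/bin/env bash
# Succeeds if CERT will still be valid DAYS days from now.
cert_valid_for_days() {
  local cert=$1 days=$2
  openssl x509 -in "$cert" -noout -checkend $(( days * 86400 )) >/dev/null
}

# Print the notAfter date of each certificate given as an argument.
show_cert_expiry() {
  local cert
  for cert in "$@"; do
    printf '%s: %s\n' "$cert" "$(openssl x509 -in "$cert" -noout -enddate)"
  done
}
```

For example: show_cert_expiry ca.pem etcd.pem, or cert_valid_for_days /etc/etcd/ssl/etcd.pem 30 || echo "renew soon". Pass certificates only; etcd-key.pem is a private key and would make openssl x509 fail.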

mkdir -p /etc/etcd/ssl

cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/

Distribute the generated certificate and key files to master2:

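ssh root@192.168.0.171 "mkdir -p /etc/etcd" # scp does not create the missing parent directory

scp -r /etc/etcd/ssl root@192.168.0.171:/etc/etcd/

3. Install etcd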

wget http://github.com/coreos/etcd/releases/download/v3.1.10/etcd-v3.1.10-linux-amd64.tar.gz

tar -xvf etcd-v3.1.10-linux-amd64.tar.gz

mv etcd-v3.1.10-linux-amd64/etcd* /usr/local/bin

mkdir -p /var/lib/etcd # the working directory must be created first

cat > etcd.service <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \\

--name=${NODE_NAME} \\

--cert-file=/etc/etcd/ssl/etcd.pem \\

--key-file=/etc/etcd/ssl/etcd-key.pem \\

--peer-cert-file=/etc/etcd/ssl/etcd.pem \\

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \\

--trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--initial-advertise-peer-urls=https://${NODE_IP}:2380 \\

--listen-peer-urls=https://${NODE_IP}:2380 \\

--listen-client-urls=https://${NODE_IP}:2379,http://127.0.0.1:2379 \\

--advertise-client-urls=https://${NODE_IP}:2379 \\

--initial-cluster-token=etcd-cluster-0 \\

--initial-cluster=${ETCD_NODES} \\

--initial-cluster-state=new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

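# Note: the environment variables above must be exported before running the cat <<EOF step, because their values are substituted into the unit file; when exporting them on master2, change the name and IP accordingly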

mv etcd.service /etc/systemd/system/

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

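Install etcd on master2 the same way. Because a new cluster needs a majority of its members before it becomes available, systemctl start etcd on the first node may appear to hang until etcd is also started on the other node.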
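Since the unit file is rendered from NODE_NAME, NODE_IP, and ETCD_NODES at the moment the heredoc runs, the exports must be consistent across masters. A sketch that derives all four variables from one list of name=ip pairs; etcd_env_for and the pair format are illustrative assumptions, not part of etcd:

```bash
#!/usr/bin/env bash
# Usage: etcd_env_for NODE_NAME "name1=ip1 name2=ip2 ..."
# Prints export lines for NODE_NAME, NODE_IP, NODE_IPS and ETCD_NODES.
etcd_env_for() {
  local self=$1 pairs=$2 pair name ip self_ip="" ips="" nodes=""
  for pair in $pairs; do
    name=${pair%%=*}
    ip=${pair#*=}
    ips="${ips:+$ips }$ip"
    nodes="${nodes:+$nodes,}${name}=https://${ip}:2380"
    if [ "$name" = "$self" ]; then self_ip=$ip; fi
  done
  if [ -z "$self_ip" ]; then
    echo "unknown node name: $self" >&2
    return 1
  fi
  printf 'export NODE_NAME=%s\n' "$self"
  printf 'export NODE_IP=%s\n' "$self_ip"
  printf 'export NODE_IPS="%s"\n' "$ips"
  printf 'export ETCD_NODES=%s\n' "$nodes"
}
```

On master2: eval "$(etcd_env_for 171 '170=192.168.0.170 171=192.168.0.171')", then generate etcd.service as above.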

Verify the service:

etcdctl \

--endpoints=https://${NODE_IP}:2379 \

--ca-file=/etc/etcd/ssl/ca.pem \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

cluster-health

Expected output:

2018-04-06 20:07:41.355496 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

2018-04-06 20:07:41.357436 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

member 57d56677d6df8a53 is healthy: got healthy result from https://192.168.0.170:2379

member 7ba40ace706232da is healthy: got healthy result from https://192.168.0.171:2379

If it fails, reset and reconfigure:

systemctl stop etcd

rm -Rf /var/lib/etcd

rm -Rf /var/lib/etcd-cluster

mkdir -p /var/lib/etcd

systemctl start etcd

V. Install Docker

If an older docker package is already installed, it is recommended to remove it and install docker-ce instead:

yum -y remove docker docker-common

To install docker-ce from the Aliyun mirror:

# Step 1: install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Step 2: add the repository

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Step 3: refresh the cache and install Docker-CE

sudo yum makecache fast

# List all docker-ce versions available in the repo

yum list docker-ce --showduplicates | sort -r

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

sudo yum -y install docker-ce-17.03.2.ce

# If yum reports a missing docker-ce-selinux dependency, first run: yum -y install docker-ce-selinux-17.03.2.ce

# Step 4: start the Docker service

sudo systemctl start docker && sudo systemctl enable docker

VI. Install kubelet, kubectl, kubeadm, and kubernetes-cni

Use the rpm packages downloaded in advance:

[root@170 pkgs]# cd /root/k8s_images/pkgs

[root@170 pkgs]# ls

etcd-v3.1.10-linux-amd64.tar.gz kubeadm-1.9.0-0.x86_64.rpm kubectl-1.9.0-0.x86_64.rpm kubelet-1.9.9-9.x86_64.rpm kubernetes-cni-0.6.0-0.x86_64.rpm socat-1.7.3.2-2.el7.x86_64.rpm

[root@170 pkgs]# yum -y install *.rpm

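Change the cgroup driver in kubelet's startup arguments to match Docker's (docker-ce uses cgroupfs unless configured otherwise; check with docker info | grep -i cgroup):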

vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

#Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=systemd"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"
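To confirm both sides really agree before restarting, a sketch that extracts Docker's driver from docker info output and kubelet's from the drop-in file. The three function names are hypothetical; they read plain text so they can be tested without Docker installed:

```bash
#!/usr/bin/env bash
# Read `docker info` output on stdin; print the "Cgroup Driver" value.
docker_cgroup_driver() {
  awk -F': *' '/^ *Cgroup Driver:/ { print $2; exit }'
}

# Print the last uncommented --cgroup-driver value in a kubelet drop-in file.
kubelet_cgroup_driver() {
  sed -n 's/^[^#]*--cgroup-driver=\([a-z]*\).*/\1/p' "$1" | tail -n 1
}

# Succeed (and say so) only if both drivers are known and identical.
cgroup_drivers_match() {
  local docker_drv=$1 kubelet_drv=$2
  if [ -n "$docker_drv" ] && [ "$docker_drv" = "$kubelet_drv" ]; then
    echo "ok: both use $docker_drv"
  else
    echo "mismatch: docker=${docker_drv:-?} kubelet=${kubelet_drv:-?}" >&2
    return 1
  fi
}
```

Usage: cgroup_drivers_match "$(docker info 2>/dev/null | docker_cgroup_driver)" "$(kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf)".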

systemctl enable docker && systemctl restart docker

systemctl daemon-reload && systemctl restart kubelet

Repeat the same steps on master2.

VII. Initialize with kubeadm

Load the prepared offline Docker images into the local image store:

[root@170 docker_images]# ls

etcd-amd64_v3.1.10.tar k8s-dns-dnsmasq-nanny-amd64_v1.14.7.tar k8s-dns-sidecar-amd64_1.14.7.tar kube-controller-manager-amd64_v1.9.0.tar kubernetes-dashboard_v1.8.1.tar pause-amd64_3.0.tar

flannel:v0.9.1-amd64.tar k8s-dns-kube-dns-amd64_1.14.7.tar kube-apiserver-amd64_v1.9.0.tar kube-proxy-amd64_v1.9.0.tar kube-scheduler-amd64_v1.9.0.tar

[root@170 docker_images]# for i in *.tar; do docker load -i "$i"; done

The config.yaml initialization file:

cat <<EOF > config.yaml
apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
etcd:
  endpoints:
  - https://192.168.0.170:2379
  - https://192.168.0.171:2379
  caFile: /etc/etcd/ssl/ca.pem
  certFile: /etc/etcd/ssl/etcd.pem
  keyFile: /etc/etcd/ssl/etcd-key.pem
  dataDir: /var/lib/etcd
networking:
  podSubnet: 10.244.0.0/16
kubernetesVersion: 1.9.0
api:
  advertiseAddress: "192.168.0.170"
token: "b99a00.a144ef80536d4344"
tokenTTL: "0s"
apiServerCertSANs:
- "170"
- "171"
- 192.168.0.169
- 192.168.0.170
- 192.168.0.171
featureGates:
  CoreDNS: true
EOF

Initialize the cluster:

kubeadm init --config config.yaml

Result:

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

as root:

kubeadm join --token b99a00.a144ef80536d4344 192.168.0.169:6443 --discovery-token-ca-cert-hash sha256:ebc2f64e9bcb14639f26db90288b988c90efc43828829c557b6b66bbe6d68dfa

[root@170 docker_images]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

170 Ready master 5h v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.3.0

171 Ready master 4h v1.9.0 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://18.3.0
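For scripted checks, the same listing can be verified automatically. A sketch (all_nodes_ready is a hypothetical helper) that reads kubectl get nodes --no-headers output and succeeds only if every node's STATUS column is exactly Ready; a cordoned node (Ready,SchedulingDisabled) is reported as well:

```bash
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin.
# Print nodes whose STATUS (2nd column) is not "Ready"; succeed only if
# at least one node was listed and all of them are Ready.
all_nodes_ready() {
  awk '
    NF == 0 { next }
    { total++; if ($2 != "Ready") { print "not ready: " $1; bad++ } }
    END { exit ((total > 0 && bad == 0) ? 0 : 1) }'
}
```

Usage: kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready".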

Note: save the kubeadm join line from the output. When you later add nodes to the cluster with kubeadm, run this command on each new node to join it.
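Before filing the saved line away, it is worth checking that it was copied intact. A sketch; check_join_line is hypothetical and only checks the shape of the values, a bootstrap token of the form [a-z0-9]{6}.[a-z0-9]{16} and a sha256: CA hash of 64 hex characters. It cannot tell whether the token has expired:

```bash
#!/usr/bin/env bash
# Extract the token and CA hash from a `kubeadm join` line and validate
# their format; accepts both "--token X" and "--token=X" spellings.
check_join_line() {
  local line=$1 token hash
  token=$(printf '%s\n' "$line" | sed -n 's/.*--token[ =]\([^ ]*\).*/\1/p')
  hash=$(printf '%s\n' "$line" \
    | sed -n 's/.*--discovery-token-ca-cert-hash[ =]sha256:\([^ ]*\).*/\1/p')
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "bad token: $token" >&2
    return 1
  fi
  if ! printf '%s\n' "$hash" | grep -Eq '^[0-9a-f]{64}$'; then
    echo "bad hash: $hash" >&2
    return 1
  fi
  echo "token=$token hash=sha256:$hash"
}
```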

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Configure master2:

# Copy the pki certificates

ssh root@192.168.0.171 "mkdir -p /etc/kubernetes/pki" # create the target directory on master2

scp -r /etc/kubernetes/pki/ root@192.168.0.171:/etc/kubernetes/

# Copy the init config

scp config.yaml root@192.168.0.171:/etc/kubernetes/

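# Initialize on master2 (it is advisable to first change api.advertiseAddress in /etc/kubernetes/config.yaml to 192.168.0.171)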

kubeadm init --config /etc/kubernetes/config.yaml

VIII. Install the kube-router network plugin

wget https://raw.githubusercontent.com/cloudnativelabs/kube-router/master/daemonset/kubeadm-kuberouter.yaml

kubectl apply -f kubeadm-kuberouter.yaml

[root@170 docker_images]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-546545bc84-j5mds 1/1 Running 2 5h

kube-system kube-apiserver-170 1/1 Running 2 5h

kube-system kube-apiserver-171 1/1 Running 2 4h

kube-system kube-controller-manager-170 1/1 Running 2 5h

kube-system kube-controller-manager-171 1/1 Running 2 4h

kube-system kube-proxy-5dg4j 1/1 Running 2 4h

kube-system kube-proxy-s6jtn 1/1 Running 2 5h

kube-system kube-router-wbxvq 1/1 Running 2 4h

kube-system kube-router-xrkbw 1/1 Running 2 4h

kube-system kube-scheduler-170 1/1 Running 2 5h

kube-system kube-scheduler-171 1/1 Running 2 4h
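With many pods, scanning the list by eye gets error-prone. A sketch (unhealthy_pods is a hypothetical helper) that reads kubectl get pods --all-namespaces --no-headers output and prints any pod whose STATUS is not Running or whose READY count is short, such as a kube-router pod stuck in CrashLoopBackOff. Pods of completed Jobs would also be listed:

```bash
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces --no-headers` on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
# Print "namespace/name ready status" for each unhealthy pod and fail
# if any were found.
unhealthy_pods() {
  awk '
    NF < 4 { next }
    {
      split($3, r, "/")
      if ($4 != "Running" || r[1] != r[2]) {
        print $1 "/" $2 " " $3 " " $4
        bad++
      }
    }
    END { exit (bad ? 1 : 0) }'
}
```

Usage: kubectl get pods --all-namespaces --no-headers | unhealthy_pods || echo "some pods need attention".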

Deployment complete.

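By default, to keep the masters safe, application pods are not scheduled onto master nodes. Remove the master taint to lift this restriction and allow pods to run on the masters: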

kubectl taint nodes --all node-role.kubernetes.io/master-

Configure a private Docker registry to speed up image pulls:

vim /lib/systemd/system/docker.service

Comment out the original line (#ExecStart=/usr/bin/dockerd) and add:

ExecStart=/usr/bin/dockerd --insecure-registry registry.yourself.com:5000

# replace with your own registry address

systemctl daemon-reload && systemctl restart docker

Addendum (6.8):

Adding a new node to the k8s cluster with kubeadm:

When cluster initialization completes, kubeadm prints the following:

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

as root:

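kubeadm join --token b99a00.a144ef80536d4344 192.168.0.169:6443 --discovery-token-ca-cert-hash sha256:ebc2f64e9bcb14639f26db90288b988c90efc43828829c557b6b66bbe6d68dfa

The kubeadm join command is what new nodes use to join the cluster later. When our production cluster was deployed, this command was not saved, and bootstrap tokens expire (after 24 hours by default, unless tokenTTL is set to "0s" as in the config above). When a node needs to join later, the two parameters of the join command can be obtained on a master node as follows: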

token:

kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

b97h00.a144ef08526d4345 <forever> <never> authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

hash:

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

7eb62962040916673ab58b0c40fb451caef7538452f5e6c8ff7719a81fdbf633

Then run on the new node:

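kubeadm join --token=$TOKEN $MASTER_IP:6443 --discovery-token-ca-cert-hash sha256:$HASH

Check with kubectl get nodes: the new node should reach the Ready state. (Before joining, make sure kubelet, docker, kubeadm, kubernetes-cni, and socat are installed on the node and that kubelet and docker are running; see the steps above.)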
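The lookup steps above can be combined into one helper run on a master. A sketch, assuming an RSA CA key as kubeadm generates; ca_cert_hash reuses the openssl pipeline shown above (with -noout so only the public key is emitted), and first_token and build_join_command are hypothetical names. Pointing the endpoint at the VIP rather than one master's IP means the join does not depend on which master currently holds it:

```bash
#!/usr/bin/env bash
# SHA-256 of the CA's DER-encoded public key, as used by
# --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  # Fail early if the file is not a readable certificate; otherwise the
  # pipeline below would hash empty input and still print a digest.
  openssl x509 -in "$ca" -noout 2>/dev/null || return 1
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# First token from `kubeadm token list` output (skips the header line).
first_token() {
  awk 'NR > 1 && NF > 0 { print $1; exit }'
}

# Usage: build_join_command TOKEN API_ENDPOINT CA_CERT
build_join_command() {
  local token=$1 endpoint=$2 ca=$3 hash
  hash=$(ca_cert_hash "$ca") || return 1
  [ -n "$hash" ] || return 1
  printf 'kubeadm join --token %s %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$token" "$endpoint" "$hash"
}
```

Usage on a master: build_join_command "$(kubeadm token list | first_token)" 192.168.0.169:6443 /etc/kubernetes/pki/ca.crt, then run the printed line on the new node.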